Gordon Cheng

Object Classification Utilizing Neuromorphic Proprioceptive Signals in Active Exploration: Validated on a Soft Anthropomorphic Hand

May 23, 2025

Abstract: Proprioception, a key sensory modality in haptic perception, plays a vital role in perceiving the 3D structure of objects by providing feedback on the position and movement of body parts. The restoration of proprioceptive sensation is crucial for enabling in-hand manipulation and natural control in prosthetic hands. Despite its importance, proprioceptive sensation is relatively unexplored in artificial systems. In this work, we introduce a novel platform that integrates a soft anthropomorphic robot hand (QB SoftHand) with flexible proprioceptive sensors and a classifier that utilizes a hybrid spiking neural network with different types of spiking neurons to interpret neuromorphic proprioceptive signals encoded by a biological muscle spindle model. The encoding scheme and the classifier are implemented and tested on the datasets we collected in the active exploration of ten objects from the YCB benchmark. Our results indicate that the classifier achieves more accurate inferences than existing learning approaches, especially in the early stage of the exploration. This system holds the potential for development in the areas of haptic feedback and neural prosthetics.

A Bio-mimetic Neuromorphic Model for Heat-evoked Nociceptive Withdrawal Reflex in Upper Limb

May 23, 2025

Abstract: The nociceptive withdrawal reflex (NWR) is a mechanism to mediate interactions and protect the body from damage in a potentially dangerous environment. To better convey warning signals to users of prosthetic arms or autonomous robots and protect them by triggering a proper NWR, it is useful to use a biological representation of temperature information for fast and effective processing. In this work, we present a neuromorphic spiking network for heat-evoked NWR by mimicking the structure and encoding scheme of the reflex arc. The network is trained with the bio-plausible reward-modulated spike-timing-dependent plasticity learning algorithm. We evaluated the proposed model and three other methods in recent studies that trigger NWR in an experiment with radiant heat. We found that only the neuromorphic model exhibits the spatial summation (SS) effect and temporal summation (TS) effect similar to humans and can encode the reflex strength matching the intensity of the stimulus in the relative spike latency online. The improved bio-plausibility of this neuromorphic model could improve sensory feedback in neural prostheses.

Humanoid Locomotion and Manipulation: Current Progress and Challenges in Control, Planning, and Learning

Jan 03, 2025

Abstract: Humanoid robots have great potential to perform various human-level skills. These skills involve locomotion, manipulation, and cognitive capabilities. Driven by advances in machine learning and the strength of existing model-based approaches, these capabilities have progressed rapidly, but often separately. Therefore, a timely overview of current progress and future trends in this fast-evolving field is essential. This survey first summarizes the model-based planning and control that have been the backbone of humanoid robotics for the past three decades. We then explore emerging learning-based methods, with a focus on reinforcement learning and imitation learning that enhance the versatility of loco-manipulation skills. We examine the potential of integrating foundation models with humanoid embodiments, assessing the prospects for developing generalist humanoid agents. In addition, this survey covers emerging research for whole-body tactile sensing that unlocks new humanoid skills that involve physical interactions. The survey concludes with a discussion of the challenges and future trends.

On the impact of robot personalization on human-robot interaction: A review

Jan 22, 2024

Abstract: This study reviews the impact of personalization on human-robot interaction. Firstly, the various strategies used to achieve personalization are briefly described. Secondly, the effects of personalization known to date are discussed. They are presented along with the personalized parameters, personalized features, used technology, and use case they relate to. It is observed that various positive effects have been discussed in the literature while possible negative effects seem to require further investigation.

Proceeding of the 1st Workshop on Social Robots Personalisation At the crossroads between engineering and humanities

Jul 10, 2023

Abstract: Nowadays, robots are expected to interact more physically, cognitively, and socially with people. They should adapt to unpredictable contexts alongside individuals with various behaviours. For this reason, personalisation is a valuable attribute for social robots as it allows them to act according to a specific user's needs and preferences and achieve natural and transparent robot behaviours for humans. If correctly implemented, personalisation could also be the key to the large-scale adoption of social robotics. However, achieving personalisation is arduous as it requires us to expand the boundaries of robotics by taking advantage of the expertise of various domains. Indeed, personalised robots need to analyse and model user interactions while considering their involvement in the adaptive process. It also requires us to address ethical and socio-cultural aspects of personalised HRI to achieve inclusive and diverse interaction and avoid deception and misplaced trust when interacting with the users. At the same time, policymakers need to ensure regulations in view of possible short-term and long-term adaptive HRI. This workshop aims to raise an interdisciplinary discussion on personalisation in robotics. It aims at bringing researchers from different fields together to propose guidelines for personalisation while addressing the following questions: how to define it, how to achieve it, and how it should be guided to fit legal and ethical requirements.

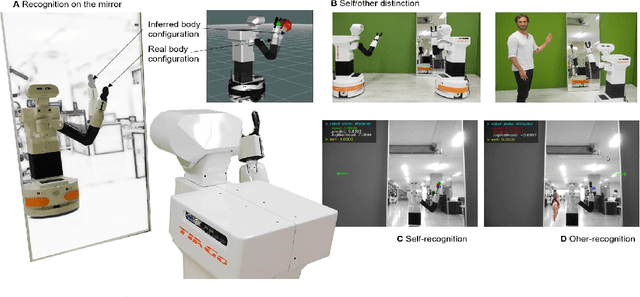

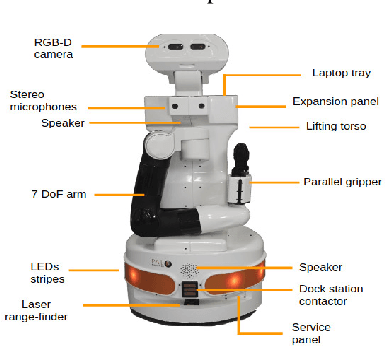

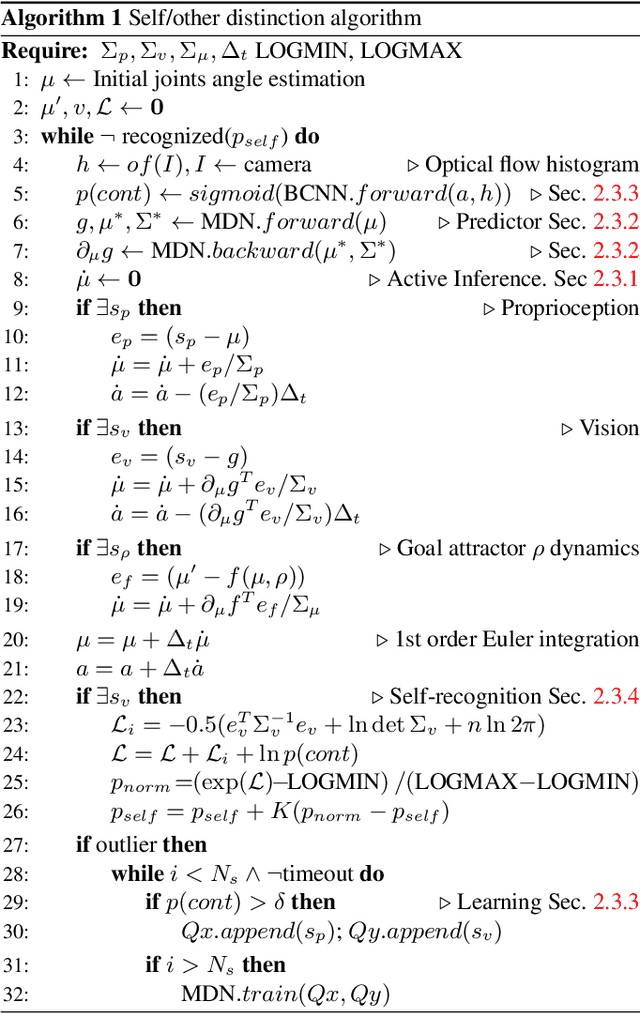

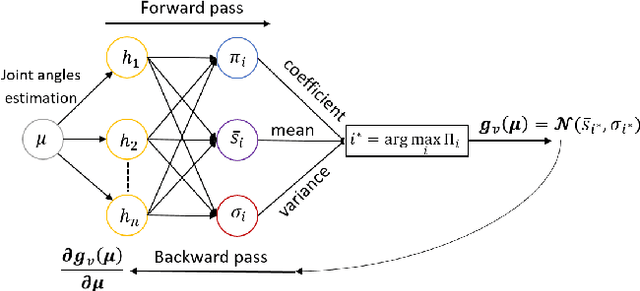

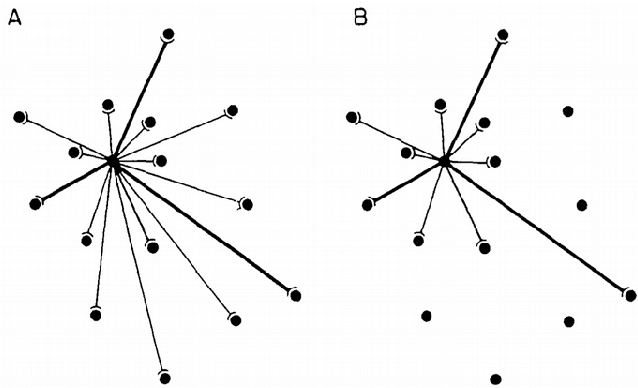

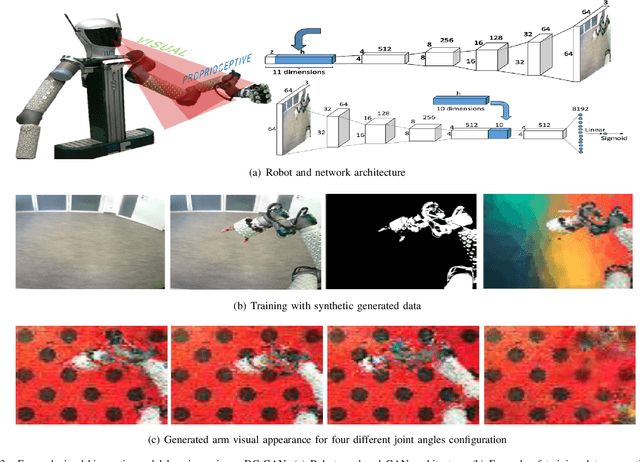

Robot self/other distinction: active inference meets neural networks learning in a mirror

Apr 11, 2020

Abstract: Self/other distinction and self-recognition are important skills for interacting with the world, as they allow humans to differentiate their own actions from those of others and be self-aware. However, only a select group of animals, mainly higher-order mammals such as humans, has passed the mirror test, a behavioural experiment proposed to assess self-recognition abilities. In this paper, we describe self-recognition as a process that is built on top of unconscious body-perception mechanisms. We present an algorithm that enables a robot to perform non-appearance self-recognition in a mirror and distinguish its simple actions from other entities, by answering the following question: am I generating these sensations? The algorithm combines active inference, a theoretical model of perception and action in the brain, with neural network learning. The robot learns the relation between its actions and its body with the effect produced in the visual field and its body sensors. The prediction error generated between the models and the real observations during the interaction is used to infer the body configuration through free energy minimization and to accumulate evidence for recognizing its body. Experimental results on a humanoid robot show the reliability of the algorithm for different initial conditions, such as mirror recognition in any perspective, robot-robot distinction and human-robot differentiation.

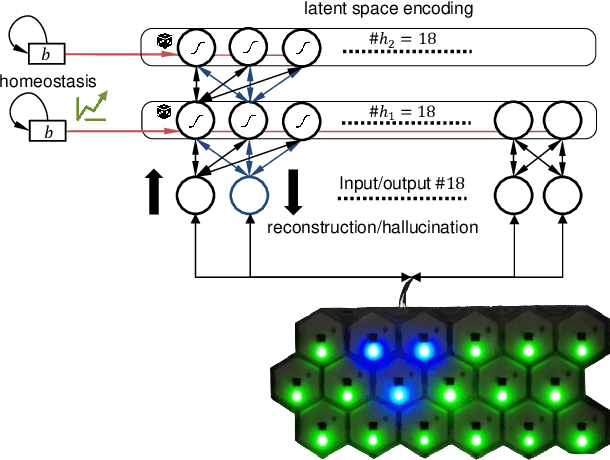

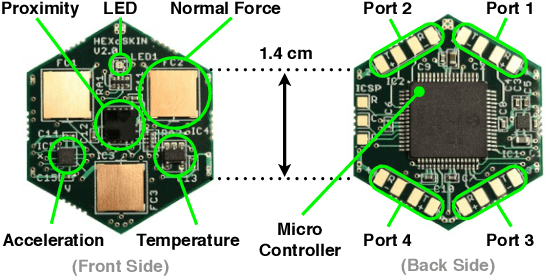

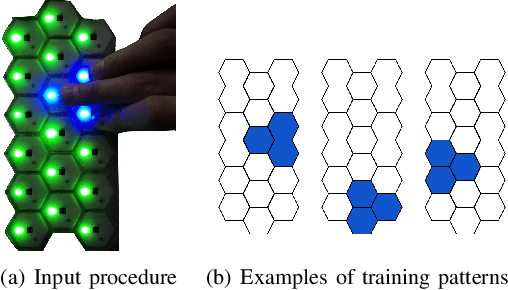

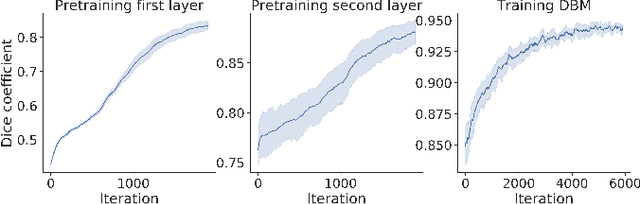

Tactile Hallucinations on Artificial Skin Induced by Homeostasis in a Deep Boltzmann Machine

Jun 26, 2019

Abstract: Perceptual hallucinations are present in neurological and psychiatric disorders and in amputees. While the hallucinations can be drug-induced, it has been described that they can even be provoked in healthy subjects. Understanding their manifestation could thus unveil how the brain processes sensory information and might evidence the generative nature of perception. In this work, we investigate the generation of tactile hallucinations on biologically inspired, artificial skin. To model tactile hallucinations, we apply homeostasis, a change in the excitability of neurons during sensory deprivation, in a Deep Boltzmann Machine (DBM). We find that homeostasis prompts hallucinations of previously learned patterns on the artificial skin in the absence of sensory input. Moreover, we show that homeostasis is capable of inducing the formation of meaningful latent representations in a DBM and that it significantly increases the quality of the reconstruction of these latent states. Through this, our work provides a possible explanation for the nature of tactile hallucinations and highlights homeostatic processes as a potential underlying mechanism.

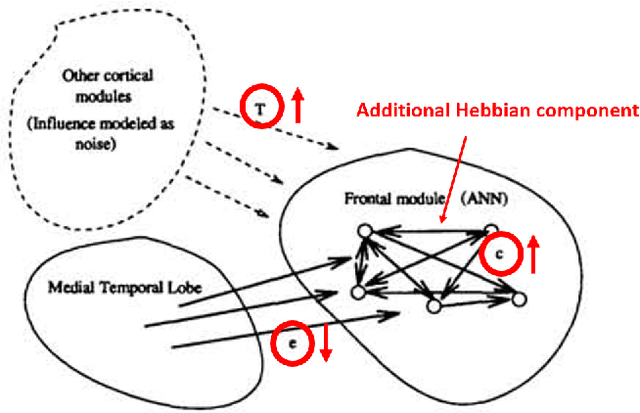

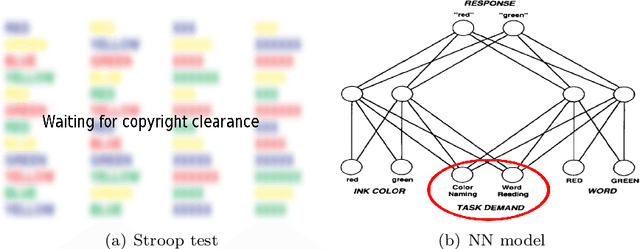

A Review on Neural Network Models of Schizophrenia and Autism Spectrum Disorder

Jun 24, 2019

Abstract: This survey presents the most relevant neural network models of autism spectrum disorder and schizophrenia, from the first connectionist models to recent deep network architectures. We analyzed and compared the most representative symptoms with their neural model counterparts, detailing the alteration introduced in the network that generates each of the symptoms, and identifying their strengths and weaknesses. For completeness, we additionally cross-compared Bayesian and free-energy approaches. Models of schizophrenia mainly focused on hallucinations and delusional thoughts using neural disconnections or inhibitory imbalance as the predominating alteration. Models of autism rather focused on perceptual difficulties, mainly excessive attention to environment details, implemented as excessive inhibitory connections or increased sensory precision. We found an excessively tight view of the psychopathologies around one specific and simplified effect, usually constrained to the technical idiosyncrasy of the network used. Recent theories and evidence on sensorimotor integration and body perception combined with modern neural network architectures offer a broader and novel spectrum to approach these psychopathologies, outlining the future research on neural network computational psychiatry, a powerful asset for understanding the inner processes of the human brain.

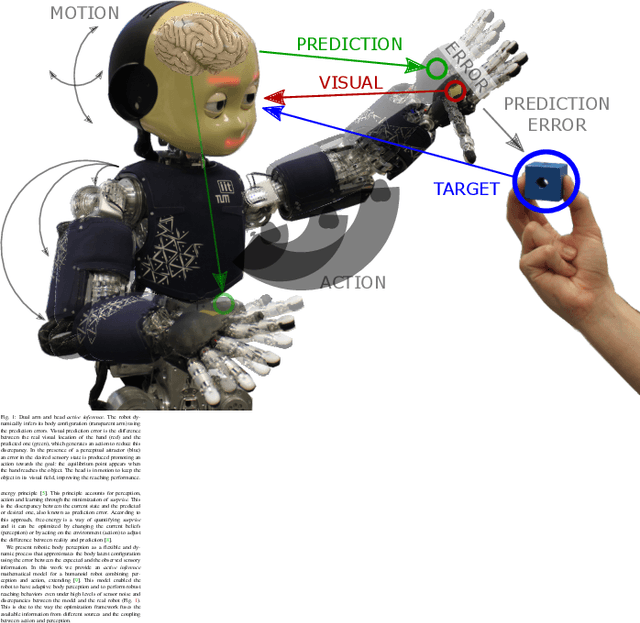

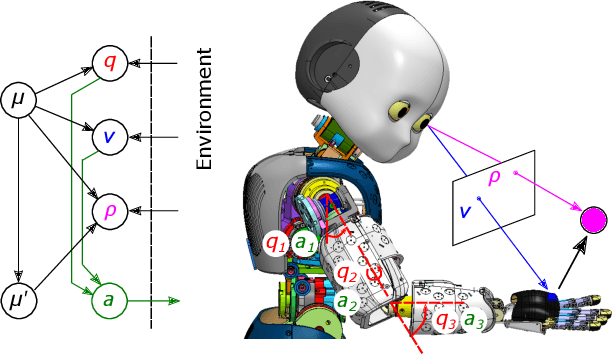

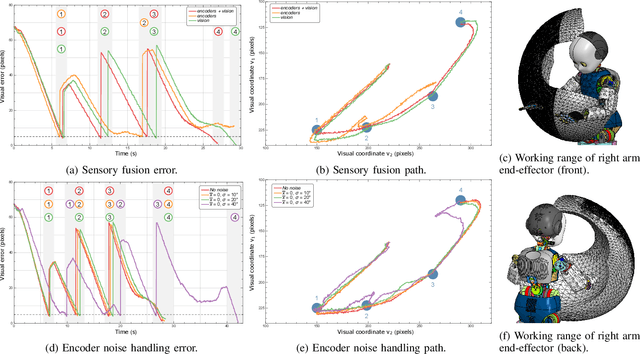

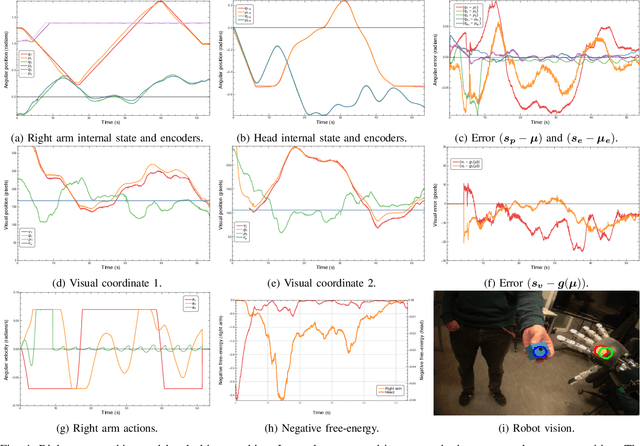

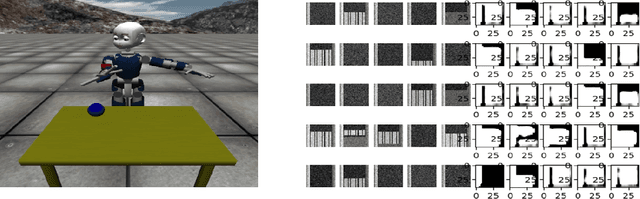

Active inference body perception and action for humanoid robots

Jun 07, 2019

Abstract: One of the biggest challenges in robotic systems is interacting under uncertainty. Unlike robots, humans learn, adapt and perceive their body as a unity when interacting with the world. We hypothesize that the nervous system counteracts sensor and motor uncertainties by unconscious processes that robustly fuse the available information for approximating their body and the world state. Being able to unite perception and action under a common principle has been sought for decades and active inference is one of the potential unification theories. In this work, we present a humanoid robot interacting with the world by means of a human brain-like inspired perception and control algorithm based on the free-energy principle. Until now, active inference was only tested in simulated examples. Its application on a real robot shows the advantages of such an algorithm for real-world applications. The humanoid robot iCub was capable of performing robust reaching behaviors with both arms and active head object tracking in the visual field, despite the visual noise, the artificially introduced noise in the joint encoders (up to 40 degrees deviation), the differences between the model and the real robot and the misdetections of the hand.

Sensorimotor learning for artificial body perception

Jan 15, 2019

Abstract: Artificial self-perception is a machine's ability to perceive its own body, i.e., the mastery of modal and intermodal contingencies of performing an action with a specific sensors/actuators body configuration. In other words, the spatio-temporal patterns that relate its sensors (e.g. visual, proprioceptive, tactile, etc.), its actions and its body latent variables are responsible for the distinction between its own body and the rest of the world. This paper describes some of the latest approaches for modelling artificial body self-perception: from Bayesian estimation to deep learning. Results show the potential of these model-free unsupervised or semi-supervised crossmodal/intermodal learning approaches. However, there are still challenges that should be overcome before we achieve artificial multisensory body perception.