Silvia Rossi

HEXAR: a Hierarchical Explainability Architecture for Robots

Jan 06, 2026

Abstract: As robotic systems become increasingly complex, the need for explainable decision-making becomes critical. Existing explainability approaches in robotics typically either focus on individual modules, which can be difficult to query from the perspective of high-level behaviour, or employ monolithic approaches, which do not exploit the modularity of robotic architectures. We present HEXAR (Hierarchical EXplainability Architecture for Robots), a novel framework that provides a plug-in, hierarchical approach to generate explanations about robotic systems. HEXAR consists of specialised component explainers using diverse explanation techniques (e.g., LLM-based reasoning, causal models, and feature importance) tailored to specific robot modules, orchestrated by an explainer selector that chooses the most appropriate one for a given query. We implement and evaluate HEXAR on a TIAGo robot performing assistive tasks in a home environment, comparing it against end-to-end and aggregated baseline approaches across 180 scenario-query variations. We observe that HEXAR significantly outperforms baselines in root cause identification, incorrect information exclusion, and runtime, offering a promising direction for transparent autonomous systems.
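As an illustration only of the selector-plus-component-explainers idea described in the abstract above, a minimal Python sketch follows. Everything in it is an assumption made for illustration: the `ComponentExplainer` class, the `select_explainer` function, the keyword-overlap scoring, and the module names are hypothetical and are not taken from the paper, whose abstract does not say how the selector works.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class ComponentExplainer:
    """Hypothetical explainer attached to one robot module."""
    module: str
    keywords: List[str]
    explain: Callable[[str], str]


def select_explainer(query: str,
                     explainers: List[ComponentExplainer]) -> Optional[ComponentExplainer]:
    """Pick the explainer whose keywords overlap most with the query.

    Keyword overlap is a stand-in assumption, not the paper's selection method.
    Returns None when no explainer matches any word of the query.
    """
    words = set(query.lower().split())

    def score(explainer: ComponentExplainer) -> int:
        return len(words & set(explainer.keywords))

    if not explainers:
        return None
    best = max(explainers, key=score)
    return best if score(best) > 0 else None


# Hypothetical explainers for two robot modules.
explainers = [
    ComponentExplainer("navigation", ["navigation", "path", "obstacle"],
                       lambda q: "navigation explainer answers: " + q),
    ComponentExplainer("manipulation", ["grasp", "gripper", "object"],
                       lambda q: "manipulation explainer answers: " + q),
]

query = "why did the gripper drop the object"
chosen = select_explainer(query, explainers)
answer = chosen.explain(query) if chosen else "no explainer matched"
```

In this sketch each explainer is a plug-in: adding a module means appending one more `ComponentExplainer` to the list, without touching the selector.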

PerspAct: Enhancing LLM Situated Collaboration Skills through Perspective Taking and Active Vision

Nov 11, 2025

Abstract: Recent advances in Large Language Models (LLMs) and multimodal foundation models have significantly broadened their application in robotics and collaborative systems. However, effective multi-agent interaction necessitates robust perspective-taking capabilities, enabling models to interpret both physical and epistemic viewpoints. Current training paradigms often neglect these interactive contexts, resulting in challenges when models must reason about the subjectivity of individual perspectives or navigate environments with multiple observers. This study evaluates whether explicitly incorporating diverse points of view using the ReAct framework, an approach that integrates reasoning and acting, can enhance an LLM's ability to understand and ground the demands of other agents. We extend the classic Director task by introducing active visual exploration across a suite of seven scenarios of increasing perspective-taking complexity. These scenarios are designed to challenge the agent's capacity to resolve referential ambiguity based on visual access and interaction, under varying state representations and prompting strategies, including ReAct-style reasoning. Our results demonstrate that explicit perspective cues, combined with active exploration strategies, significantly improve the model's interpretative accuracy and collaborative effectiveness. These findings highlight the potential of integrating active perception with perspective-taking mechanisms in advancing LLMs' application in robotics and multi-agent systems, setting a foundation for future research into adaptive and context-aware AI systems.

Who Sees What? Structured Thought-Action Sequences for Epistemic Reasoning in LLMs

Aug 20, 2025

Abstract: Recent advances in large language models (LLMs) and reasoning frameworks have opened new possibilities for improving the perspective-taking capabilities of autonomous agents. However, tasks that involve active perception, collaborative reasoning, and perspective taking (understanding what another agent can see or knows) pose persistent challenges for current LLM-based systems. This study investigates the potential of structured examples derived from transformed solution graphs generated by the Fast Downward planner to improve the performance of LLM-based agents within a ReAct framework. We propose a structured solution-processing pipeline that generates three distinct categories of examples: optimal goal paths (G-type), informative node paths (E-type), and step-by-step optimal decision sequences contrasting alternative actions (L-type). These solutions are further converted into "thought-action" examples by prompting an LLM to explicitly articulate the reasoning behind each decision. While L-type examples slightly reduce clarification requests and overall action steps, they do not yield consistent improvements. Agents are successful in tasks requiring basic attentional filtering but struggle in scenarios that require mentalising about occluded spaces or weighing the costs of epistemic actions. These findings suggest that structured examples alone are insufficient for robust perspective-taking, underscoring the need for explicit belief tracking, cost modelling, and richer environments to enable socially grounded collaboration in LLM-based agents.

Enhancing Robot Assistive Behaviour with Reinforcement Learning and Theory of Mind

Nov 11, 2024

Abstract: The adaptation to users' preferences and the ability to infer and interpret humans' beliefs and intents, which is known as the Theory of Mind (ToM), are two crucial aspects for achieving effective human-robot collaboration. Despite its importance, very few studies have investigated the impact of adaptive robots with ToM abilities. In this work, we present an exploratory comparative study to investigate how social robots equipped with ToM abilities impact users' performance and perception. We design a two-layer architecture. The Q-learning agent on the first layer learns the robot's higher-level behaviour. On the second layer, a heuristic-based ToM infers the user's intended strategy and is responsible for implementing the robot's assistance, as well as providing the motivation behind its choice. We conducted a user study in a real-world setting, involving 56 participants who interacted with either an adaptive robot capable of ToM, or with a robot lacking such abilities. Our findings suggest that participants in the ToM condition performed better, accepted the robot's assistance more often, and perceived its ability to adapt, predict and recognise their intents to a higher degree. Our preliminary insights could inform future research and pave the way for designing more complex computational architectures for adaptive behaviour with ToM capabilities.

Multimodal Coherent Explanation Generation of Robot Failures

Oct 01, 2024

Abstract: The explainability of a robot's actions is crucial to its acceptance in social spaces. Explaining why a robot fails to complete a given task is particularly important for non-expert users to be aware of the robot's capabilities and limitations. So far, research on explaining robot failures has only considered generating textual explanations, even though several studies have shown the benefits of multimodal ones. However, a simple combination of multiple modalities may lead to semantic incoherence between the information across different modalities, a problem that is not well studied. An incoherent multimodal explanation can be difficult to understand, and it may even become inconsistent with what the robot and the human observe and how they perform reasoning with the observations. Such inconsistencies may lead to wrong conclusions about the robot's capabilities. In this paper, we introduce an approach to generate coherent multimodal explanations by checking the logical coherence of explanations from different modalities, followed by refinements as required. We propose a classification approach for coherence assessment, where we evaluate if an explanation logically follows another. Our experiments suggest that fine-tuning a neural network that was pre-trained to recognize textual entailment performs well for coherence assessment of multimodal explanations. Code & data: https://pradippramanick.github.io/coherent-explain/.

Measuring Transparency in Intelligent Robots

Aug 29, 2024

Abstract: As robots become increasingly integrated into our daily lives, the need to make them transparent has never been more critical. Yet, despite its importance in human-robot interaction, a standardized measure of robot transparency has been missing until now. This paper addresses this gap by presenting the first comprehensive scale to measure perceived transparency in robotic systems, available in English, German, and Italian. Our approach conceptualizes transparency as a multidimensional construct, encompassing explainability, legibility, predictability, and meta-understanding. The proposed scale was the product of a rigorous three-stage process involving 1,223 participants. First, we generated the items of our scale; second, we conducted an exploratory factor analysis; and third, a confirmatory factor analysis served to validate the factor structure of the newly developed TOROS scale. The final scale encompasses 26 items and comprises three factors: Illegibility, Explainability, and Predictability. TOROS demonstrates high cross-linguistic reliability, inter-factor correlation, model fit, internal consistency, and convergent validity across the three cross-national samples. This empirically validated tool enables the assessment of robot transparency and contributes to the theoretical understanding of this complex construct. By offering a standardized measure, we facilitate consistent and comparable research in human-robot interaction in which TOROS can serve as a benchmark.

Increasing Transparency of Reinforcement Learning using Shielding for Human Preferences and Explanations

Nov 28, 2023

Abstract: The adoption of Reinforcement Learning (RL) in several human-centred applications provides robots with autonomous decision-making capabilities and adaptability based on the observations of the operating environment. In such scenarios, however, the learning process can make robots' behaviours unclear and unpredictable to humans, thus preventing a smooth and effective Human-Robot Interaction (HRI). As a consequence, it becomes crucial to avoid robots performing actions that are unclear to the user. In this work, we investigate whether including human preferences in RL (concerning the actions the robot performs during learning) improves the transparency of a robot's behaviours. For this purpose, a shielding mechanism is added to the RL algorithm to incorporate human preferences and to monitor the learning agent's decisions. We carried out a within-subjects study involving 26 participants to evaluate the robot's transparency in terms of Legibility, Predictability, and Expectability in different settings. Results indicate that considering human preferences during learning improves Legibility with respect to providing only Explanations, and combining human preferences with explanations elucidating the rationale behind the robot's decisions further amplifies transparency. Results also confirm that an increase in transparency leads to an increase in the safety, comfort, and reliability of the robot. These findings show the importance of transparency during learning and suggest a paradigm for robotic applications with human in the loop.
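To make the notion of a shield concrete, here is a minimal Python sketch of one common way to restrict an RL agent's choices: epsilon-greedy action selection limited to a set of permitted actions. This is a generic illustration under stated assumptions, not the mechanism used in the paper; the function name `shielded_action`, the action names, and the representation of human preferences as a fixed set of allowed actions are all hypothetical.

```python
import random
from typing import Dict, Set


def shielded_action(q_values: Dict[str, float],
                    allowed: Set[str],
                    epsilon: float,
                    rng: random.Random) -> str:
    """Epsilon-greedy selection restricted to actions the shield permits.

    `allowed` stands in for human preferences (an assumption of this sketch).
    """
    candidates = [a for a in q_values if a in allowed]
    if not candidates:
        raise ValueError("the shield blocked every available action")
    if rng.random() < epsilon:
        # Explore, but only among permitted actions.
        return rng.choice(candidates)
    # Exploit: best permitted action by estimated value.
    return max(candidates, key=lambda a: q_values[a])


# Hypothetical Q-values for one state; "approach" scores highest
# but is not among the actions the user prefers.
q_values = {"approach": 5.0, "wait": 2.0, "retreat": 1.0}
allowed = {"wait", "retreat"}
action = shielded_action(q_values, allowed, epsilon=0.0, rng=random.Random(0))
```

With `epsilon=0.0` the agent acts greedily, so the shield overrides the highest-valued action and the best permitted one is chosen instead.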

AGAR: Attention Graph-RNN for Adaptative Motion Prediction of Point Clouds of Deformable Objects

Jul 19, 2023

Abstract: This paper focuses on motion prediction for point cloud sequences in the challenging case of deformable 3D objects, such as human body motion. First, we investigate the challenges caused by deformable shapes and complex motions present in this type of representation, with the ultimate goal of understanding the technical limitations of state-of-the-art models. From this understanding, we propose an improved architecture for point cloud prediction of deformable 3D objects. Specifically, to handle deformable shapes, we propose a graph-based approach that learns and exploits the spatial structure of point clouds to extract more representative features. Then we propose a module able to combine the learned features in an adaptative manner according to the point cloud movements. The proposed adaptative module controls the composition of local and global motions for each point, enabling the network to model complex motions in deformable 3D objects more effectively. We tested the proposed method on the following datasets: MNIST moving digits, the Mixamo human bodies motions, JPEG and CWIPC-SXR real-world dynamic bodies. Simulation results demonstrate that our method outperforms the current baseline methods given its improved ability to model complex movements as well as preserve point cloud shape. Furthermore, we demonstrate the generalizability of the proposed framework for dynamic feature learning by testing the framework for action recognition on the MSRAction3D dataset and achieving results on par with state-of-the-art methods.

Proceeding of the 1st Workshop on Social Robots Personalisation At the crossroads between engineering and humanities

Jul 10, 2023

Abstract: Nowadays, robots are expected to interact more physically, cognitively, and socially with people. They should adapt to unpredictable contexts alongside individuals with various behaviours. For this reason, personalisation is a valuable attribute for social robots as it allows them to act according to a specific user's needs and preferences and achieve natural and transparent robot behaviours for humans. If correctly implemented, personalisation could also be the key to the large-scale adoption of social robotics. However, achieving personalisation is arduous as it requires us to expand the boundaries of robotics by taking advantage of the expertise of various domains. Indeed, personalised robots need to analyse and model user interactions while considering their involvement in the adaptative process. It also requires us to address ethical and socio-cultural aspects of personalised HRI to achieve inclusive and diverse interaction and avoid deception and misplaced trust when interacting with the users. At the same time, policymakers need to ensure regulations in view of possible short-term and long-term adaptive HRI. This workshop aims to raise an interdisciplinary discussion on personalisation in robotics. It aims at bringing researchers from different fields together to propose guidelines for personalisation while addressing the following questions: how to define it, how to achieve it, and how it should be guided to fit legal and ethical requirements.

An Application of a Runtime Epistemic Probabilistic Event Calculus to Decision-making in e-Health Systems

Sep 26, 2022

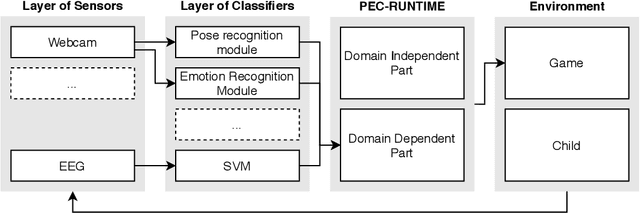

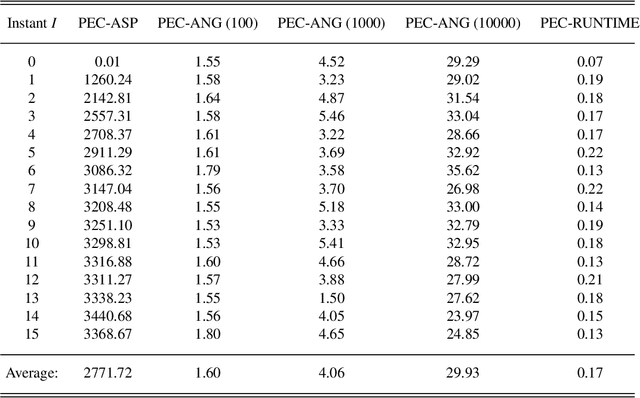

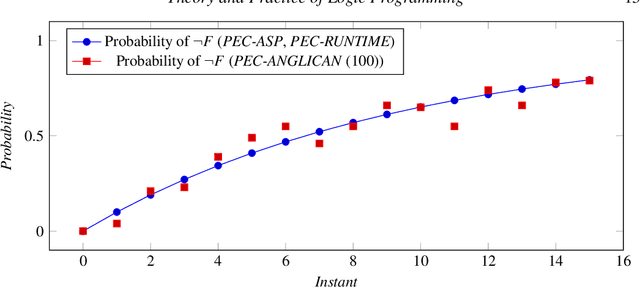

Abstract: We present and discuss a runtime architecture that integrates sensorial data and classifiers with a logic-based decision-making system in the context of an e-Health system for the rehabilitation of children with neuromotor disorders. In this application, children perform a rehabilitation task in the form of games. The main aim of the system is to derive a set of parameters describing the child's current level of cognitive and behavioral performance (e.g., engagement, attention, task accuracy) from the available sensors and classifiers (e.g., eye trackers, motion sensors, emotion recognition techniques) and take decisions accordingly. These decisions are typically aimed at improving the child's performance by triggering appropriate re-engagement stimuli when their attention is low, or by changing the game or making it more difficult when the child is losing interest because the task is too easy. Alongside state-of-the-art techniques for emotion recognition and head pose estimation, we use a runtime variant of a probabilistic and epistemic logic programming dialect of the Event Calculus, known as the Epistemic Probabilistic Event Calculus. In particular, the probabilistic component of this symbolic framework allows for a natural interface with the machine learning techniques. We overview the architecture and its components, and show some of its characteristics through a discussion of a running example and experiments. Under consideration for publication in Theory and Practice of Logic Programming (TPLP).
