Gong Cheng

Oriented R-CNN for Object Detection

Aug 12, 2021

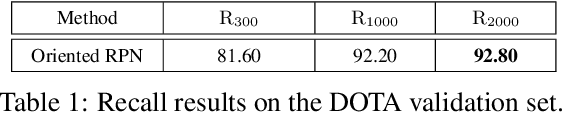

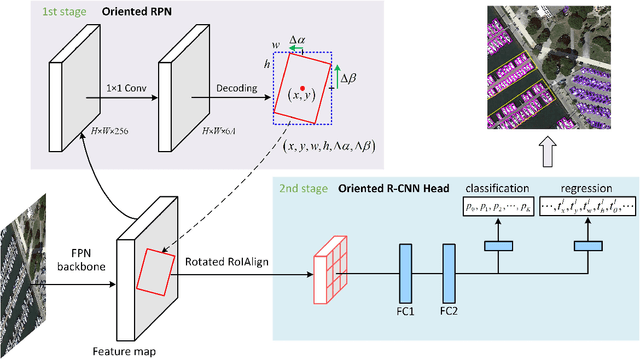

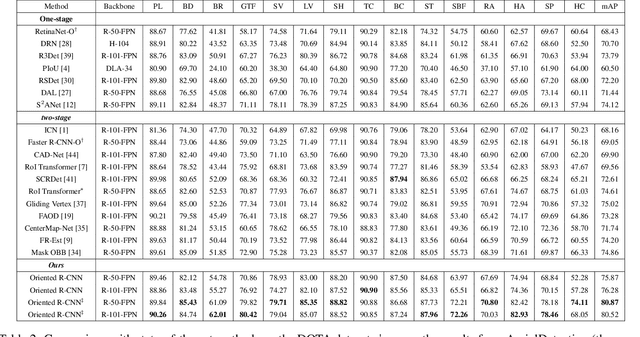

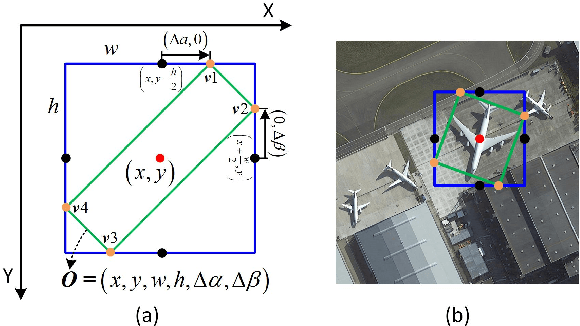

Abstract: Current state-of-the-art two-stage detectors generate oriented proposals through time-consuming schemes. This diminishes the detectors' speed, thereby becoming the computational bottleneck in advanced oriented object detection systems. This work proposes an effective and simple oriented object detection framework, termed Oriented R-CNN, which is a general two-stage oriented detector with promising accuracy and efficiency. To be specific, in the first stage, we propose an oriented Region Proposal Network (oriented RPN) that directly generates high-quality oriented proposals in a nearly cost-free manner. The second stage is an oriented R-CNN head that refines oriented Regions of Interest (oriented RoIs) and recognizes them. Without tricks, oriented R-CNN with ResNet50 achieves state-of-the-art detection accuracy on two commonly used datasets for oriented object detection, DOTA (75.87% mAP) and HRSC2016 (96.50% mAP), while running at 15.1 FPS with an image size of 1024$\times$1024 on a single RTX 2080Ti. We hope our work could inspire rethinking the design of oriented detectors and serve as a baseline for oriented object detection. Code is available at https://github.com/jbwang1997/OBBDetection.

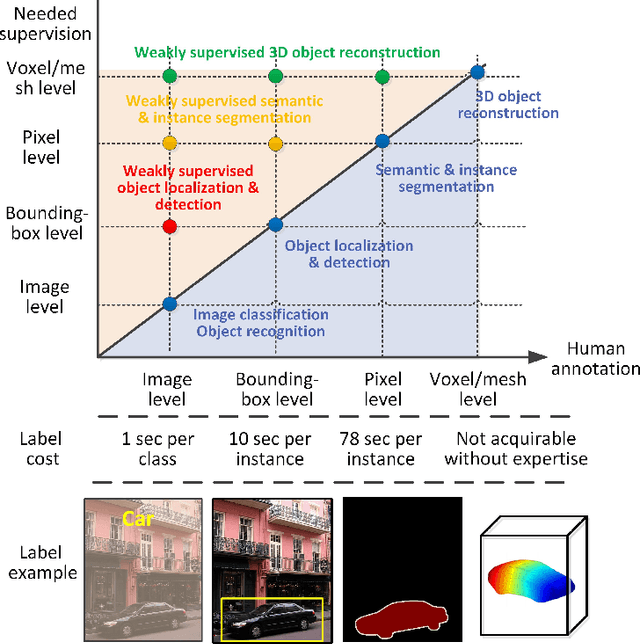

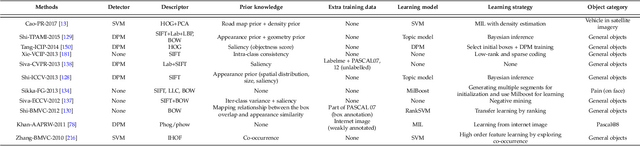

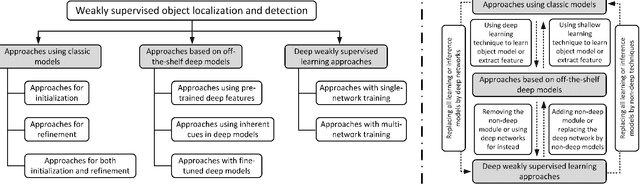

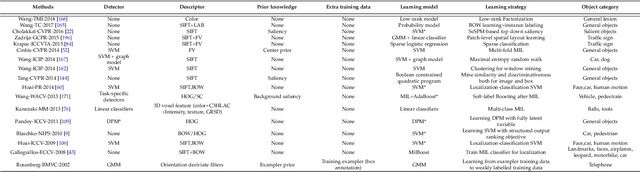

Weakly Supervised Object Localization and Detection: A Survey

Apr 16, 2021

Abstract: As an emerging and challenging problem in the computer vision community, weakly supervised object localization and detection plays an important role in developing new-generation computer vision systems and has received significant attention in the past decade. As many methods have been proposed, a comprehensive survey of these topics is of great importance. In this work, we review (1) classic models, (2) approaches with feature representations from off-the-shelf deep networks, (3) approaches solely based on deep learning, and (4) publicly available datasets and standard evaluation metrics that are widely used in this field. We also discuss the key challenges in this field, its development history, the advantages and disadvantages of the methods in each category, the relationships between methods in different categories, applications of weakly supervised object localization and detection methods, and potential future directions to further promote the development of this research field.

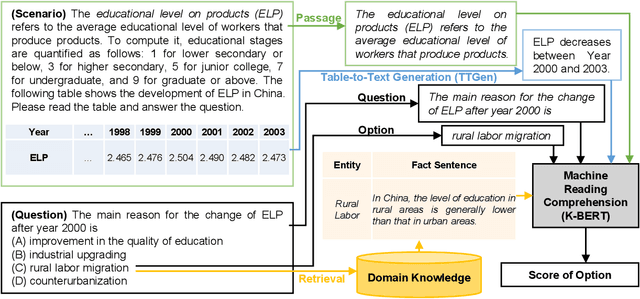

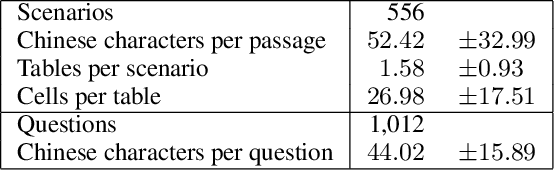

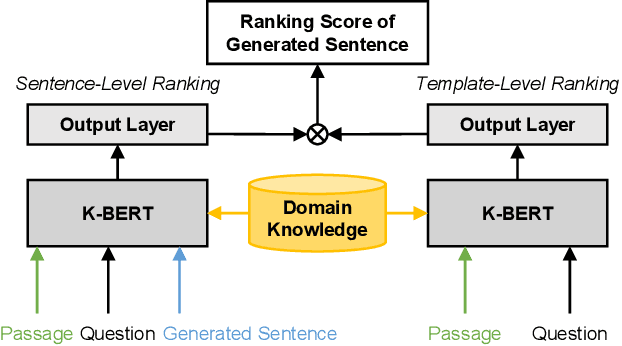

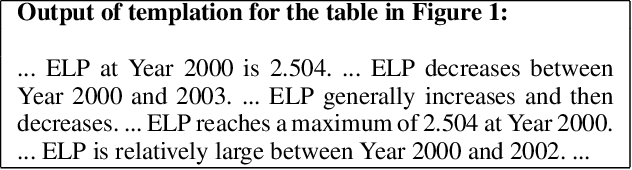

TSQA: Tabular Scenario Based Question Answering

Jan 14, 2021

Abstract: Scenario-based question answering (SQA) has attracted increasing research interest. Compared with the well-studied machine reading comprehension (MRC), SQA is a more challenging task: a scenario may contain not only a textual passage to read but also structured data like tables, i.e., tabular scenario based question answering (TSQA). AI applications of TSQA, such as answering multiple-choice questions in high-school exams, require synthesizing data in multiple cells and combining tables with texts and domain knowledge to infer answers. To support the study of this task, we construct GeoTSQA. This dataset contains 1k real questions contextualized by tabular scenarios in the geography domain. To solve the task, we extend state-of-the-art MRC methods with TTGen, a novel table-to-text generator. It generates sentences from variously synthesized tabular data and feeds the downstream MRC method with the most useful sentences. Its sentence ranking model fuses the information in the scenario, question, and domain knowledge. Our approach outperforms a variety of strong baseline methods on GeoTSQA.

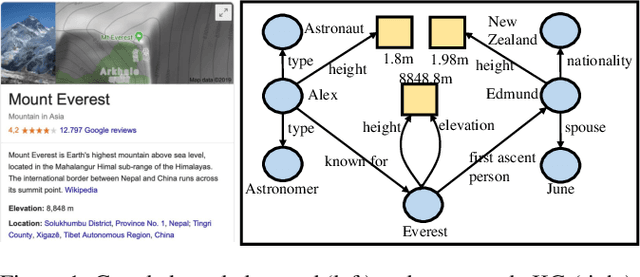

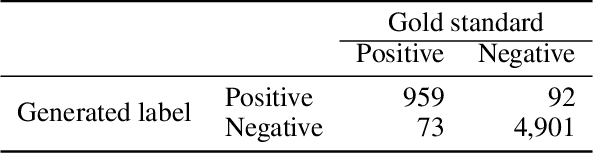

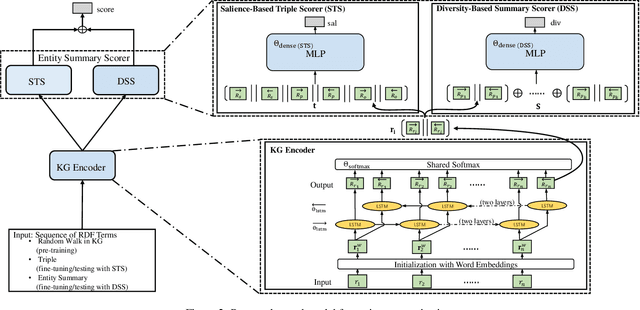

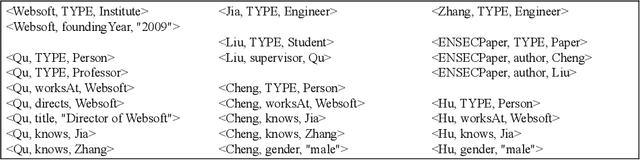

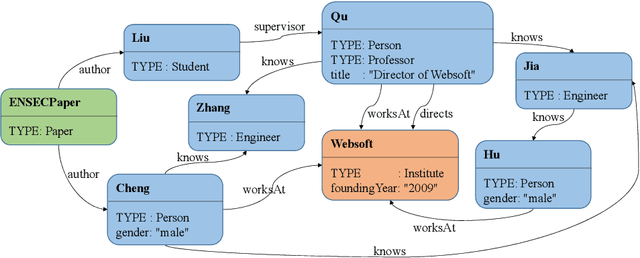

Neural Entity Summarization with Joint Encoding and Weak Supervision

May 10, 2020

Abstract: In a large-scale knowledge graph (KG), an entity is often described by a large number of triple-structured facts. Many applications require abridged versions of entity descriptions, called entity summaries. Existing solutions to entity summarization are mainly unsupervised. In this paper, we present a supervised approach, NEST, that is based on our novel neural model to jointly encode graph structure and text in KGs and generate high-quality diversified summaries. Since it is costly to obtain manually labeled summaries for training, our supervision is weak: we train with programmatically labeled data, which may contain noise but requires no manual work. Evaluation results show that our approach significantly outperforms the state of the art on two public benchmarks.

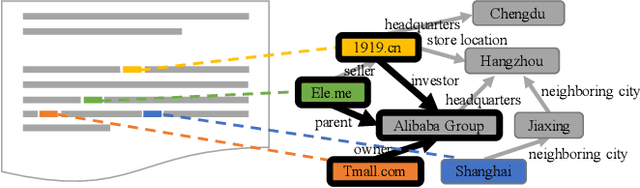

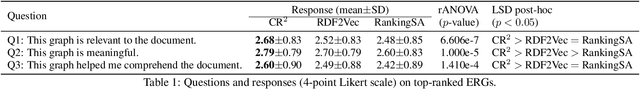

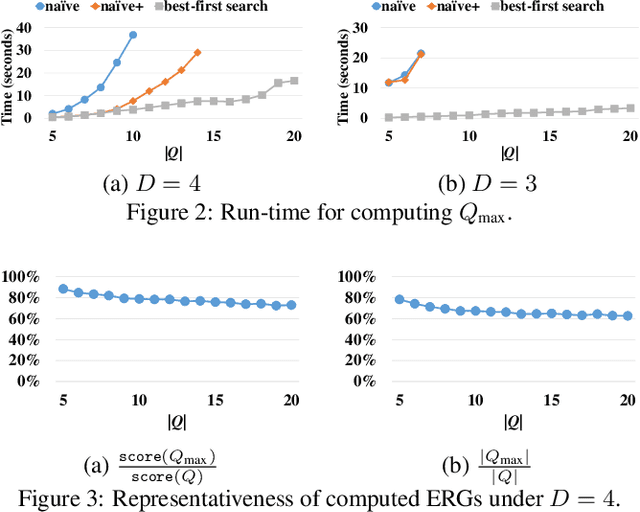

Enriching Documents with Compact, Representative, Relevant Knowledge Graphs

May 10, 2020

Abstract: A prominent application of knowledge graphs (KGs) is document enrichment. Existing methods identify mentions of entities in a background KG and enrich documents with entity types and direct relations. We compute an entity relation subgraph (ERG) that can more expressively represent indirect relations among a set of mentioned entities. To find compact, representative, and relevant ERGs for effective enrichment, we propose an efficient best-first search algorithm to solve a new combinatorial optimization problem that achieves a trade-off between representativeness and compactness, and then we exploit ontological knowledge to rank ERGs by entity-based document-KG and intra-KG relevance. Extensive experiments and user studies show the promising performance of our approach.

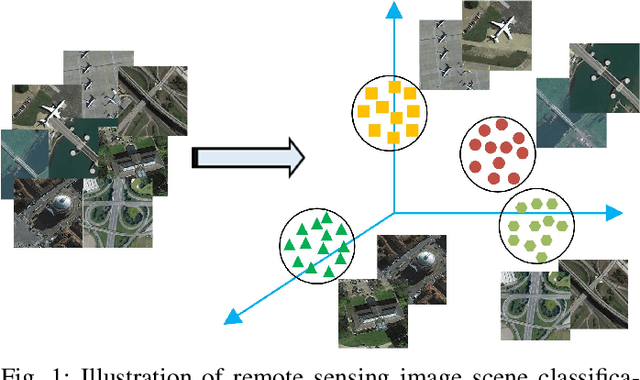

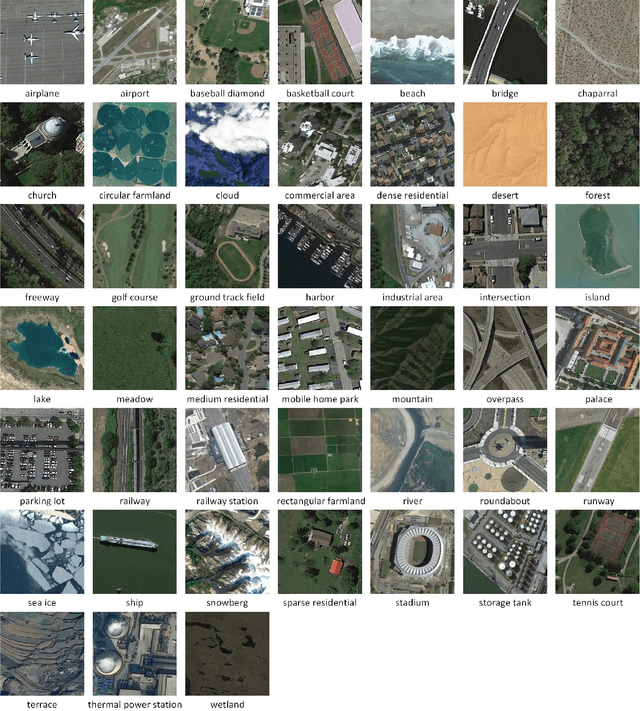

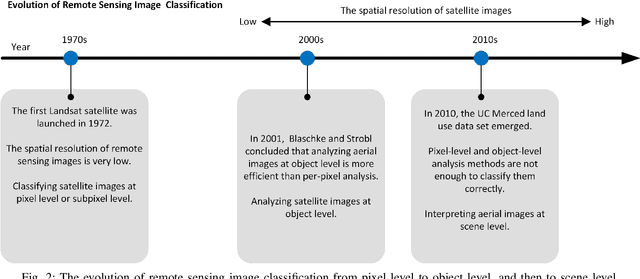

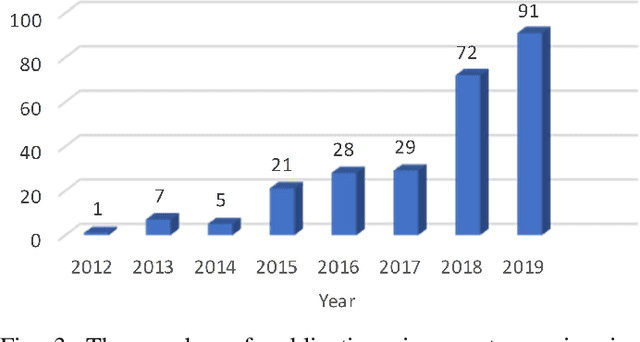

Remote Sensing Image Scene Classification Meets Deep Learning: Challenges, Methods, Benchmarks, and Opportunities

May 03, 2020

Abstract: Remote sensing image scene classification, which aims at labeling remote sensing images with a set of semantic categories based on their contents, has broad applications in a range of fields. Propelled by the powerful feature learning capabilities of deep neural networks, remote sensing image scene classification driven by deep learning has drawn remarkable attention and achieved significant breakthroughs. However, to the best of our knowledge, a comprehensive review of recent achievements regarding deep learning for scene classification of remote sensing images is still lacking. Considering the rapid evolution of this field, this paper provides a systematic survey of deep learning methods for remote sensing image scene classification by covering more than 140 papers. To be specific, we discuss the main challenges of scene classification and survey (1) Autoencoder-based scene classification methods, (2) Convolutional Neural Network-based scene classification methods, and (3) Generative Adversarial Network-based scene classification methods. In addition, we introduce the benchmarks used for scene classification and summarize the performance of more than two dozen representative algorithms on three commonly used benchmark data sets. Finally, we discuss the promising opportunities for further research.

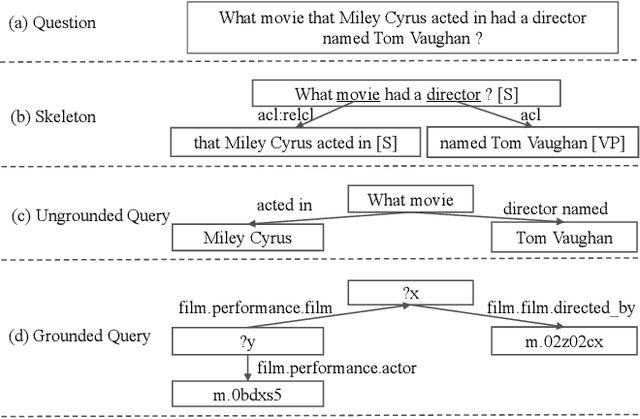

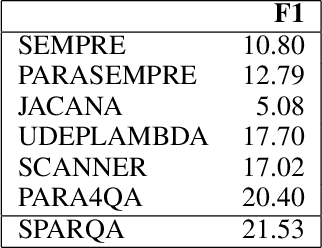

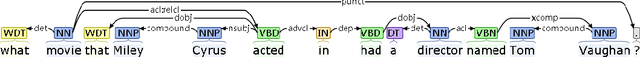

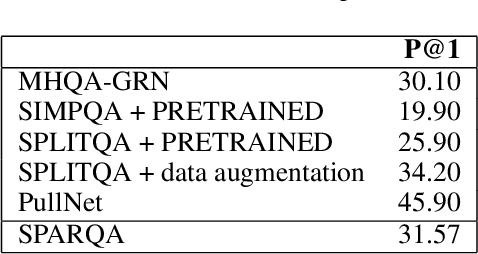

SPARQA: Skeleton-based Semantic Parsing for Complex Questions over Knowledge Bases

Mar 31, 2020

Abstract: Semantic parsing transforms a natural language question into a formal query over a knowledge base. Many existing methods rely on syntactic parsing like dependencies. However, the accuracy of producing such expressive formalisms is not satisfactory on long, complex questions. In this paper, we propose a novel skeleton grammar to represent the high-level structure of a complex question. This dedicated coarse-grained formalism with a BERT-based parsing algorithm helps to improve the accuracy of the downstream fine-grained semantic parsing. In addition, to align the structure of a question with the structure of a knowledge base, our multi-strategy method combines sentence-level and word-level semantics. Our approach shows promising performance on several datasets.

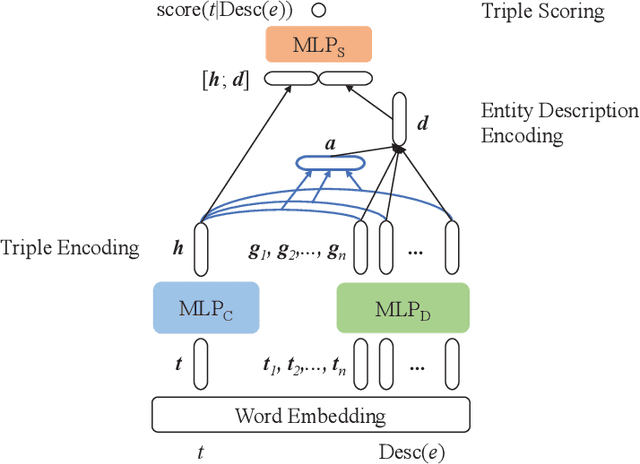

DeepLENS: Deep Learning for Entity Summarization

Mar 08, 2020

Abstract: Entity summarization has been a prominent task over knowledge graphs. While existing methods are mainly unsupervised, we present DeepLENS, a simple yet effective deep learning model where we exploit textual semantics for encoding triples and we score each candidate triple based on its interdependence on other triples. DeepLENS significantly outperformed existing methods on a public benchmark.

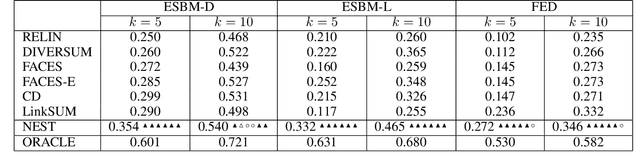

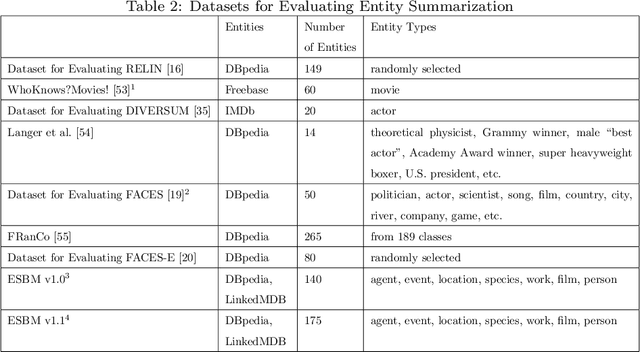

ESBM: An Entity Summarization BenchMark

Mar 08, 2020

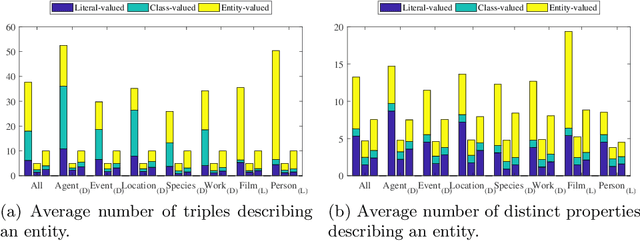

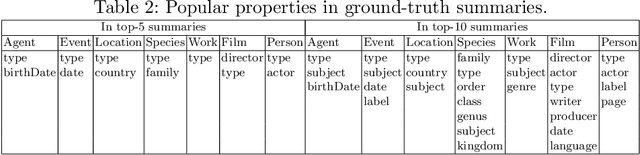

Abstract: Entity summarization is the problem of computing an optimal compact summary for an entity by selecting a size-constrained subset of triples from RDF data. Entity summarization supports a multiplicity of applications and has led to fruitful research. However, there is a lack of evaluation efforts that cover the broad spectrum of existing systems. One reason is a lack of benchmarks for evaluation. Some benchmarks are no longer available, while others are small and have limitations. In this paper, we create an Entity Summarization BenchMark (ESBM) which overcomes the limitations of existing benchmarks and meets standard desiderata for a benchmark. Using this largest available benchmark for evaluating general-purpose entity summarizers, we perform the most extensive experiment to date, in which 9 existing systems are compared. Considering that all of these systems are unsupervised, we also implement and evaluate a supervised learning-based system for reference.

Entity Summarization: State of the Art and Future Challenges

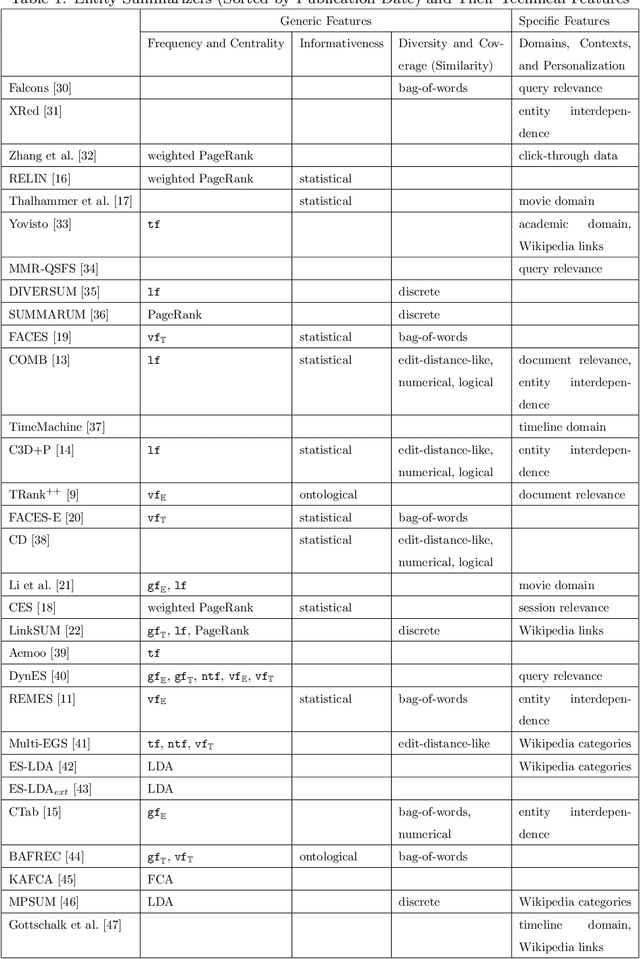

Oct 18, 2019

Abstract: The increasing availability of semantic data, which is commonly represented as entity-property-value triples, has enabled novel information retrieval applications. However, the magnitude of semantic data, in particular the large number of triples describing an entity, could overload users with excessive amounts of information. This has motivated fruitful research on automated generation of summaries for entity descriptions to satisfy users' information needs efficiently and effectively. We focus on this important topic of entity summarization, and present the first comprehensive survey of existing research. We review existing methods and evaluation efforts, and suggest directions for future work.
