Frans A. Oliehoek

Exploiting Submodular Value Functions For Scaling Up Active Perception

Sep 21, 2020

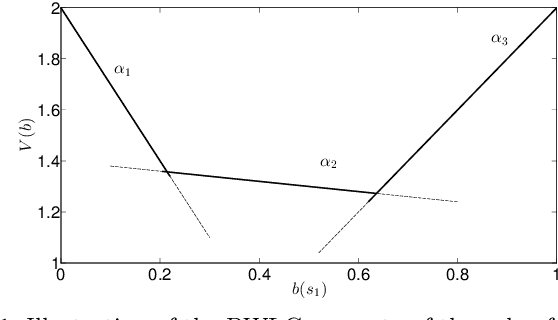

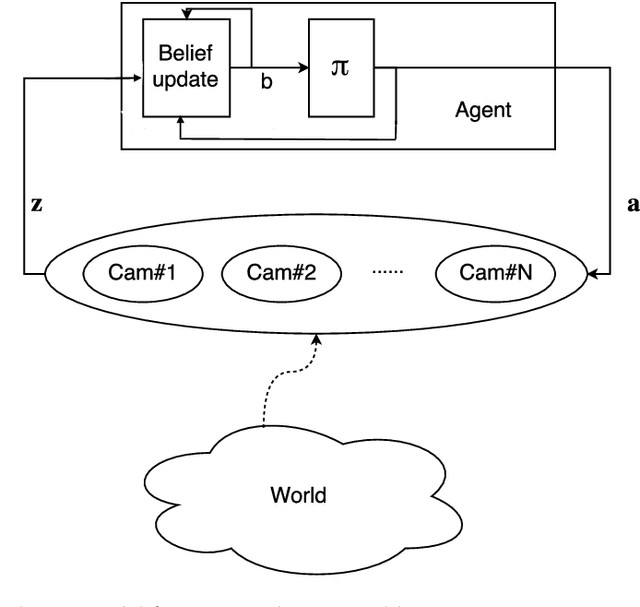

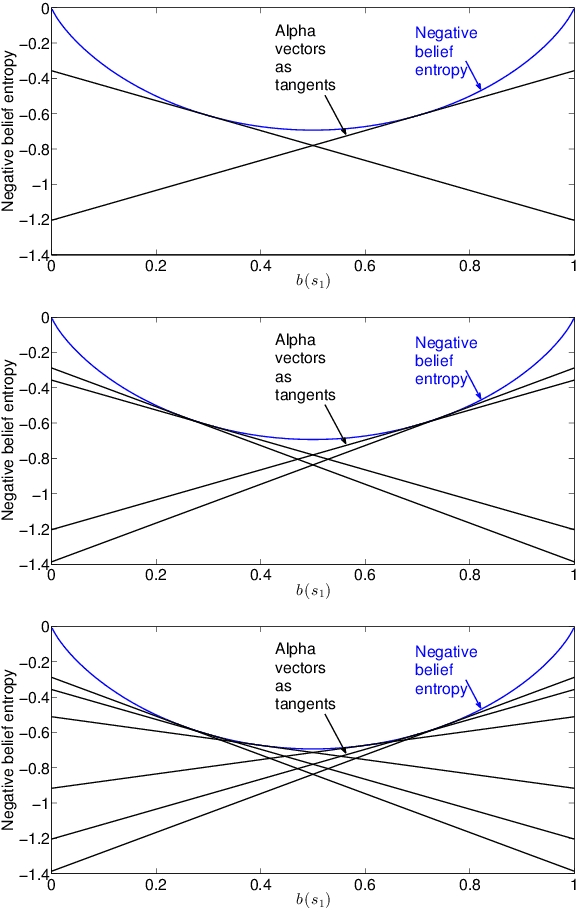

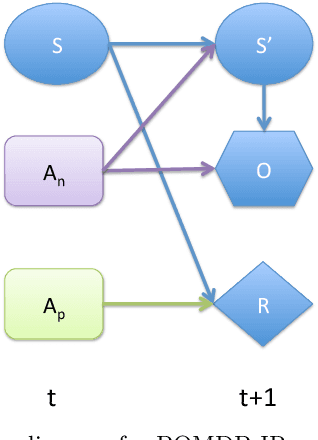

Abstract: In active perception tasks, an agent aims to select sensory actions that reduce its uncertainty about one or more hidden variables. While partially observable Markov decision processes (POMDPs) provide a natural model for such problems, reward functions that directly penalize uncertainty in the agent's belief can remove the piecewise-linear and convex (PWLC) property of the value function required by most POMDP planners. Furthermore, as the number of sensors available to the agent grows, the computational cost of POMDP planning grows exponentially with it, making POMDP planning infeasible with traditional methods. In this article, we address the twofold challenge of modeling and planning for active perception tasks. We show the mathematical equivalence of $\rho$POMDP and POMDP-IR, two frameworks for modeling active perception tasks that restore the PWLC property of the value function. To efficiently plan for active perception tasks, we identify and exploit the independence properties of POMDP-IR to reduce the computational cost of solving POMDP-IR (and $\rho$POMDP). We propose greedy point-based value iteration (PBVI), a new POMDP planning method that uses greedy maximization to greatly improve scalability in the action space of an active perception POMDP. Furthermore, we show that, under certain conditions, including submodularity, the value function computed using greedy PBVI is guaranteed to have bounded error with respect to the optimal value function. We establish the conditions under which the value function of an active perception POMDP is guaranteed to be submodular. Finally, we present a detailed empirical analysis on a dataset collected from a multi-camera tracking system employed in a shopping mall. Our method achieves similar performance to existing methods but at a fraction of the computational cost, leading to better scalability for solving active perception tasks.
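The greedy maximization idea mentioned in the abstract can be illustrated with a minimal sketch. This is not greedy PBVI itself; it only shows greedy selection of `k` items under a set function that is assumed to be monotone and submodular, a setting in which greedy selection is known to achieve at least a (1 - 1/e) fraction of the optimal value. The sensor names, the `COVERAGE` table, and the `coverage_value` function are hypothetical and chosen purely for illustration.

```python
from typing import Callable, FrozenSet, Iterable, Set


def greedy_select(candidates: Iterable[str],
                  value: Callable[[FrozenSet[str]], float],
                  k: int) -> Set[str]:
    """Pick up to k candidates, each time adding the one with the largest marginal gain."""
    pool = list(candidates)
    chosen: Set[str] = set()
    for _ in range(k):
        base = value(frozenset(chosen))
        best, best_gain = None, 0.0
        for c in pool:
            if c in chosen:
                continue
            gain = value(frozenset(chosen | {c})) - base
            if gain > best_gain:
                best, best_gain = c, gain
        if best is None:  # no remaining candidate adds value
            break
        chosen.add(best)
    return chosen


# Hypothetical toy problem: each sensor observes a set of grid cells.
COVERAGE = {
    "cam_a": {1, 2, 3},
    "cam_b": {3, 4},
    "cam_c": {4, 5, 6, 7},
    "cam_d": {1, 7},
}


def coverage_value(sensors: FrozenSet[str]) -> float:
    """Number of distinct cells observed; a monotone submodular set function."""
    return float(len(set().union(*(COVERAGE[s] for s in sensors))))


selected = greedy_select(COVERAGE, coverage_value, k=2)
print(sorted(selected), coverage_value(frozenset(selected)))
```

Greedy selection evaluates on the order of `n * k` subsets instead of all `n`-choose-`k` combinations, which is the source of the scalability gain in the number of sensors.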

Real-Time Resource Allocation for Tracking Systems

Sep 21, 2020

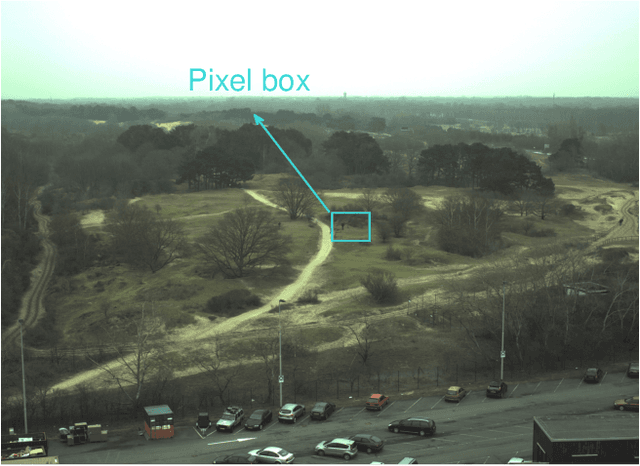

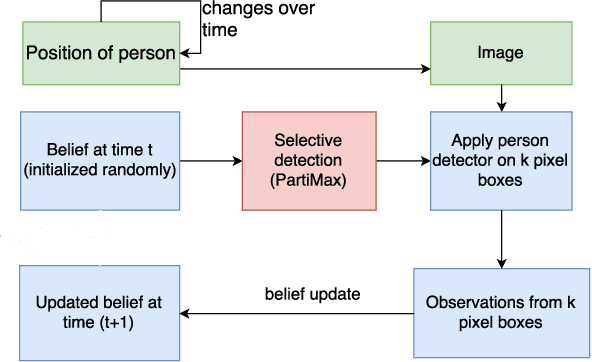

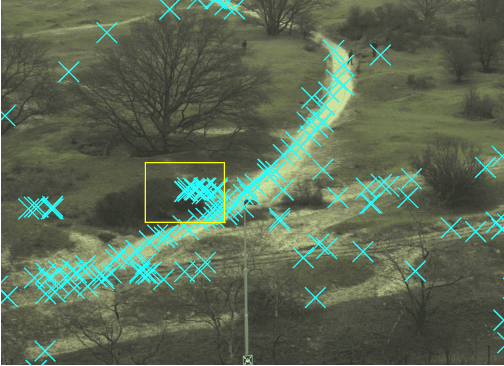

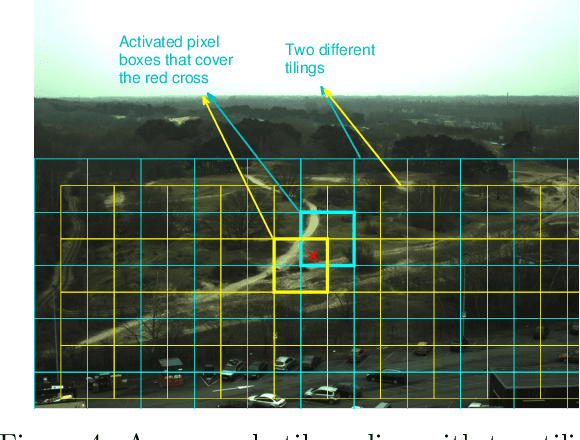

Abstract: Automated tracking is key to many computer vision applications. However, many tracking systems struggle to perform in real time due to the high computational cost of detecting people, especially in ultra-high-resolution images. We propose a new algorithm called *PartiMax* that greatly reduces this cost by applying the person detector only to the relevant parts of the image. PartiMax exploits information in the particle filter to select $k$ of the $n$ candidate *pixel boxes* in the image. We prove that PartiMax is guaranteed to make a near-optimal selection with error bounds that are independent of the problem size. Furthermore, empirical results on a real-life dataset show that our system runs in real time by processing only 10% of the pixel boxes in the image while still retaining 80% of the original tracking performance achieved when processing all pixel boxes.

* http://auai.org/uai2017/proceedings/papers/130.pdf

MDP Homomorphic Networks: Group Symmetries in Reinforcement Learning

Jun 30, 2020

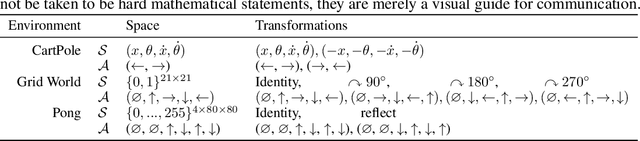

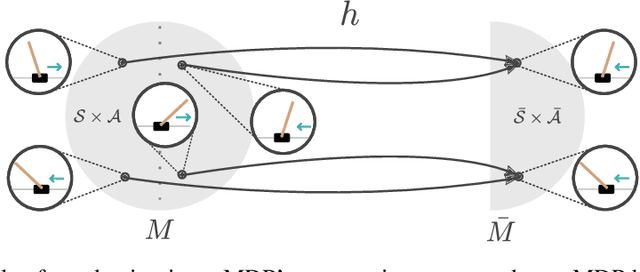

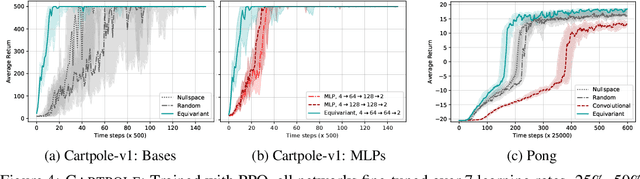

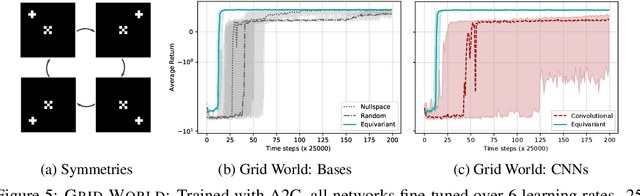

Abstract: This paper introduces MDP homomorphic networks for deep reinforcement learning. MDP homomorphic networks are neural networks that are equivariant under symmetries in the joint state-action space of an MDP. Current approaches to deep reinforcement learning do not usually exploit knowledge about such structure. By building this prior knowledge into policy and value networks using an equivariance constraint, we can reduce the size of the solution space. We specifically focus on group-structured symmetries (invertible transformations). Additionally, we introduce an easy method for constructing equivariant network layers numerically, so the system designer need not solve the constraints by hand, as is typically done. We construct MDP homomorphic MLPs and CNNs that are equivariant under either a group of reflections or rotations. We show that such networks converge faster than unstructured baselines on CartPole, a grid world and Pong.
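To make the equivariance constraint concrete, here is a minimal sketch of one standard numerical way to obtain an equivariant linear map: averaging an arbitrary weight matrix over the group. Whether this matches the paper's construction in detail is not claimed here. The example assumes a bias-free linear layer and a hypothetical two-element reflection group in the style of CartPole, where negating the 4-dimensional state corresponds to swapping the two actions; `symmetrize`, `is_equivariant`, `in_reps`, and `out_reps` are illustrative names.

```python
import numpy as np


def symmetrize(weight, in_reps, out_reps):
    """Project `weight` onto the equivariant subspace by group averaging.

    in_reps[i] and out_reps[i] are the matrices by which group element i
    acts on the layer input and output, respectively.
    """
    terms = [np.linalg.inv(k) @ weight @ l for l, k in zip(in_reps, out_reps)]
    return np.mean(terms, axis=0)


def is_equivariant(weight, in_reps, out_reps):
    """Check W @ L_g == K_g @ W for every group element g."""
    return all(np.allclose(weight @ l, k @ weight)
               for l, k in zip(in_reps, out_reps))


# Assumed group: identity and one reflection.
in_reps = [np.eye(4), -np.eye(4)]                          # state -> -state
out_reps = [np.eye(2), np.array([[0.0, 1.0], [1.0, 0.0]])]  # swap the two actions

rng = np.random.default_rng(0)
W = rng.normal(size=(2, 4))          # unconstrained weights
W_eq = symmetrize(W, in_reps, out_reps)

print(is_equivariant(W, in_reps, out_reps))     # an arbitrary matrix generally is not
print(is_equivariant(W_eq, in_reps, out_reps))  # the averaged matrix is
```

Under this group, the averaged matrix has its second row equal to the negative of its first, so reflecting the state swaps the two action outputs.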

Sensor Data for Human Activity Recognition: Feature Representation and Benchmarking

May 15, 2020

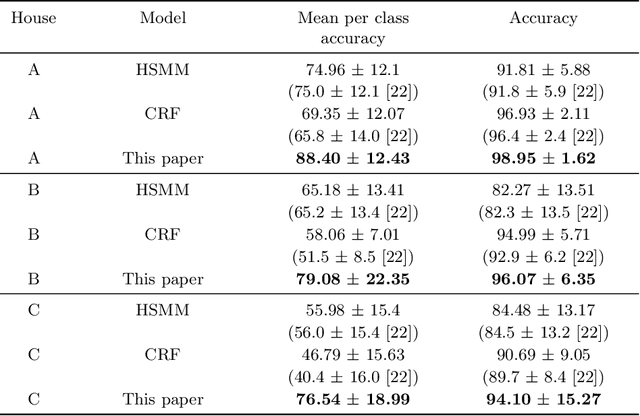

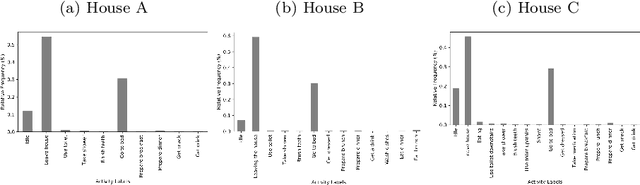

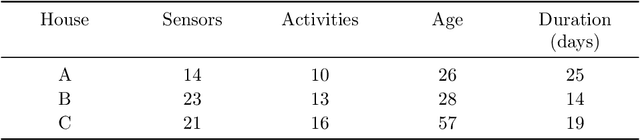

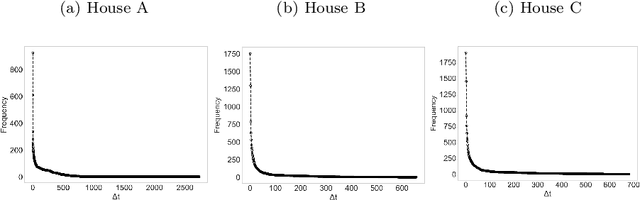

Abstract: The field of Human Activity Recognition (HAR) focuses on obtaining and analysing data captured from monitoring devices (e.g. sensors). There is a wide range of applications within the field; for instance, assisted living, security surveillance, and intelligent transportation. In HAR, the development of Activity Recognition models is dependent upon the data captured by these devices and the methods used to analyse them, which directly affect performance metrics. In this work, we address the issue of accurately recognising human activities using different Machine Learning (ML) techniques. We propose a new feature representation based on consecutively occurring observations and compare it against previously used feature representations using a wide range of classification methods. Experimental results demonstrate that techniques based on the proposed representation outperform the baselines and achieve better accuracy for both highly and less frequent actions. We also investigate how the addition of further features and their pre-processing techniques affects performance, leading to state-of-the-art accuracy on a Human Activity Recognition dataset.

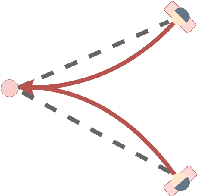

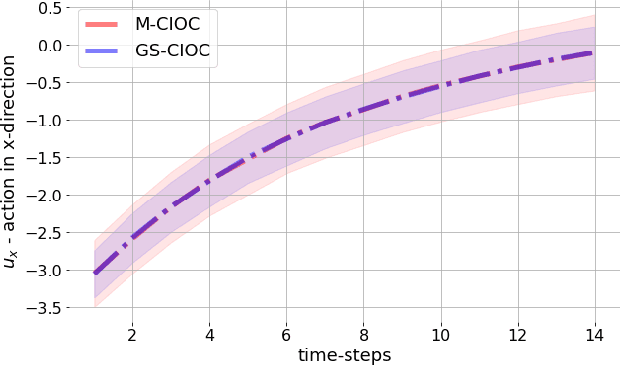

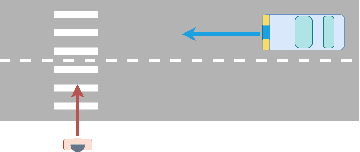

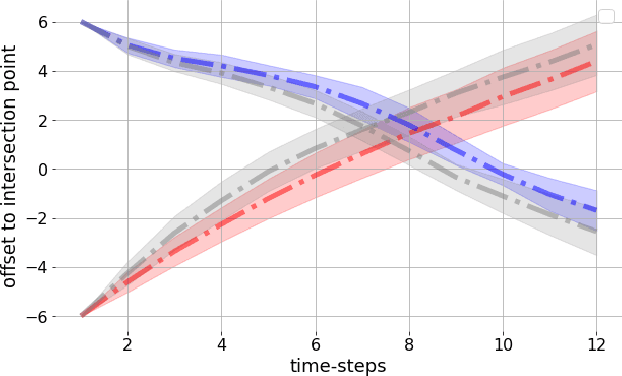

Diversity in Action: General-Sum Multi-Agent Continuous Inverse Optimal Control

Apr 27, 2020

Abstract: Traffic scenarios are inherently interactive. Multiple decision-makers predict the actions of others and choose strategies that maximize their rewards. We view these interactions from the perspective of game theory, which introduces various challenges. Humans are not entirely rational, their rewards need to be inferred from real-world data, and any prediction algorithm needs to be real-time capable so that we can use it in an autonomous vehicle (AV). In this work, we present a game-theoretic method that addresses all of the points above. Compared to many existing methods used for AVs, our approach 1) does not require perfect communication, and 2) allows for individual rewards per agent. Our experiments demonstrate that these more realistic assumptions lead to qualitatively and quantitatively different reward inference and prediction of future actions that match better with expected real-world behaviour.

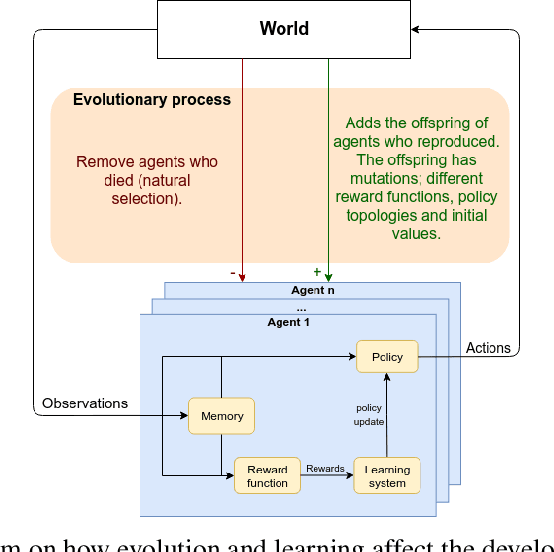

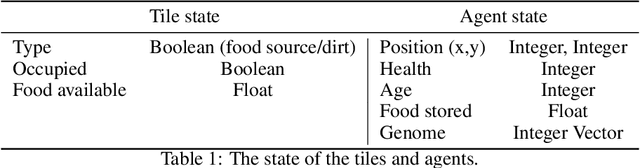

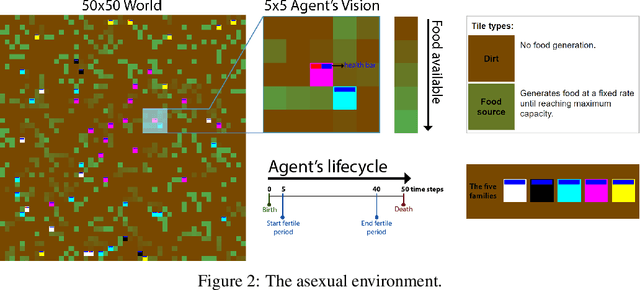

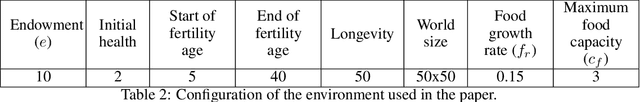

Mimicking Evolution with Reinforcement Learning

Mar 31, 2020

Abstract: Evolution gave rise to human and animal intelligence here on Earth. We argue that the path to developing artificial human-like intelligence will pass through mimicking the evolutionary process in a nature-like simulation. In Nature, there are two processes driving the development of the brain: evolution and learning. Evolution acts slowly, across generations, and amongst other things, it defines what agents learn by changing their internal reward function. Learning acts fast, across one's lifetime, and it quickly updates agents' policy to maximise pleasure and minimise pain. The reward function is slowly aligned with the fitness function by evolution; however, as agents evolve, the environment and its fitness function also change, increasing the misalignment between reward and fitness. It is extremely computationally expensive to replicate these two processes in simulation. This work proposes Evolution via Evolutionary Reward (EvER), which allows learning to single-handedly drive the search for policies with increasing evolutionary fitness by ensuring the alignment of the reward function with the fitness function. In this search, EvER makes use of the whole state-action trajectories that agents go through during their lifetime. In contrast, current evolutionary algorithms discard this information and consequently limit their potential efficiency at tackling sequential decision problems. We test our algorithm in two simple bio-inspired environments and show its superiority at generating agents that are more capable of surviving and reproducing their genes when compared with a state-of-the-art evolutionary algorithm.

Plannable Approximations to MDP Homomorphisms: Equivariance under Actions

Feb 27, 2020

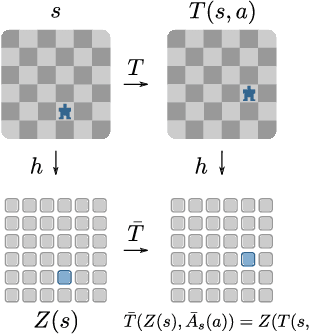

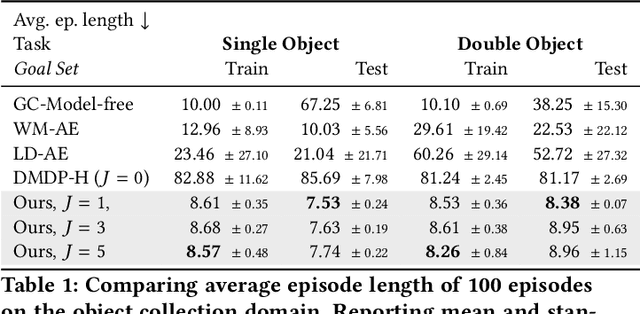

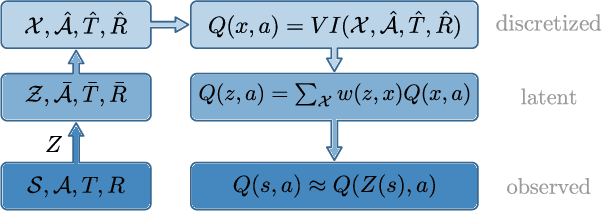

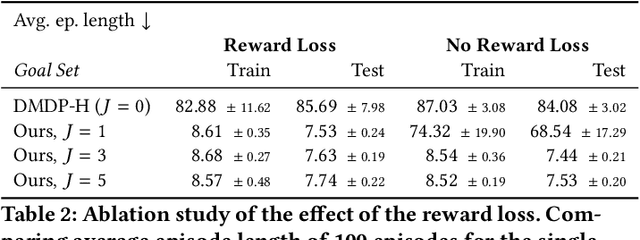

Abstract: This work exploits action equivariance for representation learning in reinforcement learning. Equivariance under actions states that transitions in the input space are mirrored by equivalent transitions in latent space, while the map and transition functions should also commute. We introduce a contrastive loss function that enforces action equivariance on the learned representations. We prove that when our loss is zero, we have a homomorphism of a deterministic Markov Decision Process (MDP). Learning equivariant maps leads to structured latent spaces, allowing us to build a model on which we plan through value iteration. We show experimentally that for deterministic MDPs, the optimal policy in the abstract MDP can be successfully lifted to the original MDP. Moreover, the approach easily adapts to changes in the goal states. Empirically, we show that in such MDPs, we obtain better representations in fewer epochs compared to representation learning approaches using reconstructions, while generalizing better to new goals than model-free approaches.
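The planning step referred to in the abstract, value iteration on a deterministic abstract MDP, can be sketched as follows. This is a generic textbook value iteration, not the paper's implementation; the three latent states `z0`, `z1`, `z2`, the transition table, and the reward table are hypothetical stand-ins for a learned abstract model.

```python
def value_iteration(transitions, rewards, gamma=0.9, tol=1e-8):
    """Value iteration for a deterministic MDP.

    transitions[s][a] is the successor of state s under action a;
    rewards[(s, a)] is the immediate reward (missing entries count as 0).
    """
    def q(s, a, values):
        return rewards.get((s, a), 0.0) + gamma * values[transitions[s][a]]

    values = {s: 0.0 for s in transitions}
    while True:
        delta = 0.0
        for s in transitions:
            best = max(q(s, a, values) for a in transitions[s])
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            break
    # Greedy policy with respect to the converged values.
    policy = {s: max(transitions[s], key=lambda a: q(s, a, values))
              for s in transitions}
    return values, policy


# Hypothetical abstract MDP: a three-state chain with an absorbing goal z2.
transitions = {
    "z0": {"left": "z0", "right": "z1"},
    "z1": {"left": "z0", "right": "z2"},
    "z2": {"left": "z2", "right": "z2"},
}
rewards = {("z1", "right"): 1.0}  # reward for stepping into the goal

values, policy = value_iteration(transitions, rewards)
print(values, policy)
```

In this sketch, changing the goal only requires editing `rewards` and re-running the planner; the transition table is reused unchanged.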

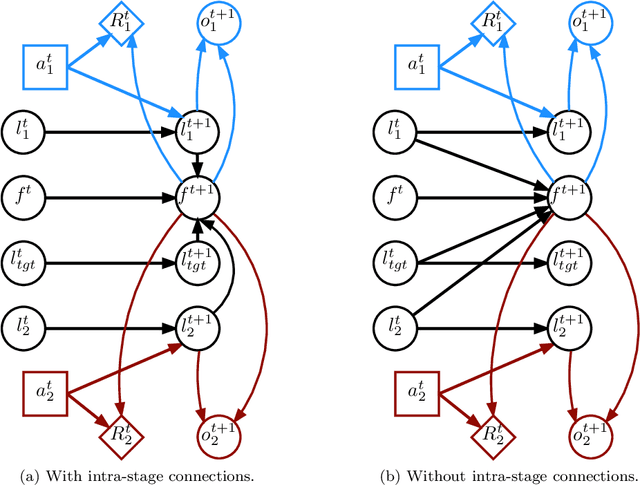

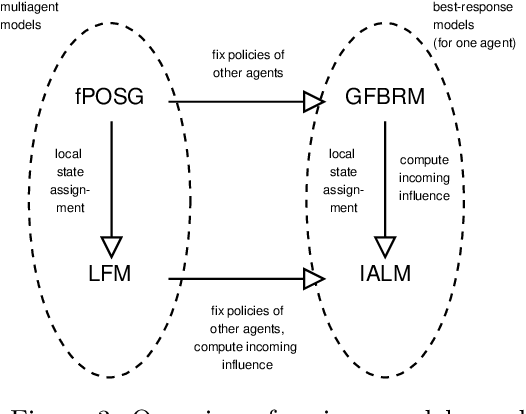

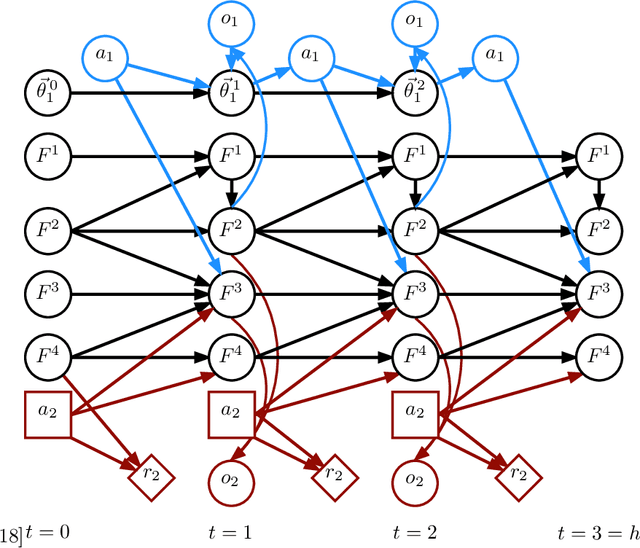

A Sufficient Statistic for Influence in Structured Multiagent Environments

Jul 22, 2019

Abstract: Making decisions in complex environments is a key challenge in artificial intelligence (AI). Situations involving multiple decision makers are particularly complex, leading to computational intractability of principled solution methods. A body of work in AI [4, 3, 41, 45, 47, 2] has tried to mitigate this problem by trying to bring down interaction to its core: how does the policy of one agent influence another agent? If we can find more compact representations of such influence, this can help us deal with the complexity, for instance by searching the space of influences rather than that of policies [45]. However, so far these notions of influence have been restricted in their applicability to special cases of interaction. In this paper we formalize influence-based abstraction (IBA), which facilitates the elimination of latent state factors without any loss in value, for a very general class of problems described as factored partially observable stochastic games (fPOSGs) [33]. This generalizes existing descriptions of influence, and thus can serve as the foundation for improvements in scalability and other insights in decision making in complex settings.

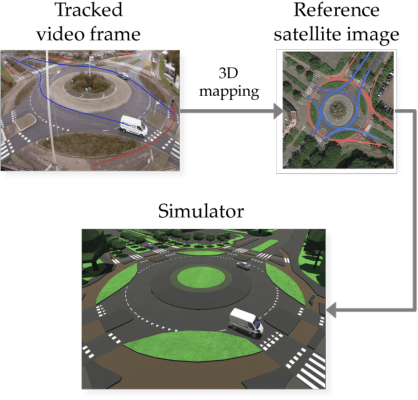

Learning from Demonstration in the Wild

Nov 08, 2018

Abstract: Learning from demonstration (LfD) is useful in settings where hand-coding behaviour or a reward function is impractical. It has succeeded in a wide range of problems but typically relies on artificially generated demonstrations or specially deployed sensors and has not generally been able to leverage the copious demonstrations available in the wild: those that capture behaviour that was occurring anyway using sensors that were already deployed for another purpose, e.g., traffic camera footage capturing demonstrations of natural behaviour of vehicles, cyclists, and pedestrians. We propose video to behaviour (ViBe), a new approach to learning models of road user behaviour that requires as input only unlabelled raw video data of a traffic scene collected from a single, monocular, uncalibrated camera with ordinary resolution. Our approach calibrates the camera, detects relevant objects, tracks them through time, and uses the resulting trajectories to perform LfD, yielding models of naturalistic behaviour. We apply ViBe to raw videos of a traffic intersection and show that it can learn purely from videos, without additional expert knowledge.

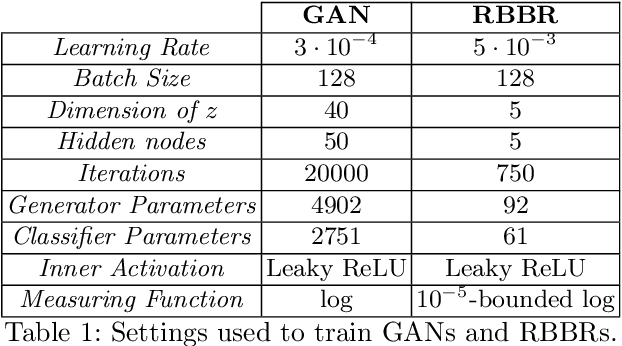

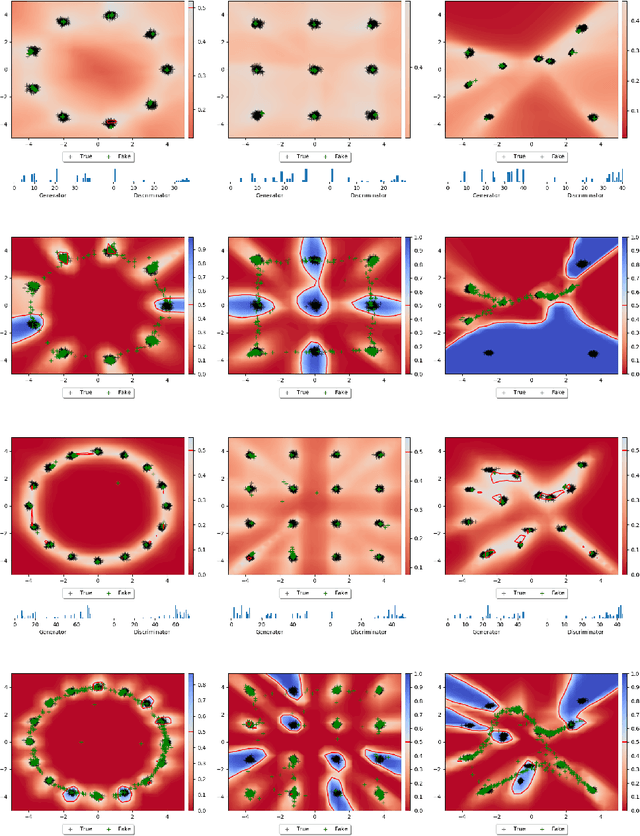

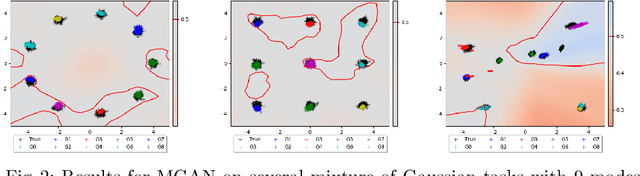

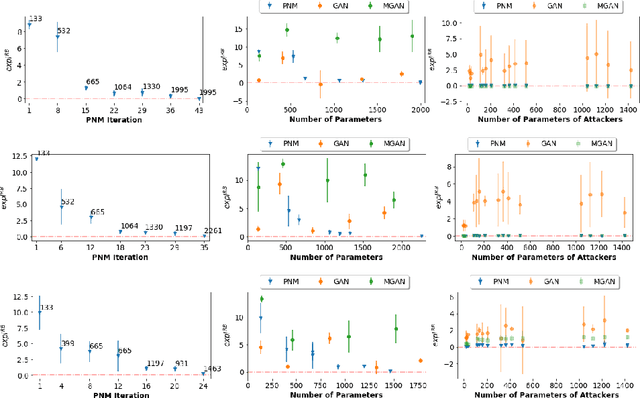

Beyond Local Nash Equilibria for Adversarial Networks

Jul 26, 2018

Abstract: Save for some special cases, current training methods for Generative Adversarial Networks (GANs) are at best guaranteed to converge to a "local Nash equilibrium" (LNE). Such LNEs, however, can be arbitrarily far from an actual Nash equilibrium (NE), which implies that there are no guarantees on the quality of the found generator or classifier. This paper proposes to model GANs explicitly as finite games in mixed strategies, thereby ensuring that every LNE is an NE. With this formulation, we propose a solution method that is proven to monotonically converge to a resource-bounded Nash equilibrium (RB-NE): by increasing computational resources we can find better solutions. We empirically demonstrate that our method is less prone to typical GAN problems such as mode collapse, and produces solutions that are less exploitable than those produced by GANs and MGANs, and closely resemble theoretical predictions about NEs.
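The notion of a solution being "less exploitable" can be illustrated on a small finite game in mixed strategies. The sketch below assumes a two-player zero-sum matrix game (an assumption of this example, not a statement about the paper's exact setup) and measures how much both players together could gain by switching to a best response; the value is zero exactly at a Nash equilibrium. The `exploitability` function and the matching-pennies payoff matrix are illustrative choices.

```python
def exploitability(payoff, row_mix, col_mix):
    """Sum of best-response gains in a zero-sum matrix game.

    payoff[i][j] is the row player's payoff (the column player receives its
    negative); row_mix and col_mix are mixed strategies (probability lists).
    """
    n_rows, n_cols = len(payoff), len(payoff[0])
    # Row player's expected payoff for each pure action against col_mix.
    row_payoffs = [sum(payoff[i][j] * col_mix[j] for j in range(n_cols))
                   for i in range(n_rows)]
    # Row player's expected payoff for each pure column action against row_mix.
    col_payoffs = [sum(payoff[i][j] * row_mix[i] for i in range(n_rows))
                   for j in range(n_cols)]
    # The row player maximizes; the column player minimizes the row payoff.
    return max(row_payoffs) - min(col_payoffs)


# Matching pennies: the unique Nash equilibrium is uniform play by both players.
MATCHING_PENNIES = [[1.0, -1.0],
                    [-1.0, 1.0]]

print(exploitability(MATCHING_PENNIES, [0.5, 0.5], [0.5, 0.5]))  # equilibrium
print(exploitability(MATCHING_PENNIES, [1.0, 0.0], [1.0, 0.0]))  # pure strategies
```

A pair of pure strategies in matching pennies is fully exploitable, while the uniform mixture cannot be improved upon by either player.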
