Elizabeth Croft

Monash University

Metrics for Evaluating Social Conformity of Crowd Navigation Algorithms

Feb 02, 2022

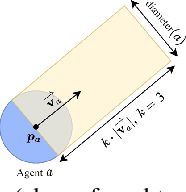

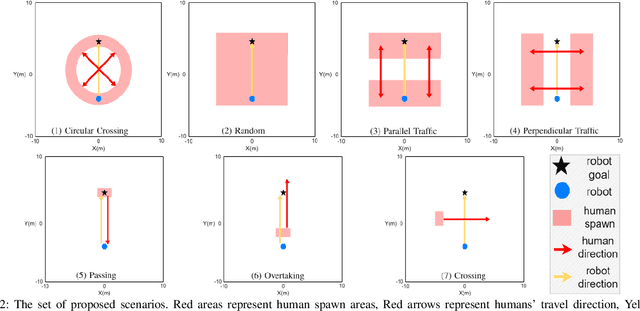

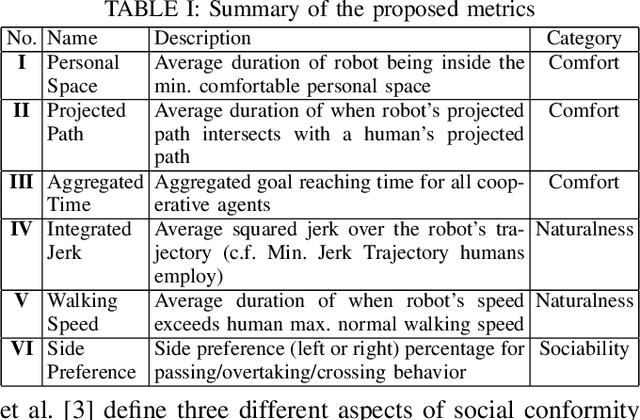

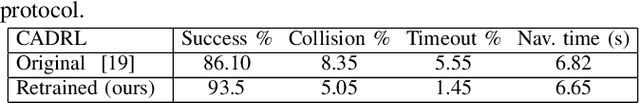

Abstract: Recent protocols and metrics for training and evaluating autonomous robot navigation through crowds are inconsistent due to diversified definitions of "social behavior". This makes it difficult, if not impossible, to effectively compare published navigation algorithms. Furthermore, without a good evaluation protocol, the resulting algorithms may fail to generalize because of a lack of diversity in training. To address these gaps, this paper facilitates a more comprehensive evaluation and objective comparison of crowd navigation algorithms by proposing a consistent set of metrics that accounts for both efficiency and social conformity, and a systematic protocol comprising multiple crowd navigation scenarios of varying complexity for evaluation. We tested four state-of-the-art algorithms under this protocol. Results revealed that some state-of-the-art algorithms have considerable difficulty generalizing, and that using our protocol for training improved algorithm performance. We demonstrate that the set of proposed metrics provides more insight and effectively differentiates the performance of these algorithms with respect to efficiency and social conformity.

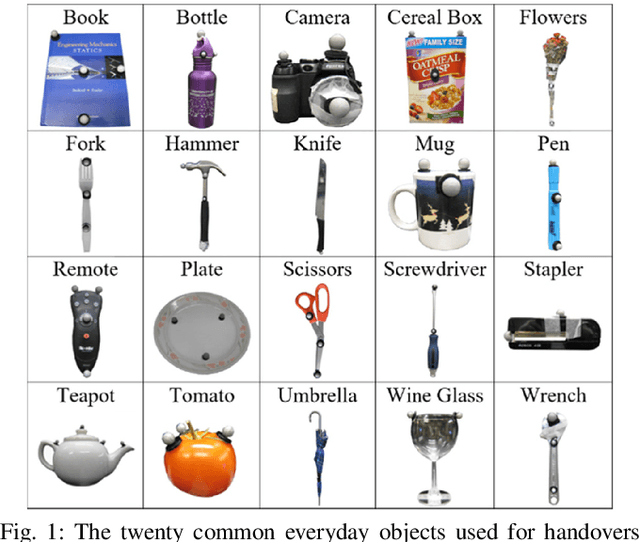

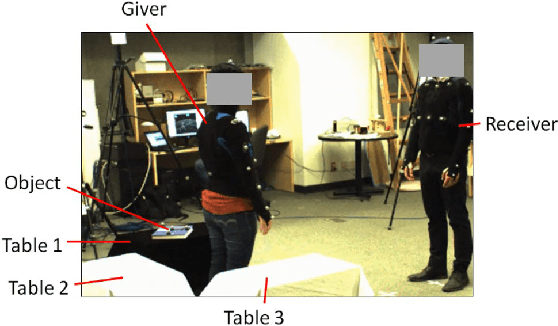

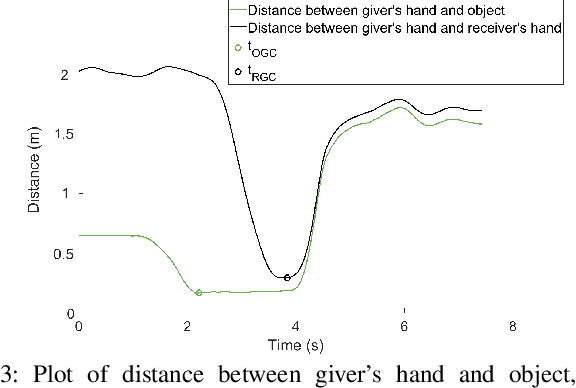

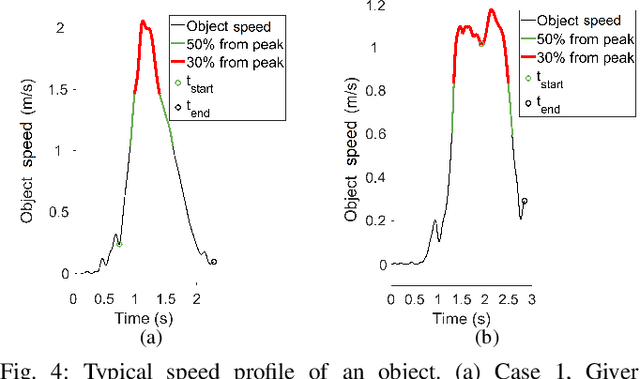

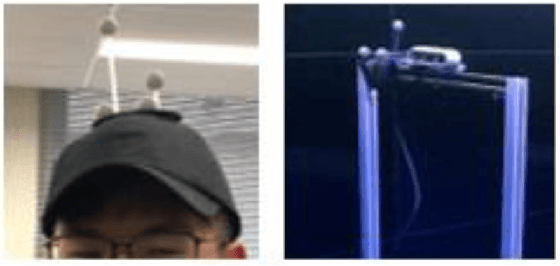

An Experimental Validation and Comparison of Reaching Motion Models for Unconstrained Handovers: Towards Generating Humanlike Motions for Human-Robot Handovers

Aug 29, 2021

Abstract: The Minimum Jerk motion model has long been cited in the literature for human point-to-point reaching motions in single-person tasks. While it has been demonstrated that applying minimum-jerk-like trajectories to robot reaching motions in the joint action task of human-robot handovers allows a robot giver to be perceived as more careful, safe, and skilled, it has not been verified whether human reaching motions in handovers follow the Minimum Jerk model. To experimentally test and verify motion models for human reaches in handovers, we examined human reaching motions in unconstrained handovers (where the person is allowed to move their whole body) and fitted them against 1) the Minimum Jerk model, 2) its variation, the Decoupled Minimum Jerk model, and 3) the recently proposed Elliptical (Conic) model. Results showed that the Conic model fits unconstrained human handover reaching motions best. Furthermore, we discovered that, unlike constrained, single-person reaching motions, which have been found to be elliptical, there is a split between elliptical and hyperbolic conic types. We expect our results will help guide generation of more humanlike reaching motions for human-robot handover tasks.
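For reference, the standard Minimum Jerk model of a point-to-point reach that starts and ends at rest has a closed form: with normalized time tau = t / T, position follows x0 + (xf - x0) * (10 tau^3 - 15 tau^4 + 6 tau^5). The Python sketch below evaluates that profile and its velocity for a single coordinate. The function names and sample values are illustrative assumptions, not taken from the paper, and the paper's fitting procedure for the three models is not reproduced here.

```python
def minimum_jerk_position(x0, xf, duration, t):
    """Position at time t on a minimum-jerk reach from x0 to xf (one coordinate)."""
    # Normalize time to [0, 1]; clamp so queries outside the reach hold the endpoints.
    tau = min(max(t / duration, 0.0), 1.0)
    # Standard quintic blend with zero velocity and acceleration at both ends.
    s = 10 * tau**3 - 15 * tau**4 + 6 * tau**5
    return x0 + (xf - x0) * s


def minimum_jerk_velocity(x0, xf, duration, t):
    """Velocity at time t on the same reach (time derivative of the position)."""
    tau = min(max(t / duration, 0.0), 1.0)
    ds_dt = (30 * tau**2 - 60 * tau**3 + 30 * tau**4) / duration
    return (xf - x0) * ds_dt


if __name__ == "__main__":
    # Hypothetical 0.4 m reach lasting 1.2 s, sampled at five instants.
    for step in range(5):
        t = 1.2 * step / 4
        print(round(t, 2), round(minimum_jerk_position(0.0, 0.4, 1.2, t), 4))
```

The velocity profile is bell-shaped and symmetric about the midpoint of the reach, which is the property usually checked first when comparing recorded reaches against this model.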

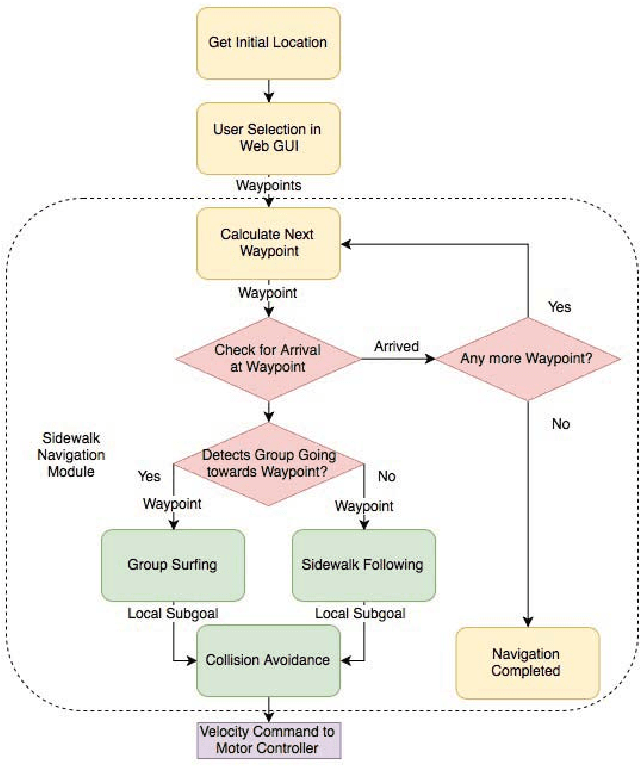

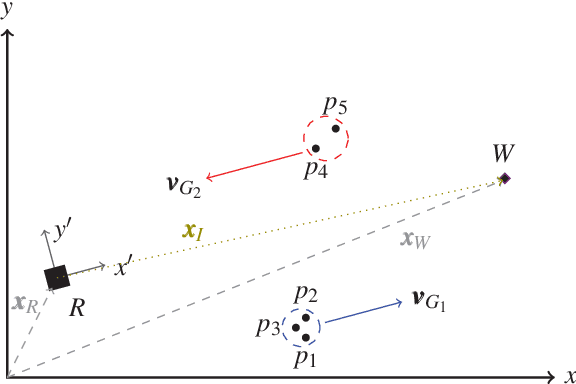

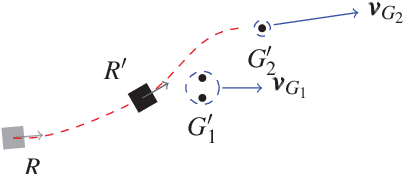

Group Surfing: A Pedestrian-Based Approach to Sidewalk Robot Navigation

Apr 13, 2021

Abstract: In this paper, we propose a novel navigation system for mobile robots in pedestrian-rich sidewalk environments. Sidewalks are unique in that the pedestrian-shared space has characteristics of both roads and indoor spaces. Like vehicles on roads, pedestrian movement often manifests as linear flows in opposing directions. On the other hand, pedestrians also form crowds and can exhibit much more random movements than vehicles. Classical algorithms are insufficient for navigating safely around pedestrians while remaining on the sidewalk. Thus, our approach takes advantage of natural human motion to allow a robot to adapt to sidewalk navigation in a safe and socially compliant manner. We developed a *group surfing* method which aims to imitate the optimal pedestrian group for bringing the robot closer to its goal. For pedestrian-sparse environments, we propose a sidewalk edge detection and following method. Underlying these two navigation methods, the collision avoidance scheme is human-aware. The integrated navigation stack is evaluated and demonstrated in simulation. A hardware demonstration is also presented.

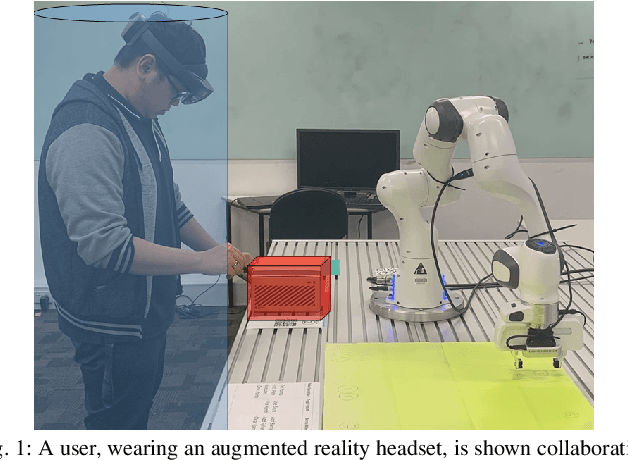

Virtual Barriers in Augmented Reality for Safe and Effective Human-Robot Cooperation in Manufacturing

Apr 12, 2021

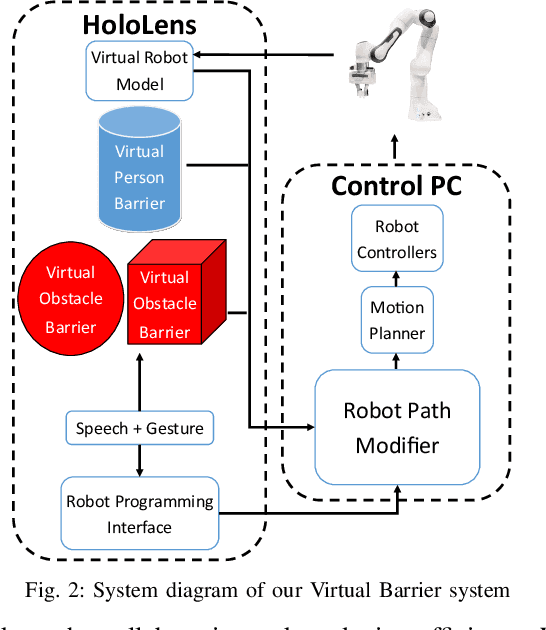

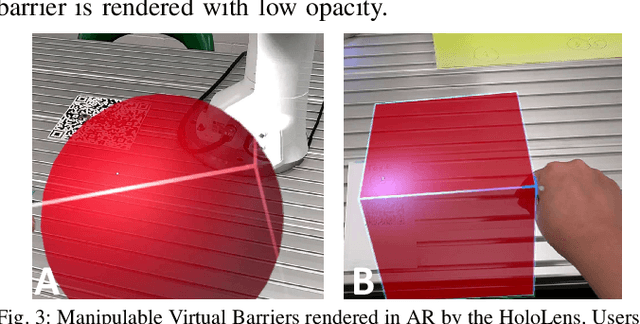

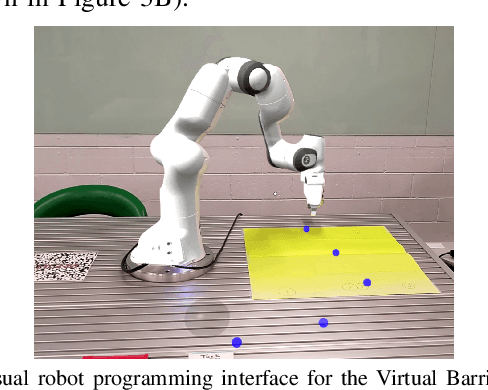

Abstract: Safety is a fundamental requirement in any human-robot collaboration scenario. To ensure the safety of users in such scenarios, we propose a novel Virtual Barrier system facilitated by an augmented reality interface. Our system provides two kinds of Virtual Barriers to ensure safety: 1) a Virtual Person Barrier which encapsulates and follows the user to protect them from colliding with the robot, and 2) Virtual Obstacle Barriers which users can spawn to protect objects or regions that the robot should not enter. To enable effective human-robot collaboration, our system includes an intuitive robot programming interface utilizing speech commands and hand gestures, and features the capability of automatic path re-planning when potential collisions are detected as a result of a barrier intersecting the robot's planned path. We compared our novel system with a standard 2D display interface through a user study, where participants performed a task mimicking an industrial manufacturing procedure. Results show that our system increases the user's sense of safety and task efficiency, and makes the interaction more intuitive.
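The re-planning trigger described above, a barrier intersecting the robot's planned path, can be illustrated with a simple geometric check. The Python sketch below assumes a spherical barrier and a path given as straight segments between 3D waypoints; both are assumptions made for illustration, since the abstract does not state the barrier geometry or path representation, and the function names are hypothetical.

```python
import math


def segment_hits_sphere(p, q, center, radius):
    """True if the segment from p to q comes within radius of center."""
    d = [qi - pi for pi, qi in zip(p, q)]        # segment direction
    f = [ci - pi for pi, ci in zip(p, center)]   # start point to sphere center
    dd = sum(x * x for x in d)
    # Parameter of the closest point on the segment, clamped to [0, 1].
    t = 0.0 if dd == 0 else max(0.0, min(1.0, sum(a * b for a, b in zip(f, d)) / dd))
    closest = [pi + t * di for pi, di in zip(p, d)]
    return math.dist(closest, center) <= radius


def path_needs_replanning(waypoints, center, radius):
    """True if any segment of the planned path enters the (assumed spherical) barrier."""
    return any(
        segment_hits_sphere(a, b, center, radius)
        for a, b in zip(waypoints, waypoints[1:])
    )


if __name__ == "__main__":
    path = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (2.0, 2.0, 0.0)]
    print(path_needs_replanning(path, center=(1.0, 0.5, 0.0), radius=0.6))
```

A real system would likely check the robot's full swept volume rather than a single path line, so this is only a starting point for reasoning about the trigger condition.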

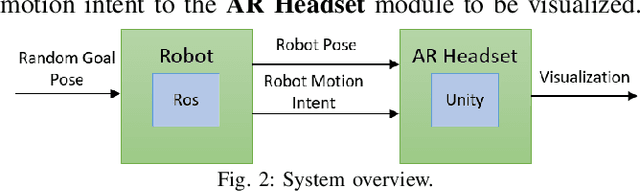

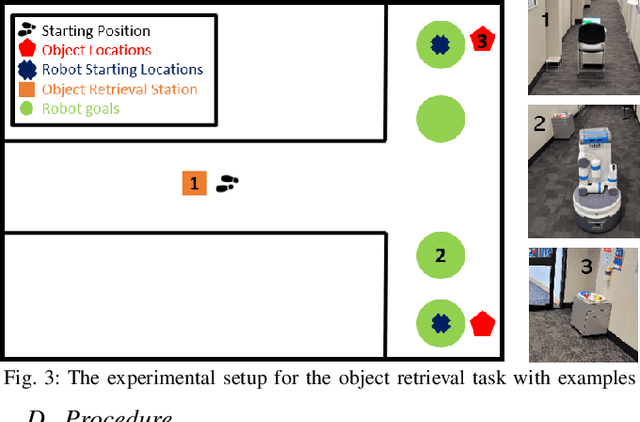

Seeing Thru Walls: Visualizing Mobile Robots in Augmented Reality

Apr 08, 2021

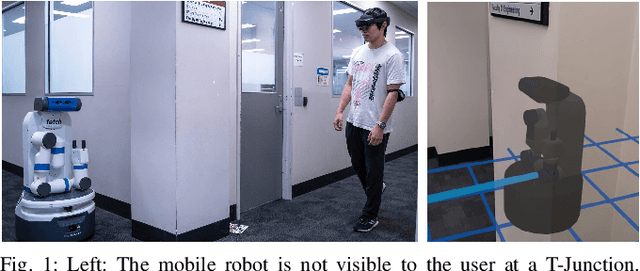

Abstract: We present an approach for visualizing mobile robots through an Augmented Reality headset when there is no line-of-sight visibility between the robot and the human. Three elements are visualized in Augmented Reality: 1) the robot's 3D model to indicate its position, 2) an arrow emanating from the robot to indicate its planned movement direction, and 3) a 2D grid to represent the ground plane. We conduct a user study with 18 participants, in which each participant is asked to retrieve objects, one at a time, from stations at the two sides of a T-junction at the end of a hallway where a mobile robot is roaming. The results show that visualizations improved the perceived safety and efficiency of the task and led to participants being more comfortable with the robot within their personal spaces. Furthermore, visualizing the motion intent in addition to the robot model was found to be more effective than visualizing the robot model alone. The proposed system can improve the safety of automated warehouses by increasing the visibility and predictability of robots.

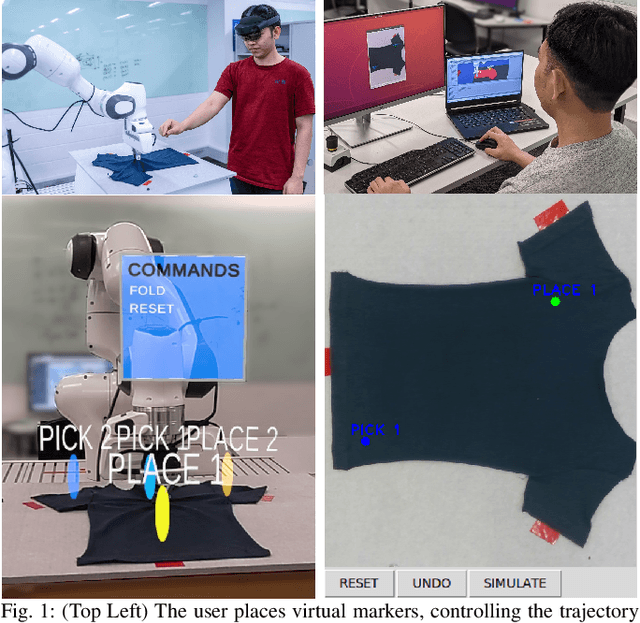

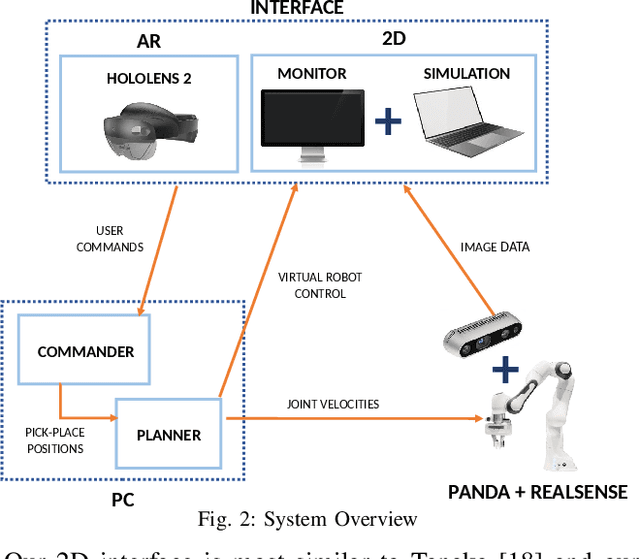

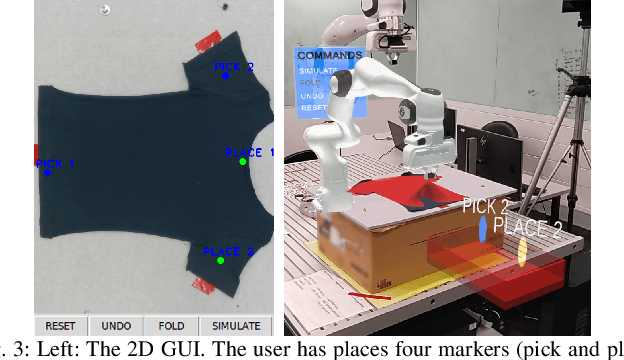

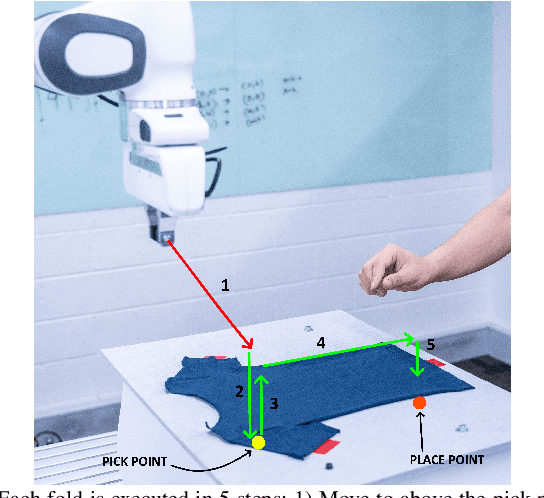

Demonstrating Cloth Folding to Robots: Design and Evaluation of a 2D and a 3D User Interface

Apr 07, 2021

Abstract: An appropriate user interface to collect human demonstration data for deformable object manipulation has been mostly overlooked in the literature. We present an interaction design for demonstrating cloth folding to robots. Users choose pick and place points on the cloth and can preview a visualization of a simulated cloth before real-robot execution. Two interfaces are proposed: a 2D display-and-mouse interface where points are placed by clicking on an image of the cloth, and a 3D Augmented Reality interface where the chosen points are placed by hand gestures. We conduct a user study with 18 participants, in which each user completed two sequential folds to achieve a cloth goal shape. Results show that while both interfaces were acceptable, the 3D interface was found to be more suitable for understanding the task, and the 2D interface suitable for repetition. Results also found that fold previews improve three key metrics: task efficiency, the ability to predict the final shape of the cloth, and overall user satisfaction.

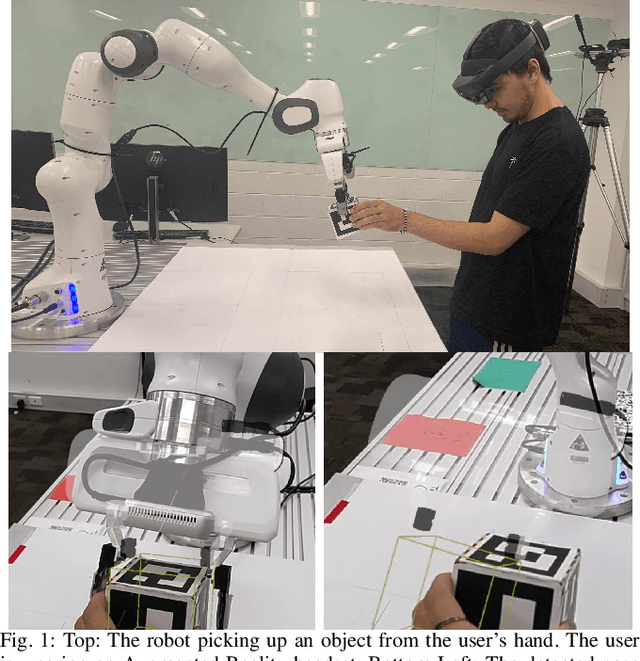

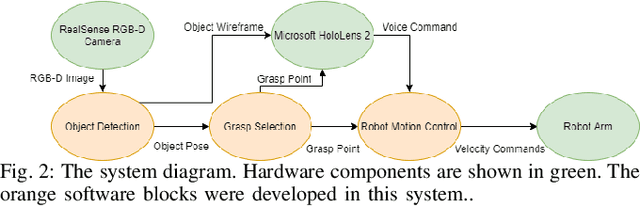

Visualizing Robot Intent for Object Handovers with Augmented Reality

Mar 06, 2021

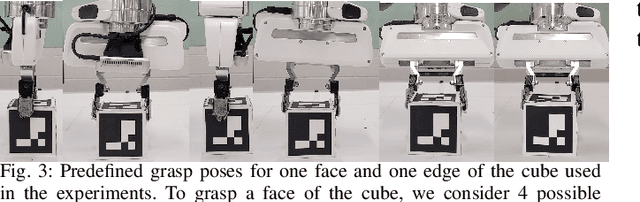

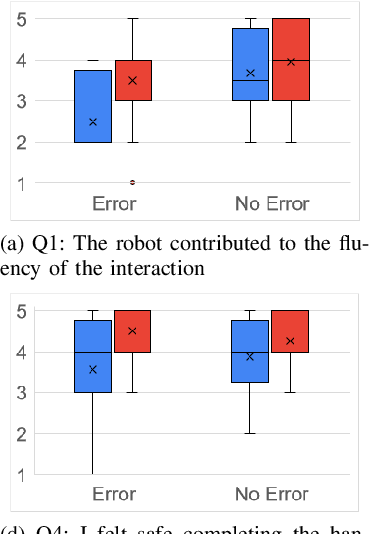

Abstract: Humans are very skillful in communicating their intent for when and where a handover would occur. On the other hand, even the state-of-the-art robotic implementations for handovers display a general lack of communication skills. We propose visualizing the internal state and intent of robots for Human-to-Robot Handovers using Augmented Reality. Specifically, we visualize 3D models of the object and the robotic gripper to communicate the robot's estimation of where the object is and the pose in which the robot intends to grasp the object. We conduct a user study with 16 participants, in which each participant handed over a cube-shaped object to the robot 12 times. Results show that visualizing robot intent using augmented reality substantially improves the subjective experience of the users for handovers and decreases the time to transfer the object. Results also indicate that the benefits of augmented reality are still present even when the robot makes errors in localizing the object.

Aligning Robot's Behaviours and Users' Perceptions Through Participatory Prototyping

Jan 11, 2021

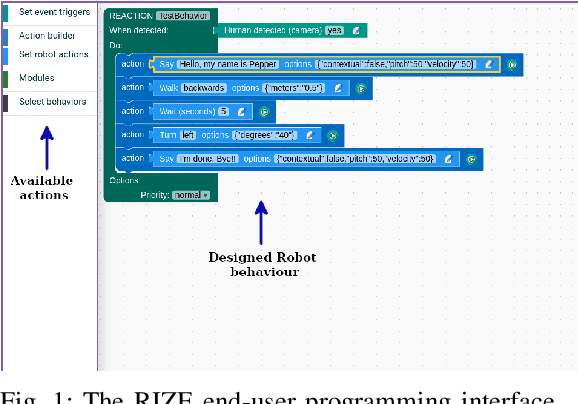

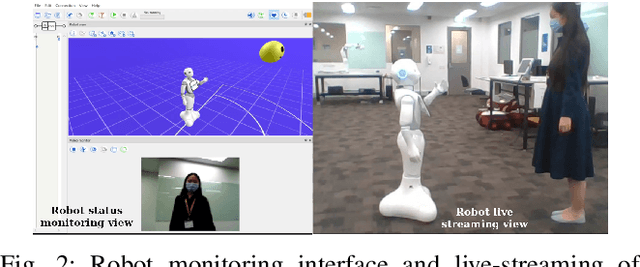

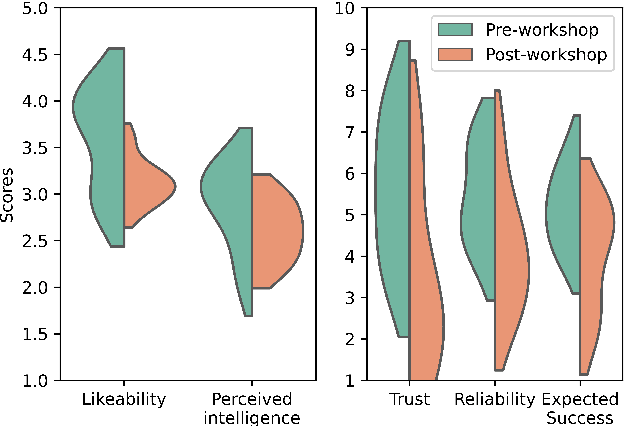

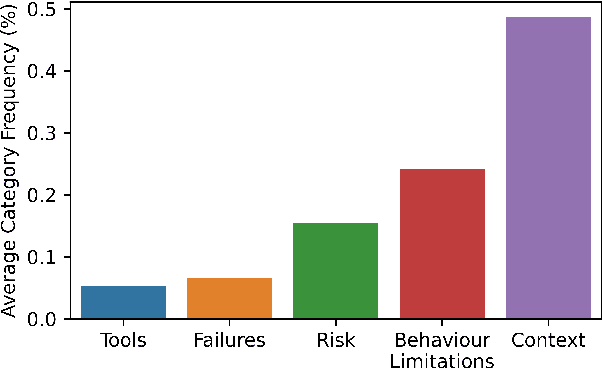

Abstract: Robots are increasingly being deployed in public spaces. However, the general population rarely has the opportunity to nominate what they would prefer or expect a robot to do in these contexts. Since most people have little or no experience interacting with a robot, it is not surprising that robots deployed in the real world may fail to gain acceptance or engage their intended users. To address this issue, we examine users' understanding of robots in public spaces and their expectations of appropriate uses of robots in these spaces. Furthermore, we investigate how these perceptions and expectations change as users engage and interact with a robot. To support this goal, we conducted a participatory design workshop in which participants were actively involved in the prototyping and testing of a robot's behaviours in simulation and on the physical robot. Our work highlights how social and interaction contexts influence users' perception of robots in public spaces and how users' design and understanding of appropriate robot behaviours shift as they observe the enactment of their designs.

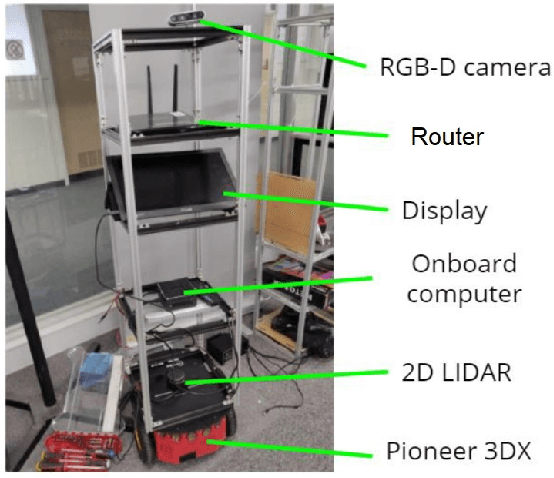

Autonomous Person-Specific Following Robot

Nov 11, 2020

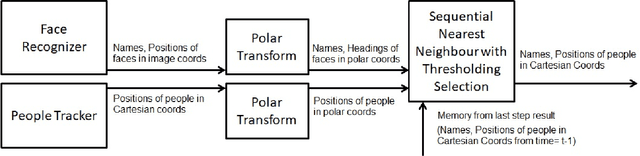

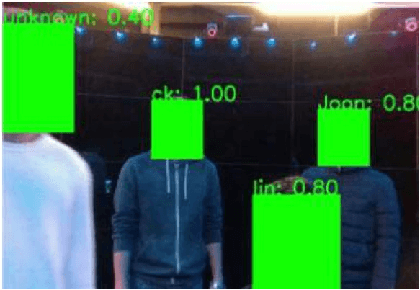

Abstract: Following a specific user is a desired or even required capability for service robots in many human-robot collaborative applications. However, most existing person-following robots follow people without knowledge of who they are following. In this paper, we propose an identity-specific person tracker, capable of tracking and identifying nearby people, to enable person-specific following. Our proposed method uses a Sequential Nearest Neighbour with Thresholding Selection algorithm we devised to fuse together an anonymous person tracker and a face recogniser. Experimental results comparing our proposed method with alternative approaches showed that our method achieves better performance in tracking and identifying people, as well as improved robot performance in following a target individual.

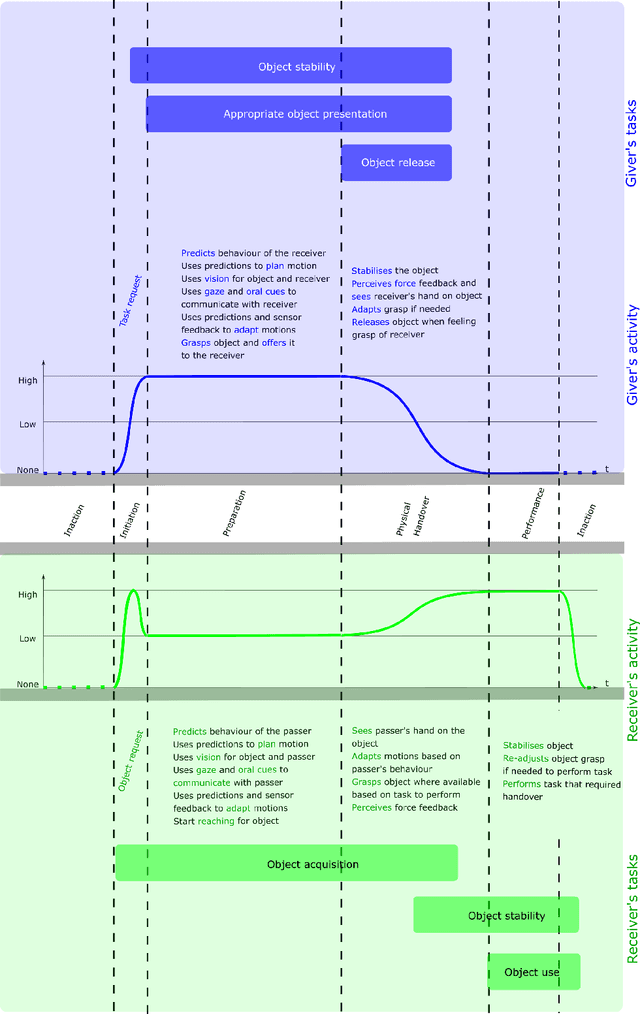

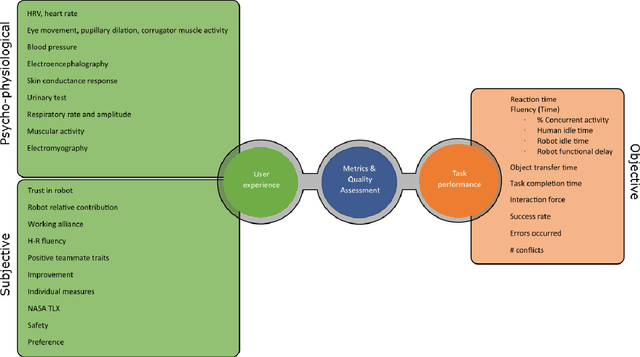

Object Handovers: a Review for Robotics

Jul 25, 2020

Abstract: This article surveys the literature on human-robot object handovers. A handover is a collaborative joint action where an agent, the giver, gives an object to another agent, the receiver. The physical exchange starts when the receiver first contacts the object held by the giver and ends when the giver fully releases the object to the receiver. However, important cognitive and physical processes begin before the physical exchange, including initiating implicit agreement with respect to the location and timing of the exchange. From this perspective, we structure our review into the two main phases delimited by the aforementioned events: 1) a pre-handover phase, and 2) the physical exchange. We focus our analysis on the two actors (giver and receiver) and report the state of the art of robotic givers (robot-to-human handovers) and robotic receivers (human-to-robot handovers). We report a comprehensive list of qualitative and quantitative metrics commonly used to assess the interaction. While focusing our review on the cognitive level (e.g., prediction, perception, motion planning, learning) and the physical level (e.g., motion, grasping, grip release) of the handover, we also briefly discuss the concepts of safety, social context, and ergonomics. We compare the behaviours displayed during human-to-human handovers to the state of the art of robotic assistants, and identify the major areas of improvement for robotic assistants to reach performance comparable to human interactions. Finally, we propose a minimal set of metrics that should be used in order to enable a fair comparison among the approaches.