Empowering parameter-efficient transfer learning by recognizing the kernel structure in self-attention

May 07, 2022 · Yifan Chen, Devamanyu Hazarika, Mahdi Namazifar, Yang Liu, Di Jin, Dilek Hakkani-Tur

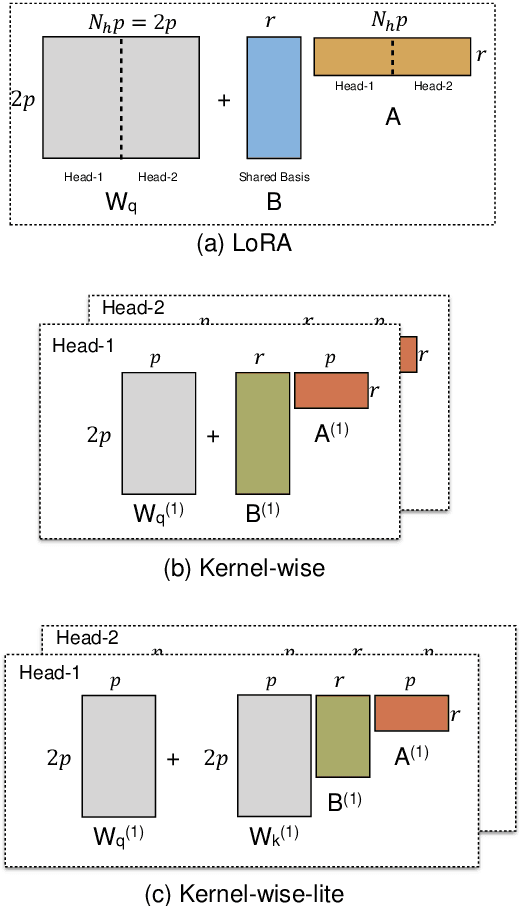

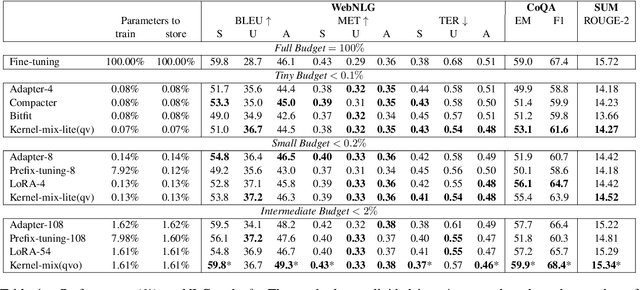

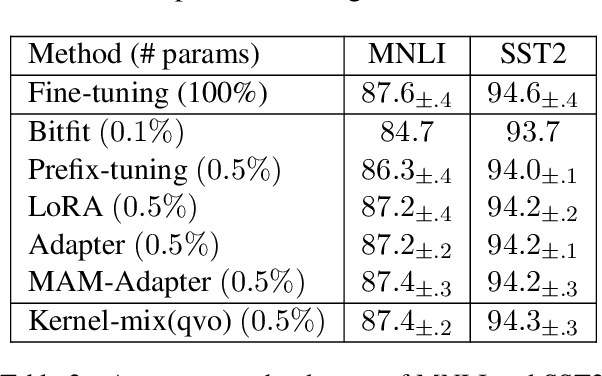

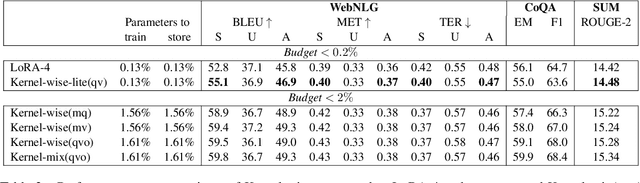

The massive number of trainable parameters in pre-trained language models (PLMs) makes them hard to deploy to multiple downstream tasks. To address this issue, parameter-efficient transfer learning methods have been proposed to tune only a few parameters during fine-tuning while freezing the rest. This paper looks at existing methods along this line through the *kernel lens*. Motivated by the connection between self-attention in transformer-based PLMs and kernel learning, we propose *kernel-wise adapters*, namely *Kernel-mix*, that utilize the kernel structure in self-attention to guide the assignment of the tunable parameters. These adapters use guidelines found in classical kernel learning and enable separate parameter tuning for each attention head. Our empirical results, over a diverse set of natural language generation and understanding tasks, show that our proposed adapters can attain or improve the strong performance of existing baselines.
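The connection between self-attention and kernel learning mentioned above can be illustrated with a small sketch. It is not the paper's Kernel-mix adapter; it only shows the commonly cited observation that softmax attention for one query equals a kernel smoother over the values when the kernel is an exponentiated scaled dot product. The function names and the toy vectors are assumptions made for this illustration.

```python
import math

def softmax_attention(q, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(q)
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
    m = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    weights = [w / total for w in weights]
    dim_v = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim_v)]

def exp_kernel(q, k):
    """Exponentiated scaled dot product, used here as the similarity kernel."""
    return math.exp(sum(a * b for a, b in zip(q, k)) / math.sqrt(len(q)))

def kernel_smoother(q, keys, values, kernel=exp_kernel):
    """Kernel-weighted average of the values (Nadaraya-Watson form)."""
    sims = [kernel(q, k) for k in keys]
    norm = sum(sims)
    dim_v = len(values[0])
    return [sum(s * v[i] for s, v in zip(sims, values)) / norm for i in range(dim_v)]

# Toy inputs (hypothetical): one query, three key/value pairs.
q = [0.5, -1.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]

out_attn = softmax_attention(q, keys, values)
out_kern = kernel_smoother(q, keys, values)
```

Both functions return the same vector up to floating-point error, which is the sense in which each attention head can be read as carrying its own kernel.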

Exemplars-guided Empathetic Response Generation Controlled by the Elements of Human Communication

Jun 22, 2021 · Navonil Majumder, Deepanway Ghosal, Devamanyu Hazarika, Alexander Gelbukh, Rada Mihalcea, Soujanya Poria

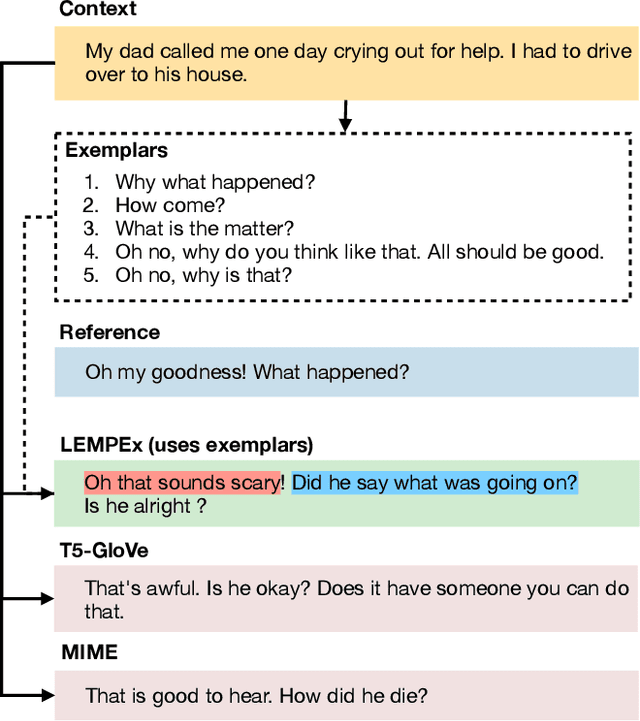

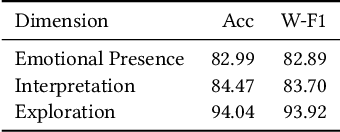

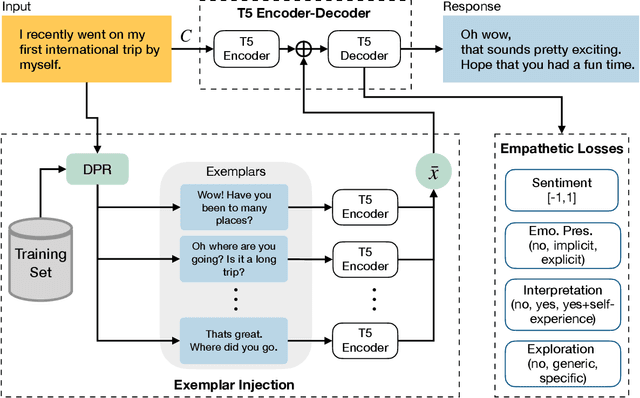

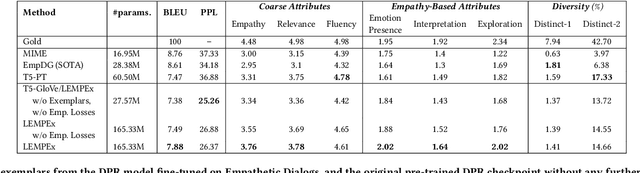

The majority of existing methods for empathetic response generation rely on the emotion of the context to generate empathetic responses. However, empathy is much more than generating responses with an appropriate emotion. It also often entails subtle expressions of understanding and personal resonance with the situation of the other interlocutor. Unfortunately, such qualities are difficult to quantify and the datasets lack the relevant annotations. To address this issue, in this paper we propose an approach that relies on exemplars to cue the generative model on fine stylistic properties that signal empathy to the interlocutor. To this end, we employ dense passage retrieval to extract relevant exemplary responses from the training set. Three elements of human communication (emotional presence, interpretation, and exploration) and sentiment are additionally introduced using synthetic labels to guide the generation towards empathy. The human evaluation is also extended by these elements of human communication. We empirically show that these approaches yield significant improvements in empathetic response quality in terms of both automated and human-evaluated metrics. The implementation is available at https://github.com/declare-lab/exemplary-empathy.

Zero-Shot Controlled Generation with Encoder-Decoder Transformers

Jun 15, 2021 · Devamanyu Hazarika, Mahdi Namazifar, Dilek Hakkani-Tür

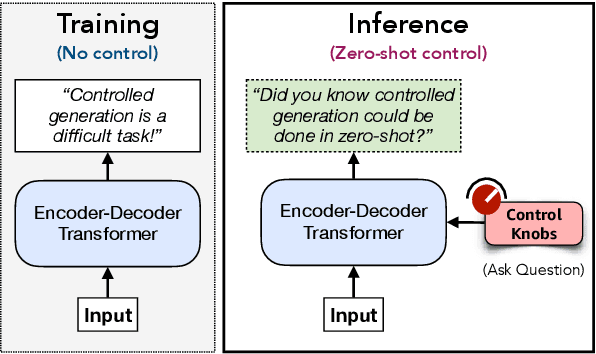

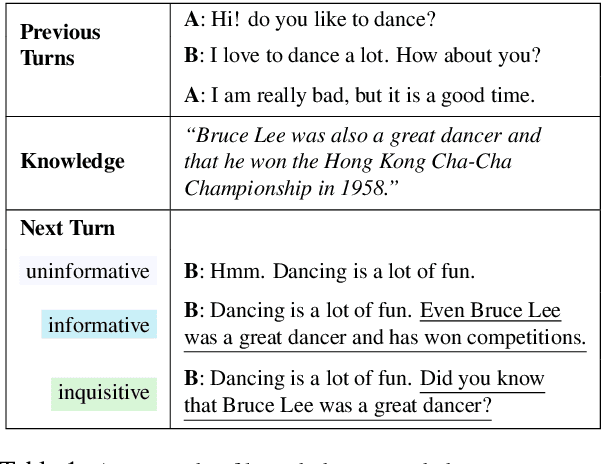

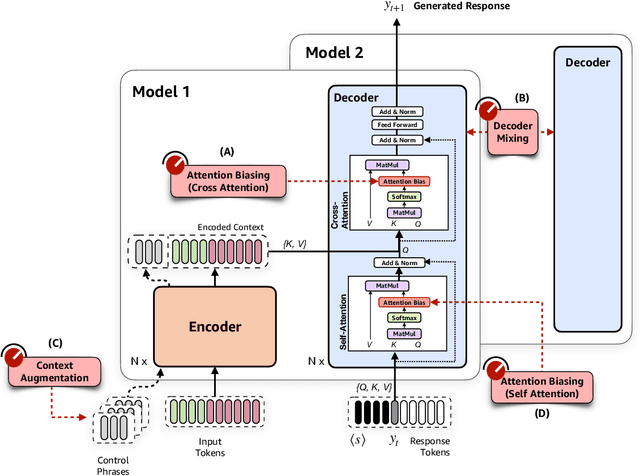

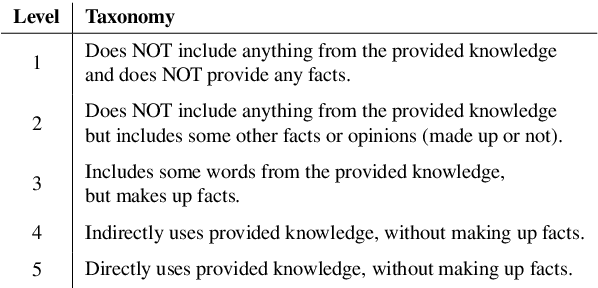

Controlling neural network-based models for natural language generation (NLG) has broad applications in numerous areas such as machine translation, document summarization, and dialog systems. Approaches that enable such control in a zero-shot manner would be of great importance as, among other reasons, they remove the need for additional annotated data and training. In this work, we propose novel approaches for controlling encoder-decoder transformer-based NLG models in a zero-shot manner. This is done by introducing three control knobs, namely attention biasing, decoder mixing, and context augmentation, that are applied to these models at generation time. These knobs control the generation process by directly manipulating trained NLG models (e.g., biasing cross-attention layers) to realize the desired attributes in the generated outputs. We show that not only are these NLG models robust to such manipulations, but their behavior can also be controlled without an impact on their generation performance. These results, to the best of our knowledge, are the first of their kind. Through these control knobs, we also investigate the role of the transformer decoder's self-attention module and show strong evidence that its primary role is maintaining the fluency of sentences generated by these models. Based on this hypothesis, we show that alternative architectures for transformer decoders could be viable options. We also study how this hypothesis could lead to more efficient ways of training encoder-decoder transformer models.

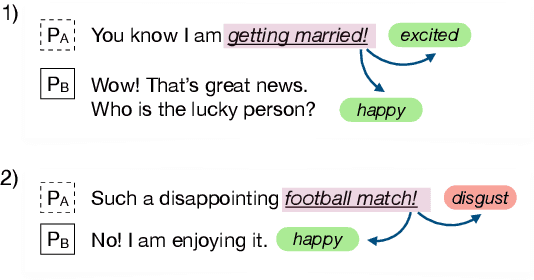

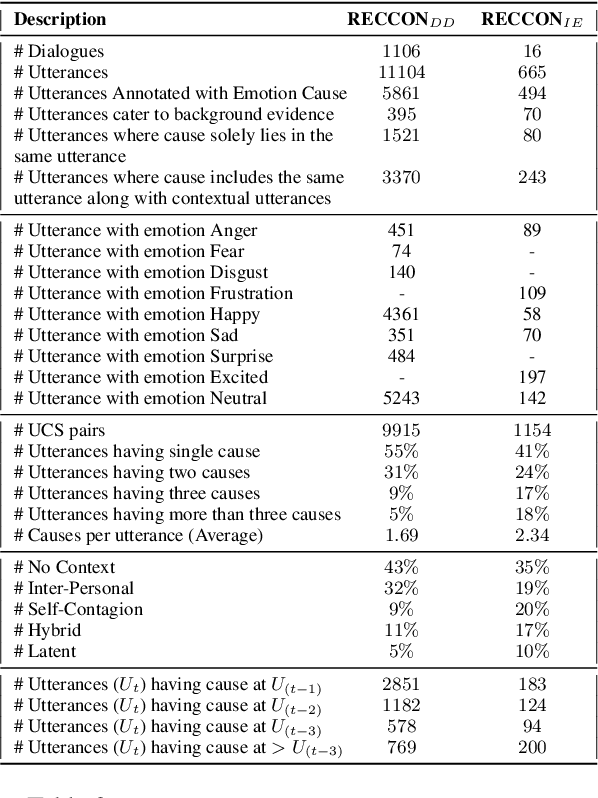

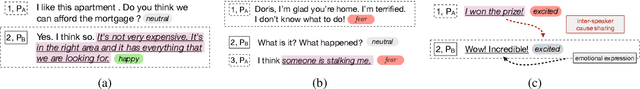

Recognizing Emotion Cause in Conversations

Dec 24, 2020 · Soujanya Poria, Navonil Majumder, Devamanyu Hazarika, Deepanway Ghosal, Rishabh Bhardwaj, Samson Yu Bai Jian, Romila Ghosh, Niyati Chhaya, Alexander Gelbukh, Rada Mihalcea

Recognizing the cause behind emotions in text is a fundamental yet under-explored area of research in NLP. Advances in this area hold the potential to improve interpretability and performance in affect-based models. Identifying emotion causes at the utterance level in conversations is particularly challenging due to the intermingling dynamic among the interlocutors. To this end, we introduce the task of recognizing emotion cause in conversations with an accompanying dataset named RECCON. Furthermore, we define different cause types based on the source of the causes and establish strong transformer-based baselines to address two different sub-tasks of RECCON: 1) Causal Span Extraction and 2) Causal Emotion Entailment. The dataset is available at https://github.com/declare-lab/RECCON.

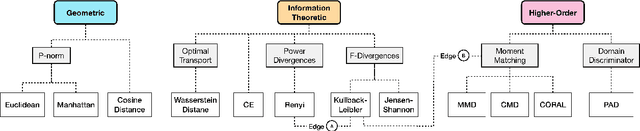

Domain Divergences: a Survey and Empirical Analysis

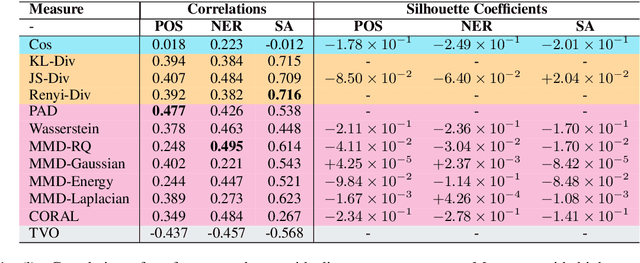

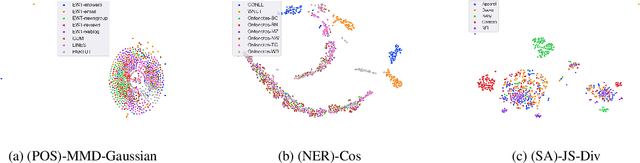

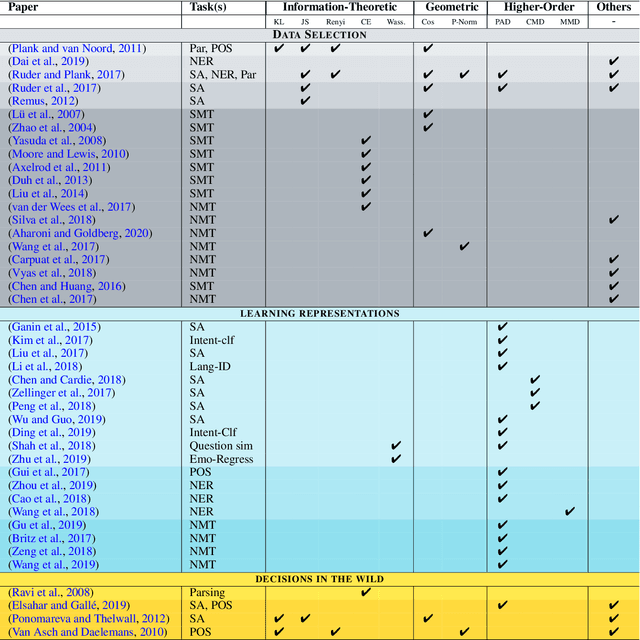

Oct 23, 2020 · Abhinav Ramesh Kashyap, Devamanyu Hazarika, Min-Yen Kan, Roger Zimmermann

Domain divergence plays a significant role in estimating the performance of a model when applied to new domains. While there is significant literature on divergence measures, choosing an appropriate divergence measure remains difficult for researchers. We address this shortcoming by both surveying the literature and conducting an empirical study. We contribute a taxonomy of divergence measures consisting of three groups -- Information-theoretic, Geometric, and Higher-order measures -- and identify the relationships between them. We then ground the use of divergence measures in three different application groups -- 1) Data Selection, 2) Learning Representation, and 3) Decisions in the Wild. From this, we identify that Information-theoretic measures are prevalent for 1) and 3), and Higher-order measures are common for 2). To further help researchers, we validate these uses empirically through a correlation analysis of performance drops. For this analysis, we contrast current contextual word representations (CWR) with the older word-distribution-based representations. We find that traditional measures over word distributions still serve as strong baselines, while Higher-order measures with CWR are effective.
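As a rough illustration of an information-theoretic measure over word distributions, the sketch below computes the Jensen-Shannon divergence between the unigram distributions of two small token lists. The abstract does not name this specific measure, so its choice here is an assumption, and the two example "domains" are made up for the illustration.

```python
import math
from collections import Counter

def word_distribution(tokens, vocab):
    """Relative frequency of each vocabulary word in a token list."""
    counts = Counter(tokens)
    total = sum(counts[w] for w in vocab)
    return [counts[w] / total for w in vocab]

def kl(p, q):
    """Kullback-Leibler divergence in bits; terms with p_i = 0 contribute 0."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def js_divergence(p, q):
    """Jensen-Shannon divergence: symmetric, bounded in [0, 1] with log base 2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

# Hypothetical toy corpora standing in for a source and a target domain.
source = "the movie was great and the acting was great".split()
target = "the battery life was poor and the screen was dim".split()

vocab = sorted(set(source) | set(target))
p = word_distribution(source, vocab)
q = word_distribution(target, vocab)
divergence = js_divergence(p, q)
```

A value near 0 means the two domains use words with similar frequencies, while a value near 1 means their vocabularies barely overlap. In practice the distributions would be estimated from much larger corpora.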

Emerging Trends of Multimodal Research in Vision and Language

Oct 19, 2020 · Shagun Uppal, Sarthak Bhagat, Devamanyu Hazarika, Navonil Majumdar, Soujanya Poria, Roger Zimmermann, Amir Zadeh

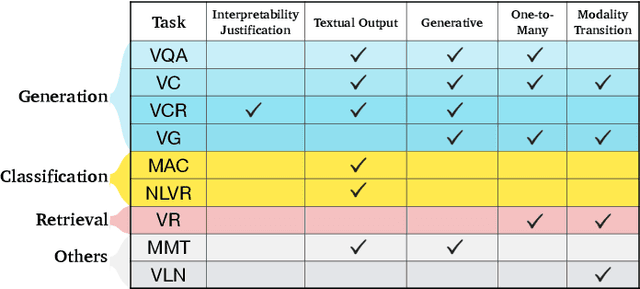

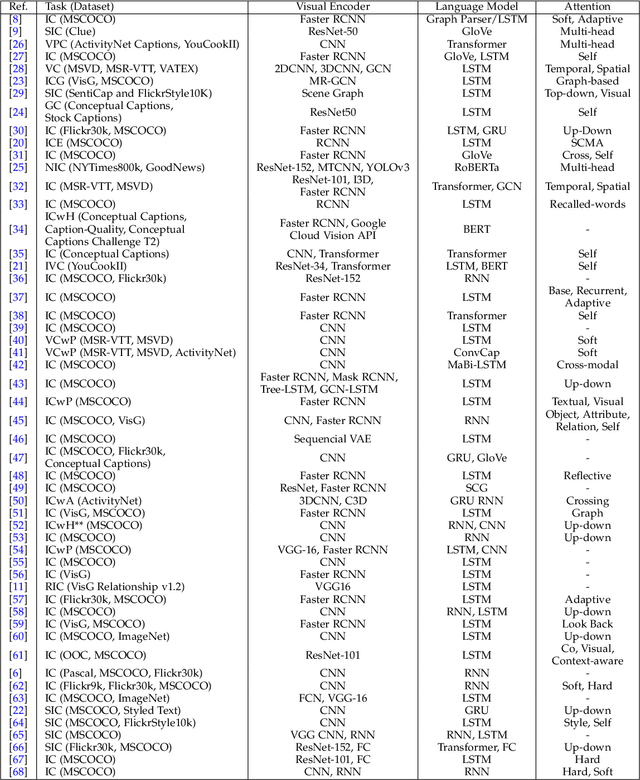

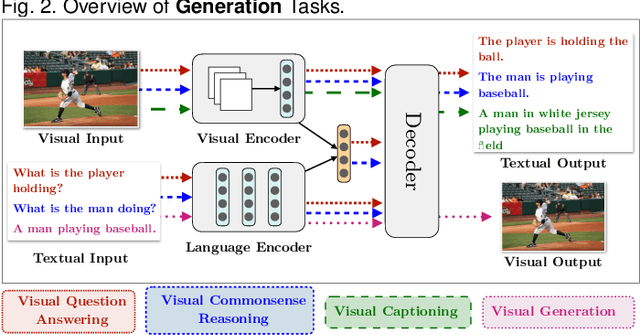

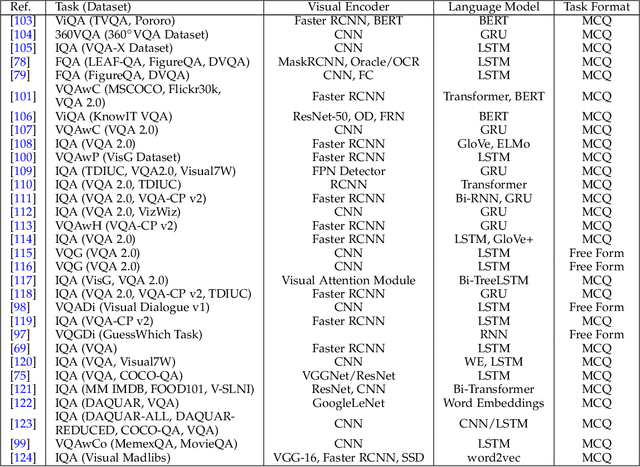

Deep learning and its applications have driven impactful research and development across the diverse range of modalities present in real-world data. More recently, this has spurred research interest in the intersection of vision and language, with its numerous applications and fast-paced growth. In this paper, we present a detailed overview of the latest trends in research pertaining to visual and language modalities. We look at its applications in terms of their task formulations and how they address various problems related to semantic perception and content generation. We also address task-specific trends, along with their evaluation strategies and upcoming challenges. Moreover, we shed some light on multi-disciplinary patterns and insights that have emerged in the recent past, directing this field towards more modular and transparent intelligent systems. This survey identifies key trends shaping recent literature in VisLang research and attempts to unearth the directions in which the field is heading.

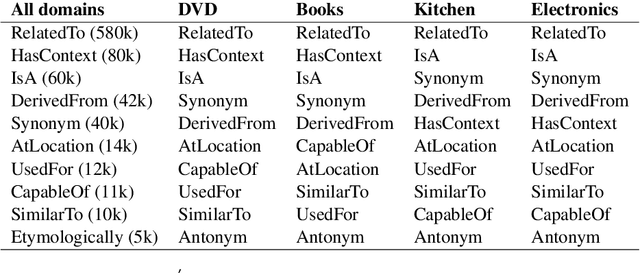

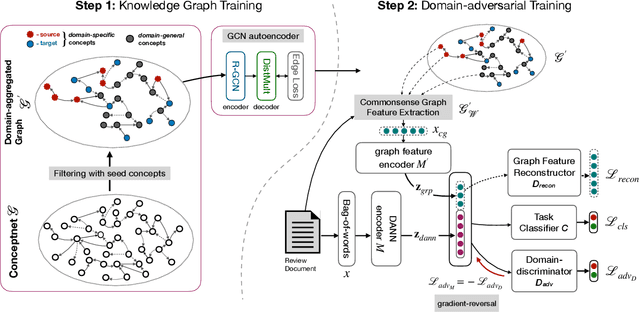

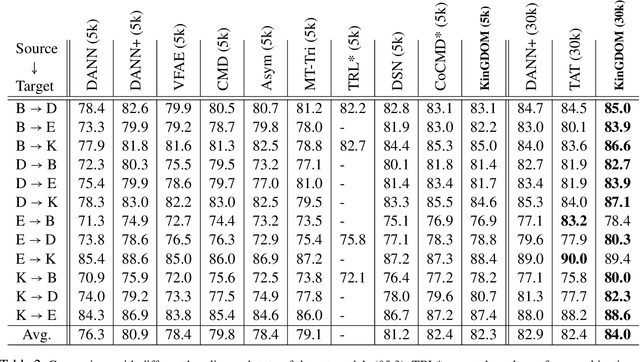

KinGDOM: Knowledge-Guided DOMain adaptation for sentiment analysis

May 11, 2020 · Deepanway Ghosal, Devamanyu Hazarika, Abhinaba Roy, Navonil Majumder, Rada Mihalcea, Soujanya Poria

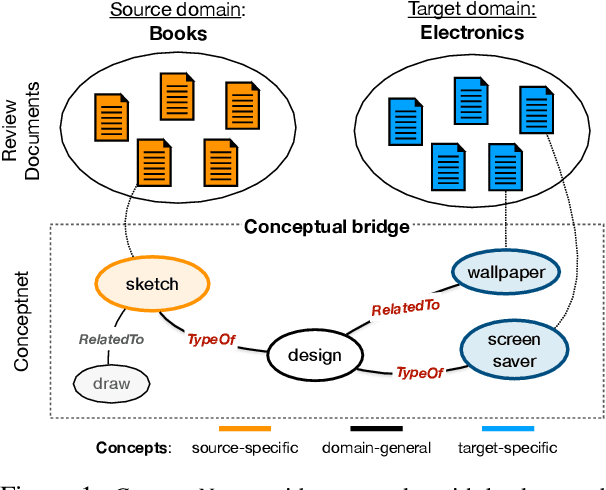

Cross-domain sentiment analysis has received significant attention in recent years, prompted by the need to combat the domain gap between different applications that make use of sentiment analysis. In this paper, we take a novel perspective on this task by exploring the role of external commonsense knowledge. We introduce a new framework, KinGDOM, which utilizes the ConceptNet knowledge graph to enrich the semantics of a document by providing both domain-specific and domain-general background concepts. These concepts are learned by training a graph convolutional autoencoder that leverages inter-domain concepts in a domain-invariant manner. Conditioning a popular domain-adversarial baseline method with these learned concepts helps improve its performance over state-of-the-art approaches, demonstrating the efficacy of our proposed framework.

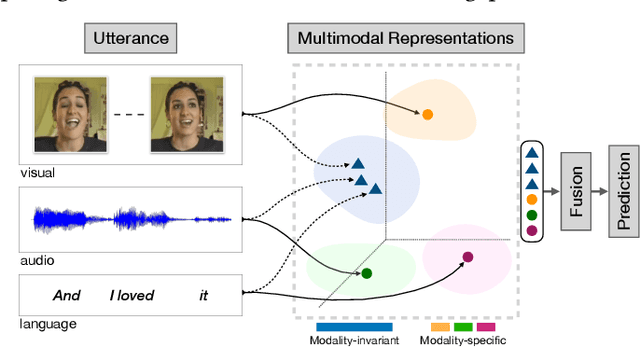

MISA: Modality-Invariant and -Specific Representations for Multimodal Sentiment Analysis

May 08, 2020 · Devamanyu Hazarika, Roger Zimmermann, Soujanya Poria

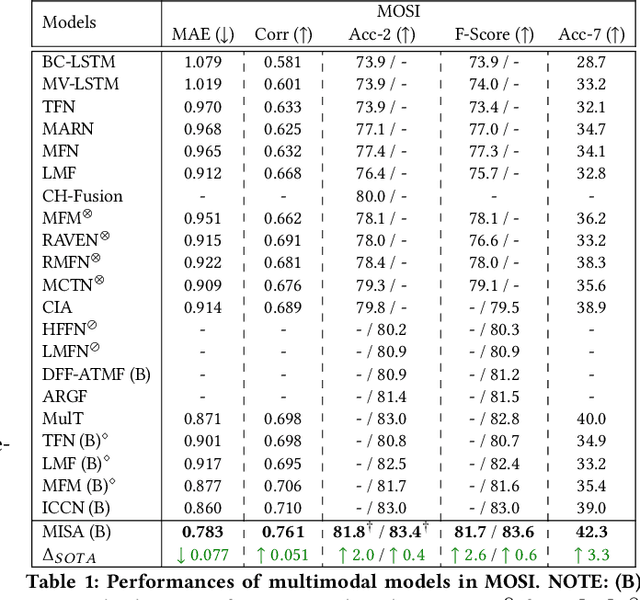

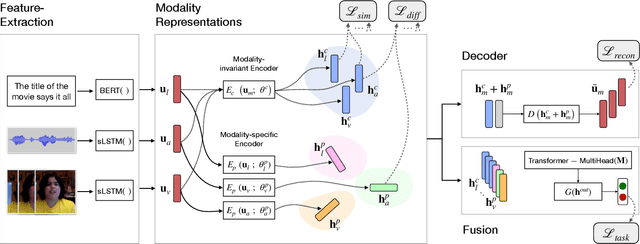

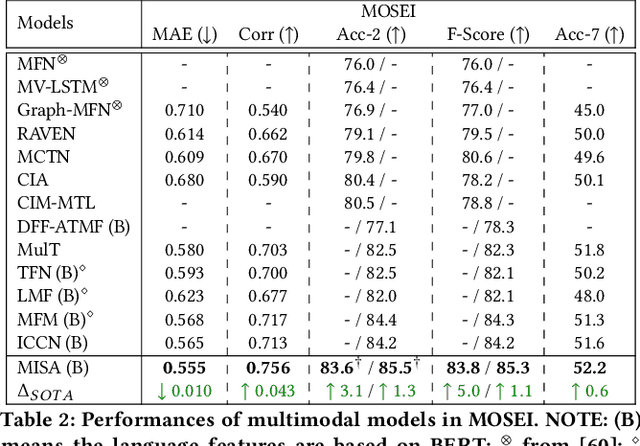

Multimodal Sentiment Analysis is an active area of research that leverages multimodal signals for affective understanding of user-generated videos. The predominant approach to this task has been to develop sophisticated fusion techniques. However, the heterogeneous nature of the signals creates distributional modality gaps that pose significant challenges. In this paper, we aim to learn effective modality representations to aid the process of fusion. We propose a novel framework, MISA, which projects each modality to two distinct subspaces. The first subspace is modality-invariant, where the representations across modalities learn their commonalities and reduce the modality gap. The second subspace is modality-specific, which is private to each modality and captures its characteristic features. These representations provide a holistic view of the multimodal data, which is used for fusion that leads to task predictions. Our experiments on popular sentiment analysis benchmarks, MOSI and MOSEI, demonstrate significant gains over state-of-the-art models. We also consider the task of Multimodal Humor Detection and experiment on the recently proposed UR_FUNNY dataset. Here too, our model fares better than strong baselines, establishing MISA as a useful multimodal framework.
