Daixuan Li

A Learning-based Approach Towards Automated Tuning of SSD Configurations

Oct 17, 2021

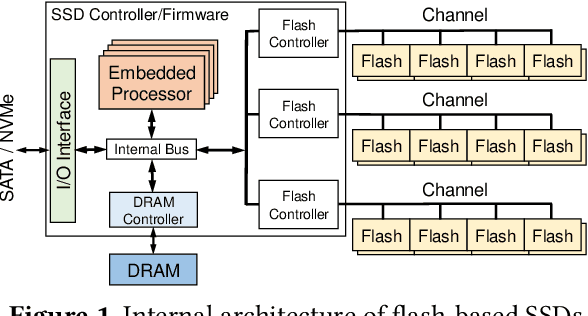

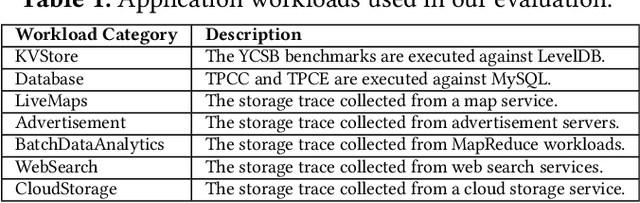

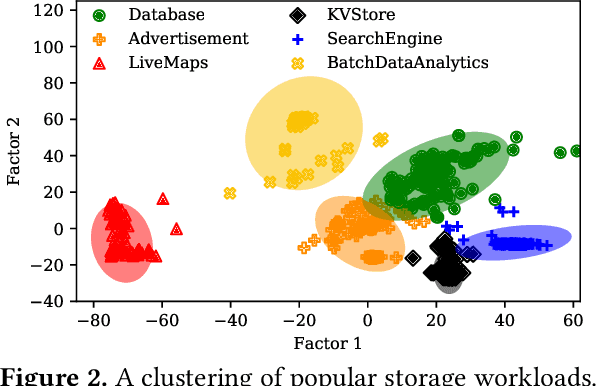

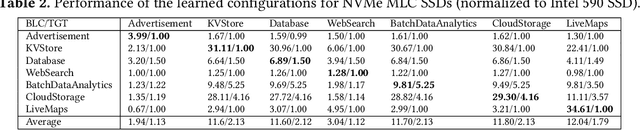

Abstract: Thanks to mature manufacturing techniques, solid-state drives (SSDs) are highly customizable for applications today, which brings opportunities to further improve their storage performance and resource utilization. However, SSD efficiency is usually determined by many hardware parameters, making it hard for developers to manually tune them and determine the optimal SSD configurations. In this paper, we present an automated learning-based framework, named LearnedSSD, that utilizes both supervised and unsupervised machine learning (ML) techniques to drive the tuning of hardware configurations for SSDs. LearnedSSD automatically extracts the unique access patterns of a new workload using its block I/O traces, maps the workload to previously seen workloads to reuse the learned experience, and recommends an optimal SSD configuration based on the validated storage performance. LearnedSSD accelerates the development of new SSD devices by automating hardware parameter configuration and reducing manual effort. We develop LearnedSSD with simple yet effective learning algorithms that can run efficiently on multi-core CPUs. Given a target storage workload, our evaluation shows that LearnedSSD can always deliver an optimal SSD configuration for the target workload, and this configuration will not hurt the performance of non-target workloads.
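The abstract does not spell out how a new workload is matched to earlier ones, so the following is only a minimal sketch of the general idea: summarize a block I/O trace as a few features, then pick the closest previously seen workload. The trace layout, the three features, the Euclidean distance, and the names `trace_features` and `nearest_workload` are all assumptions made for illustration, not LearnedSSD's actual method.

```python
from math import sqrt


def trace_features(trace):
    """Summarize a block I/O trace as (read_ratio, mean_size_kb, seq_ratio).

    `trace` is a list of (offset_bytes, size_bytes, is_write) tuples.
    This layout and these features are hypothetical, chosen for illustration.
    """
    n = len(trace)
    read_ratio = sum(1 for _, _, is_write in trace if not is_write) / n
    mean_size_kb = sum(size for _, size, _ in trace) / n / 1024
    # A request counts as sequential if it starts where the previous one ended.
    sequential = sum(
        1
        for (off1, size1, _), (off2, _, _) in zip(trace, trace[1:])
        if off2 == off1 + size1
    )
    seq_ratio = sequential / (n - 1) if n > 1 else 0.0
    return (read_ratio, mean_size_kb, seq_ratio)


def nearest_workload(features, known):
    """Return the name of the known workload closest to `features`.

    `known` maps a workload name to its feature tuple. Plain Euclidean
    distance is used here; a real system would likely normalize features first.
    """
    def dist(a, b):
        return sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

    return min(known, key=lambda name: dist(features, known[name]))


# Hypothetical feature vectors for previously seen workloads.
known_workloads = {
    "database": (0.7, 8.0, 0.1),
    "streaming": (0.95, 128.0, 0.9),
}

# A tiny made-up trace: two back-to-back 4 KiB reads, then a distant write.
new_trace = [(0, 4096, False), (4096, 4096, False), (1_000_000, 4096, True)]
match = nearest_workload(trace_features(new_trace), known_workloads)
```

In a pipeline like the one the abstract describes, the matched name would then be used to look up a configuration already validated for that workload as a starting recommendation.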

CPM: A Large-scale Generative Chinese Pre-trained Language Model

Dec 01, 2020

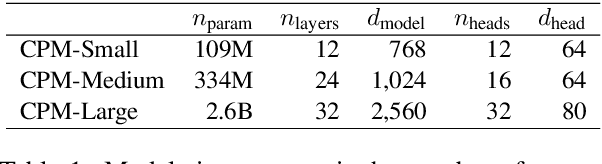

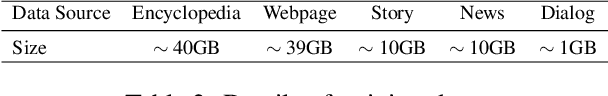

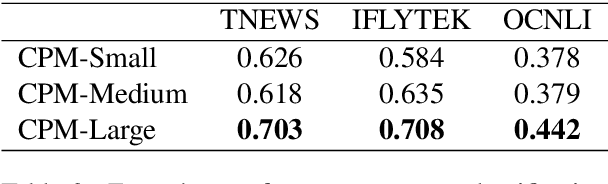

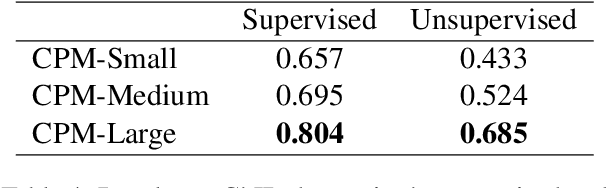

Abstract: Pre-trained Language Models (PLMs) have proven to be beneficial for various downstream NLP tasks. Recently, GPT-3, with 175 billion parameters and 570GB of training data, drew a lot of attention due to its capacity for few-shot (even zero-shot) learning. However, applying GPT-3 to Chinese NLP tasks is still challenging, as the training corpus of GPT-3 is primarily English, and the parameters are not publicly available. In this technical report, we release the Chinese Pre-trained Language Model (CPM) with generative pre-training on large-scale Chinese training data. To the best of our knowledge, CPM, with 2.6 billion parameters and 100GB of Chinese training data, is the largest Chinese pre-trained language model, which could facilitate several downstream Chinese NLP tasks, such as conversation, essay generation, cloze test, and language understanding. Extensive experiments demonstrate that CPM achieves strong performance on many NLP tasks in few-shot (even zero-shot) settings. The code and parameters are available at https://github.com/TsinghuaAI/CPM-Generate.
