Baoxiang Pan

Efficient Generative AI Boosts Probabilistic Forecasting of Sudden Stratospheric Warmings

Oct 30, 2025

Abstract:Sudden Stratospheric Warmings (SSWs) are key sources of subseasonal predictability and major drivers of extreme winter weather. Yet, their accurate and efficient forecast remains a persistent challenge for numerical weather prediction (NWP) systems due to limitations in physical representation, initialization, and the immense computational demands of ensemble forecasts. While data-driven forecasting is rapidly evolving, its application to the complex, three-dimensional dynamics of SSWs, particularly for probabilistic forecasting, remains underexplored. Here, we bridge this gap by developing a Flow Matching-based generative AI model (FM-Cast) for efficient and skillful probabilistic forecasting of the spatiotemporal evolution of stratospheric circulation. Evaluated across 18 major SSW events (1998-2024), FM-Cast skillfully forecasts the onset, intensity, and morphology of 10 events up to 20 days in advance, achieving ensemble accuracies above 50%. Its performance is comparable to or exceeds leading NWP systems while requiring only two minutes for a 50-member, 30-day forecast on a consumer GPU. Furthermore, leveraging FM-Cast as a scientific tool, we demonstrate through idealized experiments that SSW predictability is fundamentally linked to its underlying physical drivers, distinguishing between events forced from the troposphere and those driven by internal stratospheric dynamics. Our work thus establishes a computationally efficient paradigm for probabilistic forecasting of stratospheric anomalies and showcases generative AI's potential to deepen the physical understanding of atmosphere-climate dynamics.

Supporting renewable energy planning and operation with data-driven high-resolution ensemble weather forecast

May 07, 2025

Abstract:The planning and operation of renewable energy, especially wind power, depend crucially on accurate, timely, and high-resolution weather information. Coarse-grid global numerical weather forecasts are typically downscaled to meet these requirements, introducing challenges of scale inconsistency, process representation error, computation cost, and entanglement of distinct uncertainty sources from chaoticity, model bias, and large-scale forcing. We address these challenges by learning the climatological distribution of a target wind farm using its high-resolution numerical weather simulations. An optimal combination of this learned high-resolution climatological prior with coarse-grid large-scale forecasts yields a highly accurate, fine-grained, full-variable, large ensemble of weather pattern forecasts. Using observed meteorological records and wind turbine power outputs as references, the proposed methodology compares favorably with existing numerical/statistical forecasting-downscaling pipelines in terms of both deterministic/probabilistic skill and economic gains. Moreover, a 100-member, 10-day forecast with a spatial resolution of 1 km and an output frequency of 15 min takes < 1 hour on a mid-range GPU, in contrast to $\mathcal{O}(10^3)$ CPU hours for conventional numerical simulation. By drastically reducing computational costs while maintaining accuracy, our method paves the way for more efficient and reliable renewable energy planning and operation.

Generative assimilation and prediction for weather and climate

Mar 04, 2025

Abstract:Machine learning models have shown great success in predicting weather up to two weeks ahead, outperforming process-based benchmarks. However, existing approaches mostly focus on the prediction task, and do not incorporate the necessary data assimilation. Moreover, these models suffer from error accumulation in long roll-outs, limiting their applicability to seasonal predictions or climate projections. Here, we introduce Generative Assimilation and Prediction (GAP), a unified deep generative framework for assimilation and prediction of both weather and climate. By learning to quantify the probabilistic distribution of atmospheric states under observational, predictive, and external forcing constraints, GAP excels in a broad range of weather-climate related tasks, including data assimilation, seamless prediction, and climate simulation. In particular, GAP is competitive with state-of-the-art ensemble assimilation, probabilistic weather forecast and seasonal prediction, yields stable millennial simulations, and reproduces climate variability from daily to decadal time scales.

HiHa: Introducing Hierarchical Harmonic Decomposition to Implicit Neural Compression for Atmospheric Data

Nov 09, 2024

Abstract:The rapid development of large climate models has created the need to store and transfer massive atmospheric data worldwide. Data compression is therefore essential for meteorological research, but an efficient compression scheme that maintains high accuracy at high compression ratios is still lacking. As an emerging technique, Implicit Neural Representation (INR) has recently gained impressive momentum and shows high promise for compressing diverse natural data. However, INR-based compression encounters a bottleneck due to the sophisticated spatio-temporal properties and variability of atmospheric data. To address this issue, we propose Hierarchical Harmonic decomposition implicit neural compression (HiHa) for atmospheric data. HiHa first segments the data into multi-frequency signals by decomposing it into multiple complex harmonics, and then handles each harmonic separately with a frequency-based hierarchical compression module consisting of sparse storage, multi-scale INR, and iterative decomposition sub-modules. We additionally design a temporal residual compression module to accelerate compression by exploiting temporal continuity. Experiments show that HiHa outperforms both mainstream compressors and other INR-based methods in both compression fidelity and capabilities, and also demonstrate that using compressed data in existing data-driven models can achieve the same accuracy as raw data.

Fast, Scale-Adaptive, and Uncertainty-Aware Downscaling of Earth System Model Fields with Generative Foundation Models

Mar 05, 2024

Abstract:Accurate and high-resolution Earth system model (ESM) simulations are essential to assess the ecological and socio-economic impacts of anthropogenic climate change, but are computationally too expensive. Recent machine learning approaches have shown promising results in downscaling ESM simulations, outperforming state-of-the-art statistical approaches. However, existing methods require computationally costly retraining for each ESM and extrapolate poorly to climates unseen during training. We address these shortcomings by learning a consistency model (CM) that efficiently and accurately downscales arbitrary ESM simulations without retraining in a zero-shot manner. Our foundation model approach yields probabilistic downscaled fields at resolution only limited by the observational reference data. We show that the CM outperforms state-of-the-art diffusion models at a fraction of computational cost while maintaining high controllability on the downscaling task. Further, our method generalizes to climate states unseen during training without explicitly formulated physical constraints.

Reconstructing Three-decade Global Fine-Grained Nighttime Light Observations by a New Super-Resolution Framework

Jul 14, 2023

Abstract:Satellite-collected nighttime light provides a unique perspective on human activities, including urbanization, population growth, and epidemics. Yet, long-term and fine-grained nighttime light observations are lacking, leaving the analysis and applications of decades of light changes in urban facilities undeveloped. To fill this gap, we developed an innovative framework and used it to design a new super-resolution model that reconstructs low-resolution nighttime light data into high resolution. The validation of one billion data points shows that the correlation coefficient of our model at the global scale reaches 0.873, which is significantly higher than that of other existing models (maximum = 0.713). Our model also outperforms existing models at the national and urban scales. Furthermore, through an inspection of airports and roads, only our model's image details can reveal the historical development of these facilities. We provide the long-term and fine-grained nighttime light observations to promote research on human activities. The dataset is available at https://doi.org/10.5281/zenodo.7859205.

Improving seasonal forecast using probabilistic deep learning

Oct 27, 2020

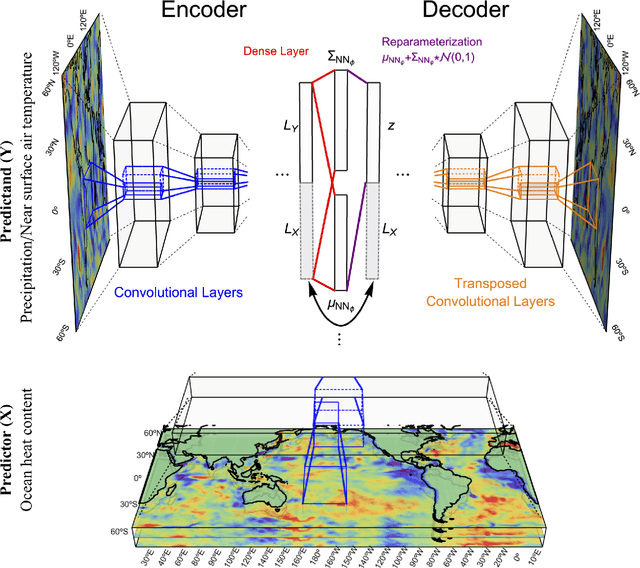

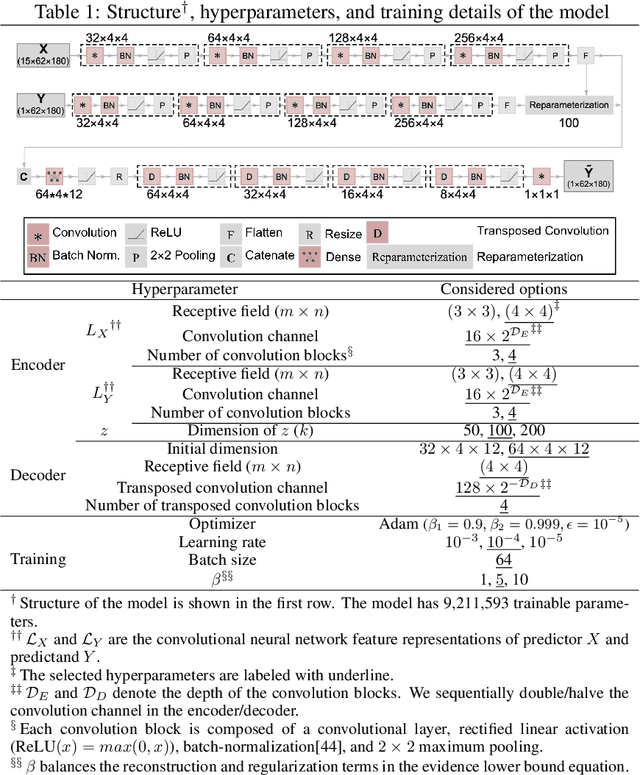

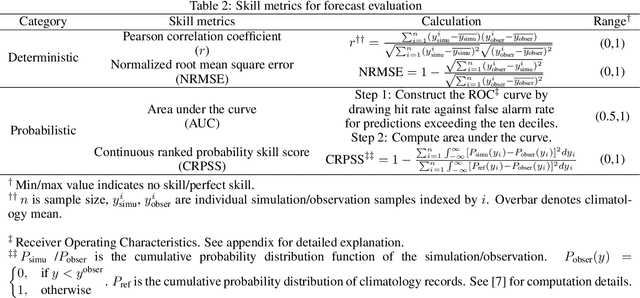

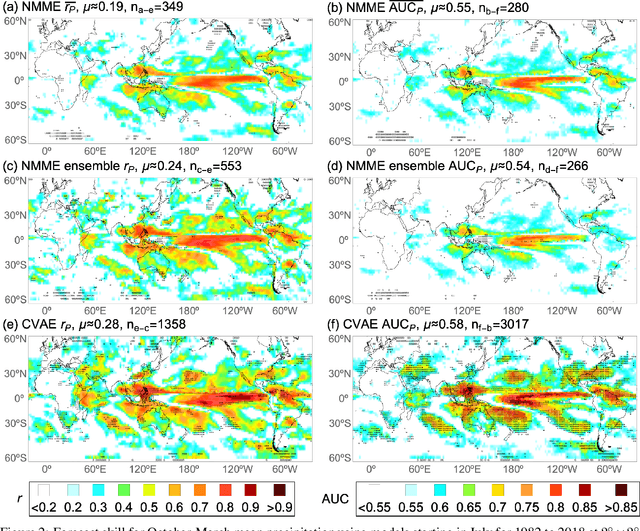

Abstract:The path toward realizing the potential of seasonal forecasting and its socioeconomic benefits depends heavily on improving general circulation model based dynamical forecasting systems. To improve dynamical seasonal forecasts, it is crucial to set up forecast benchmarks and to clarify the forecast limitations posed by model initialization errors, formulation deficiencies, and internal climate variability. Given the huge cost of generating large forecast ensembles and the limited observations available for forecast verification, the seasonal forecast benchmarking and diagnosing task proves challenging. In this study, we develop a probabilistic deep neural network model, drawing on a wealth of existing climate simulations to enhance seasonal forecast capability and forecast diagnosis. By leveraging complex physical relationships encoded in climate simulations, our probabilistic forecast model demonstrates favorable deterministic and probabilistic skill compared to state-of-the-art dynamical forecast systems in quasi-global seasonal forecast of precipitation and near-surface temperature. We apply this probabilistic forecast methodology to quantify the impacts of initialization errors and model formulation deficiencies in a dynamical seasonal forecasting system. We introduce the saliency analysis approach to efficiently identify the key predictors that influence seasonal variability. Furthermore, by explicitly modeling uncertainty using variational Bayes, we give a more definitive answer to how the El Niño/Southern Oscillation, the dominant mode of seasonal variability, modulates global seasonal predictability.
