Babak Saboury

University of Maryland, Baltimore County, National Institutes of Health Clinical Center

Nuclear Medicine Artificial Intelligence in Action: The Bethesda Report (AI Summit 2024)

Jun 03, 2024

Abstract: The 2nd SNMMI Artificial Intelligence (AI) Summit, organized by the SNMMI AI Task Force, took place in Bethesda, MD, on February 29 - March 1, 2024. Bringing together various community members and stakeholders, and following up on a prior successful 2022 AI Summit, the summit theme was: AI in Action. Six key topics included (i) an overview of prior and ongoing efforts by the AI Task Force, (ii) emerging needs and tools for computational nuclear oncology, (iii) new frontiers in large language and generative models, (iv) defining the value proposition for the use of AI in nuclear medicine, (v) open science including efforts for data and model repositories, and (vi) issues of reimbursement and funding. The primary efforts, findings, challenges, and next steps are summarized in this manuscript.

Issues and Challenges in Applications of Artificial Intelligence to Nuclear Medicine -- The Bethesda Report (AI Summit 2022)

Nov 07, 2022

Abstract: The SNMMI Artificial Intelligence (SNMMI-AI) Summit, organized by the SNMMI AI Task Force, took place in Bethesda, MD on March 21-22, 2022. It brought together various community members and stakeholders from academia, healthcare, industry, patient representatives, and government (NIH, FDA), and considered various key themes to envision and facilitate a bright future for routine, trustworthy use of AI in nuclear medicine. In what follows, essential issues, challenges, controversies and findings emphasized in the meeting are summarized.

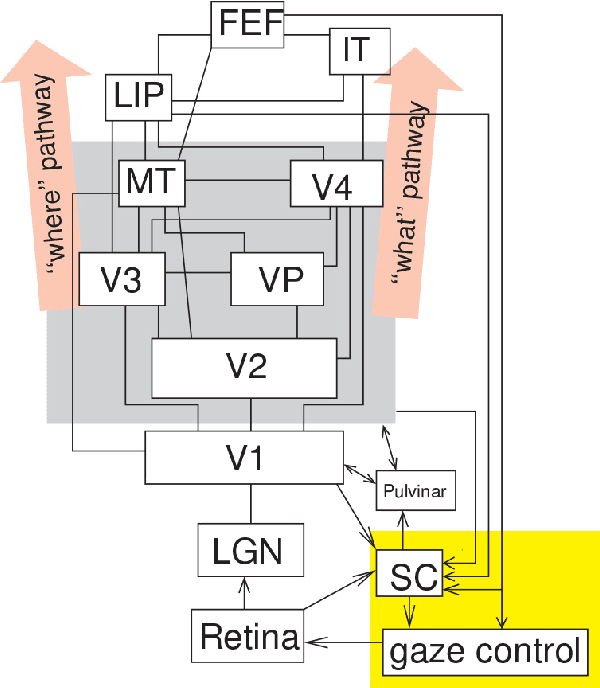

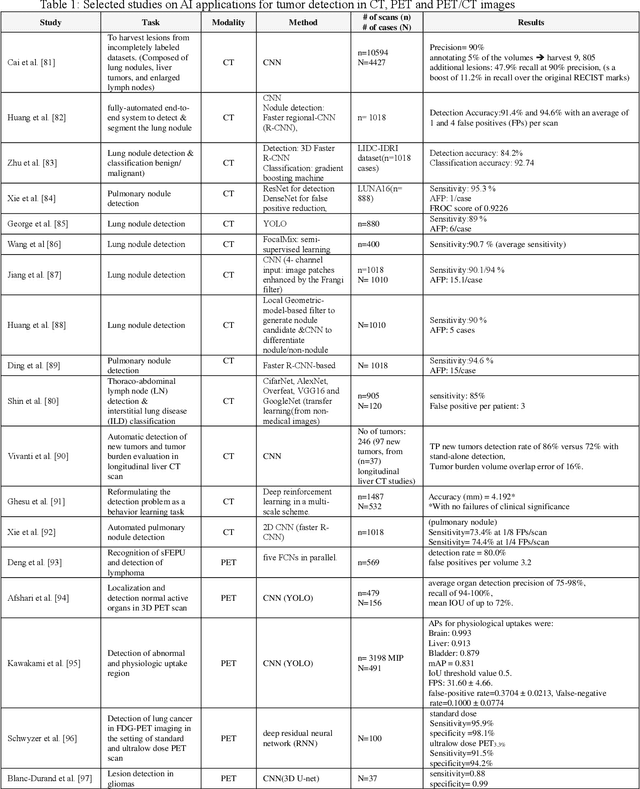

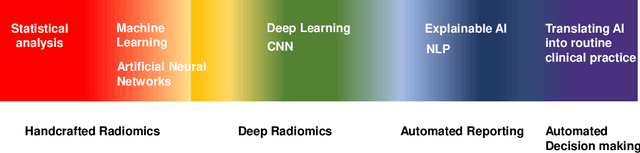

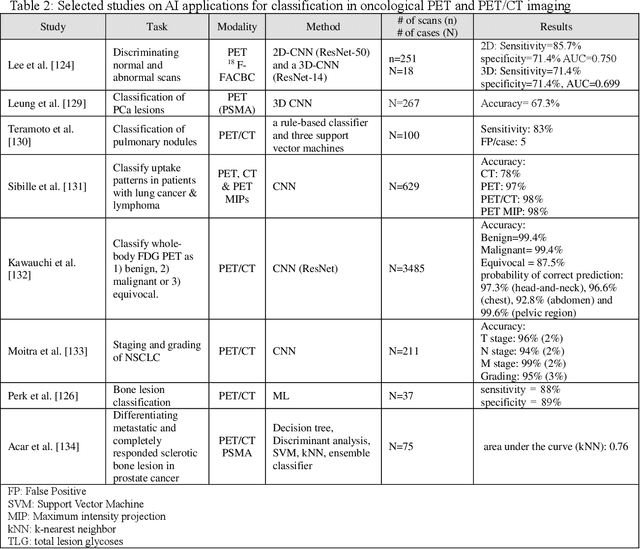

AI-Based Detection, Classification and Prediction/Prognosis in Medical Imaging: Towards Radiophenomics

Nov 01, 2021

Abstract: Artificial intelligence (AI) techniques have significant potential to enable effective, robust and automated image phenotyping including identification of subtle patterns. AI-based detection searches the image space to find the regions of interest based on patterns and features. There is a spectrum of tumor histologies from benign to malignant that can be identified by AI-based classification approaches using image features. The extraction of minable information from images gives way to the field of radiomics and can be explored via explicit (handcrafted/engineered) and deep radiomics frameworks. Radiomics analysis has the potential to be utilized as a noninvasive technique for the accurate characterization of tumors to improve diagnosis and treatment monitoring. This work reviews AI-based techniques, with a special focus on oncological PET and PET/CT imaging, for different detection, classification, and prediction/prognosis tasks. We also discuss needed efforts to enable the translation of AI techniques to routine clinical workflows, and potential improvements and complementary techniques such as the use of natural language processing on electronic health records and neuro-symbolic AI techniques.

CCS-GAN: COVID-19 CT-scan classification with very few positive training images

Oct 01, 2021

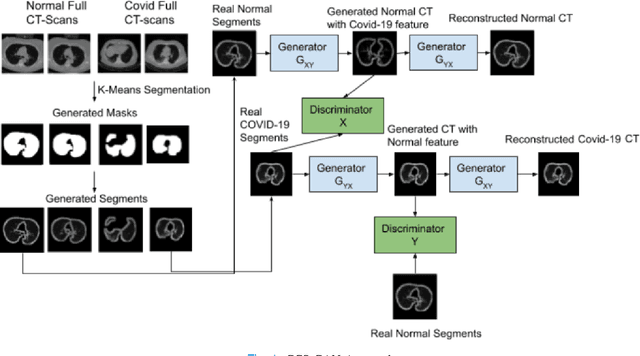

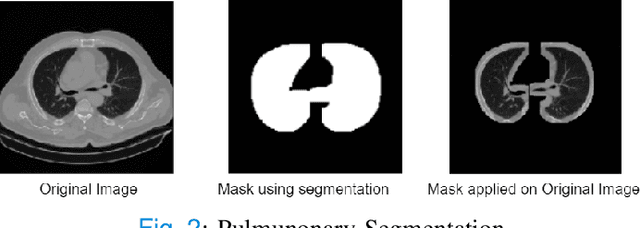

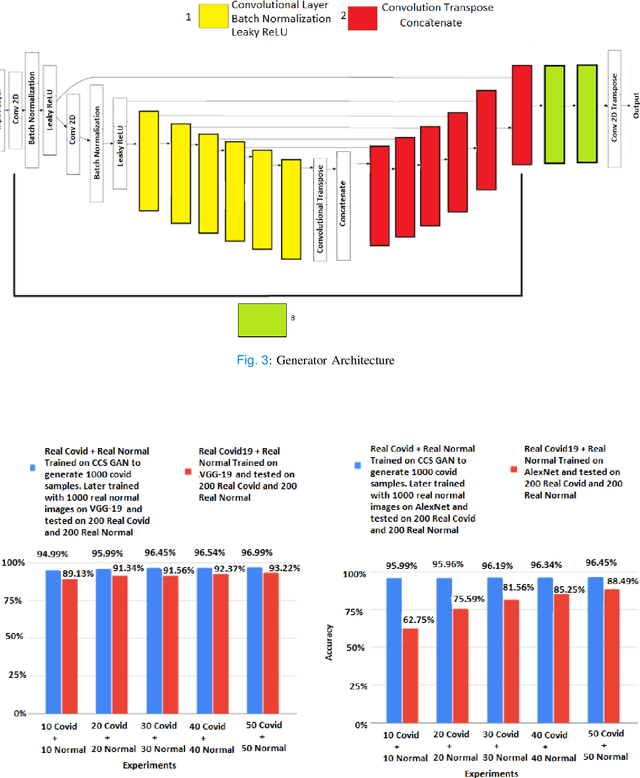

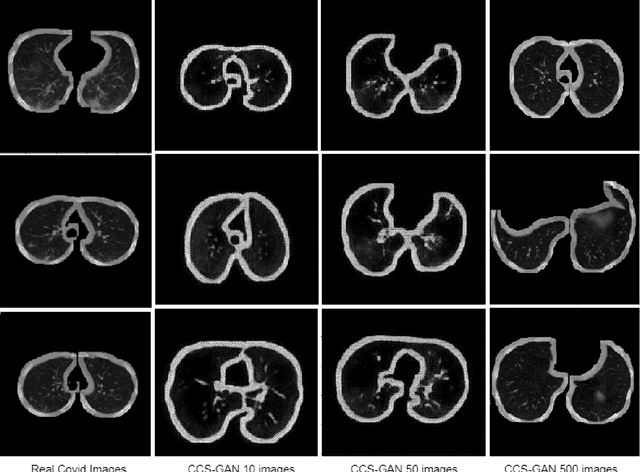

Abstract: We present a novel algorithm that is able to classify COVID-19 pneumonia from CT scan slices using a very small sample of training images exhibiting COVID-19 pneumonia in tandem with a larger number of normal images. This algorithm is able to achieve high classification accuracy using as few as 10 positive training slices (from 10 positive cases), which to the best of our knowledge is one order of magnitude fewer than the next closest published work at the time of writing. Deep learning with extremely small positive training volumes is a very difficult problem and has been an important topic during the COVID-19 pandemic, because for quite some time it was difficult to obtain large volumes of COVID-19 positive images for training. Algorithms that can learn to screen for diseases using few examples are an important area of research. We present the Cycle Consistent Segmentation Generative Adversarial Network (CCS-GAN). CCS-GAN combines style transfer with pulmonary segmentation and relevant transfer learning from negative images in order to create a larger volume of synthetic positive images for the purposes of improving diagnostic classification performance. A VGG-19 classifier plus CCS-GAN was trained using a small sample of positive image slices, ranging from at most 50 down to as few as 10 COVID-19 positive CT-scan images. CCS-GAN achieves high accuracy with few positive images and thereby greatly reduces the barrier of acquiring large training volumes in order to train a diagnostic classifier for COVID-19.

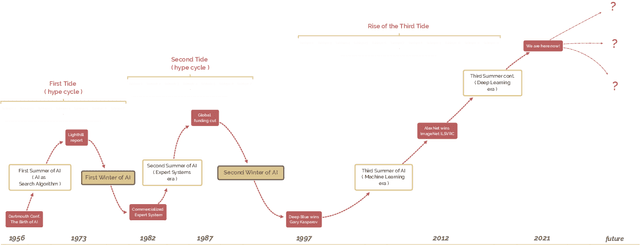

A brief history of AI: how to prevent another winter

Sep 08, 2021

Abstract: The field of artificial intelligence (AI), regarded as one of the most enigmatic areas of science, has witnessed exponential growth in the past decade including a remarkably wide array of applications, having already impacted our everyday lives. Advances in computing power and the design of sophisticated AI algorithms have enabled computers to outperform humans in a variety of tasks, especially in the areas of computer vision and speech recognition. Yet, AI's path has never been smooth, having essentially fallen apart twice in its lifetime ('winters' of AI), both after periods of popular success ('summers' of AI). We provide a brief rundown of AI's evolution over the course of decades, highlighting its crucial moments and major turning points from inception to the present. In doing so, we attempt to learn, anticipate the future, and discuss what steps may be taken to prevent another 'winter'.

Objective task-based evaluation of artificial intelligence-based medical imaging methods: Framework, strategies and role of the physician

Jul 20, 2021

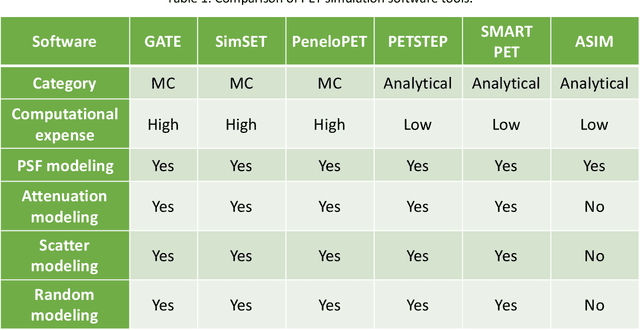

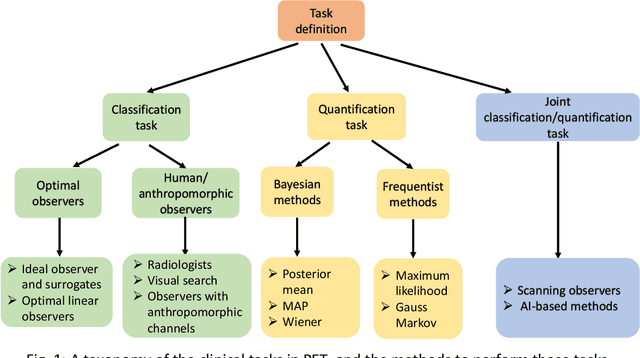

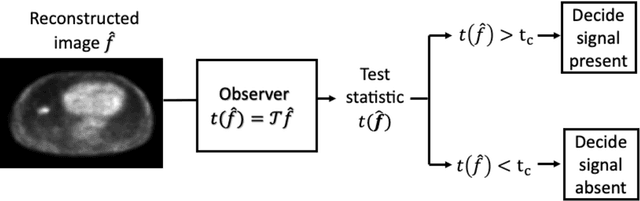

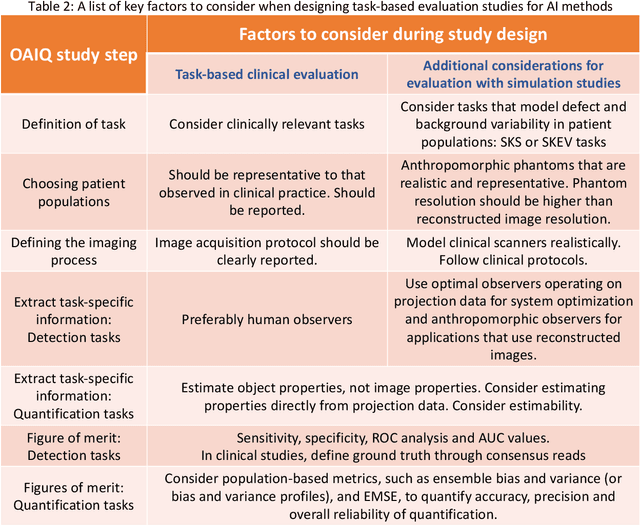

Abstract: Artificial intelligence (AI)-based methods are showing promise in multiple medical-imaging applications. Thus, there is substantial interest in clinical translation of these methods, requiring, in turn, that they be evaluated rigorously. In this paper, our goal is to lay out a framework for objective task-based evaluation of AI methods. We will also provide a list of tools available in the literature to conduct this evaluation. Further, we outline the important role of physicians in conducting these evaluation studies. The examples in this paper will be proposed in the context of PET with a focus on neural-network-based methods. However, the framework is also applicable to evaluate other medical-imaging modalities and other types of AI methods.

Artificial Intelligence in PET: an Industry Perspective

Jul 14, 2021

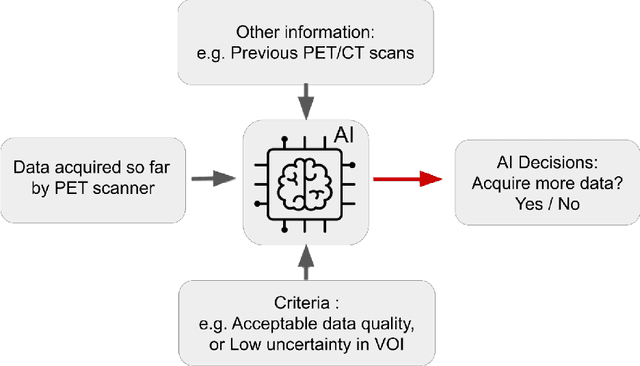

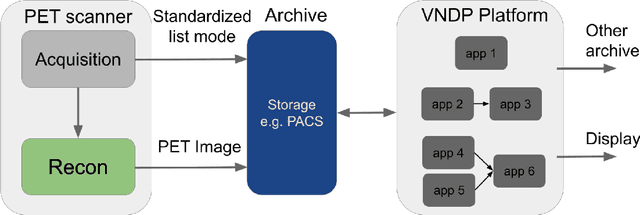

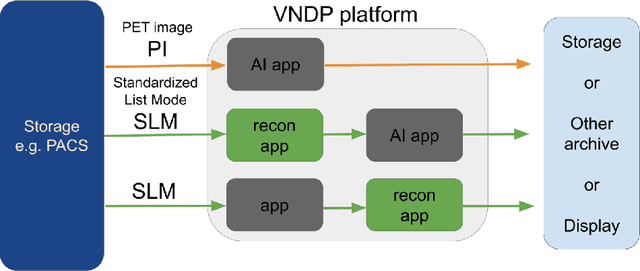

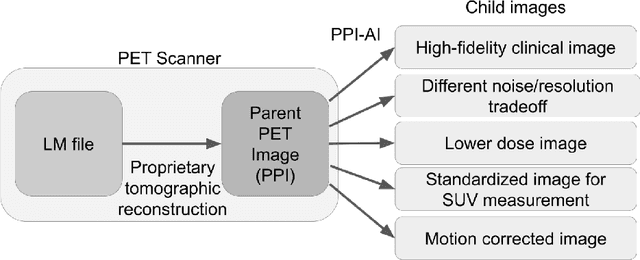

Abstract: Artificial intelligence (AI) has significant potential to positively impact and advance medical imaging, including positron emission tomography (PET) imaging applications. AI has the ability to enhance and optimize all aspects of the PET imaging chain, from patient scheduling, patient setup, protocoling, data acquisition, detector signal processing, and reconstruction to image processing and interpretation. AI poses industry-specific challenges which will need to be addressed and overcome to maximize the future potential of AI in PET. This paper provides an overview of these industry-specific challenges for the development, standardization, commercialization, and clinical adoption of AI, and explores the potential enhancements to PET imaging brought on by AI in the near future. In particular, the combination of on-demand image reconstruction, AI, and custom designed data processing workflows may open new possibilities for innovation which would positively impact the industry and ultimately patients.

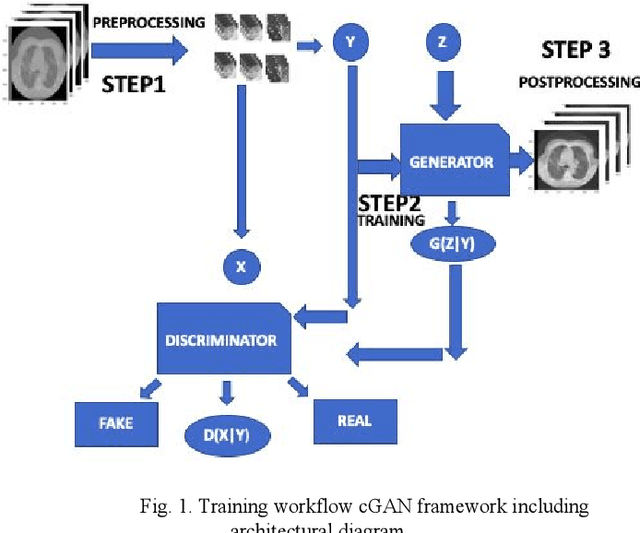

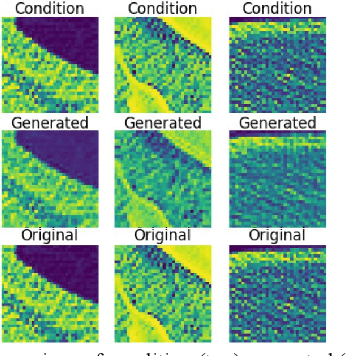

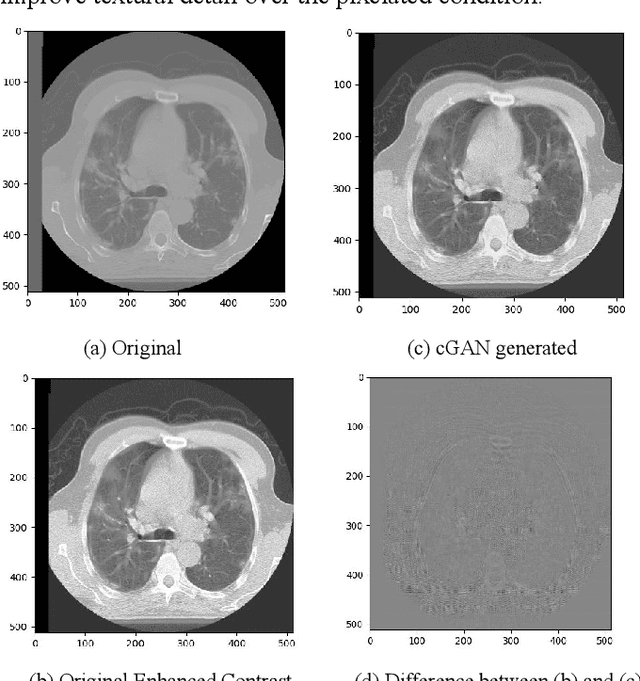

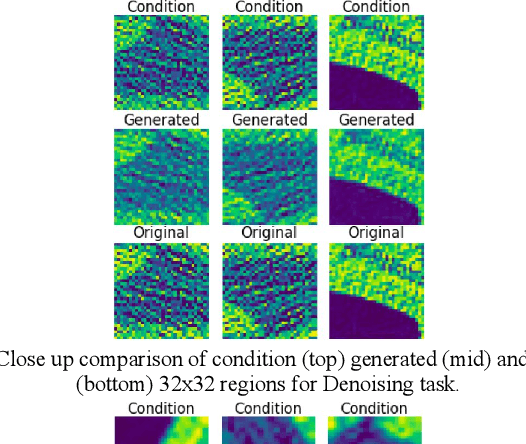

Toward Generating Synthetic CT Volumes using a 3D-Conditional Generative Adversarial Network

Apr 02, 2021

Abstract: We present a novel conditional Generative Adversarial Network (cGAN) architecture that is capable of generating 3D Computed Tomography scans in voxels from noisy and/or pixelated approximations and with the potential to generate full synthetic 3D scan volumes. We believe the cGAN to be a tractable approach to generate 3D CT volumes, even though the problem of generating full resolution deep fakes is presently impractical due to GPU memory limitations. We present results for autoencoder, denoising, and depixelating tasks which are trained and tested on two novel COVID19 CT datasets. For our evaluation metrics, the Peak Signal to Noise Ratio (PSNR) ranges from 12.53 to 46.46 dB, and the Structural Similarity Index (SSIM) ranges from 0.89 to 1.
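For readers unfamiliar with the first metric, PSNR can be sketched in a few lines. This is a minimal illustration and not the authors' evaluation code: the function name `psnr`, the flattened-list representation of a volume, and the assumed intensity range `max_value=1.0` are all choices made here for the example.

```python
import math

def psnr(reference, estimate, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized voxel lists.

    `max_value` is the assumed maximum possible intensity (hypothetical
    default of 1.0 for volumes normalised to [0, 1]).
    """
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    # Mean squared error over all voxels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical volumes
    return 10.0 * math.log10(max_value ** 2 / mse)

# Toy "volume" flattened to a list of intensities, plus a uniformly offset copy.
clean = [0.0, 0.2, 0.4, 0.6, 0.8, 0.4]
noisy = [v + 0.1 for v in clean]
print(round(psnr(clean, noisy), 2))  # an error of 0.1 per voxel gives about 20 dB
```

Higher values indicate a reconstruction closer to the reference; SSIM, the second metric, compares local structure rather than per-voxel error and is not sketched here.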

Deep Expectation-Maximization for Semi-Supervised Lung Cancer Screening

Oct 02, 2020

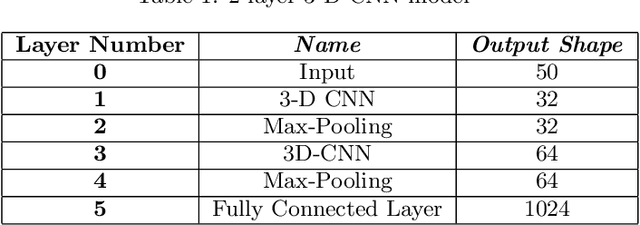

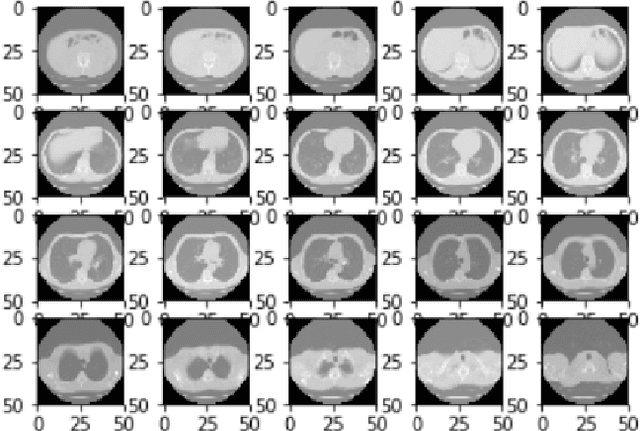

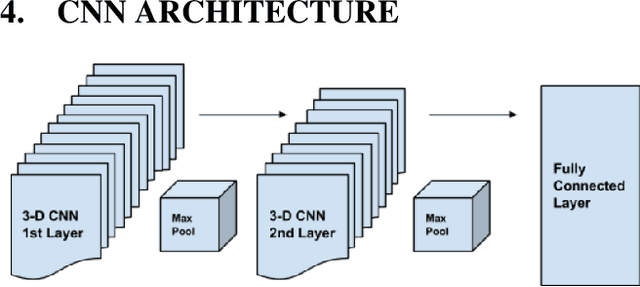

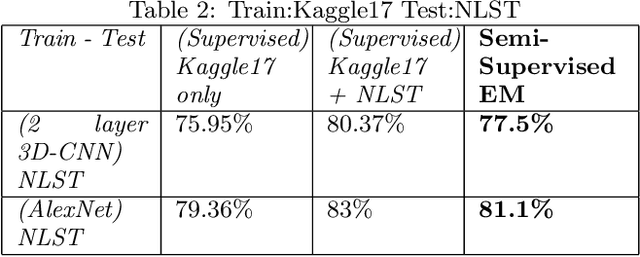

Abstract: We present a semi-supervised algorithm for lung cancer screening in which a 3D Convolutional Neural Network (CNN) is trained using the Expectation-Maximization (EM) meta-algorithm. Semi-supervised learning allows a smaller labelled data-set to be combined with an unlabeled data-set in order to provide a larger and more diverse training sample. EM allows the algorithm to simultaneously calculate a maximum likelihood estimate of the CNN training coefficients along with the labels for the unlabeled training set, which are defined as a latent variable space. We evaluate the model performance of the Semi-Supervised EM algorithm for CNNs through cross-domain training of the Kaggle Data Science Bowl 2017 (Kaggle17) data-set with the National Lung Screening Trial (NLST) data-set. Our results show that the Semi-Supervised EM algorithm greatly improves the classification accuracy of the cross-domain lung cancer screening, although results are lower than those of a fully supervised approach, which has the advantage of additional labelled data from the unsupervised sample. As such, we demonstrate that Semi-Supervised EM is a valuable technique to improve the accuracy of lung cancer screening models using 3D CNNs.
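The alternation described in the abstract, inferring latent labels for the unlabeled set and then refitting the model on all data, can be sketched on a toy problem. This is only an illustration under strong assumptions: a one-dimensional nearest-class-mean classifier stands in for the 3D CNN, hard label assignments stand in for whatever form the paper's E-step takes, and all function names are hypothetical.

```python
def fit_means(xs, ys):
    """M-step stand-in: estimate one mean per class (0 and 1).

    Assumes each class has at least one example in (xs, ys).
    """
    means = []
    for c in (0, 1):
        pts = [x for x, y in zip(xs, ys) if y == c]
        means.append(sum(pts) / len(pts))
    return means

def predict(means, xs):
    """E-step stand-in: assign each point to the nearest class mean."""
    return [0 if abs(x - means[0]) <= abs(x - means[1]) else 1 for x in xs]

def semi_supervised_em(x_lab, y_lab, x_unl, iterations=10):
    """Alternate label inference and refitting until the model stops changing."""
    means = fit_means(x_lab, y_lab)  # initialise from labelled data only
    for _ in range(iterations):
        y_unl = predict(means, x_unl)  # E-step: infer latent labels
        new_means = fit_means(x_lab + x_unl, y_lab + y_unl)  # M-step: refit on all data
        if new_means == means:
            break  # converged
        means = new_means
    return means, predict(means, x_unl)

# Two labelled points and four unlabelled points on a line.
means, labels = semi_supervised_em([0.0, 10.0], [0, 1], [1.0, 2.0, 8.0, 9.0])
print(means, labels)
```

In this toy run the unlabeled points pull each class mean toward the bulk of the data, which is the benefit the abstract attributes to combining a small labelled set with a larger unlabeled one.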

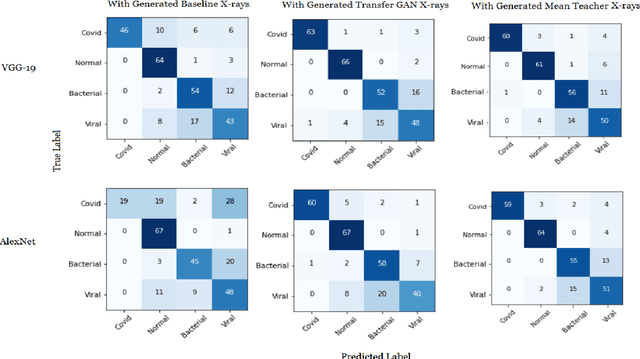

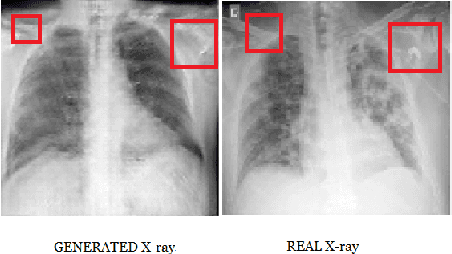

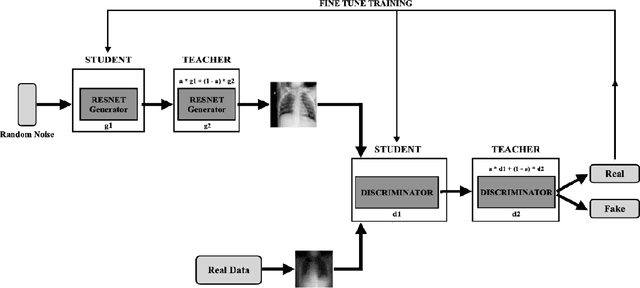

Generating Realistic COVID19 X-rays with a Mean Teacher + Transfer Learning GAN

Sep 26, 2020

Abstract: COVID-19 is a novel infectious disease responsible for over 800K deaths worldwide as of August 2020. The need for rapid testing is a high priority and alternative testing strategies including X-ray image classification are a promising area of research. However, at present, public datasets for COVID19 X-ray images have low data volumes, making it challenging to develop accurate image classifiers. Several recent papers have made use of Generative Adversarial Networks (GANs) in order to increase the training data volumes, but realistic synthetic COVID19 X-rays remain challenging to generate. We present a novel Mean Teacher + Transfer GAN (MTT-GAN) that generates COVID19 chest X-ray images of high quality. In order to create a more accurate GAN, we employ transfer learning from the Kaggle Pneumonia X-Ray dataset, a highly relevant data source orders of magnitude larger than public COVID19 datasets. Furthermore, we employ the Mean Teacher algorithm as a constraint to improve stability of training. Our qualitative analysis shows that the MTT-GAN generates X-ray images that are greatly superior to a baseline GAN and visually comparable to real X-rays, although board-certified radiologists can distinguish MTT-GAN fakes from real COVID19 X-rays. Quantitative analysis shows that MTT-GAN greatly improves the accuracy of both a binary COVID19 classifier as well as a multi-class Pneumonia classifier as compared to a baseline GAN. Our classification accuracy is favourable as compared to recently reported results in the literature for similar binary and multi-class COVID19 screening tasks.
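The core of the Mean Teacher algorithm mentioned above is that a "teacher" copy of the network tracks an exponential moving average (EMA) of the "student" weights, and a consistency term penalises disagreement between their outputs. The sketch below shows only these two generic pieces on plain lists of numbers; how MTT-GAN wires them into GAN training is not stated in the abstract, and the names `ema_update`, `consistency_loss`, and the decay value `alpha` are assumptions for illustration.

```python
def ema_update(teacher, student, alpha=0.99):
    """Return new teacher weights: alpha * teacher + (1 - alpha) * student."""
    return [alpha * t + (1.0 - alpha) * s for t, s in zip(teacher, student)]

def consistency_loss(teacher_out, student_out):
    """Mean squared difference between teacher and student outputs."""
    diffs = [(t - s) ** 2 for t, s in zip(teacher_out, student_out)]
    return sum(diffs) / len(diffs)

# Toy weights: the student jumps around, the teacher follows slowly.
teacher = [1.0, 0.0]
for student in ([0.0, 1.0], [0.2, 0.8], [0.1, 0.9]):
    teacher = ema_update(teacher, student, alpha=0.9)
print(teacher)
print(consistency_loss([0.5, 0.5], [0.4, 0.7]))
```

Because the teacher changes slowly, it acts as a smoothed target, which is consistent with the abstract's use of Mean Teacher as a constraint to stabilise training.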
