Anurag Gupta

MedTutor: A Retrieval-Augmented LLM System for Case-Based Medical Education

Jan 11, 2026

Abstract:The learning process for medical residents presents significant challenges, demanding both the ability to interpret complex case reports and the rapid acquisition of accurate medical knowledge from reliable sources. Residents typically study case reports and engage in discussions with peers and mentors, but finding relevant educational materials and evidence to support their learning from these cases is often time-consuming and challenging. To address this, we introduce MedTutor, a novel system designed to augment resident training by automatically generating evidence-based educational content and multiple-choice questions from clinical case reports. MedTutor leverages a Retrieval-Augmented Generation (RAG) pipeline that takes clinical case reports as input and produces targeted educational materials. The system's architecture features a hybrid retrieval mechanism that queries both a local knowledge base of medical textbooks and academic literature (using the PubMed and Semantic Scholar APIs) for the latest related research, ensuring the generated content is both foundationally sound and current. The retrieved evidence is filtered and ordered using a state-of-the-art reranking model, and an LLM then generates the final long-form output describing the main educational content of the case report. We conduct a rigorous evaluation of the system. First, three radiologists assessed the quality of the outputs, finding them to be of high clinical and educational value. Second, we perform a large-scale evaluation using an LLM-as-a-Judge to understand whether LLMs can be used to evaluate the output of the system. Our analysis of the correlation between LLM outputs and human expert judgments reveals a moderate alignment and highlights the continued necessity of expert oversight.

* Accepted to EMNLP 2025 (System Demonstrations)

Non-Invasive Qualitative Vibration Analysis using Event Camera

Oct 18, 2024

Abstract:This technical report investigates the application of event-based vision sensors in non-invasive qualitative vibration analysis, with a particular focus on frequency measurement and motion magnification. Event cameras, with their high temporal resolution and dynamic range, offer promising capabilities for real-time structural assessment and subtle motion analysis. Our study employs cutting-edge event-based vision techniques to explore real-world scenarios in frequency measurement in vibrational analysis and intensity reconstruction for motion magnification. In the former, event-based sensors demonstrated significant potential for real-time structural assessment. However, our work in motion magnification revealed considerable challenges, particularly in scenarios involving stationary cameras and isolated motion.

Energy-Efficient Mining for Blockchain-Enabled IoT Applications. An Optimal Multiple-Stopping Time Approach

May 09, 2023

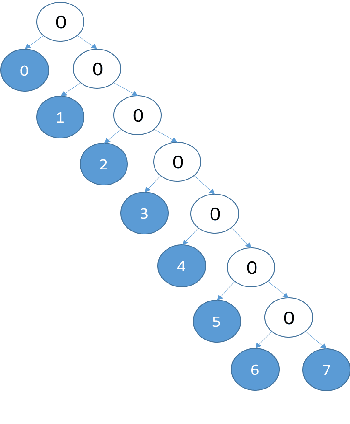

Abstract:What are the optimal times for an Internet of Things (IoT) device to act as a blockchain miner? The aim is to minimize the energy consumed by low-power IoT devices that log their data into a secure (tamper-proof) distributed ledger. We formulate the energy-efficient blockchain mining for IoT devices as a multiple-stopping time partially observed Markov decision process (POMDP) to maximize the probability of adding a block in the blockchain; we also present a model to optimize the number of stops (mining instants). In general, POMDPs are computationally intractable to solve, but we show mathematically using submodularity that the optimal mining policy has a useful structure: 1) it is monotone in belief space, and 2) it exhibits a threshold structure, which divides the belief space into two connected sets. Exploiting the structural results, we formulate a computationally-efficient linear mining policy for the blockchain-enabled IoT device. We present a policy gradient technique to optimize the parameters of the linear mining policy. Finally, we use synthetic and real Bitcoin datasets to study the performance of our proposed mining policy. We demonstrate the energy efficiency achieved by the optimal linear mining policy in contrast to other heuristic strategies.

AutoTSG: Learning and Synthesis for Incident Troubleshooting

May 26, 2022

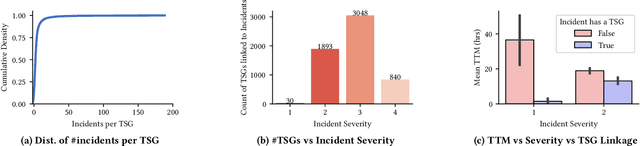

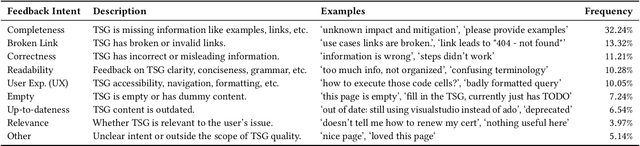

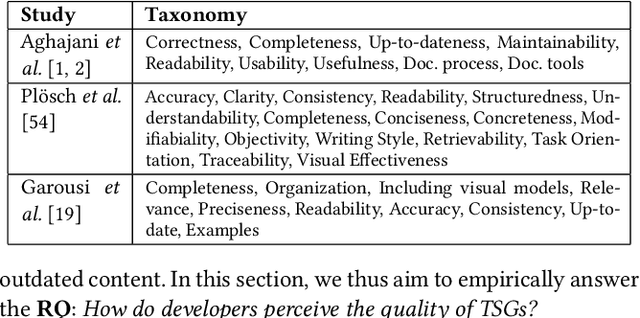

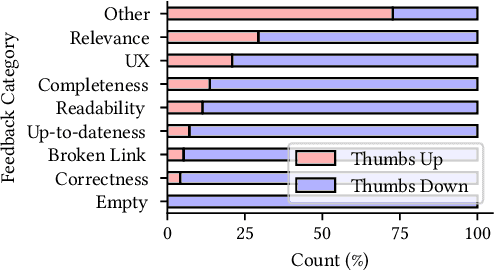

Abstract:Incident management is a key aspect of operating large-scale cloud services. To aid faster and more efficient resolution of incidents, engineering teams document frequent troubleshooting steps in the form of Troubleshooting Guides (TSGs), to be used by on-call engineers (OCEs). However, TSGs are siloed, unstructured, and often incomplete, requiring developers to manually understand and execute necessary steps. This results in a plethora of issues such as on-call fatigue, reduced productivity, and human errors. In this work, we conduct a large-scale empirical study of over 4K TSGs mapped to 1000s of incidents and find that TSGs are widely used and help significantly reduce mitigation efforts. We then analyze feedback on TSGs provided by 400+ OCEs and propose a taxonomy of issues that highlights significant gaps in TSG quality. To alleviate these gaps, we investigate the automation of TSGs and propose AutoTSG -- a novel framework for automation of TSGs to executable workflows by combining machine learning and program synthesis. Our evaluation of AutoTSG on 50 TSGs shows its effectiveness in both identifying TSG statements (accuracy 0.89) and parsing them for execution (precision 0.94 and recall 0.91). Lastly, we survey ten Microsoft engineers and show the importance of TSG automation and the usefulness of AutoTSG.

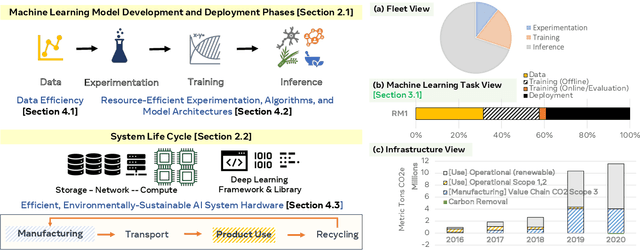

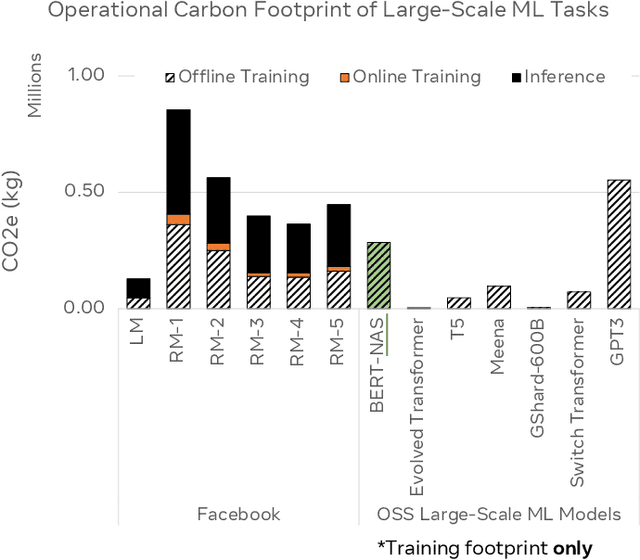

Sustainable AI: Environmental Implications, Challenges and Opportunities

Oct 30, 2021

Abstract:This paper explores the environmental impact of the super-linear growth trends for AI from a holistic perspective, spanning Data, Algorithms, and System Hardware. We characterize the carbon footprint of AI computing by examining the model development cycle across industry-scale machine learning use cases and, at the same time, considering the life cycle of system hardware. Taking a step further, we capture the operational and manufacturing carbon footprint of AI computing and present an end-to-end analysis for what and how hardware-software design and at-scale optimization can help reduce the overall carbon footprint of AI. Based on the industry experience and lessons learned, we share the key challenges and chart out important development directions across the many dimensions of AI. We hope the key messages and insights presented in this paper can inspire the community to advance the field of AI in an environmentally-responsible manner.

Principal Agent Problem as a Principled Approach to Electronic Counter-Countermeasures in Radar

Sep 08, 2021

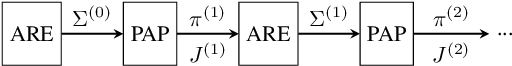

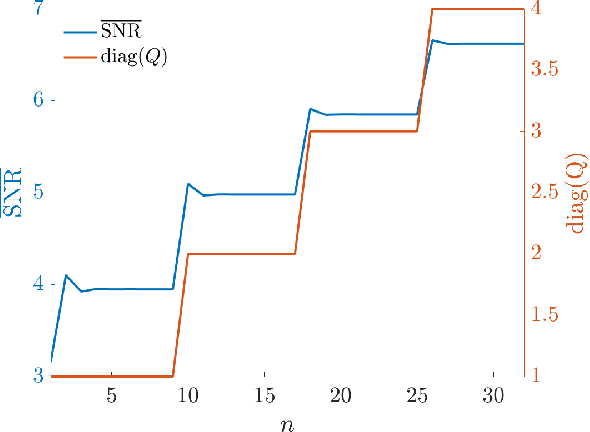

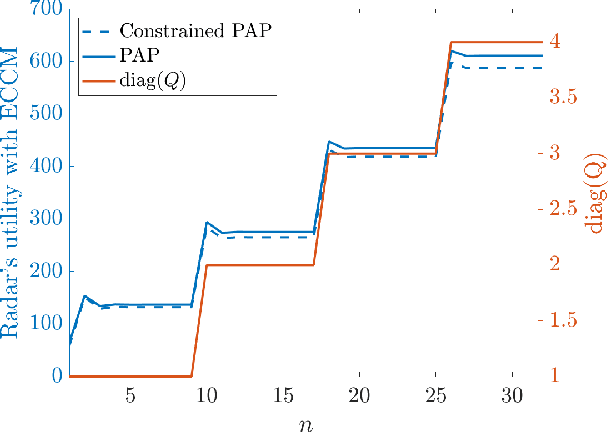

Abstract:Electronic countermeasures (ECM) against a radar are actions taken by an adversarial jammer to mitigate effective utilization of the electromagnetic spectrum by the radar. On the other hand, electronic counter-countermeasures (ECCM) are actions taken by the radar to mitigate the impact of ECM so that the radar can continue to operate effectively. The main idea of this paper is to show that ECCM involving a radar and a jammer can be formulated as a principal-agent problem (PAP) - a problem widely studied in microeconomics. With the radar as the principal and the jammer as the agent, we design a PAP to optimize the radar's ECCM strategy in the presence of a jammer. The radar seeks to optimally trade off the signal-to-noise ratio (SNR) of the target measurement against the measurement cost, i.e., the cost of generating radiation power for the pulse to probe the target. We show that for a suitable choice of utility functions, the PAP is a convex optimization problem. Further, we analyze the structure of the PAP and provide sufficient conditions under which the optimal solution is an increasing function of the jamming power observed by the radar; this enables computation of the radar's optimal ECCM within the class of increasing affine functions at a low computation cost. Finally, we illustrate the PAP formulation of the radar's ECCM problem via numerical simulations. We also use simulations to study a radar's ECCM problem wherein the radar and the jammer have mismatched information.

Small Boxes Big Data: A Deep Learning Approach to Optimize Variable Sized Bin Packing

Feb 14, 2017

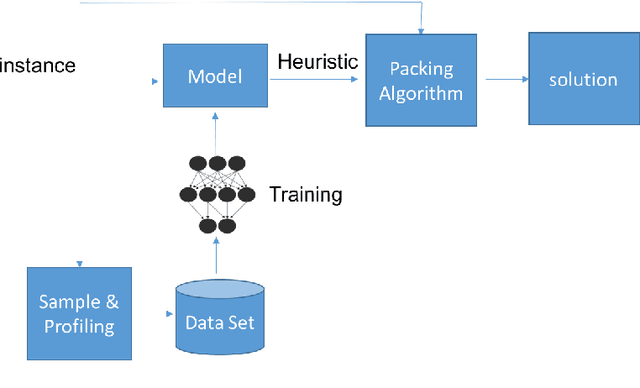

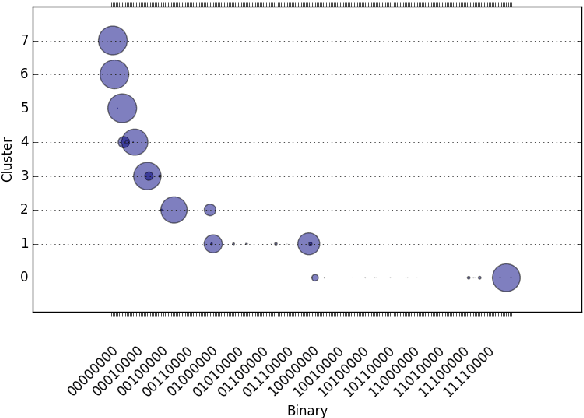

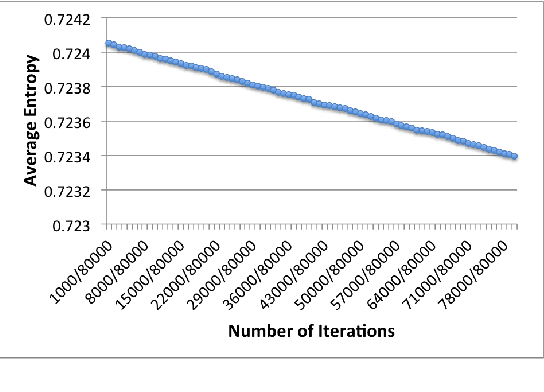

Abstract:Bin Packing problems have been widely studied because of their broad applications in different domains. Known as a set of NP-hard problems, they have different variations, and many heuristics have been proposed for obtaining approximate solutions. Specifically, for the 1D variable sized bin packing problem, the two key sets of optimization heuristics are the bin assignment and the bin allocation. Usually the performance of a single static optimization heuristic cannot beat that of a dynamic one which is tailored for each bin packing instance. Building such an adaptive system requires modeling the relationship between bin features and packing performance profiles. The primary drawbacks of traditional machine learning approaches for this task are the natural limitations of feature engineering, such as the curse of dimensionality and feature selection quality. We introduce a deep learning approach to overcome the drawbacks by applying a large training data set, auto feature selection and fast, accurate labeling. We show in this paper how to build such a system by both theoretical formulation and engineering practices. Our prediction system achieves up to 89% training accuracy and 72% validation accuracy to select the best heuristic that can generate a better quality bin packing solution.
