Alexander Heinecke

Space Filling Curves is All You Need: Communication-Avoiding Matrix Multiplication Made Simple

Jan 22, 2026

Abstract:General Matrix Multiplication (GEMM) is the cornerstone of Deep Learning and HPC workloads; accordingly, academia and industry have heavily optimized this kernel. Modern platforms with matrix multiplication accelerators exhibit high FLOP/Byte machine balance, which makes implementing optimal matrix multiplication challenging. On modern CPU platforms with matrix engines, state-of-the-art vendor libraries tune input tensor layouts, parallelization schemes, and cache blocking to minimize data movement across the memory hierarchy and maximize throughput. However, the best settings for these parameters depend strongly on the target platform (number of cores, memory hierarchy, cache sizes) and on the shapes of the matrices, making exhaustive tuning infeasible; in practice this leads to performance "glass jaws". In this work we revisit space filling curves (SFC) to alleviate the problem of this cumbersome tuning. SFC convert multi-dimensional coordinates (e.g. 2D) into a single dimension (1D), keeping nearby points in the high-dimensional space close in the 1D order. We partition the Matrix Multiplication computation space using recent advancements in generalized SFC (Generalized Hilbert Curves), and we obtain platform-oblivious and shape-oblivious matrix-multiplication schemes that exhibit an inherently high degree of data locality. Furthermore, we extend the SFC-based work partitioning to implement Communication-Avoiding (CA) algorithms that replicate the input tensors and provably minimize communication/data-movement on the critical path. The integration of CA-algorithms is seamless and yields compact code (~30 LOC), yet it achieves state-of-the-art results on multiple CPU platforms, outperforming vendor libraries by up to 2x (geometric-mean speedup) for a range of GEMM shapes.
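To make the 2D-to-1D mapping concrete, here is a minimal sketch that walks a square grid of output tiles in Hilbert order and hands each worker a contiguous slice of that order. It uses the classic Hilbert curve on a power-of-two grid, which is a simplification: the paper relies on Generalized Hilbert Curves for arbitrary shapes. The function names `hilbert_d2xy` and `partition_tiles` are illustrative, not taken from the paper.

```python
def hilbert_d2xy(order, d):
    """Map 1D index d to (x, y) on a 2**order x 2**order grid (classic Hilbert curve)."""
    x = y = 0
    t = d
    s = 1
    n = 1 << order
    while s < n:
        rx = 1 & (t // 2)
        ry = 1 & (t ^ rx)
        if ry == 0:
            # Rotate/flip the quadrant so consecutive sub-curves connect.
            if rx == 1:
                x = s - 1 - x
                y = s - 1 - y
            x, y = y, x
        x += s * rx
        y += s * ry
        t //= 4
        s *= 2
    return x, y


def partition_tiles(order, workers):
    """Give each worker a contiguous run of tiles along the curve (assumed scheme)."""
    tiles = [hilbert_d2xy(order, d) for d in range(4 ** order)]
    chunk = (len(tiles) + workers - 1) // workers
    return [tiles[i:i + chunk] for i in range(0, len(tiles), chunk)]
```

Because consecutive indices always land on neighbouring tiles, each worker's slice covers a compact region of the output matrix, so the rows of A and columns of B it touches overlap heavily.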

Library Liberation: Competitive Performance Matmul Through Compiler-composed Nanokernels

Nov 14, 2025

Abstract:The rapidly evolving landscape of AI and machine learning workloads has widened the gap between high-level domain operations and efficient hardware utilization. Achieving near-peak performance still demands deep hardware expertise: experts either handcraft target-specific kernels (e.g., DeepSeek) or rely on specialized libraries (e.g., CUTLASS), both of which add complexity and limit scalability for most ML practitioners. This paper introduces a compilation scheme that automatically generates scalable, high-performance microkernels by leveraging MLIR dialects to bridge domain-level operations and processor capabilities. Our approach removes dependence on low-level libraries by enabling the compiler to auto-generate near-optimal code directly. At its core is a mechanism for composing nanokernels from low-level IR constructs with near-optimal register utilization, forming efficient microkernels tailored to each target. We implement this technique in an MLIR-based compiler supporting both vector and tile based CPU instructions. Experiments show that the generated nanokernels are of production quality and competitive with state-of-the-art microkernel libraries.

ML-SpecQD: Multi-Level Speculative Decoding with Quantized Drafts

Mar 17, 2025

Abstract:Speculative decoding (SD) has emerged as a method to accelerate LLM inference without sacrificing any accuracy over the 16-bit model inference. In a typical SD setup, the idea is to use a full-precision, small, fast model as "draft" to generate the next few tokens and use the "target" large model to verify the draft-generated tokens. The efficacy of this method heavily relies on the acceptance ratio of the draft-generated tokens and the relative token throughput of the draft versus the target model. Nevertheless, an efficient SD pipeline requires pre-training and aligning the draft model to the target model, making it impractical for LLM inference in a plug-and-play fashion. In this work, we propose using MXFP4 models as drafts in a plug-and-play fashion since the MXFP4 Weight-Only-Quantization (WOQ) merely direct-casts the BF16 target model weights to MXFP4. In practice, our plug-and-play solution gives speedups up to 2x over the BF16 baseline. Then we pursue an opportunity for further acceleration: the MXFP4 draft token generation itself can be accelerated via speculative decoding by using yet another smaller draft. We call our method ML-SpecQD: Multi-Level Speculative Decoding with Quantized Drafts since it recursively applies speculation for accelerating the draft-token generation. Combining Multi-Level Speculative Decoding with MXFP4 Quantized Drafts we outperform state-of-the-art speculative decoding, yielding speedups up to 2.72x over the BF16 baseline.
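The draft-then-verify loop described above can be sketched for the greedy case as follows. This is a toy illustration under stated assumptions, not the paper's implementation: `target_next` and `draft_next` are hypothetical callables that return the next token for a given context, and the target is queried once per token here, whereas a real system verifies all draft tokens in a single batched forward pass.

```python
def speculative_decode(target_next, draft_next, prompt, num_new, k=4):
    """Greedy speculative decoding sketch; returns (tokens, accepted draft tokens)."""
    tokens = list(prompt)
    goal = len(tokens) + num_new
    accepted = 0
    while len(tokens) < goal:
        # 1) The cheap draft model proposes up to k tokens autoregressively.
        ctx = list(tokens)
        proposal = []
        for _ in range(min(k, goal - len(tokens))):
            proposal.append(draft_next(ctx))
            ctx.append(proposal[-1])
        # 2) The target model checks each proposed token in order.
        for guess in proposal:
            verified = target_next(tokens)
            tokens.append(verified)  # always keep the target's own choice
            if verified != guess:
                break  # first mismatch: discard the rest of the proposal
            accepted += 1
    return tokens, accepted
```

Every appended token is the target's own greedy choice, so the output matches plain greedy decoding with the target; the draft only affects how many tokens are confirmed per round. In the multi-level setting, `draft_next` could itself be backed by another speculative loop with a smaller draft.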

Towards a high-performance AI compiler with upstream MLIR

Apr 15, 2024

Abstract:This work proposes a compilation flow using open-source compiler passes to build a framework to achieve ninja performance from a generic linear algebra high-level abstraction. We demonstrate this flow with a proof-of-concept MLIR project that uses input IR in Linalg-on-Tensor from TensorFlow and PyTorch, performs cache-level optimizations and lowering to micro-kernels for efficient vectorization, achieving over 90% of the performance of ninja-written equivalent programs. The contributions of this work include: (1) Packing primitives on the tensor dialect and passes for cache-aware distribution of tensors (single and multi-core) and type-aware instructions (VNNI, BFDOT, BFMMLA), including propagation of shapes across the entire function; (2) A linear algebra pipeline, including tile, fuse and bufferization strategies to get model-level IR into hardware friendly tile calls; (3) A mechanism for micro-kernel lowering to an open source library that supports various CPUs.

Microscaling Data Formats for Deep Learning

Oct 19, 2023

Abstract:Narrow bit-width data formats are key to reducing the computational and storage costs of modern deep learning applications. This paper evaluates Microscaling (MX) data formats that combine a per-block scaling factor with narrow floating-point and integer types for individual elements. MX formats balance the competing needs of hardware efficiency, model accuracy, and user friction. Empirical results on over two dozen benchmarks demonstrate the practicality of MX data formats as a drop-in replacement for baseline FP32 for AI inference and training with low user friction. We also show the first instance of training generative language models at sub-8-bit weights, activations, and gradients with minimal accuracy loss and no modifications to the training recipe.
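The idea of one shared scale per block plus narrow per-element values can be illustrated with a small sketch. It assumes a power-of-two shared scale and signed 8-bit integer elements; this is a simplified illustration of block scaling, not the exact bit-level encoding of any MX format, and the function names are hypothetical.

```python
import math


def mx_quantize_block(values, elem_bits=8):
    """Quantize one block to (shared_exponent, integer elements). Illustrative only."""
    qmax = 2 ** (elem_bits - 1) - 1
    amax = max(abs(v) for v in values)
    if amax == 0.0:
        return 0, [0] * len(values)
    # Smallest power-of-two scale such that the largest element still fits.
    exp = math.ceil(math.log2(amax / qmax))
    scale = 2.0 ** exp
    elems = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return exp, elems


def mx_dequantize_block(exp, elems):
    """Reconstruct approximate values from the shared exponent and elements."""
    scale = 2.0 ** exp
    return [scale * e for e in elems]
```

For a block of 32 values this stores 32 narrow elements plus a single exponent, and the reconstruction error per element is at most half the shared scale.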

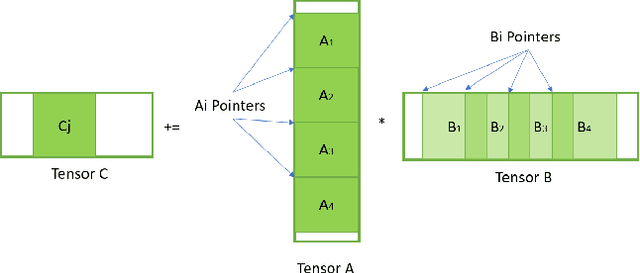

Harnessing Deep Learning and HPC Kernels via High-Level Loop and Tensor Abstractions on CPU Architectures

Apr 25, 2023

Abstract:During the past decade, Deep Learning (DL) algorithms, programming systems and hardware have converged with their High Performance Computing (HPC) counterparts. Nevertheless, the programming methodology of DL and HPC systems is stagnant, relying on highly-optimized, yet platform-specific and inflexible vendor-optimized libraries. Such libraries provide close-to-peak performance on the specific platforms, kernels and shapes to which vendors have dedicated optimization efforts, while they underperform in the remaining use-cases, yielding non-portable codes with performance glass-jaws. This work introduces a framework to develop efficient, portable DL and HPC kernels for modern CPU architectures. We decompose the kernel development in two steps: 1) Expressing the computational core using Tensor Processing Primitives (TPPs): a compact, versatile set of 2D-tensor operators, 2) Expressing the logical loops around TPPs in a high-level, declarative fashion, where the exact instantiation (ordering, tiling, parallelization) is determined via simple knobs. We demonstrate the efficacy of our approach using standalone kernels and end-to-end workloads that outperform state-of-the-art implementations on diverse CPU platforms.

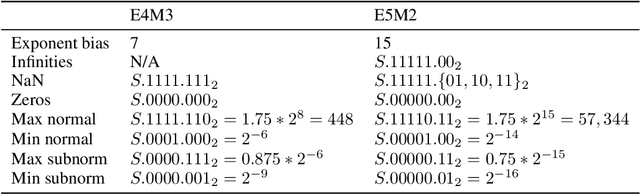

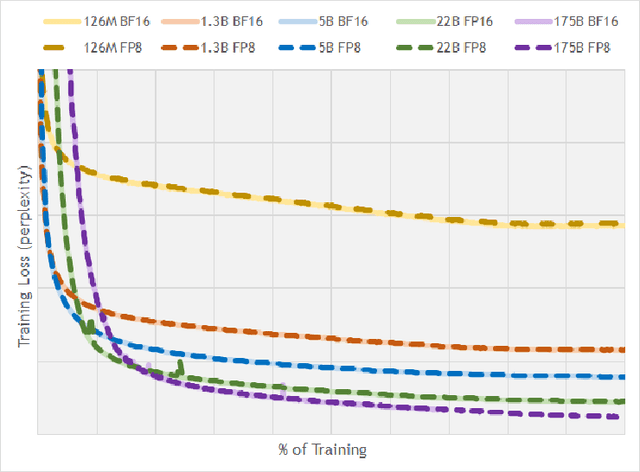

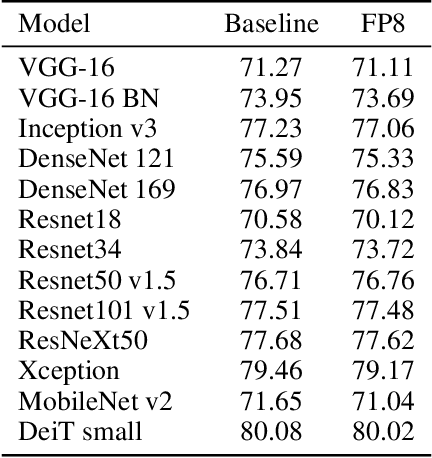

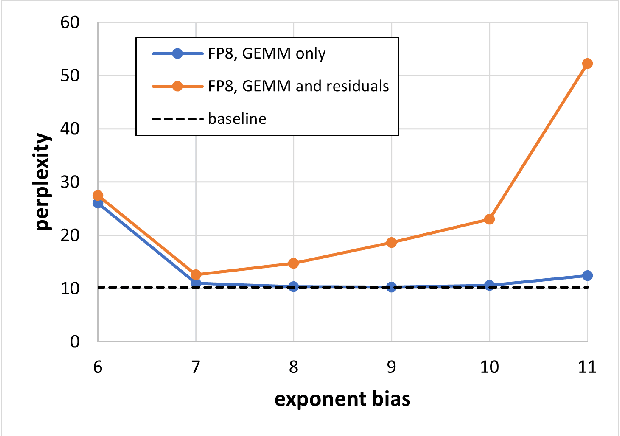

FP8 Formats for Deep Learning

Sep 12, 2022

Abstract:FP8 is a natural progression for accelerating deep learning training and inference beyond the 16-bit formats common in modern processors. In this paper we propose an 8-bit floating point (FP8) binary interchange format consisting of two encodings: E4M3 (4-bit exponent and 3-bit mantissa) and E5M2 (5-bit exponent and 2-bit mantissa). While E5M2 follows IEEE 754 conventions for representation of special values, E4M3's dynamic range is extended by not representing infinities and having only one mantissa bit-pattern for NaNs. We demonstrate the efficacy of the FP8 format on a variety of image and language tasks, effectively matching the result quality achieved by 16-bit training sessions. Our study covers the main modern neural network architectures (CNNs, RNNs, and Transformer-based models), leaving all the hyperparameters unchanged from the 16-bit baseline training sessions. Our training experiments include large, up to 175B parameter, language models. We also examine FP8 post-training-quantization of language models trained using 16-bit formats that resisted fixed point int8 quantization.
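The difference between the two encodings is easiest to see in a small decoder. The sketch below turns one byte into a Python float for either format. The exponent biases (7 for E4M3, 15 for E5M2) are not stated in the abstract and are assumed here from the usual `2**(exponent_bits - 1) - 1` convention; `decode_fp8` is an illustrative name.

```python
import math


def decode_fp8(byte, fmt="E4M3"):
    """Decode one FP8 byte (0..255) to a float. Biases are assumptions, see lead-in."""
    if fmt == "E4M3":
        ebits, mbits, bias = 4, 3, 7
    elif fmt == "E5M2":
        ebits, mbits, bias = 5, 2, 15
    else:
        raise ValueError("fmt must be 'E4M3' or 'E5M2'")
    sign = -1.0 if (byte >> 7) & 1 else 1.0
    exp = (byte >> mbits) & ((1 << ebits) - 1)
    man = byte & ((1 << mbits) - 1)
    all_ones = (1 << ebits) - 1
    if fmt == "E5M2" and exp == all_ones:
        # IEEE-style: all-ones exponent encodes infinity or NaN.
        return sign * math.inf if man == 0 else math.nan
    if fmt == "E4M3" and exp == all_ones and man == (1 << mbits) - 1:
        # E4M3: no infinities, a single mantissa pattern is NaN.
        return math.nan
    if exp == 0:
        # Subnormal numbers (and zero).
        return sign * man * 2.0 ** (1 - bias - mbits)
    return sign * (1 + man / (1 << mbits)) * 2.0 ** (exp - bias)
```

Under these assumptions the largest finite E4M3 value is 448 (byte `0x7E`), because the all-ones exponent is still available for ordinary numbers, while E5M2 tops out at 57344 (byte `0x7B`) and reserves its all-ones exponent for infinity and NaN.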

FPGA-based AI Smart NICs for Scalable Distributed AI Training Systems

Apr 22, 2022

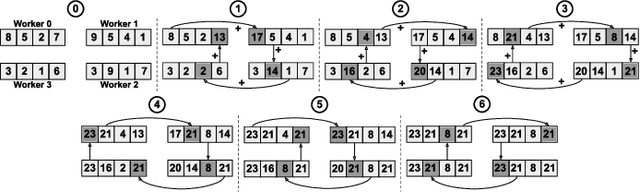

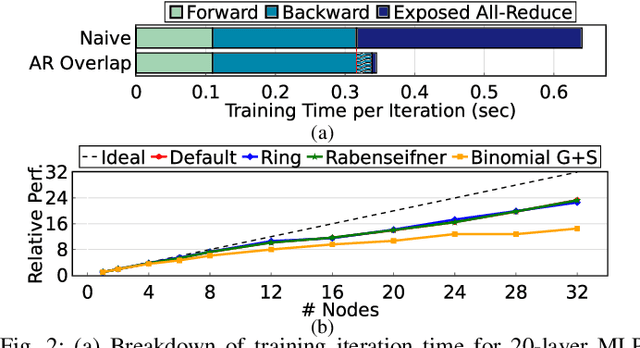

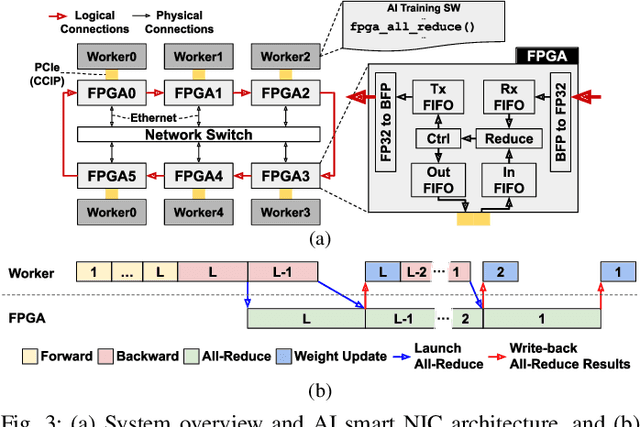

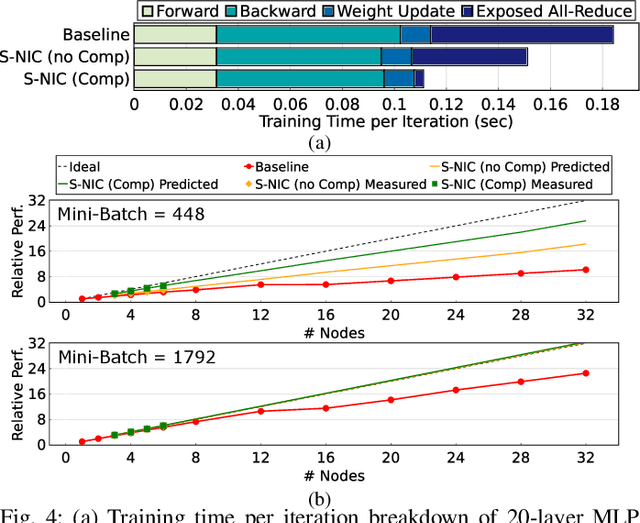

Abstract:Rapid advances in artificial intelligence (AI) technology have led to significant accuracy improvements in a myriad of application domains at the cost of larger and more compute-intensive models. Training such models on massive amounts of data typically requires scaling to many compute nodes and relies heavily on collective communication algorithms, such as all-reduce, to exchange the weight gradients between different nodes. The overhead of these collective communication operations in a distributed AI training system can bottleneck its performance, with more pronounced effects as the number of nodes increases. In this paper, we first characterize the all-reduce operation overhead by profiling distributed AI training. Then, we propose a new smart network interface card (NIC) for distributed AI training systems using field-programmable gate arrays (FPGAs) to accelerate all-reduce operations and optimize network bandwidth utilization via data compression. The AI smart NIC frees up the system's compute resources to perform the more compute-intensive tensor operations and increases the overall node-to-node communication efficiency. We perform real measurements on a prototype distributed AI training system comprised of 6 compute nodes to evaluate the performance gains of our proposed FPGA-based AI smart NIC compared to a baseline system with regular NICs. We also use these measurements to validate an analytical model that we formulate to predict performance when scaling to larger systems. Our proposed FPGA-based AI smart NIC enhances overall training performance by 1.6x at 6 nodes, with an estimated 2.5x performance improvement at 32 nodes, compared to the baseline system using conventional NICs.
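For readers unfamiliar with the all-reduce collective, the sketch below simulates a ring all-reduce (sum) in plain Python. Ring all-reduce is one well-known algorithm for this collective; it is used here purely as an illustration and is not claimed to be what the proposed smart NIC implements. The function name and the in-memory "nodes" are assumptions.

```python
def ring_all_reduce(node_data):
    """Simulate a ring all-reduce (sum). node_data: one equal-length list per node."""
    p = len(node_data)
    n = len(node_data[0])
    assert n % p == 0, "vector length must divide evenly into p chunks"
    c = n // p
    data = [list(v) for v in node_data]

    def send_round(chunk_of, combine):
        # Snapshot all payloads first so the sends act as if simultaneous.
        sends = []
        for i in range(p):
            ci = chunk_of(i)
            sends.append((ci, data[i][ci * c:(ci + 1) * c]))
        for i, (ci, payload) in enumerate(sends):
            dst = (i + 1) % p
            for j, v in enumerate(payload):
                data[dst][ci * c + j] = combine(data[dst][ci * c + j], v)

    # Reduce-scatter: afterwards node i holds the full sum of chunk (i + 1) % p.
    for step in range(p - 1):
        send_round(lambda i, s=step: (i - s) % p, lambda old, new: old + new)
    # All-gather: circulate the finished chunks until every node has all of them.
    for step in range(p - 1):
        send_round(lambda i, s=step: (i + 1 - s) % p, lambda old, new: new)
    return data
```

Each node sends and receives only `2 * (p - 1)` chunks of size `n / p`, which is why the per-node traffic stays roughly constant as nodes are added, while the number of communication steps grows with `p`.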

DistGNN: Scalable Distributed Training for Large-Scale Graph Neural Networks

Apr 16, 2021

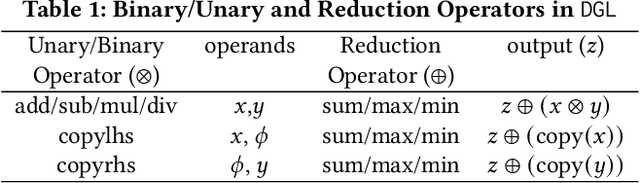

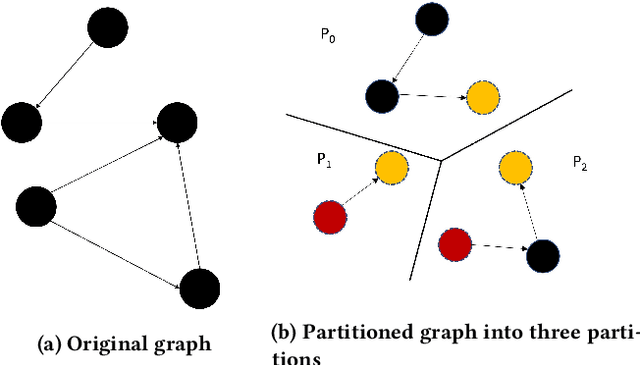

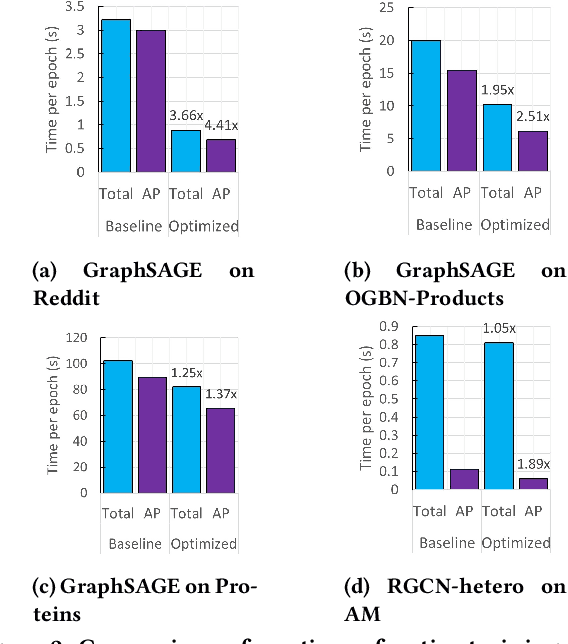

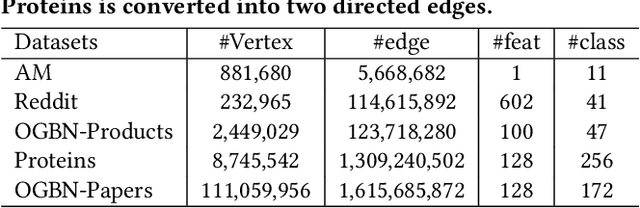

Abstract:Full-batch training on Graph Neural Networks (GNN) to learn the structure of large graphs is a critical problem that needs to scale to hundreds of compute nodes to be feasible. It is challenging due to large memory capacity and bandwidth requirements on a single compute node and high communication volumes across multiple nodes. In this paper, we present DistGNN, which optimizes the well-known Deep Graph Library (DGL) for full-batch training on CPU clusters via an efficient shared memory implementation, communication reduction using a minimum vertex-cut graph partitioning algorithm and communication avoidance using a family of delayed-update algorithms. Our results on four common GNN benchmark datasets (Reddit, OGB-Products, OGB-Papers and Proteins) show up to 3.7x speed-up using a single CPU socket and up to 97x speed-up using 128 CPU sockets, respectively, over baseline DGL implementations running on a single CPU socket.

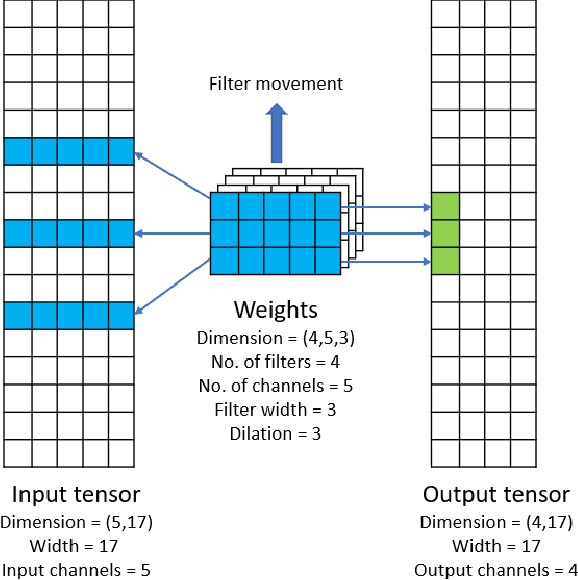

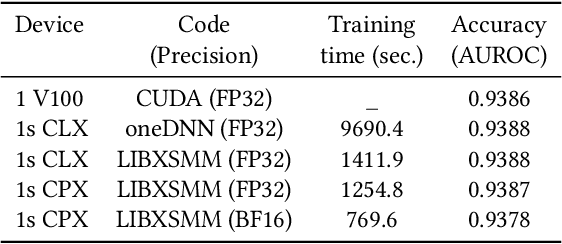

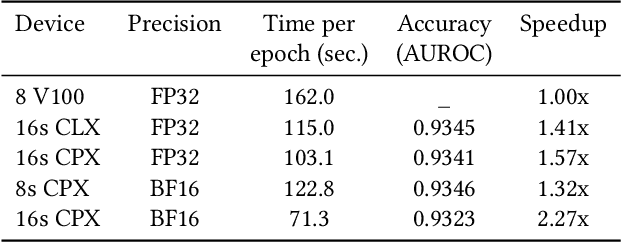

Efficient and Generic 1D Dilated Convolution Layer for Deep Learning

Apr 16, 2021

Abstract:Convolutional neural networks (CNNs) have found many applications in tasks involving two-dimensional (2D) data, such as image classification and image processing. Therefore, 2D convolution layers have been heavily optimized on CPUs and GPUs. However, in many applications, for example genomics and speech recognition, the data can be one-dimensional (1D). Such applications can benefit from optimized 1D convolution layers. In this work, we introduce our efficient implementation of a generic 1D convolution layer covering a wide range of parameters. It is optimized for x86 CPU architectures, in particular, for architectures containing Intel AVX-512 and AVX-512 BFloat16 instructions. We use the LIBXSMM library's batch-reduce General Matrix Multiplication (BRGEMM) kernel for FP32 and BFloat16 precision. We demonstrate that our implementation can achieve up to 80% efficiency on Intel Xeon Cascade Lake and Cooper Lake CPUs. Additionally, we show the generalization capability of our BRGEMM based approach by achieving high efficiency across a range of parameters. We consistently achieve higher efficiency than the 1D convolution layer with Intel oneDNN library backend for varying input tensor widths, filter widths, number of channels, filters, and dilation parameters. Finally, we demonstrate the performance of our optimized 1D convolution layer by utilizing it in end-to-end neural network training with real genomics datasets and achieve up to 6.86x speedup over the oneDNN library-based implementation on Cascade Lake CPUs. We also demonstrate the scaling with 16 sockets of Cascade/Cooper Lake CPUs and achieve significant speedup over eight V100 GPUs using a similar power envelope. In the end-to-end training, we get a speedup of 1.41x on Cascade Lake with FP32, 1.57x on Cooper Lake with FP32, and 2.27x on Cooper Lake with BFloat16 over eight V100 GPUs with FP32.
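One way to see why a batch-reduce GEMM fits this layer: a dilated 1D convolution is a sum, over filter taps, of small matrix products between that tap's weights and a shifted window of the input. The sketch below shows that formulation in plain Python with nested lists. It does not use the LIBXSMM API; the tensor layouts (`x` as channels x width, `w` as taps x filters x channels) and the function names are assumptions, and padding and stride are omitted.

```python
def matmul_acc(out, a, b):
    """Accumulate out += a @ b for nested-list matrices."""
    for i, row in enumerate(a):
        for k, aik in enumerate(row):
            for j, bkj in enumerate(b[k]):
                out[i][j] += aik * bkj


def dilated_conv1d_brgemm(x, w, dilation):
    """x: C x W input, w: S x K x C filter taps. Returns a K x Q output."""
    width = len(x[0])
    taps, filters = len(w), len(w[0])
    q = width - (taps - 1) * dilation  # output width without padding
    out = [[0.0] * q for _ in range(filters)]
    for s in range(taps):
        off = s * dilation
        # Tap s reads the input shifted by s * dilation positions.
        window = [row[off:off + q] for row in x]
        # One GEMM per tap, all reduced into the same output block.
        matmul_acc(out, w[s], window)
    return out
```

For example, with two input channels, one filter, two taps and dilation 2, `dilated_conv1d_brgemm([[1, 2, 3, 4, 5], [0, 1, 0, 1, 0]], [[[1, 1]], [[2, 0]]], 2)` returns `[[7.0, 11.0, 13.0]]`.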
