Abdeslam Boularias

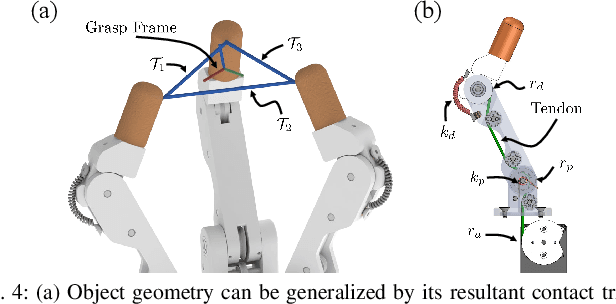

Learning Category-Level Manipulation Tasks from Point Clouds with Dynamic Graph CNNs

Sep 13, 2022

Abstract: This paper presents a new technique for learning category-level manipulation from raw RGB-D videos of task demonstrations, with no manual labels or annotations. Category-level learning aims to acquire skills that can be generalized to new objects, with geometries and textures that differ from those of the objects used in the demonstrations. We address this problem by first viewing both grasping and manipulation as special cases of tool use, where a tool object is moved to a sequence of key-poses defined in a frame of reference of a target object. Tool and target objects, along with their key-poses, are predicted using a dynamic graph convolutional neural network that takes as input an automatically segmented depth and color image of the entire scene. Empirical results on object manipulation tasks with a real robotic arm show that the proposed network can efficiently learn from real visual demonstrations to perform the tasks on novel objects within the same category, and outperforms alternative approaches.

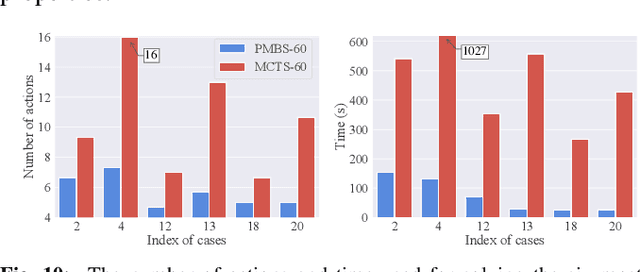

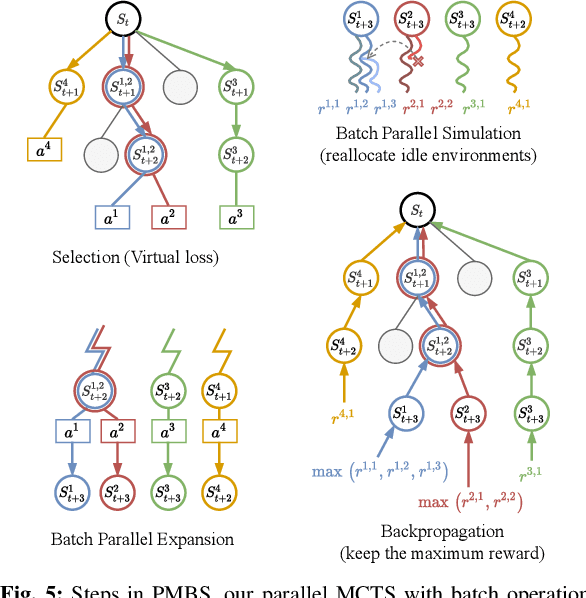

Parallel Monte Carlo Tree Search with Batched Rigid-body Simulations for Speeding up Long-Horizon Episodic Robot Planning

Jul 14, 2022

Abstract: We propose a novel Parallel Monte Carlo tree search with Batched Simulations (PMBS) algorithm for accelerating long-horizon, episodic robotic planning tasks. Monte Carlo tree search (MCTS) is an effective heuristic search algorithm for solving episodic decision-making problems whose underlying search spaces are expansive. Leveraging a GPU-based large-scale simulator, PMBS introduces massive parallelism into MCTS for solving planning tasks through the batched execution of a large number of concurrent simulations, which allows for more efficient and accurate evaluations of the expected cost-to-go over large action spaces. When applied to the challenging manipulation tasks of object retrieval from clutter, PMBS achieves a speedup of over $30\times$ with an improved solution quality, in comparison to a serial MCTS implementation. We show that PMBS can be directly applied to real robot hardware with negligible sim-to-real differences. Supplementary material, including video, can be found at https://github.com/arc-l/pmbs.
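
As a rough illustration of the selection step that MCTS relies on, the sketch below uses the standard UCB1 rule together with a hypothetical `batched_update` helper that folds the returns of many simulations into one node at once. It is not the PMBS implementation: the function names, the reward convention (higher is better, whereas the abstract speaks of cost-to-go), and the exploration constant are all assumptions made for illustration.

```python
import math


def ucb1_select(children, total_visits, c=1.4):
    """Return the index of the child with the highest UCB1 score.

    children: list of (visit_count, total_reward) pairs (hypothetical layout).
    An unvisited child is returned immediately so every action is tried once.
    """
    best_index, best_score = -1, -math.inf
    for i, (visits, total_reward) in enumerate(children):
        if visits == 0:
            return i
        mean_reward = total_reward / visits
        exploration = c * math.sqrt(math.log(total_visits) / visits)
        score = mean_reward + exploration
        if score > best_score:
            best_index, best_score = i, score
    return best_index


def batched_update(children, index, rewards):
    """Fold the returns of a whole batch of simulations into one child."""
    visits, total_reward = children[index]
    children[index] = (visits + len(rewards), total_reward + sum(rewards))


children = [(10, 5.0), (10, 8.0)]
chosen = ucb1_select(children, total_visits=20)
# Pretend a batch of four simulations was run from the chosen child.
batched_update(children, chosen, [1.0, 0.0, 1.0, 1.0])
```

In a serial implementation each simulation would update the statistics one return at a time; a batched update of this kind is one simple way to consume many concurrent simulation results in a single step.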

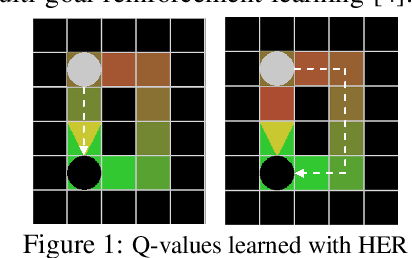

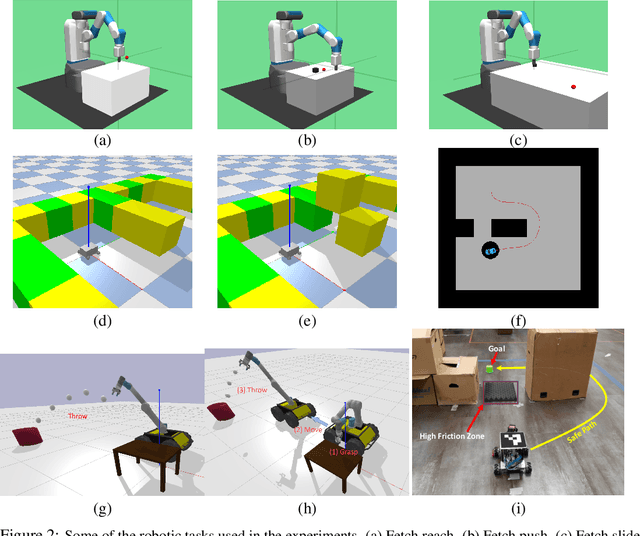

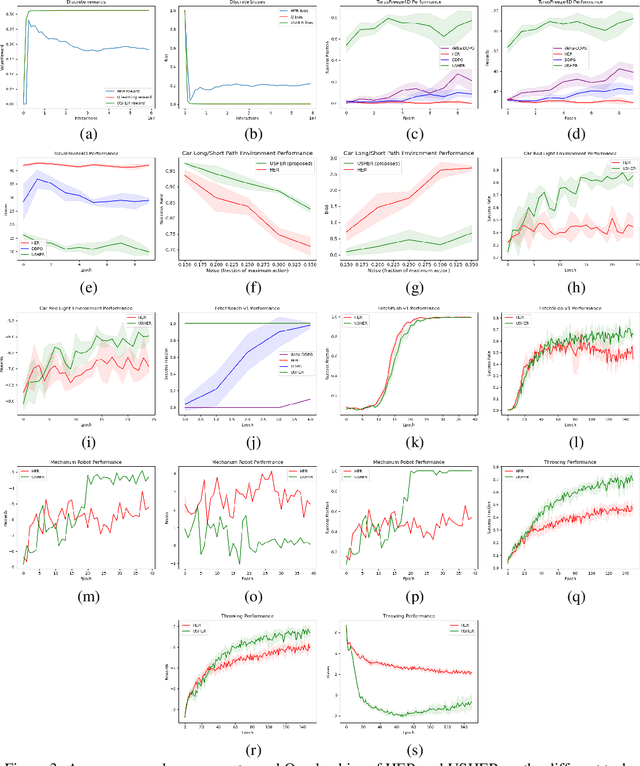

USHER: Unbiased Sampling for Hindsight Experience Replay

Jul 03, 2022

Abstract: Dealing with sparse rewards is a long-standing challenge in reinforcement learning (RL). Hindsight Experience Replay (HER) addresses this problem by reusing failed trajectories for one goal as successful trajectories for another. This allows for both a minimum density of reward and for generalization across multiple goals. However, this strategy is known to result in a biased value function, as the update rule underestimates the likelihood of bad outcomes in a stochastic environment. We propose an asymptotically unbiased importance-sampling-based algorithm to address this problem without sacrificing performance on deterministic environments. We show its effectiveness on a range of robotic systems, including challenging high-dimensional stochastic environments.
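
The relabeling idea behind HER can be sketched in a few lines. The example below covers only the basic relabeling step (treating the goal actually reached at the end of a trajectory as if it had been the intended goal); it does not implement the importance-sampling correction that USHER proposes. The tuple layout, the `sparse_reward` function, and the "final state" relabeling strategy are assumptions chosen for illustration.

```python
def sparse_reward(achieved_goal, goal):
    """Hypothetical sparse reward: 0 when the goal is reached, -1 otherwise."""
    return 0.0 if achieved_goal == goal else -1.0


def relabel_with_final_goal(trajectory, reward_fn):
    """Relabel a trajectory with the goal it actually achieved at the end.

    trajectory: list of (state, action, next_state, achieved_goal) tuples.
    Returns (state, action, next_state, goal, reward) tuples in which the
    goal is the achieved goal of the last transition.
    """
    hindsight_goal = trajectory[-1][3]
    return [
        (state, action, next_state, hindsight_goal,
         reward_fn(achieved_goal, hindsight_goal))
        for state, action, next_state, achieved_goal in trajectory
    ]


# A toy 1-D trajectory that aimed for position 5 but only reached position 2.
failed = [(0, "right", 1, 1), (1, "right", 2, 2)]
relabeled = relabel_with_final_goal(failed, sparse_reward)
```

Under the original goal every transition would receive reward -1; after relabeling, the last transition counts as a success. In a stochastic environment this is the step that can bias the value function, since only outcomes that happened to occur are ever treated as goals.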

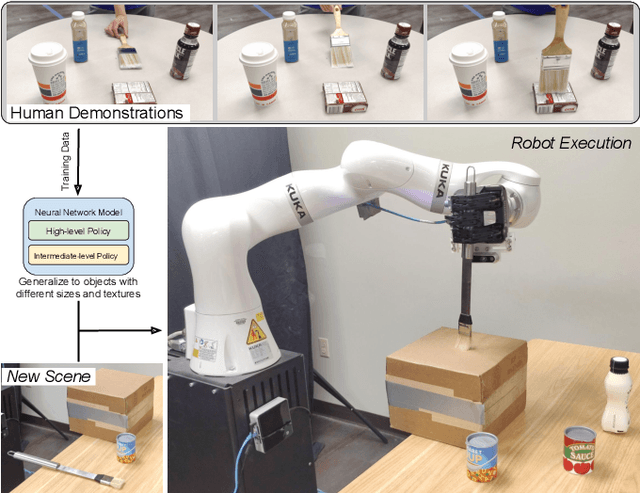

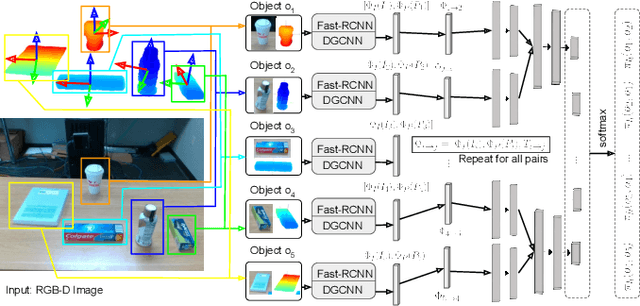

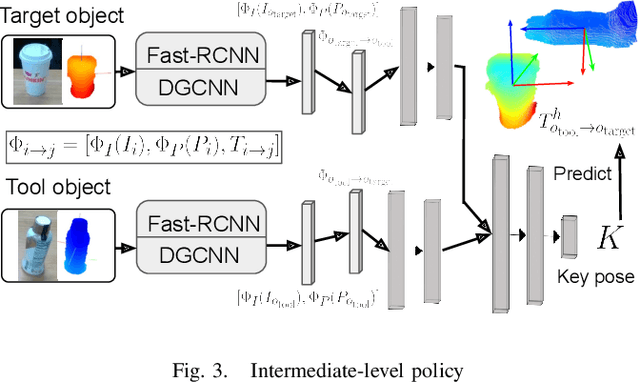

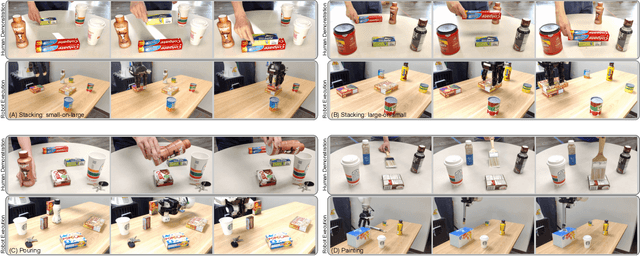

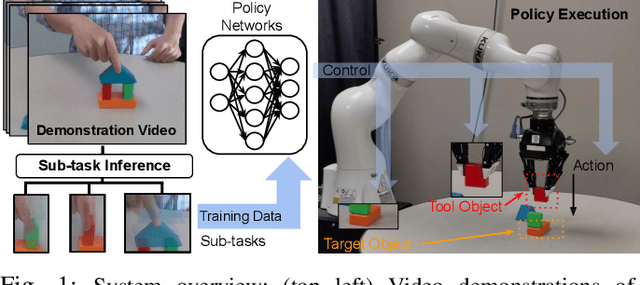

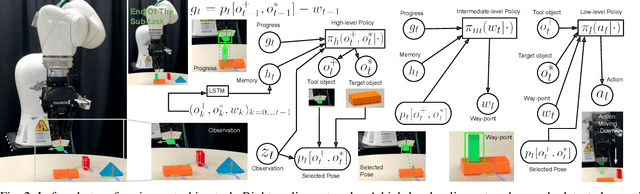

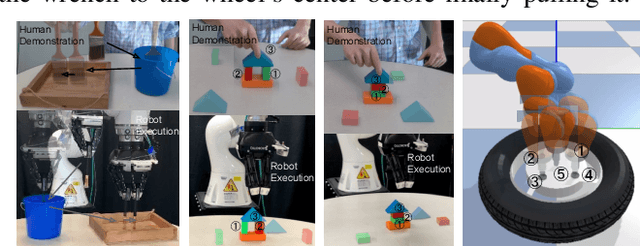

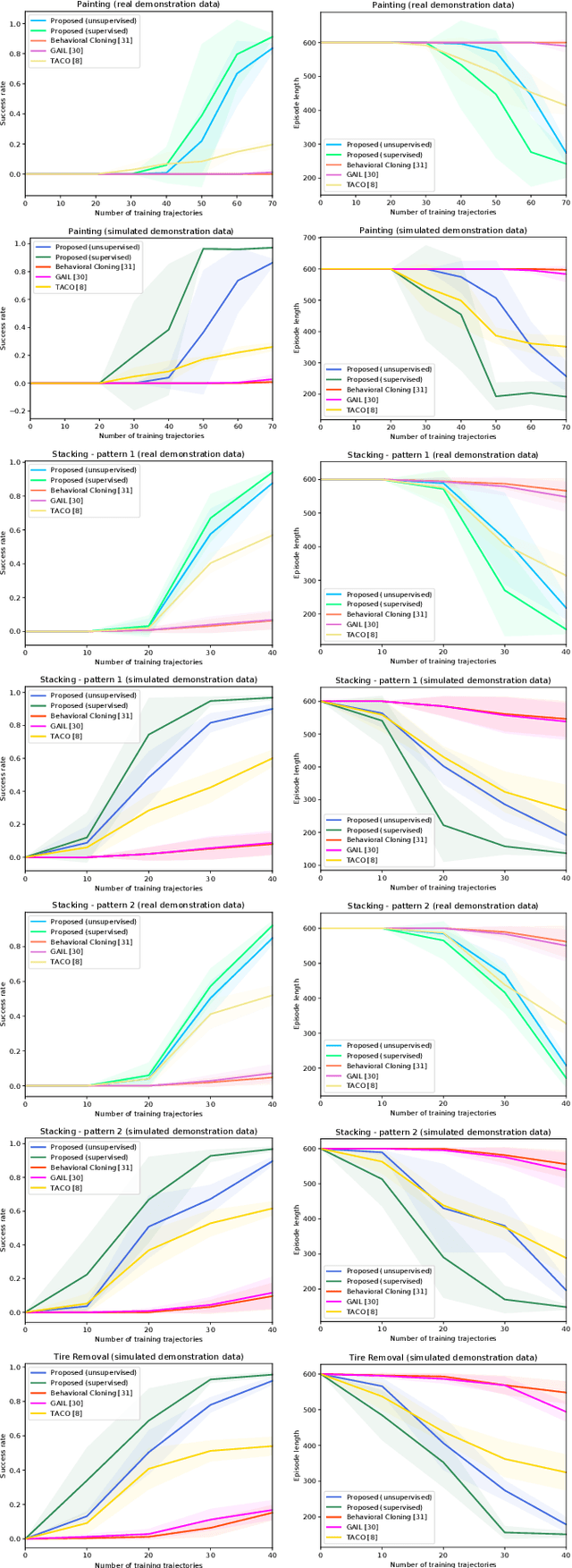

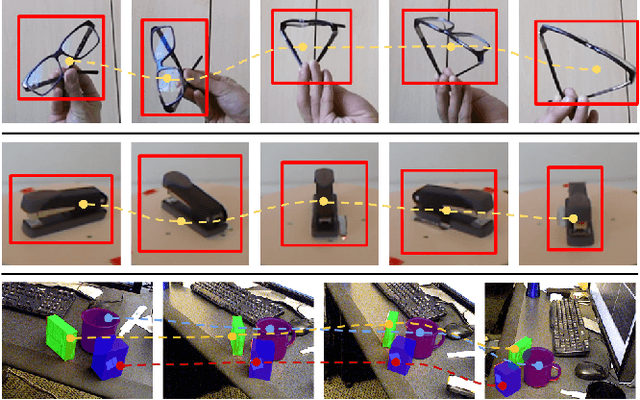

Learning Sensorimotor Primitives of Sequential Manipulation Tasks from Visual Demonstrations

Mar 08, 2022

Abstract: This work aims to learn how to perform complex robot manipulation tasks that are composed of several, consecutively executed low-level sub-tasks, given as input a few visual demonstrations of the tasks performed by a person. The sub-tasks consist of moving the robot's end-effector until it reaches a sub-goal region in the task space, performing an action, and triggering the next sub-task when a pre-condition is met. Most prior work in this domain has been concerned with learning only low-level tasks, such as hitting a ball or reaching an object and grasping it. This paper describes a new neural network-based framework for simultaneously learning low-level policies as well as high-level policies, such as deciding which object to pick next or where to place it relative to other objects in the scene. A key feature of the proposed approach is that the policies are learned directly from raw videos of task demonstrations, without any manual annotation or post-processing of the data. Empirical results on object manipulation tasks with a robotic arm show that the proposed network can efficiently learn from real visual demonstrations to perform the tasks, and outperforms popular imitation learning algorithms.

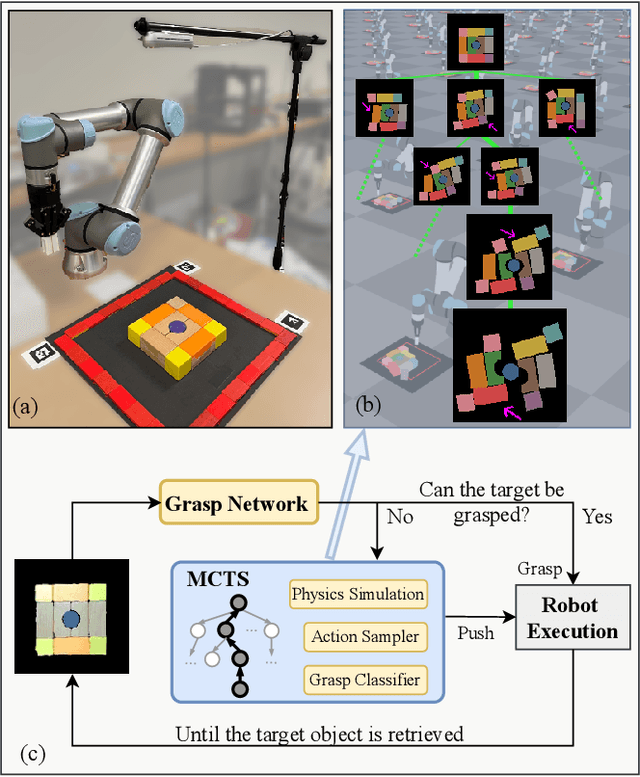

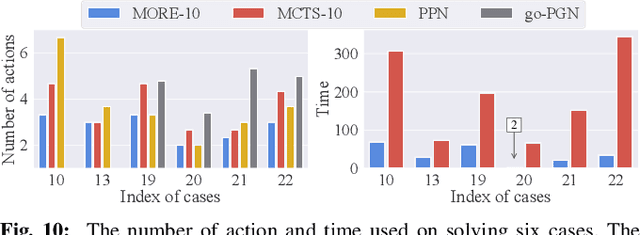

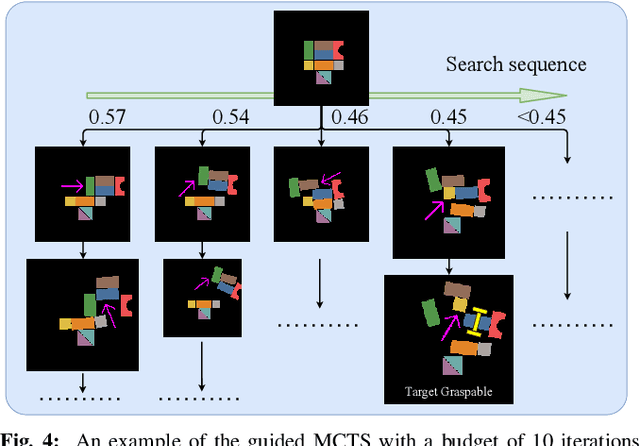

Self-Supervised Monte Carlo Tree Search Learning for Object Retrieval in Clutter

Feb 03, 2022

Abstract: In this study, working with the task of object retrieval in clutter, we have developed a robot learning framework in which Monte Carlo Tree Search (MCTS) is first applied to enable a Deep Neural Network (DNN) to learn the intricate interactions between a robot arm and a complex scene containing many objects, allowing the DNN to partially clone the behavior of MCTS. In turn, the trained DNN is integrated into MCTS to help guide its search effort. We call this approach Monte Carlo tree search and learning for Object REtrieval (MORE), which delivers significant computational efficiency gains and added solution optimality. MORE is a self-supervised robotics framework/pipeline capable of working in the real world that successfully embodies the System 2 $\to$ System 1 learning philosophy proposed by Kahneman, where learned knowledge, used properly, can help greatly speed up a time-consuming decision process over time. Videos and supplementary material can be found at https://github.com/arc-l/more

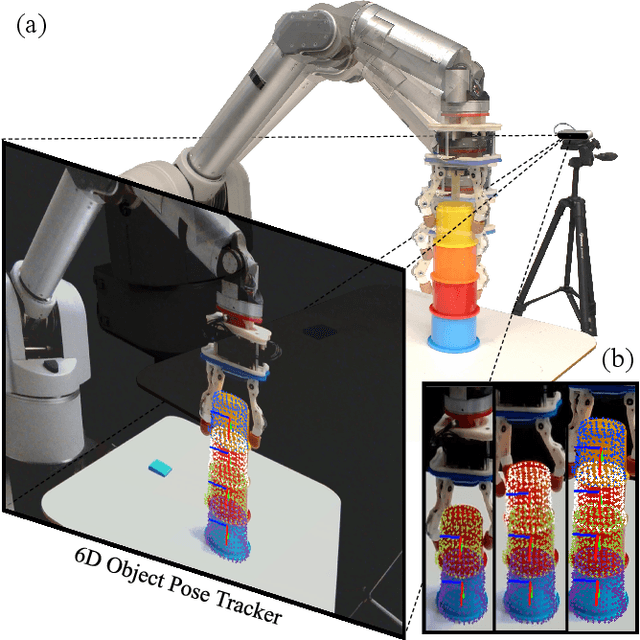

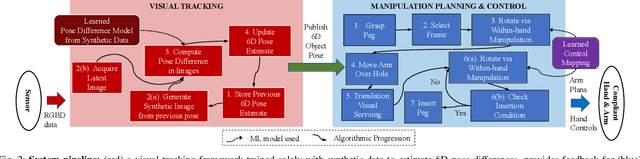

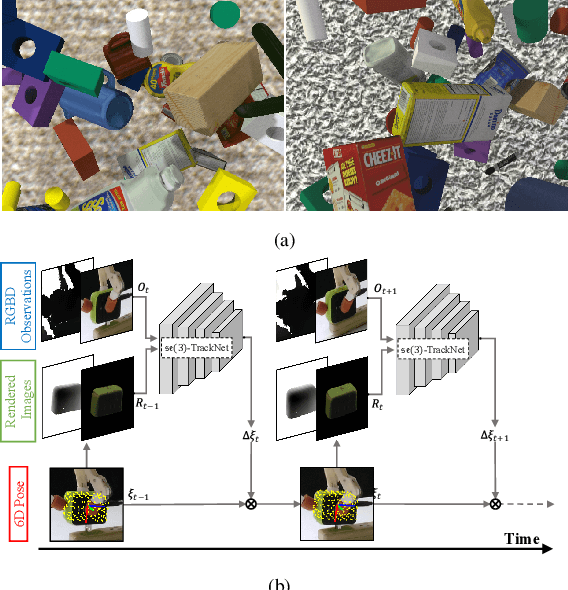

Vision-driven Compliant Manipulation for Reliable, High-Precision Assembly Tasks

Jun 26, 2021

Abstract: Highly constrained manipulation tasks continue to be challenging for autonomous robots as they require high levels of precision, typically less than 1 mm, which is often incompatible with what can be achieved by traditional perception systems. This paper demonstrates that the combination of state-of-the-art object tracking with passively adaptive mechanical hardware can be leveraged to complete precision manipulation tasks with tight, industrially-relevant tolerances (0.25 mm). The proposed control method closes the loop through vision by tracking the relative 6D pose of objects in the relevant workspace. It adjusts the control reference of both the compliant manipulator and the hand to complete object insertion tasks via within-hand manipulation. Contrary to previous efforts for insertion, our method does not require expensive force sensors, precision manipulators, or time-consuming, online learning, which is data hungry. Instead, this effort leverages mechanical compliance and utilizes an object-agnostic manipulation model of the hand learned offline, off-the-shelf motion planning, and an RGBD-based object tracker trained solely with synthetic data. These features allow the proposed system to easily generalize and transfer to new tasks and environments. This paper describes in detail the system components and showcases its efficacy with extensive experiments involving tight-tolerance peg-in-hole insertion tasks of various geometries as well as open-world constrained placement tasks.

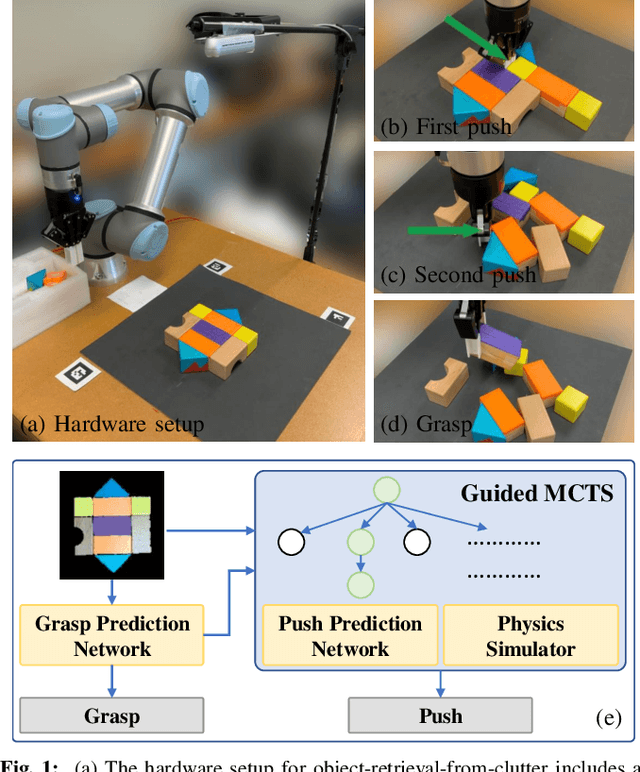

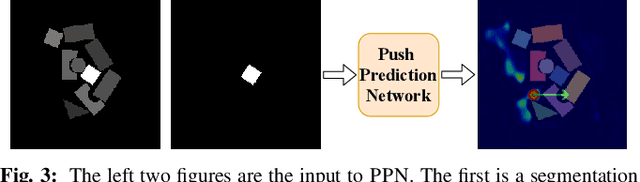

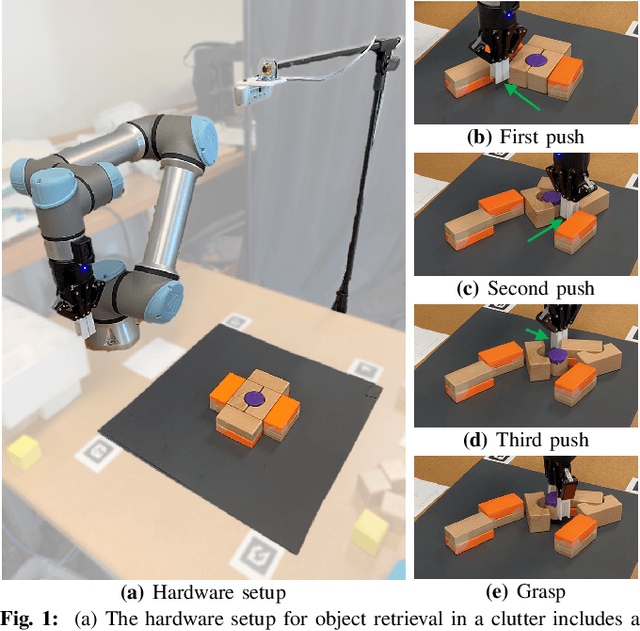

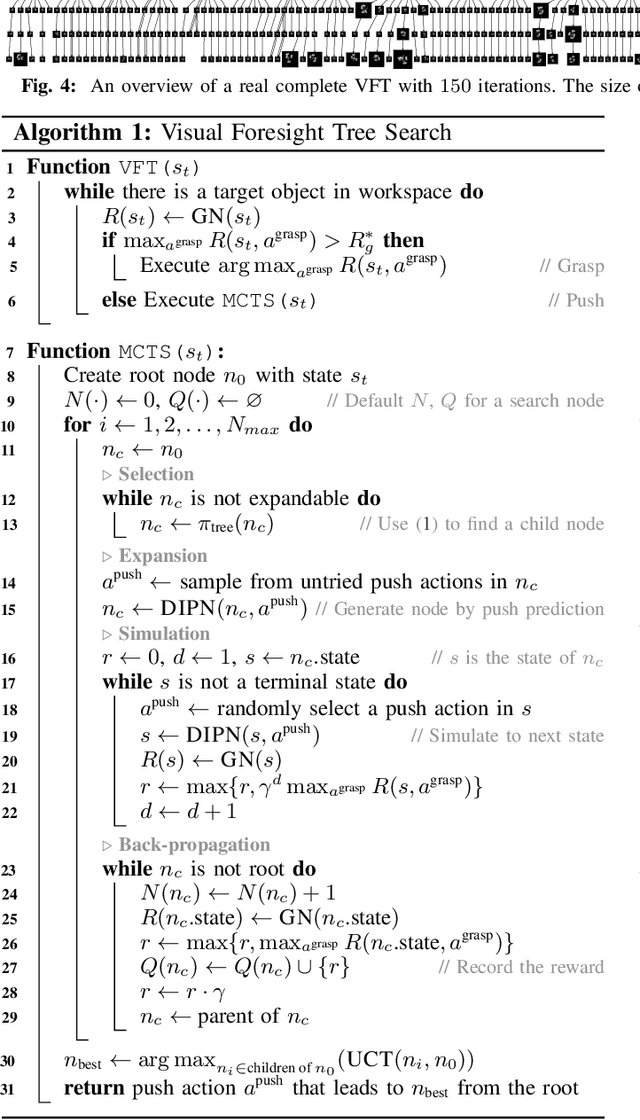

Visual Foresight Tree for Object Retrieval from Clutter with Nonprehensile Rearrangement

May 06, 2021

Abstract: This paper considers the problem of retrieving an object from a set of tightly packed objects by using a combination of robotic pushing and grasping actions. Object retrieval from confined spaces that contain clutter is an important skill for robots in order to effectively operate in households and everyday environments. The proposed solution, Visual Foresight Tree (VFT), cleverly rearranges the clutter surrounding the target object so that it can be grasped easily. Rearrangement with nested non-prehensile actions is challenging as it requires predicting complex object interactions in a combinatorially large configuration space of multiple objects. We first show that a deep neural network can be trained to accurately predict the poses of the packed objects when the robot pushes one of them. The predictive network provides visual foresight and is used in a tree search as a state transition function in the space of scene images. The tree search returns a sequence of consecutive push actions that result in the best arrangement of the clutter for grasping the target object. Experiments in simulation and using a real robot and objects show that the proposed approach outperforms model-free techniques as well as model-based myopic methods both in terms of success rates and the number of executed actions, on several challenging tasks. A video introducing VFT, with robot experiments, is accessible at https://youtu.be/7cL-hmgvyec. Full source code will also be made available upon publication of this manuscript.

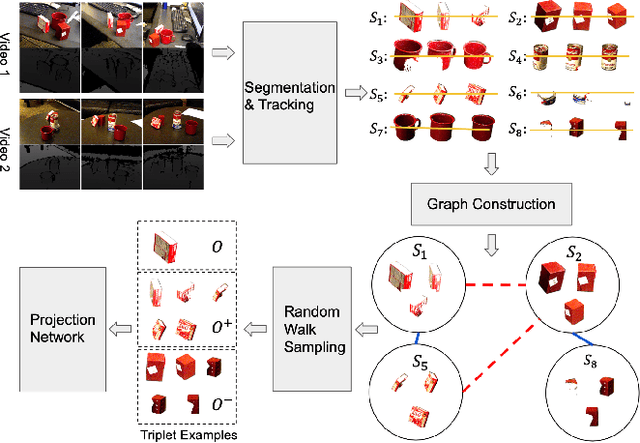

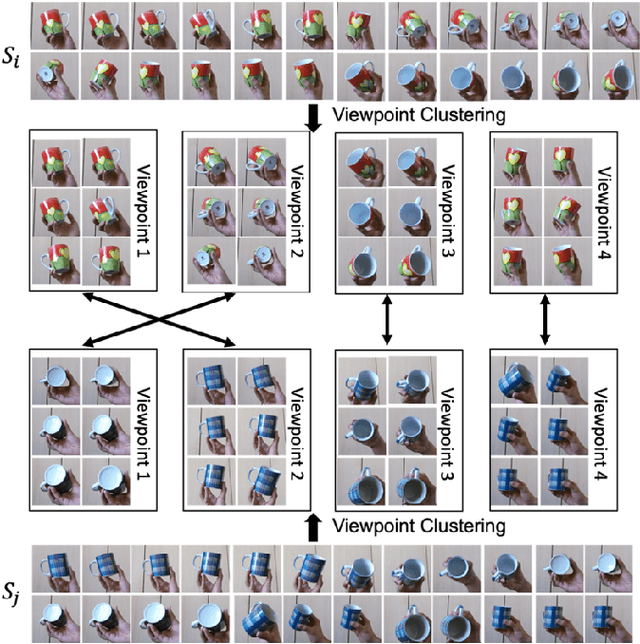

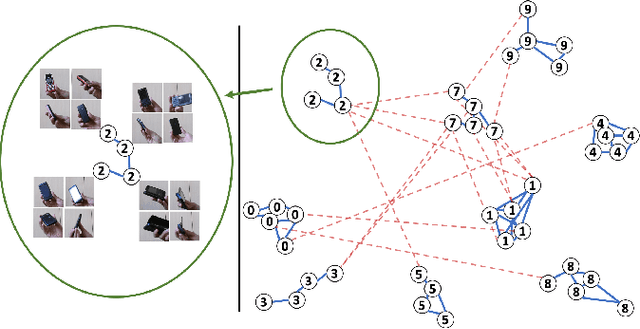

A Self-supervised Learning System for Object Detection in Videos Using Random Walks on Graphs

Nov 10, 2020

Abstract: This paper presents a new self-supervised system for learning to detect novel and previously unseen categories of objects in images. The proposed system receives as input several unlabeled videos of scenes containing various objects. The frames of the videos are segmented into objects using depth information, and the segments are tracked along each video. The system then constructs a weighted graph that connects sequences based on the similarities between the objects that they contain. The similarity between two sequences of objects is measured by using generic visual features, after automatically re-arranging the frames in the two sequences to align the viewpoints of the objects. The graph is used to sample triplets of similar and dissimilar examples by performing random walks. The triplet examples are finally used to train a siamese neural network that projects the generic visual features into a low-dimensional manifold. Experiments on three public datasets, YCB-Video, CORe50 and RGBD-Object, show that the projected low-dimensional features improve the accuracy of clustering unknown objects into novel categories, and outperform several recent unsupervised clustering techniques.
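
One plausible way to turn a weighted similarity graph into triplets with random walks is sketched below. The abstract does not specify the sampling procedure, so everything here is an assumption: the dictionary-based graph layout, the walk length, the rule that the positive is the node where a weighted walk ends, and the rule that the negative is a node that is neither the anchor, the positive, nor a direct neighbor of the anchor.

```python
import random


def random_walk(graph, start, steps, rng):
    """Walk `steps` edges from `start`, choosing neighbors proportionally to weight."""
    node = start
    for _ in range(steps):
        neighbors = list(graph[node])
        if not neighbors:
            break
        weights = [graph[node][n] for n in neighbors]
        node = rng.choices(neighbors, weights=weights, k=1)[0]
    return node


def sample_triplet(graph, anchor, steps, rng, max_tries=20):
    """Return (anchor, positive, negative), or None if no valid triplet is found."""
    for _ in range(max_tries):
        positive = random_walk(graph, anchor, steps, rng)
        if positive == anchor:
            continue  # the walk came back to where it started; try again
        candidates = [
            n for n in graph
            if n != anchor and n != positive and n not in graph[anchor]
        ]
        if candidates:
            return anchor, positive, rng.choice(candidates)
    return None


# Toy graph: sequences a, b, c look alike; d and e look alike (weights are made up).
graph = {
    "a": {"b": 0.9, "c": 0.8},
    "b": {"a": 0.9, "c": 0.7},
    "c": {"a": 0.8, "b": 0.7},
    "d": {"e": 0.9},
    "e": {"d": 0.9},
}
triplet = sample_triplet(graph, "a", steps=1, rng=random.Random(0))
```

Triplets of this form (anchor, similar example, dissimilar example) are the usual input to a triplet loss when training a siamese network.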

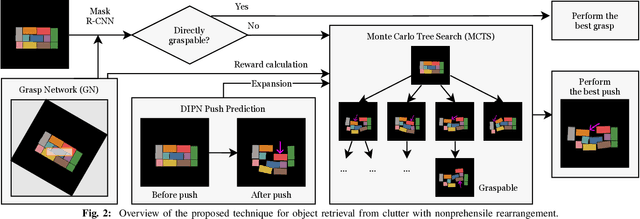

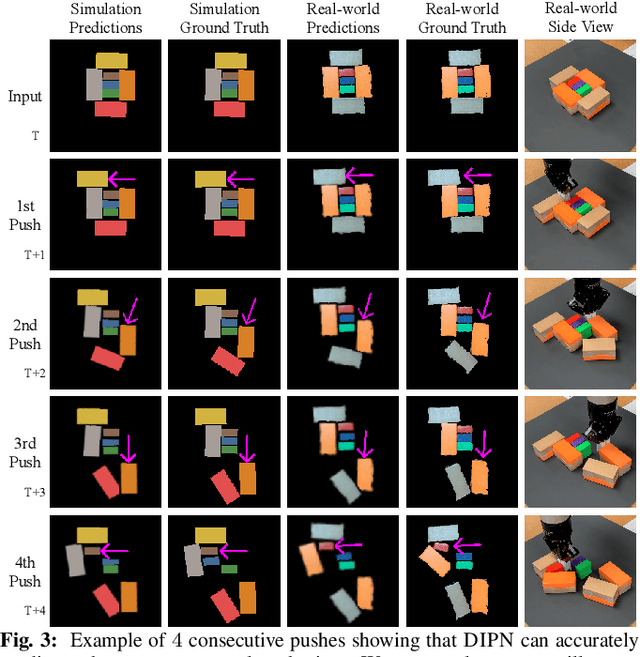

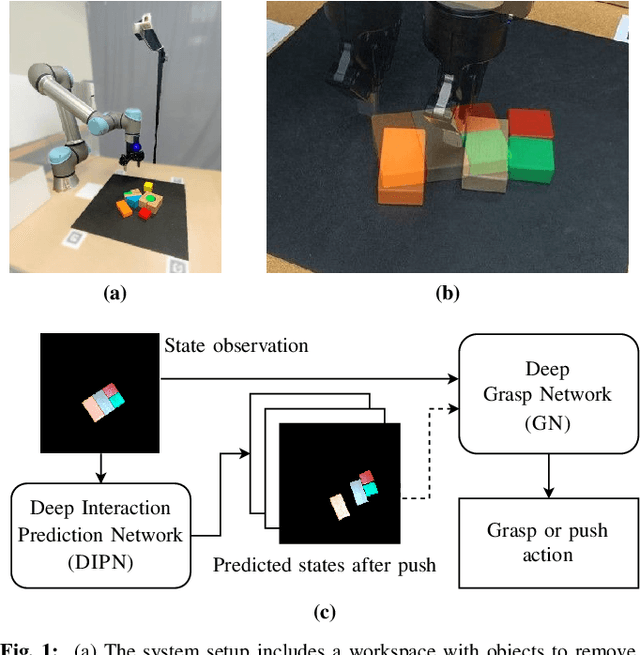

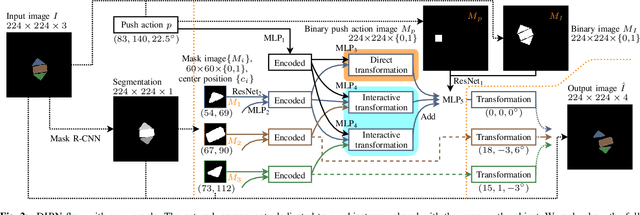

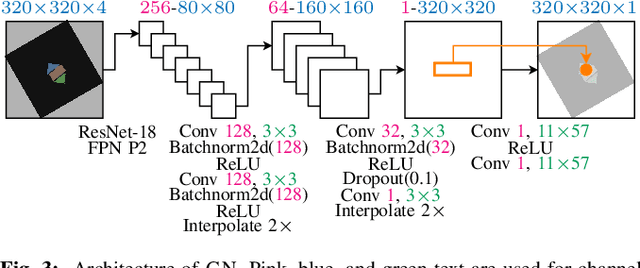

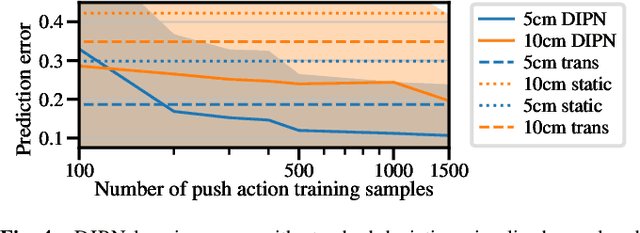

DIPN: Deep Interaction Prediction Network with Application to Clutter Removal

Nov 09, 2020

Abstract: We propose a Deep Interaction Prediction Network (DIPN) for learning to predict complex interactions that ensue as a robot end-effector pushes multiple objects, whose physical properties, including size, shape, mass, and friction coefficients may be unknown a priori. DIPN "imagines" the effect of a push action and generates an accurate synthetic image of the predicted outcome. DIPN is shown to be sample efficient when trained in simulation or with a real robotic system. The high accuracy of DIPN allows direct integration with a grasp network, yielding a robotic manipulation system capable of executing challenging clutter removal tasks while being trained in a fully self-supervised manner. The overall network demonstrates intelligent behavior in selecting proper actions between push and grasp for completing clutter removal tasks and significantly outperforms the previous state-of-the-art. Remarkably, DIPN achieves even better performance on the real robotic hardware system than in simulation. Selected evaluation video clips, code, and experiments log are available at https://github.com/rutgers-arc-lab/dipn.

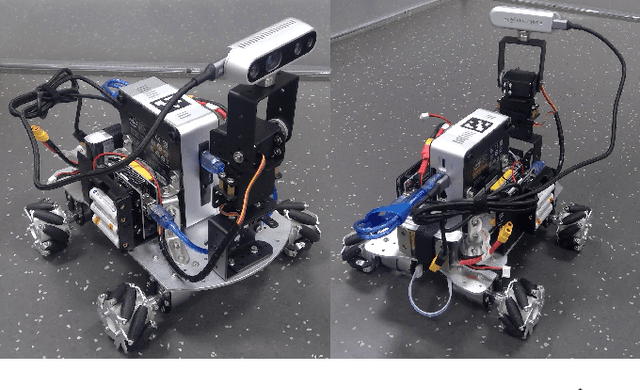

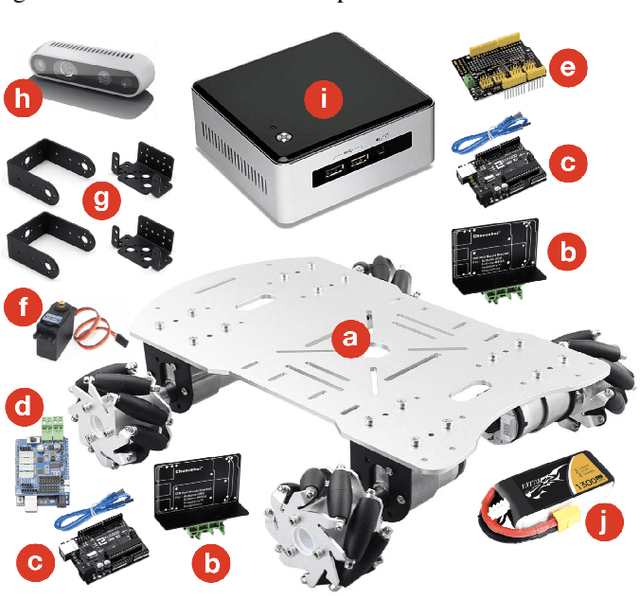

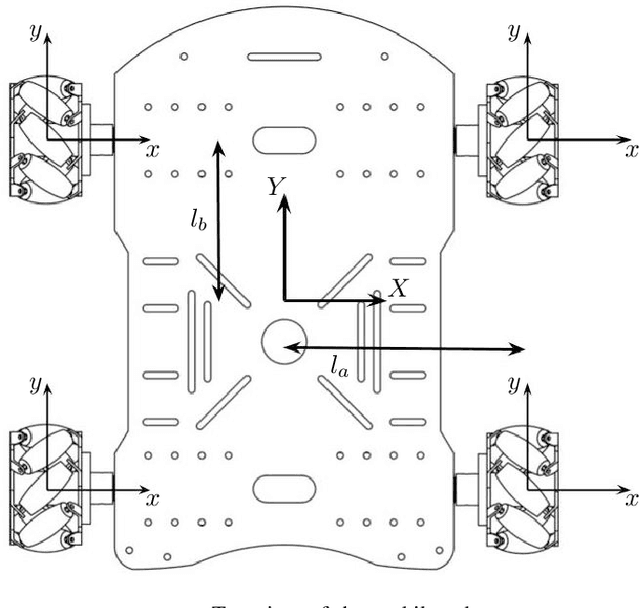

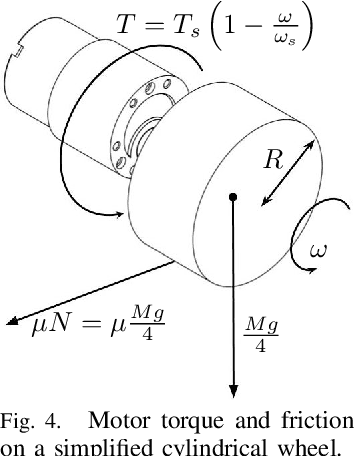

Model Identification and Control of a Low-Cost Wheeled Mobile Robot Using Differentiable Physics

Sep 24, 2020

Abstract: We present the design of a low-cost wheeled mobile robot, and an analytical model for predicting its motion under the influence of motor torques and friction forces. Using our proposed model, we show how to analytically compute the gradient of an appropriate loss function that measures the deviation between predicted motion trajectories and real-world trajectories, which are estimated using AprilTags and an overhead camera. These analytical gradients allow us to automatically infer the unknown friction coefficients, by minimizing the loss function using gradient descent. Motion trajectories that are predicted by the optimized model are in excellent agreement with their real-world counterparts. Experiments show that our proposed approach is computationally superior to existing black-box system identification methods and other data-driven techniques, and also requires very few real-world samples for accurate trajectory prediction. The proposed approach combines the data efficiency of analytical models based on first principles, with the flexibility of data-driven methods, which makes it appropriate for low-cost robots. Using the learned model and our gradient-based optimization approach, we show how to automatically compute motor control signals for driving the robot along pre-specified curves.
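
The idea of inferring a friction coefficient by gradient descent on an analytically differentiated trajectory loss can be illustrated with a much simpler system than the robot described above. The sketch below assumes a block sliding in one dimension with Coulomb friction, so that its position before stopping is $x(t) = v_0 t - \tfrac{1}{2}\mu g t^2$. The model, the synthetic "observations", the learning rate, and all names are assumptions for illustration and are not taken from the paper.

```python
G = 9.81  # gravitational acceleration in m/s^2


def predict_position(mu, v0, t):
    """Position of a sliding block at time t (valid while it is still moving)."""
    return v0 * t - 0.5 * mu * G * t * t


def loss_and_gradient(mu, v0, times, observed):
    """Mean squared trajectory error and its analytical derivative w.r.t. mu."""
    n = len(times)
    loss, grad = 0.0, 0.0
    for t, x_obs in zip(times, observed):
        error = predict_position(mu, v0, t) - x_obs
        loss += error * error / n
        # d(prediction)/d(mu) = -0.5 * G * t^2, applied through the chain rule.
        grad += 2.0 * error * (-0.5 * G * t * t) / n
    return loss, grad


def identify_friction(v0, times, observed, mu_init=0.0, lr=0.5, steps=200):
    """Plain gradient descent on the friction coefficient."""
    mu = mu_init
    for _ in range(steps):
        _, grad = loss_and_gradient(mu, v0, times, observed)
        mu -= lr * grad
    return mu


# Synthetic observations generated with a "true" coefficient of 0.3.
times = [0.1, 0.2, 0.3, 0.4, 0.5]
observed = [predict_position(0.3, 2.0, t) for t in times]
mu_hat = identify_friction(2.0, times, observed)
```

Because the toy model is linear in the friction coefficient, the loss is quadratic and gradient descent converges quickly from only five samples; a model with several interacting friction terms would generally need more care in choosing the step size.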
