Jersey Number Recognition

Papers and Code

From Broadcast to Minimap: Achieving State-of-the-Art SoccerNet Game State Reconstruction

Apr 08, 2025

Game State Reconstruction (GSR), a critical task in Sports Video Understanding, involves precise tracking and localization of all individuals on the football field (players, goalkeepers, referees, and others) in real-world coordinates. This capability enables coaches and analysts to derive actionable insights into player movements, team formations, and game dynamics, ultimately optimizing training strategies and enhancing competitive advantage. Achieving accurate GSR using a single-camera setup is highly challenging due to frequent camera movements, occlusions, and dynamic scene content. In this work, we present a robust end-to-end pipeline for tracking players across an entire match using a single-camera setup. Our solution integrates a fine-tuned YOLOv5m for object detection, a SegFormer-based camera parameter estimator, and a DeepSORT-based tracking framework enhanced with re-identification, orientation prediction, and jersey number recognition. By ensuring both spatial accuracy and temporal consistency, our method delivers state-of-the-art game state reconstruction, securing first place in the SoccerNet Game State Reconstruction Challenge 2024 and significantly outperforming competing methods.

A General Framework for Jersey Number Recognition in Sports Video

May 22, 2024

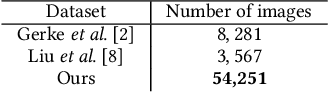

Jersey number recognition is an important task in sports video analysis, partly due to its importance for long-term player tracking. It can be viewed as a variant of scene text recognition. However, there is a lack of published attempts to apply scene text recognition models on jersey number data. Here we introduce a novel public jersey number recognition dataset for hockey and study how scene text recognition methods can be adapted to this problem. We address issues of occlusions and assess the degree to which training on one sport (hockey) can be generalized to another (soccer). For the latter, we also consider how jersey number recognition at the single-image level can be aggregated across frames to yield tracklet-level jersey number labels. We demonstrate high performance on image- and tracklet-level tasks, achieving 91.4% accuracy for hockey images and 87.4% for soccer tracklets. Code, models, and data are available at https://github.com/mkoshkina/jersey-number-pipeline.
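The abstract above mentions aggregating single-image predictions across frames into a tracklet-level label. The following is only a minimal sketch of one plausible aggregation, a confidence-weighted vote; it is not the authors' method. The function name `aggregate_tracklet`, the `(label, confidence)` input format, and the `min_confidence` threshold are all assumptions made for illustration.

```python
from collections import defaultdict


def aggregate_tracklet(frame_predictions, min_confidence=0.5):
    """Pick one jersey number for a tracklet from per-frame predictions.

    frame_predictions: iterable of (label, confidence) pairs, where label is
    a string such as "17" or None when no number was read (assumed format).
    Frames below min_confidence are ignored; the remaining confidences are
    summed per label and the label with the largest total wins.
    Returns None when no frame passes the threshold.
    """
    scores = defaultdict(float)
    for label, confidence in frame_predictions:
        if label is not None and confidence >= min_confidence:
            scores[label] += confidence
    if not scores:
        return None
    return max(scores, key=scores.get)


# Hypothetical per-frame outputs for one player tracklet.
frames = [("17", 0.9), ("11", 0.6), ("17", 0.8), (None, 0.0), ("7", 0.3)]
print(aggregate_tracklet(frames))  # prints 17
```

Summing confidences rather than counting votes lets a few clear frames outweigh many blurry ones, which matters when the number is legible in only a small share of the tracklet.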

Generalized Jersey Number Recognition Using Multi-task Learning With Orientation-guided Weight Refinement

Jun 03, 2024

Jersey number recognition (JNR) has always been an important task in sports analytics. Improving recognition accuracy remains an ongoing challenge because images are subject to blurring, occlusion, deformity, and low resolution. Recent research has addressed these problems using number localization and optical character recognition. Some approaches apply player identification schemes to image sequences, ignoring the impact of human body rotation angles on jersey digit identification. Accurately predicting the number of jersey digits by using a multi-task scheme to recognize each individual digit enables more robust results. Based on the above considerations, this paper proposes a multi-task learning method called the angle-digit refine scheme (ADRS), which combines human body orientation angles and digit number clues to recognize athletic jersey numbers. Based on our experimental results, our approach increases inference information, significantly improving prediction accuracy. Compared to state-of-the-art methods, which can only handle a single type of sport, the proposed method produces a more diverse and practical JNR application. The incorporation of diverse types of team sports such as soccer, football, basketball, volleyball, and baseball into our dataset contributes greatly to generalized JNR in sports analytics. Our accuracy achieves 64.07% on Top-1 and 89.97% on Top-2, with corresponding F1 scores of 67.46% and 90.64%, respectively.
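For readers unfamiliar with the Top-1 and Top-2 figures quoted above, the sketch below shows how top-k accuracy is conventionally computed from ranked predictions. The function name `top_k_accuracy` and the toy data are hypothetical and are not taken from the paper.

```python
def top_k_accuracy(ranked_predictions, targets, k):
    """Fraction of samples whose true label is among the first k guesses.

    ranked_predictions: one list per sample, ordered from most to least
    likely jersey number. targets: the true jersey number per sample.
    """
    if len(ranked_predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    if not targets:
        raise ValueError("at least one sample is required")
    hits = sum(
        1 for ranked, target in zip(ranked_predictions, targets)
        if target in ranked[:k]
    )
    return hits / len(targets)


# Toy example with three samples (made-up values).
ranked = [["23", "28"], ["7", "1"], ["10", "16"]]
truth = ["23", "1", "18"]
top1 = top_k_accuracy(ranked, truth, k=1)  # only the first sample is a hit
top2 = top_k_accuracy(ranked, truth, k=2)  # first and second samples are hits
```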

Jersey Number Recognition using Keyframe Identification from Low-Resolution Broadcast Videos

Sep 12, 2023

Player identification is a crucial component in vision-driven soccer analytics, enabling various downstream tasks such as player assessment, in-game analysis, and broadcast production. However, automatically detecting jersey numbers from player tracklets in videos presents challenges due to motion blur, low resolution, distortions, and occlusions. Existing methods, utilizing Spatial Transformer Networks, CNNs, and Vision Transformers, have shown success in image data but struggle with real-world video data, where jersey numbers are not visible in most of the frames. Hence, identifying frames that contain the jersey number is a key sub-problem to tackle. To address these issues, we propose a robust keyframe identification module that extracts frames containing essential high-level information about the jersey number. A spatio-temporal network is then employed to model spatial and temporal context and predict the probabilities of jersey numbers in the video. Additionally, we adopt a multi-task loss function to predict the probability distribution of each digit separately. Extensive evaluations on the SoccerNet dataset demonstrate that incorporating our proposed keyframe identification module results in a significant 37.81% and 37.70% increase in the accuracies of 2 different test sets with domain gaps. These results highlight the effectiveness and importance of our approach in tackling the challenges of automatic jersey number detection in sports videos.

SoccerNet 2023 Challenges Results

Sep 12, 2023

The SoccerNet 2023 challenges were the third annual video understanding challenges organized by the SoccerNet team. For this third edition, the challenges were composed of seven vision-based tasks split into three main themes. The first theme, broadcast video understanding, is composed of three high-level tasks related to describing events occurring in the video broadcasts: (1) action spotting, focusing on retrieving all timestamps related to global actions in soccer, (2) ball action spotting, focusing on retrieving all timestamps related to the soccer ball change of state, and (3) dense video captioning, focusing on describing the broadcast with natural language and anchored timestamps. The second theme, field understanding, relates to the single task of (4) camera calibration, focusing on retrieving the intrinsic and extrinsic camera parameters from images. The third and last theme, player understanding, is composed of three low-level tasks related to extracting information about the players: (5) re-identification, focusing on retrieving the same players across multiple views, (6) multiple object tracking, focusing on tracking players and the ball through unedited video streams, and (7) jersey number recognition, focusing on recognizing the jersey number of players from tracklets. Compared to the previous editions of the SoccerNet challenges, tasks (2), (3), and (7) are novel, including new annotations and data, task (4) was enhanced with more data and annotations, and task (6) now focuses on end-to-end approaches. More information on the tasks, challenges, and leaderboards is available at https://www.soccer-net.org. Baselines and development kits can be found at https://github.com/SoccerNet.

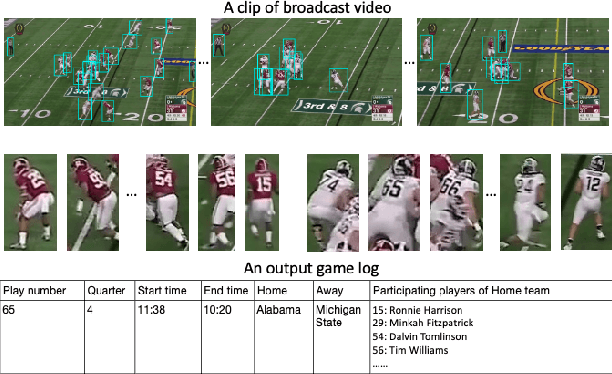

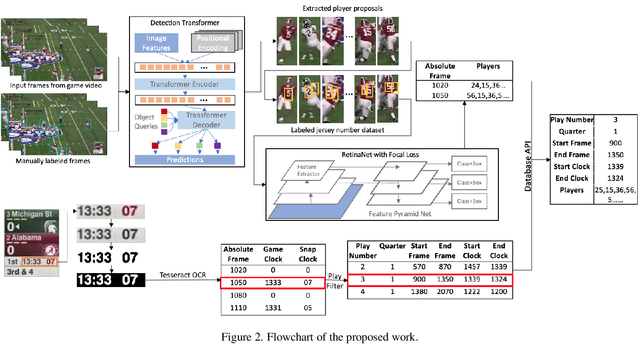

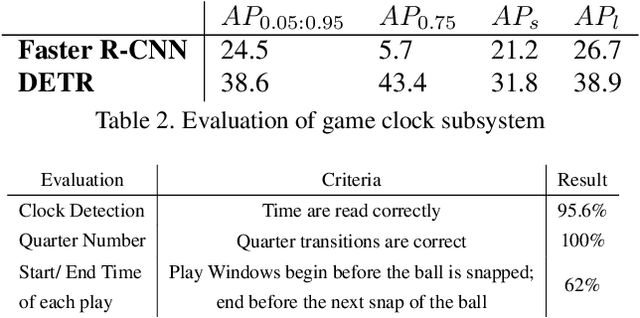

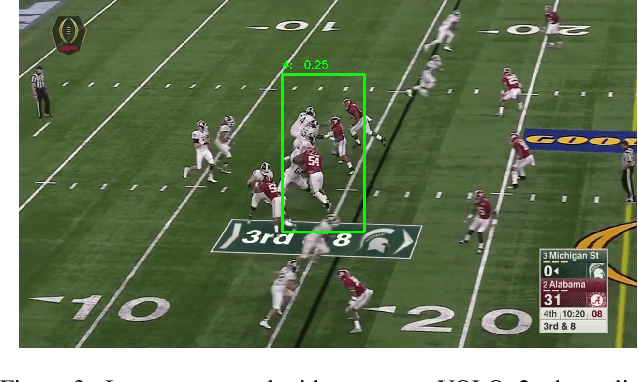

Deep Learning-based Automatic Player Identification and Logging in American Football Videos

Apr 26, 2022

American football games attract significant worldwide attention every year. Game analysis systems generate crucial information that can help analyze the games by providing fans and coaches with a convenient means to track and evaluate player performance. Identifying participating players in each play is also important for the video indexing of player participation per play. Processing football game video presents challenges for identifying players, especially their jersey numbers, such as crowded settings, distorted objects, and imbalanced data. In this work, we propose a deep learning-based football video analysis system to automatically track players and index their participation per play. It uses a multi-stage network design to highlight areas of interest and identify jersey number information with high accuracy. First, we utilize an object detection network, a detection transformer, to tackle the player detection problem in crowded contexts. Second, we identify players using jersey number recognition with a secondary convolutional neural network, then synchronize it with a game clock subsystem. Finally, the system outputs a complete log in a database for play indexing. We demonstrate the effectiveness and reliability of player identification and the logging system by analyzing the qualitative and quantitative results on football videos. The proposed system shows great potential for implementation in and analysis of football broadcast video.

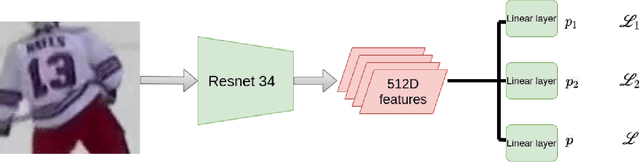

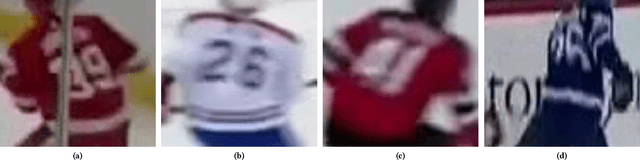

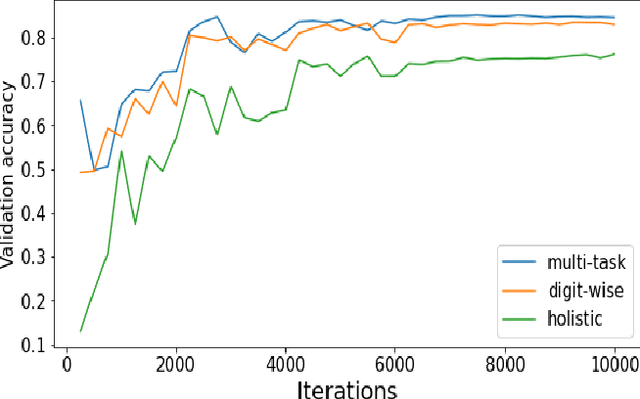

Multi-task learning for jersey number recognition in Ice Hockey

Aug 17, 2021

Identifying players in sports videos by recognizing their jersey numbers is a challenging task in computer vision. We have designed and implemented a multi-task learning network for jersey number recognition. To train the network, two output label representations are used: (1) holistic, which treats the entire jersey number as one class, and (2) digit-wise, which treats the two digits in a jersey number as two separate classes. The proposed network learns both holistic and digit-wise representations through a multi-task loss function. We determine the optimal weights to be assigned to the holistic and digit-wise losses through an ablation study. Experimental results demonstrate that the proposed multi-task learning network performs better than the constituent holistic and digit-wise single-task learning networks.
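The two label representations and the weighted multi-task loss described above can be illustrated with a small sketch. It rests on several assumptions that are not stated in the abstract: jersey numbers range over 0 to 99, each digit head has an extra "no digit" class (index 10) for one-digit numbers, the loss is cross-entropy, and the weights `w_holistic` and `w_digit` are placeholders rather than the values found in the paper's ablation study.

```python
import math

NO_DIGIT = 10  # assumed extra class meaning "second digit absent"


def encode_labels(number):
    """Return (holistic_class, (digit1_class, digit2_class)) for 0..99."""
    if not 0 <= number <= 99:
        raise ValueError("jersey number must be in 0..99")
    if number < 10:
        digits = (number, NO_DIGIT)
    else:
        digits = (number // 10, number % 10)
    return number, digits


def cross_entropy(probs, target):
    """Negative log-likelihood of the target class, clamped for stability."""
    return -math.log(max(probs[target], 1e-12))


def multitask_loss(holistic_probs, digit1_probs, digit2_probs, number,
                   w_holistic=0.5, w_digit=0.5):
    """Weighted sum of the holistic loss and the two digit-wise losses."""
    holistic, (d1, d2) = encode_labels(number)
    loss_holistic = cross_entropy(holistic_probs, holistic)
    loss_digits = cross_entropy(digit1_probs, d1) + cross_entropy(digit2_probs, d2)
    return w_holistic * loss_holistic + w_digit * loss_digits


print(encode_labels(23))  # prints (23, (2, 3))
print(encode_labels(7))   # prints (7, (7, 10))
```

In a real network the three probability vectors would come from separate output heads on a shared backbone; here they are plain lists so the arithmetic stays visible.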

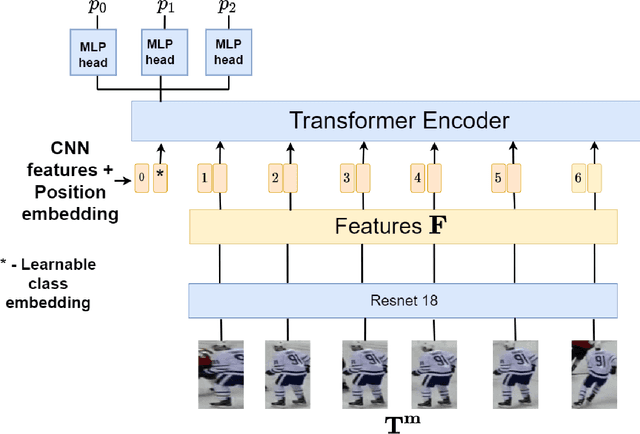

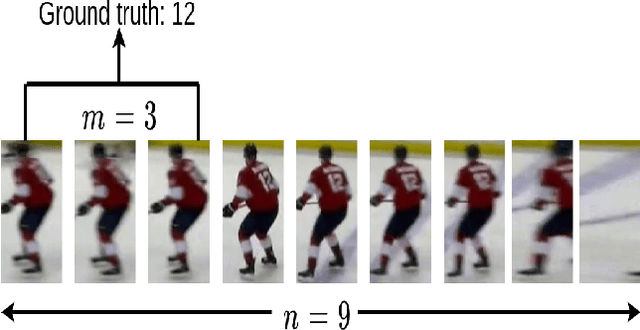

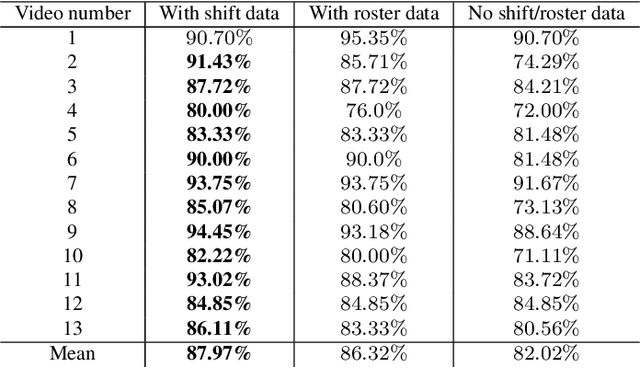

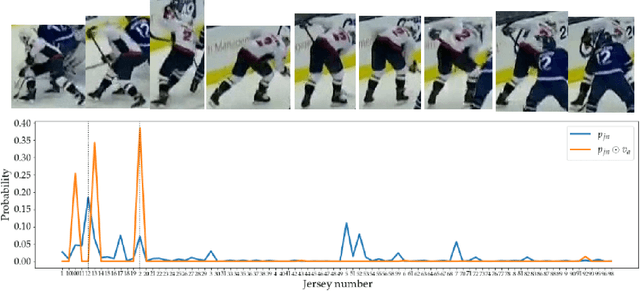

Ice hockey player identification via transformers

Nov 22, 2021

Identifying players in video is a foundational step in computer vision-based sports analytics. Obtaining player identities is essential for analyzing the game and is used in downstream tasks such as game event recognition. Transformers are the existing standard in Natural Language Processing (NLP) and are swiftly gaining traction in computer vision. Motivated by the increasing success of transformers in computer vision, in this paper, we introduce a transformer network for recognizing players through their jersey numbers in broadcast National Hockey League (NHL) videos. The transformer takes temporal sequences of player frames (also called player tracklets) as input and outputs the probabilities of jersey numbers present in the frames. The proposed network performs better than the previous benchmark on the dataset used. We implement a weakly-supervised training approach by generating approximate frame-level labels for jersey number presence and use the frame-level labels for faster training. We also utilize player shifts available in the NHL play-by-play data by reading the game time using optical character recognition (OCR) to get the players on the ice rink at a certain game time. Using player shifts improved the player identification accuracy by 6%.
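The abstract does not say how the player-shift information is combined with the network output. One plausible reading, shown here purely as a hypothetical sketch, is to discard jersey numbers of players who are not on the ice at the current game time and renormalise the rest. The function name `restrict_to_on_ice` and the dictionary format are assumptions.

```python
def restrict_to_on_ice(probabilities, on_ice_numbers):
    """Keep only jersey numbers currently on the ice and renormalise.

    probabilities: dict mapping jersey number -> predicted probability.
    on_ice_numbers: set of jersey numbers on the ice at this game time
    (in the paper this comes from play-by-play shift data and OCR of the
    game clock; here it is simply passed in).
    If every candidate is filtered out, the original distribution is
    returned unchanged as a fallback.
    """
    masked = {n: p for n, p in probabilities.items() if n in on_ice_numbers}
    total = sum(masked.values())
    if total == 0:
        return dict(probabilities)
    return {n: p / total for n, p in masked.items()}


# Made-up example: the network slightly prefers 8, but 8 is on the bench.
predicted = {8: 0.40, 3: 0.35, 88: 0.25}
filtered = restrict_to_on_ice(predicted, on_ice_numbers={3, 88})
best = max(filtered, key=filtered.get)  # 3 becomes the top candidate
```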
