Facial Beauty Prediction

Facial beauty prediction is the process of predicting the attractiveness of a person's face in images or videos.

Papers and Code

Scale-Interaction Transformer: A Hybrid CNN-Transformer Model for Facial Beauty Prediction

Sep 05, 2025

Automated Facial Beauty Prediction (FBP) is a challenging computer vision task due to the complex interplay of local and global facial features that influence human perception. While Convolutional Neural Networks (CNNs) excel at feature extraction, they often process information at a fixed scale, potentially overlooking the critical inter-dependencies between features at different levels of granularity. To address this limitation, we introduce the Scale-Interaction Transformer (SIT), a novel hybrid deep learning architecture that synergizes the feature extraction power of CNNs with the relational modeling capabilities of Transformers. The SIT first employs a multi-scale module with parallel convolutions to capture facial characteristics at varying receptive fields. These multi-scale representations are then framed as a sequence and processed by a Transformer encoder, which explicitly models their interactions and contextual relationships via a self-attention mechanism. We conduct extensive experiments on the widely-used SCUT-FBP5500 benchmark dataset, where the proposed SIT model establishes a new state-of-the-art. It achieves a Pearson Correlation of 0.9187, outperforming previous methods. Our findings demonstrate that explicitly modeling the interplay between multi-scale visual cues is crucial for high-performance FBP. The success of the SIT architecture highlights the potential of hybrid CNN-Transformer models for complex image regression tasks that demand a holistic, context-aware understanding.
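Several entries on this page report a Pearson correlation between predicted scores and human ratings. As a rough illustration of how that metric is computed, here is a minimal standard-library sketch. The function name and the sample scores are made up for this example and do not come from any of the listed papers.

```python
import math


def pearson_correlation(predicted, rated):
    """Pearson correlation between two equal-length score sequences."""
    if len(predicted) != len(rated) or len(predicted) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    n = len(predicted)
    mean_p = sum(predicted) / n
    mean_r = sum(rated) / n
    # Covariance and variances, left unnormalized because the 1/n factors cancel.
    cov = sum((p - mean_p) * (r - mean_r) for p, r in zip(predicted, rated))
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    var_r = sum((r - mean_r) ** 2 for r in rated)
    if var_p == 0 or var_r == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_p * var_r)


# Made-up scores on a 1-5 scale, for illustration only.
model_scores = [2.1, 3.4, 3.9, 4.6, 1.8]
human_scores = [2.0, 3.0, 4.2, 4.5, 2.2]
pcc = pearson_correlation(model_scores, human_scores)
print(f"PCC = {pcc:.4f}")
```

A value close to 1 means the model ranks and spaces faces much like the averaged human ratings do; it says nothing about whether those ratings are themselves unbiased.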

Generative Pre-training for Subjective Tasks: A Diffusion Transformer-Based Framework for Facial Beauty Prediction

Jul 27, 2025

Facial Beauty Prediction (FBP) is a challenging computer vision task due to its subjective nature and the subtle, holistic features that influence human perception. Prevailing methods, often based on deep convolutional networks or standard Vision Transformers pre-trained on generic object classification (e.g., ImageNet), struggle to learn feature representations that are truly aligned with high-level aesthetic assessment. In this paper, we propose a novel two-stage framework that leverages the power of generative models to create a superior, domain-specific feature extractor. In the first stage, we pre-train a Diffusion Transformer on a large-scale, unlabeled facial dataset (FFHQ) through a self-supervised denoising task. This process forces the model to learn the fundamental data distribution of human faces, capturing nuanced details and structural priors essential for aesthetic evaluation. In the second stage, the pre-trained and frozen encoder of our Diffusion Transformer is used as a backbone feature extractor, with only a lightweight regression head being fine-tuned on the target FBP dataset (FBP5500). Our method, termed Diff-FBP, sets a new state-of-the-art on the FBP5500 benchmark, achieving a Pearson Correlation Coefficient (PCC) of 0.932, significantly outperforming prior art based on general-purpose pre-training. Extensive ablation studies validate that our generative pre-training strategy is the key contributor to this performance leap, creating feature representations that are more semantically potent for subjective visual tasks.
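The second stage described above (a frozen backbone with only a small regression head being trained) can be sketched in a few lines. This is a toy illustration under loud assumptions, not the Diff-FBP implementation: `frozen_encoder` is a hypothetical stand-in that turns a list of numbers into two fixed statistics, the head is a plain linear layer fitted by gradient descent, and all data values are invented.

```python
def frozen_encoder(image):
    """Hypothetical stand-in for a pre-trained, frozen backbone.

    An "image" here is just a list of numbers; the features are fixed
    statistics that are never updated during training.
    """
    mean = sum(image) / len(image)
    spread = max(image) - min(image)
    return [mean, spread]


def predict(weights, bias, feature):
    """Linear regression head: weighted sum of features plus a bias."""
    return sum(w * x for w, x in zip(weights, feature)) + bias


def mean_squared_error(weights, bias, features, targets):
    errors = [(predict(weights, bias, f) - t) ** 2 for f, t in zip(features, targets)]
    return sum(errors) / len(errors)


def train_head(features, targets, lr=0.05, epochs=2000):
    """Fit only the head parameters by gradient descent on the MSE loss."""
    dim = len(features[0])
    weights, bias = [0.0] * dim, 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, targets):
            err = predict(weights, bias, x) - y
            for i in range(dim):
                grad_w[i] += 2 * err * x[i] / n
            grad_b += 2 * err / n
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias


# Invented toy data: four "images" and their human ratings.
images = [[0.1, 0.4, 0.3], [0.9, 0.8, 0.7], [0.5, 0.5, 0.6], [0.2, 0.9, 0.4]]
ratings = [2.0, 4.5, 3.2, 3.0]

# Features are computed once because the encoder is frozen.
features = [frozen_encoder(img) for img in images]
weights, bias = train_head(features, ratings)
```

Because the encoder never changes, its outputs can be cached once, which is what makes training the head cheap compared with fine-tuning the whole network.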

Reflections on Diversity: A Real-time Virtual Mirror for Inclusive 3D Face Transformations

Mar 25, 2025

Real-time 3D face manipulation has significant applications in virtual reality, social media and human-computer interaction. This paper introduces a novel system, which we call Mirror of Diversity (MOD), that combines Generative Adversarial Networks (GANs) for texture manipulation and 3D Morphable Models (3DMMs) for facial geometry to achieve realistic face transformations that reflect various demographic characteristics, emphasizing the beauty of diversity and the universality of human features. As participants sit in front of a computer monitor with a camera positioned above, their facial characteristics are captured in real time, and they can further alter their digital face reconstruction with transformations reflecting different demographic characteristics, such as gender and ethnicity (e.g., a person from Africa, Asia, or Europe). Another feature of our system, which we call Collective Face, generates an averaged face representation from multiple participants' facial data. A comprehensive evaluation protocol is implemented to assess the realism and demographic accuracy of the transformations. Qualitative feedback is gathered through participant questionnaires, which include comparisons of MOD transformations with similar filters on platforms like Snapchat and TikTok. Additionally, quantitative analysis is conducted using a pretrained Convolutional Neural Network that predicts gender and ethnicity, to validate the accuracy of demographic transformations.

Uncertainty-oriented Order Learning for Facial Beauty Prediction

Sep 01, 2024

Previous Facial Beauty Prediction (FBP) methods generally model the facial beauty (FB) feature of an image as a point in the latent space, and learn a mapping from that point to a precise score. Although existing regression methods perform well on a single dataset, they tend to be sensitive to test data and generalize poorly. We think they underestimate two inconsistencies in the FBP problem: (1) inconsistency of FB standards among multiple datasets, and (2) inconsistency of human cognition of the FB of an image. To address these issues, we propose a new Uncertainty-oriented Order Learning (UOL) method, where the order learning addresses the inconsistency of FB standards by learning the FB order relations among face images rather than a mapping, and the uncertainty modeling represents the inconsistency in human cognition. The key contribution of UOL is a purpose-built distribution comparison module, which enables conventional order learning to learn the order of uncertain data. Extensive experiments on five datasets show that UOL outperforms the state-of-the-art methods in both accuracy and generalization ability.
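To make the idea of comparing uncertain scores concrete, the sketch below treats each face's beauty score as an independent Gaussian and derives an order relation from the probability that one score exceeds the other. This is only an assumption for illustration: the abstract does not specify how UOL's distribution comparison module works, and the function names, the Gaussian model, and the `margin` threshold are all hypothetical.

```python
import math


def prob_greater(mean_a, std_a, mean_b, std_b):
    """P(score_a > score_b) for two independent Gaussian scores."""
    diff_std = math.sqrt(std_a ** 2 + std_b ** 2)
    if diff_std == 0:
        # Both scores are exact, so the comparison is deterministic.
        if mean_a == mean_b:
            return 0.5
        return 1.0 if mean_a > mean_b else 0.0
    z = (mean_a - mean_b) / diff_std
    # Standard normal CDF expressed through the error function.
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def order_relation(mean_a, std_a, mean_b, std_b, margin=0.1):
    """Map the comparison probability to a three-way order label."""
    p = prob_greater(mean_a, std_a, mean_b, std_b)
    if p > 0.5 + margin:
        return "greater"
    if p < 0.5 - margin:
        return "less"
    return "similar"


# Two faces with a clear gap and low uncertainty, then two with heavy overlap.
print(order_relation(4.0, 0.5, 3.0, 0.5))
print(order_relation(3.0, 1.0, 3.1, 1.0))
```

With point estimates alone, the second pair would be ordered on a 0.1 difference; accounting for the spread of ratings lets the comparison fall back to "similar" when the distributions overlap heavily.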

MetaFBP: Learning to Learn High-Order Predictor for Personalized Facial Beauty Prediction

Nov 23, 2023

Predicting individual aesthetic preferences holds significant practical applications and academic implications for human society. However, existing studies mainly focus on learning and predicting the commonality of facial attractiveness, with little attention given to Personalized Facial Beauty Prediction (PFBP). PFBP aims to develop a machine that can adapt to individual aesthetic preferences with only a few images rated by each user. In this paper, we formulate this task from a meta-learning perspective in which each user corresponds to a meta-task. To address this PFBP task, we draw inspiration from the human aesthetic mechanism that visual aesthetics in society follows a Gaussian distribution, which motivates us to disentangle user preferences into a commonality part and an individuality part. To this end, we propose a novel MetaFBP framework, in which we devise a universal feature extractor to capture the aesthetic commonality and then adapt to the aesthetic individuality by shifting the decision boundary of the predictor via a meta-learning mechanism. Unlike conventional meta-learning methods that may struggle with slow adaptation or overfitting to tiny support sets, we propose a novel approach that optimizes a high-order predictor for fast adaptation. To validate the performance of the proposed method, we build several PFBP benchmarks from existing facial beauty prediction datasets rated by numerous users. Extensive experiments on these benchmarks demonstrate the effectiveness of the proposed MetaFBP method.

Attribute Controllable Beautiful Caucasian Face Generation by Aesthetics Driven Reinforcement Learning

Aug 09, 2022

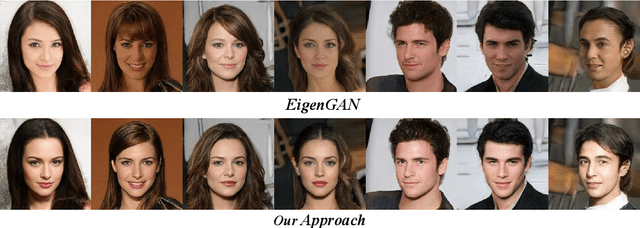

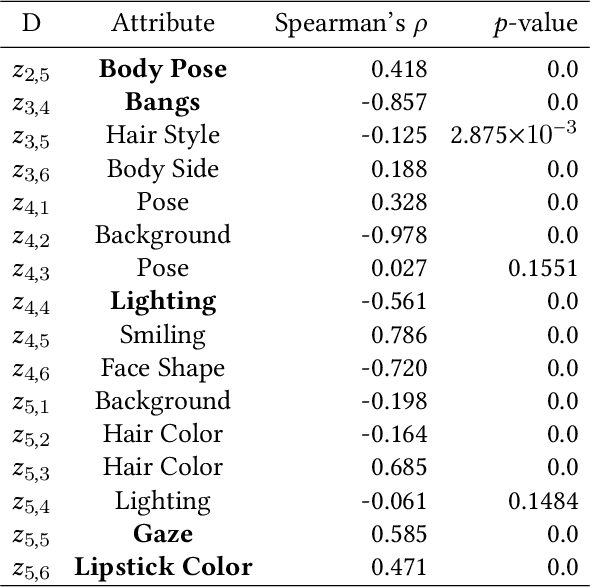

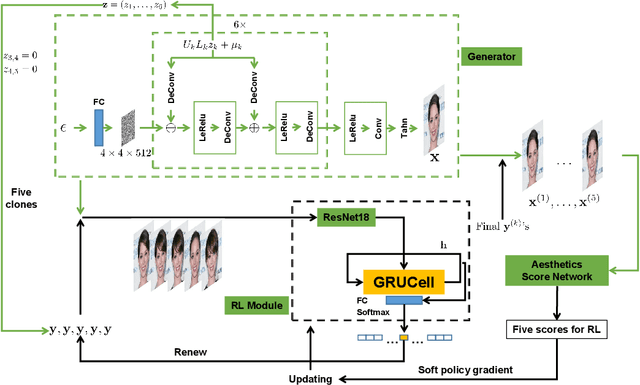

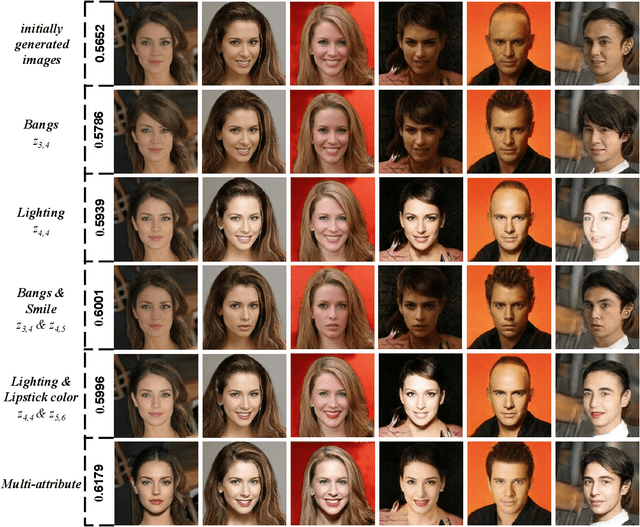

In recent years, image generation has made great strides in improving the quality of images, producing high-fidelity ones. More recently, architecture designs have emerged that enable GANs to learn, without supervision, the semantic attributes represented in different layers. However, there is still a lack of research on generating face images more consistent with human aesthetics. Based on EigenGAN [He et al., ICCV 2021], we build reinforcement learning techniques into the generator of EigenGAN. The agent tries to figure out how to alter the semantic attributes of the generated human faces towards more preferable ones. To accomplish this, we trained an aesthetics scoring model that can conduct facial beauty prediction. We can also use this scoring model to analyze the correlation between face attributes and aesthetics scores. Empirically, off-the-shelf reinforcement learning techniques do not work well. Instead, we present a new variant incorporating ingredients that have emerged in the reinforcement learning community in recent years. Compared to the original generated images, the adjusted ones show clear distinctions concerning various attributes. Experimental results using MindSpore show the effectiveness of the proposed method. Altered facial images are commonly more attractive, with significantly improved aesthetic levels.

Ethically aligned Deep Learning: Unbiased Facial Aesthetic Prediction

Nov 09, 2021

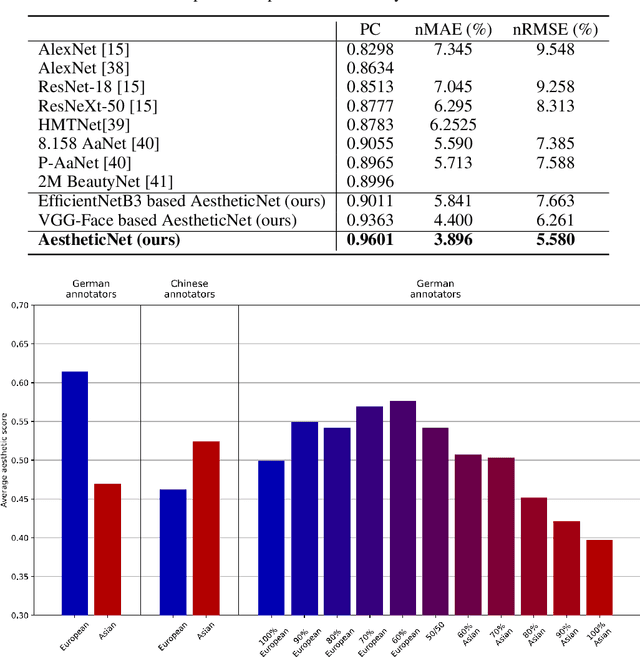

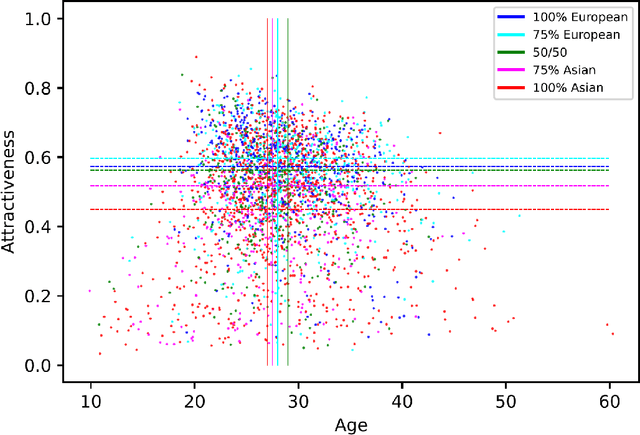

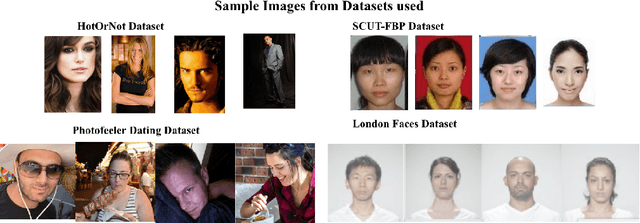

Facial beauty prediction (FBP) aims to develop a machine that automatically assesses facial attractiveness. In the past, those results were highly correlated with human ratings, and therefore also with the annotators' biases. As artificial intelligence can have racist and discriminatory tendencies, the cause of skews in the data must be identified. Developing training data and AI algorithms that are robust against biased information is a new challenge for scientists. As aesthetic judgement is usually biased, we want to take it one step further and propose an Unbiased Convolutional Neural Network for FBP. While it is possible to create network models that can rate the attractiveness of faces at a high level, from an ethical point of view it is equally important to make sure the model is unbiased. In this work, we introduce AestheticNet, a state-of-the-art attractiveness prediction network, which significantly outperforms competitors with a Pearson Correlation of 0.9601. Additionally, we propose a new approach for generating a bias-free CNN to improve fairness in machine learning.

Photofeeler-D3: A Neural Network with Voter Modeling for Dating Photo Impression Prediction

May 10, 2019

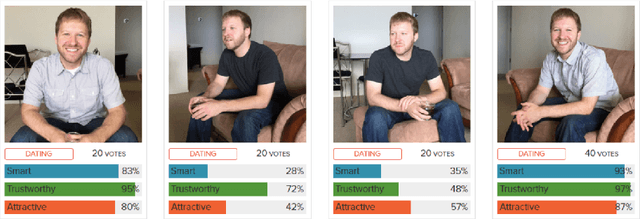

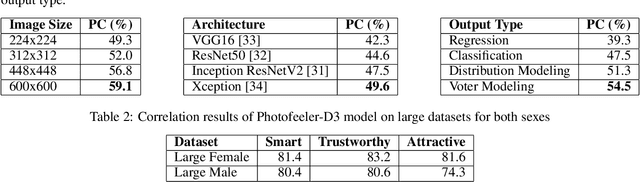

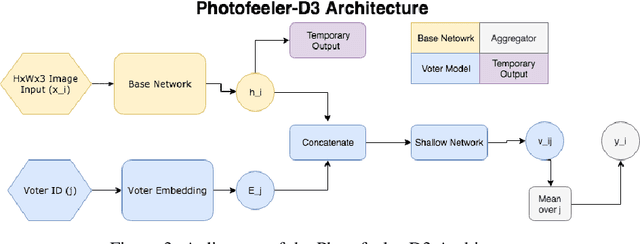

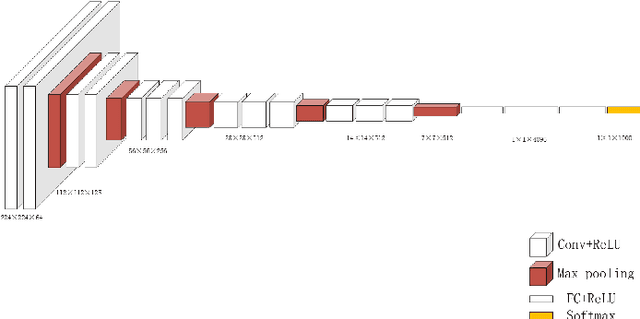

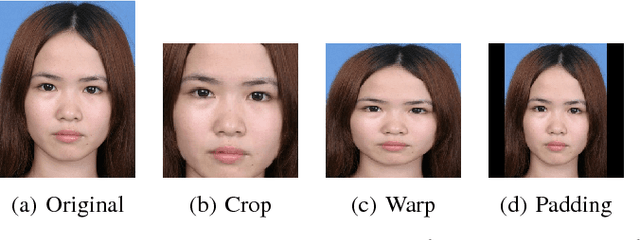

In just a few years, online dating has become the dominant way that young people meet to date, making the deceptively error-prone task of picking good dating profile photos vital to a generation's ability to form romantic connections. Until now, artificial intelligence approaches to Dating Photo Impression Prediction (DPIP) have been very inaccurate, unadaptable to real-world application, and have only taken into account a subject's physical attractiveness. To that effect, we propose Photofeeler-D3 - the first convolutional neural network as accurate as 10 human votes for how smart, trustworthy, and attractive the subject appears in highly variable dating photos. Our "attractive" output is also applicable to Facial Beauty Prediction (FBP), making Photofeeler-D3 state-of-the-art for both DPIP and FBP. We achieve this by leveraging Photofeeler's Dating Dataset (PDD) with over 1 million images and tens of millions of votes, our novel technique of voter modeling, and cutting-edge computer vision techniques.

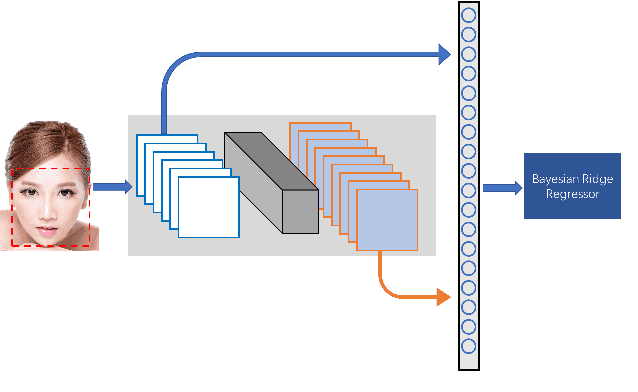

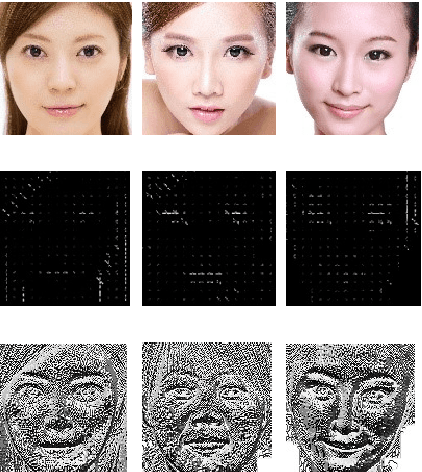

Transferring Rich Deep Features for Facial Beauty Prediction

Mar 20, 2018

Feature extraction plays a significant part in computer vision tasks. In this paper, we propose a method that transfers rich deep features from a model pretrained on a face verification task and feeds the features into a Bayesian ridge regression algorithm for facial beauty prediction. We leverage deep neural networks that extract more abstract features from stacked layers. Through a simple but effective feature fusion strategy, our method achieves improved or comparable performance on the SCUT-FBP dataset and the ECCV HotOrNot dataset. Our experiments demonstrate the effectiveness of the proposed method and clarify the inner interpretability of facial beauty perception.

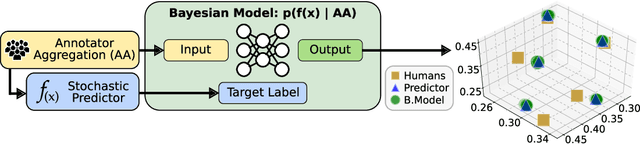

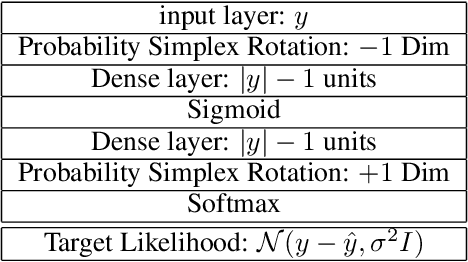

A Bayesian Evaluation Framework for Ground Truth-Free Visual Recognition Tasks

Jun 20, 2020

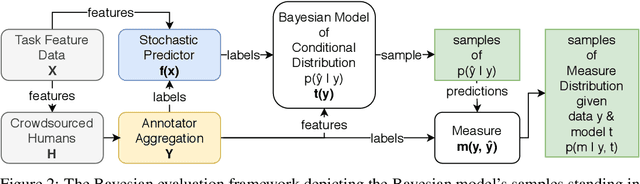

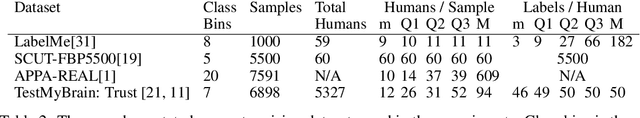

An interesting development in automatic visual recognition has been the emergence of tasks where it is not possible to assign ground truth labels to images, yet still feasible to collect annotations that reflect human judgements about them. Such tasks include subjective visual attribute assignment and the labeling of ambiguous scenes. Machine learning-based predictors for these tasks rely on supervised training that models the behavior of the annotators, e.g., what would the average person's judgement be for an image? A key open question for this type of work, especially for applications where inconsistency with human behavior can lead to ethical lapses, is how to evaluate the uncertainty of trained predictors. Given that the real answer is unknowable, we are left with often noisy judgements from human annotators to work with. In order to account for the uncertainty that is present, we propose a relative Bayesian framework for evaluating predictors trained on such data. The framework specifies how to estimate a predictor's uncertainty due to the human labels by approximating a conditional distribution and producing a credible interval for the predictions and their measures of performance. The framework is successfully applied to four image classification tasks that use subjective human judgements: facial beauty assessment using the SCUT-FBP5500 dataset, social attribute assignment using data from TestMyBrain.org, apparent age estimation using data from the ChaLearn series of challenges, and ambiguous scene labeling using the LabelMe dataset.
