Zhiqing Tang

Hybrid Learning for Cold-Start-Aware Microservice Scheduling in Dynamic Edge Environments

May 28, 2025

Abstract:With the rapid growth of IoT devices and their diverse workloads, container-based microservices deployed at edge nodes have become a lightweight and scalable solution. However, existing microservice scheduling algorithms often assume static resource availability, which is unrealistic when multiple containers are assigned to an edge node. Besides, containers suffer from cold-start inefficiencies during early-stage training in currently popular reinforcement learning (RL) algorithms. In this paper, we propose a hybrid learning framework that combines offline imitation learning (IL) with online Soft Actor-Critic (SAC) optimization to enable cold-start-aware microservice scheduling with dynamic allocation of computing resources. We first formulate a delay-and-energy-aware scheduling problem and construct a rule-based expert to generate demonstration data for behavior cloning. Then, a GRU-enhanced policy network is designed to extract the correlation among multiple decisions by separately encoding slow-evolving node states and fast-changing microservice features, and an action selection mechanism is introduced to speed up convergence. Extensive experiments show that our method significantly accelerates convergence and achieves superior final performance. Compared with baselines, our algorithm improves the total objective by $50\%$ and convergence speed by $70\%$, and demonstrates the highest stability and robustness across various edge configurations.
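The abstract describes a rule-based expert that generates demonstration data for behavior cloning. The following is a minimal sketch of that idea, not the authors' implementation: the cost model (delay as cycles divided by an equal CPU share, energy as power times delay), the weights, and all names (`Node`, `cost`, `expert_action`, `collect_demonstrations`) are assumptions made for illustration only.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Node:
    """Hypothetical edge node; the fields are illustrative assumptions."""
    cpu_hz: float      # total CPU capacity in cycles per second
    power_w: float     # active power draw in watts
    running: int = 0   # containers currently sharing the CPU


def cost(node: Node, cycles: float, w_delay: float = 0.5, w_energy: float = 0.5) -> float:
    """Weighted delay-plus-energy cost of placing one more container on node.

    Assumes the CPU is split equally among containers, so the available
    share shrinks as more containers are assigned (dynamic resources).
    """
    share = node.cpu_hz / (node.running + 1)
    delay = cycles / share
    energy = node.power_w * delay
    return w_delay * delay + w_energy * energy


def expert_action(nodes: List[Node], cycles: float) -> int:
    """Greedy rule: choose the node with the lowest weighted cost."""
    return min(range(len(nodes)), key=lambda i: cost(nodes[i], cycles))


def collect_demonstrations(
    nodes: List[Node], workloads: List[float]
) -> List[Tuple[Tuple[List[int], float], int]]:
    """Record (state, action) pairs that a behavior-cloning step could fit."""
    demos = []
    for cycles in workloads:
        state = ([n.running for n in nodes], cycles)
        action = expert_action(nodes, cycles)
        demos.append((state, action))
        nodes[action].running += 1  # the placement changes later states
    return demos
```

In a hybrid setup of the kind the abstract outlines, pairs like these would pre-train the policy offline before online RL fine-tuning takes over; the greedy rule here is only a stand-in for whatever expert the paper actually uses.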

Empowering Edge Intelligence: A Comprehensive Survey on On-Device AI Models

Mar 08, 2025

Abstract:The rapid advancement of artificial intelligence (AI) technologies has led to an increasing deployment of AI models on edge and terminal devices, driven by the proliferation of the Internet of Things (IoT) and the need for real-time data processing. This survey comprehensively explores the current state, technical challenges, and future trends of on-device AI models. We define on-device AI models as those designed to perform local data processing and inference, emphasizing their characteristics such as real-time performance, resource constraints, and enhanced data privacy. The survey is structured around key themes, including the fundamental concepts of AI models, application scenarios across various domains, and the technical challenges faced in edge environments. We also discuss optimization and implementation strategies, such as data preprocessing, model compression, and hardware acceleration, which are essential for effective deployment. Furthermore, we examine the impact of emerging technologies, including edge computing and foundation models, on the evolution of on-device AI models. By providing a structured overview of the challenges, solutions, and future directions, this survey aims to facilitate further research and application of on-device AI, ultimately contributing to the advancement of intelligent systems in everyday life.

VELO: A Vector Database-Assisted Cloud-Edge Collaborative LLM QoS Optimization Framework

Jun 19, 2024

Abstract:The Large Language Model (LLM) has gained significant popularity and is extensively utilized across various domains. Most LLM deployments occur within cloud data centers, where they encounter substantial response delays and incur high costs, thereby impacting the Quality of Service (QoS) at the network edge. Leveraging vector database caching to store LLM request results at the edge can substantially mitigate the response delay and cost associated with similar requests, an approach that previous research has overlooked. To address this gap, this paper introduces a novel Vector database-assisted cloud-Edge collaborative LLM QoS Optimization (VELO) framework. Firstly, the VELO framework employs a vector database to cache the results of some LLM requests at the edge, reducing the response time of subsequent similar requests. Unlike approaches that optimize the LLM directly, VELO does not require altering the internal structure of the LLM and is broadly applicable to diverse LLMs. Subsequently, building upon the VELO framework, we formulate the QoS optimization problem as a Markov Decision Process (MDP) and devise an algorithm grounded in Multi-Agent Reinforcement Learning (MARL) to decide whether to request the LLM in the cloud or directly return the results from the vector database at the edge. Moreover, to enhance request feature extraction and expedite training, we refine the policy network of MARL and integrate expert demonstrations. Finally, we implement the proposed algorithm within a real edge system. Experimental findings confirm that our VELO framework substantially enhances user satisfaction by concurrently reducing delay and resource consumption for edge users utilizing LLMs.
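The edge-side caching idea in this abstract can be illustrated with a small sketch. It is an assumption-laden simplification, not the VELO algorithm: the paper uses a MARL policy to decide between edge and cloud, whereas this sketch swaps in a fixed cosine-similarity threshold. The names `EdgeCache`, `answer_request`, and `cloud_llm`, and the in-memory list standing in for a vector database, are all hypothetical.

```python
import math
from typing import Callable, List, Optional, Tuple

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return dot / (na * nb)


class EdgeCache:
    """In-memory stand-in for a vector database at the edge (assumption)."""

    def __init__(self, threshold: float = 0.9) -> None:
        self.threshold = threshold
        self.entries: List[Tuple[Vector, str]] = []

    def lookup(self, query: Vector) -> Optional[str]:
        """Return the most similar cached answer if it clears the threshold."""
        best_score, best_answer = -1.0, None
        for vec, answer in self.entries:
            score = cosine(query, vec)
            if score > best_score:
                best_score, best_answer = score, answer
        return best_answer if best_score >= self.threshold else None

    def store(self, vec: Vector, answer: str) -> None:
        self.entries.append((vec, answer))


def answer_request(
    query: Vector, cache: EdgeCache, cloud_llm: Callable[[Vector], str]
) -> Tuple[str, str]:
    """Serve from the edge cache on a hit; otherwise call the cloud and cache."""
    cached = cache.lookup(query)
    if cached is not None:
        return cached, "edge"
    result = cloud_llm(query)
    cache.store(query, result)
    return result, "cloud"
```

A fixed threshold trades accuracy for simplicity: set too low, it returns stale or mismatched answers; set too high, it rarely hits. That trade-off is presumably why the abstract frames the edge-versus-cloud choice as a learned decision.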

A Fast Task Offloading Optimization Framework for IRS-Assisted Multi-Access Edge Computing System

Jul 17, 2023

Abstract:Terahertz communication networks and intelligent reflecting surfaces exhibit significant potential in advancing wireless networks, particularly within the domain of aerial-based multi-access edge computing systems. These technologies enable computational tasks to be offloaded efficiently from user devices to Unmanned Aerial Vehicles or executed locally. When generating high-quality task-offloading allocations, conventional numerical optimization methods often struggle to solve challenging combinatorial optimization problems within the limited channel coherence time, thereby failing to respond quickly to dynamic changes in system conditions. To address this challenge, we propose a deep learning-based optimization framework called Iterative Order-Preserving policy Optimization (IOPO), which enables the generation of energy-efficient task-offloading decisions within milliseconds. Unlike exhaustive search methods, IOPO continuously updates the offloading decisions, resulting in accelerated convergence and reduced computational complexity, particularly for complex problems with extensive solution spaces. Experimental results demonstrate that the proposed framework can generate energy-efficient task-offloading decisions within a very short time period, outperforming other benchmark methods.

Look Closer to Your Enemy: Learning to Attack via Teacher-student Mimicking

Jul 28, 2022

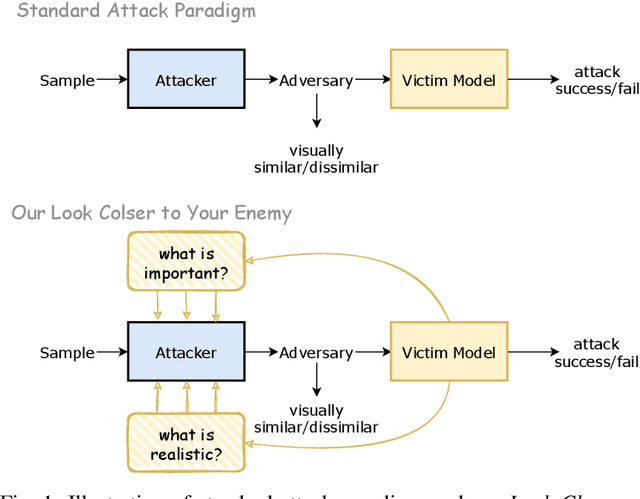

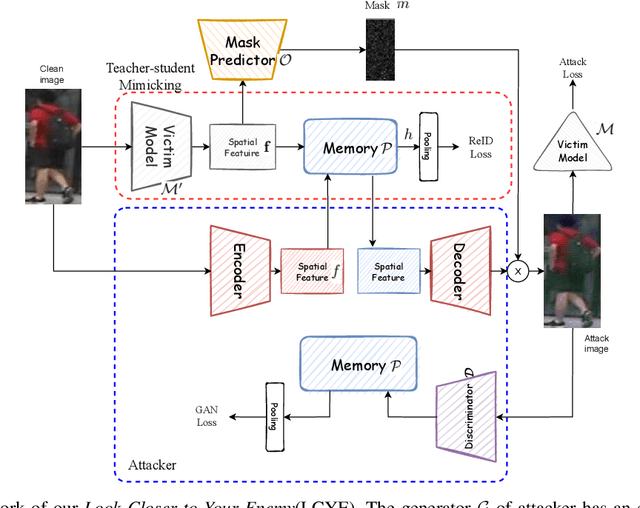

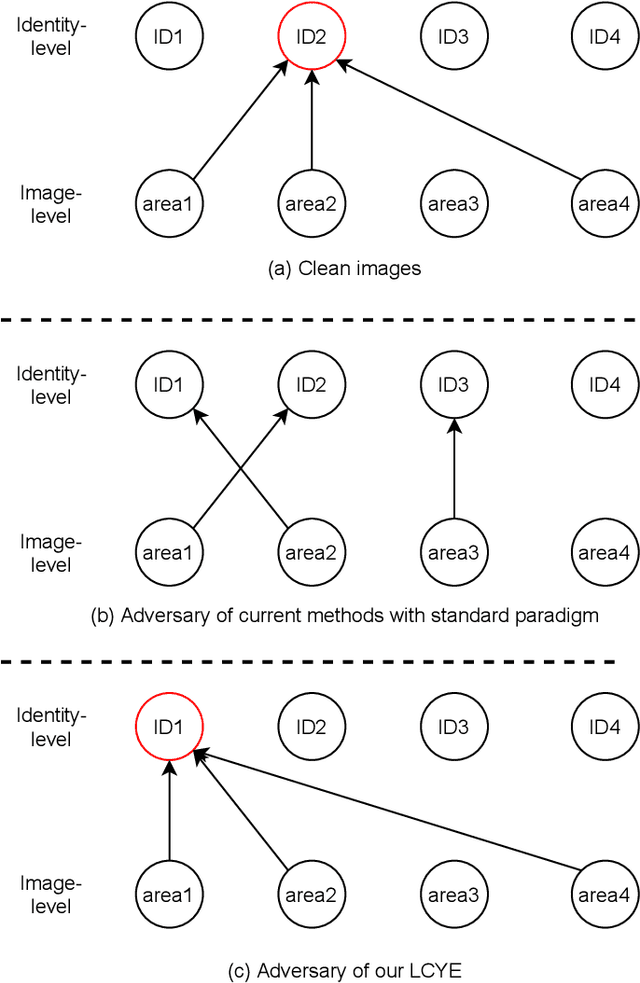

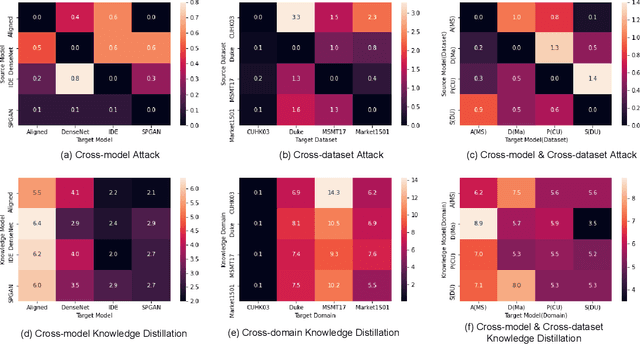

Abstract:This paper aims to generate realistic attack samples of person re-identification, ReID, by reading the enemy's mind (VM). In this paper, we propose a novel inconspicuous and controllable ReID attack baseline, LCYE, to generate adversarial query images. Concretely, LCYE first distills VM's knowledge via teacher-student memory mimicking in the proxy task. Then this knowledge prior acts as an explicit cipher conveying what is essential and realistic, believed by VM, for accurate adversarial misleading. Besides, benefiting from the multiple opposing task framework of LCYE, we further investigate the interpretability and generalization of ReID models from the view of the adversarial attack, including cross-domain adaptation, cross-model consensus, and online learning process. Extensive experiments on four ReID benchmarks show that our method outperforms other state-of-the-art attackers by a large margin in white-box, black-box, and targeted attacks. Our code is now available at https://gitfront.io/r/user-3704489/mKXusqDT4ffr/LCYE/.
