Fastformer: Additive Attention Can Be All You Need

Sep 05, 2021 · Chuhan Wu, Fangzhao Wu, Tao Qi, Yongfeng Huang, Xing Xie

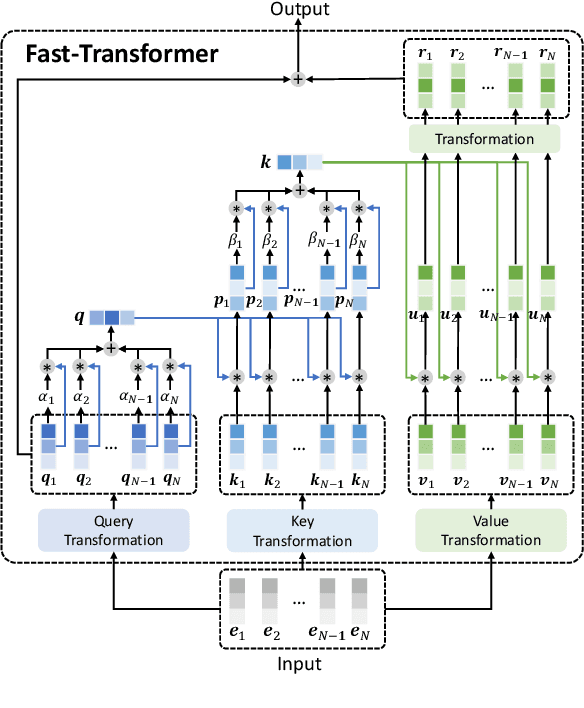

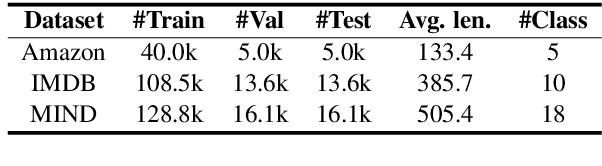

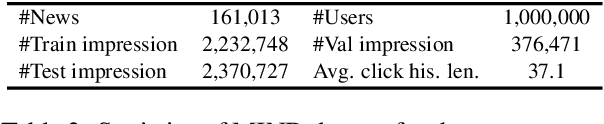

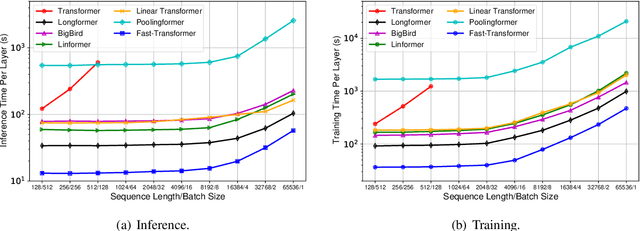

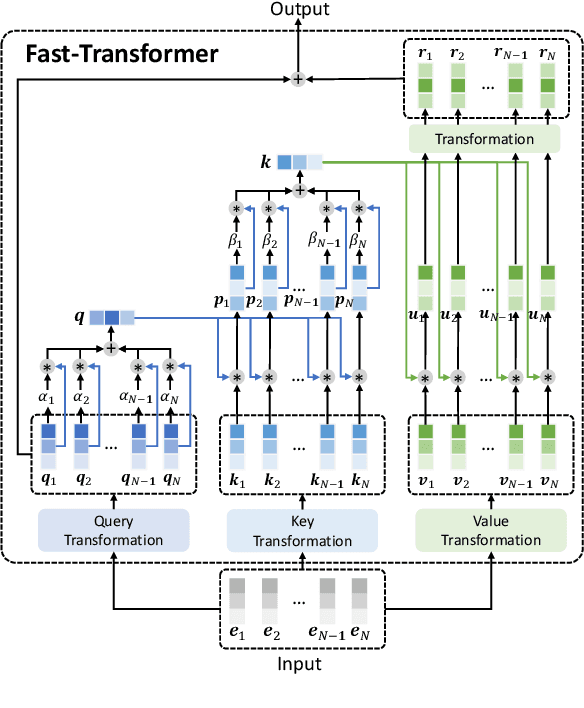

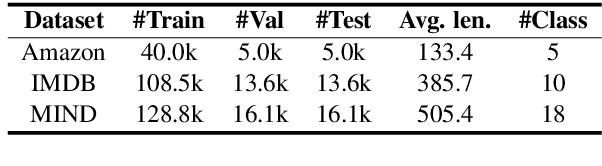

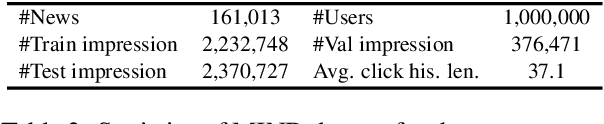

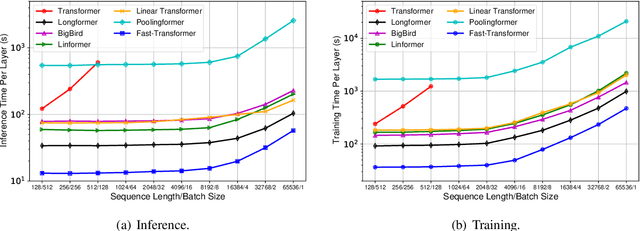

Transformer is a powerful model for text understanding. However, it is inefficient due to its quadratic complexity with respect to the input sequence length. Although many methods for Transformer acceleration exist, they are still either inefficient on long sequences or not effective enough. In this paper, we propose Fastformer, an efficient Transformer model based on additive attention. In Fastformer, instead of modeling the pair-wise interactions between tokens, we first use an additive attention mechanism to model global contexts, and then further transform each token representation based on its interaction with the global context representations. In this way, Fastformer can achieve effective context modeling with linear complexity. Extensive experiments on five datasets show that Fastformer is much more efficient than many existing Transformer models while achieving comparable or even better long-text modeling performance.
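The idea of summarizing a sequence into a global context in linear time can be illustrated with a minimal NumPy sketch. This is not the paper's implementation: the function names, the single learned vector `w`, the scaling by the square root of the dimension, and the element-wise product used as the token-context "interaction" are all assumptions made for illustration, since the abstract does not specify them.

```python
import numpy as np

def additive_attention_pool(x, w):
    """Pool a sequence x of shape (n, d) into one global vector of shape (d,).

    w is a hypothetical learned scoring vector of shape (d,).
    Cost is O(n * d): no n-by-n attention matrix is ever formed.
    """
    scores = x @ w / np.sqrt(x.shape[1])   # one scalar score per token, shape (n,)
    scores = scores - scores.max()         # stabilize the softmax
    weights = np.exp(scores)
    weights /= weights.sum()               # attention weights sum to 1
    return weights @ x                     # weighted sum of token vectors

def mix_with_global_context(x, w):
    """Transform every token by its interaction with the global context.

    The element-wise product is an assumed form of interaction.
    """
    g = additive_attention_pool(x, w)      # shape (d,)
    return x * g                           # broadcasts over the n tokens


x = np.arange(12.0).reshape(4, 3)          # 4 tokens, 3 features
w = np.zeros(3)                            # zero scores give uniform weights
out = mix_with_global_context(x, w)        # shape (4, 3)
```

With a zero scoring vector every token receives the same weight, so the pooled vector reduces to the mean of the token vectors, which is a convenient sanity check.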

UserBERT: Contrastive User Model Pre-training

Sep 03, 2021 · Chuhan Wu, Fangzhao Wu, Yang Yu, Tao Qi, Yongfeng Huang, Xing Xie

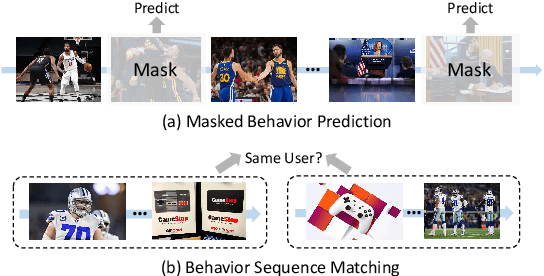

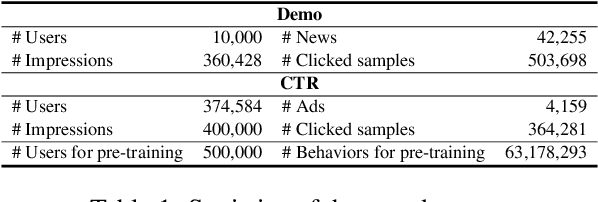

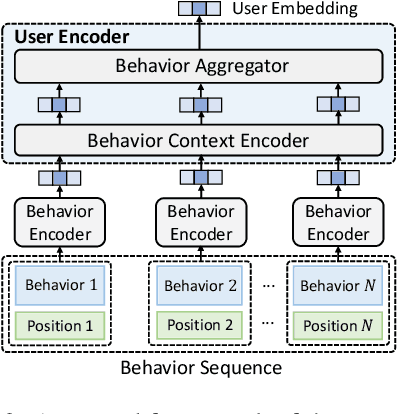

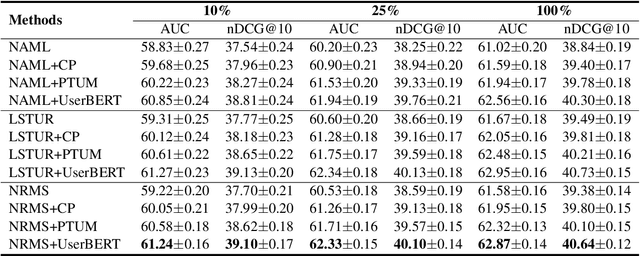

User modeling is critical for personalized web applications. Existing user modeling methods usually train user models from user behaviors with task-specific labeled data. However, labeled data in a target task may be insufficient for training accurate user models. Fortunately, there is usually abundant unlabeled user behavior data that encodes rich information about user characteristics and interests. Thus, pre-training user models on unlabeled user behavior data has the potential to improve user modeling for many downstream tasks. In this paper, we propose a contrastive user model pre-training method named UserBERT. UserBERT incorporates two self-supervision tasks for pre-training user models on unlabeled user behavior data. The first is masked behavior prediction, which aims to model the relatedness between user behaviors. The second is behavior sequence matching, which aims to capture the inherent user interests that are consistent across different periods. In addition, we propose a medium-hard negative sampling framework to select informative negative samples for better contrastive pre-training. We maintain a synchronously updated candidate behavior pool and an asynchronously updated candidate behavior sequence pool to efficiently select the locally hardest negative behaviors and behavior sequences. Extensive experiments on two real-world datasets in different tasks show that UserBERT can effectively improve various user models.

Smart Bird: Learnable Sparse Attention for Efficient and Effective Transformer

Sep 02, 2021 · Chuhan Wu, Fangzhao Wu, Tao Qi, Binxing Jiao, Daxin Jiang, Yongfeng Huang, Xing Xie

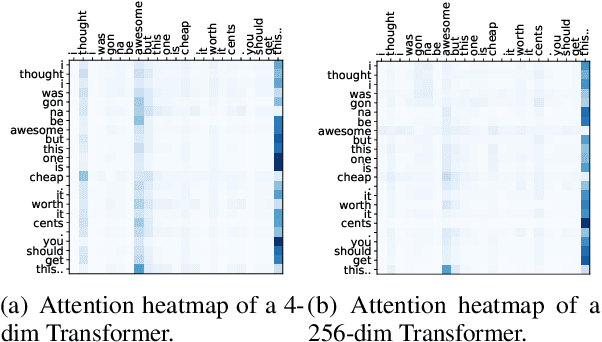

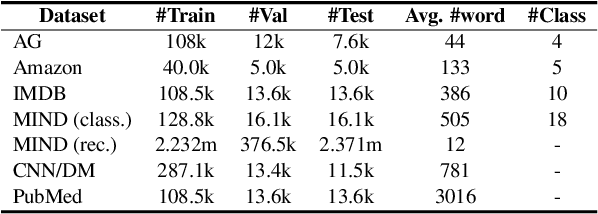

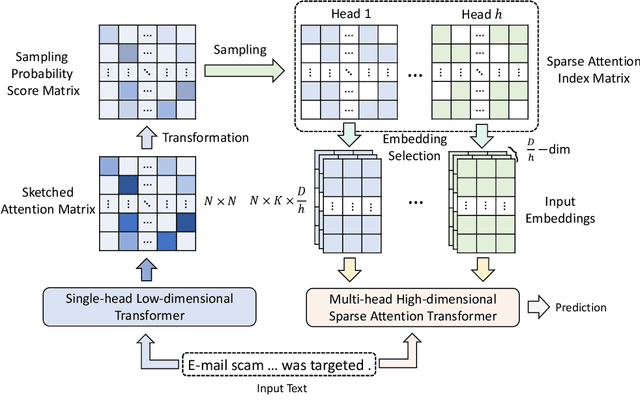

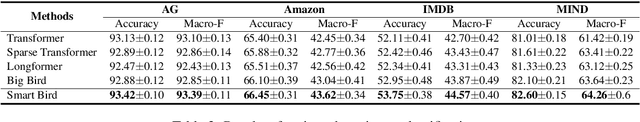

Transformer has achieved great success in NLP. However, the quadratic complexity of the self-attention mechanism in Transformer makes it inefficient in handling long sequences. Many existing works accelerate Transformers by computing sparse self-attention instead of dense self-attention, usually attending to tokens at fixed positions or to randomly selected tokens. However, manually selected or random tokens may be uninformative for context modeling. In this paper, we propose Smart Bird, an efficient and effective Transformer with learnable sparse attention. In Smart Bird, we first compute a sketched attention matrix with a single-head low-dimensional Transformer, which aims to find potentially important interactions between tokens. We then sample token pairs based on their probability scores derived from the sketched attention matrix to generate different sparse attention index matrices for different attention heads. Finally, we select token embeddings according to the index matrices to form the input of sparse attention networks. Extensive experiments on six benchmark datasets for different tasks validate the efficiency and effectiveness of Smart Bird in text modeling.
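The sampling step described above, drawing token pairs from a sketched attention matrix to build a separate index matrix per head, might look roughly like the following NumPy sketch. The function name `sample_sparse_indices`, the row-wise softmax, sampling without replacement, and the fixed budget `k` of attended tokens per query are assumptions for illustration; the abstract does not state how the probabilities or samples are actually produced.

```python
import numpy as np

def sample_sparse_indices(sketch_scores, k, num_heads, seed=0):
    """Sample k attended positions per query token, independently per head.

    sketch_scores: (n, n) array of raw scores from a cheap sketch model
                   (hypothetical input; higher means more important).
    Returns an integer array of shape (num_heads, n, k).
    """
    rng = np.random.default_rng(seed)
    n = sketch_scores.shape[0]

    # Row-wise softmax turns scores into sampling probabilities.
    z = sketch_scores - sketch_scores.max(axis=1, keepdims=True)
    probs = np.exp(z)
    probs /= probs.sum(axis=1, keepdims=True)

    index = np.empty((num_heads, n, k), dtype=np.int64)
    for h in range(num_heads):
        for i in range(n):
            # Each head draws its own sample, so heads see different pairs.
            index[h, i] = rng.choice(n, size=k, replace=False, p=probs[i])
    return index


scores = np.random.default_rng(1).normal(size=(5, 5))
idx = sample_sparse_indices(scores, k=3, num_heads=2)   # shape (2, 5, 3)
```

The resulting index array can then be used to gather the selected token embeddings for each head, for example with `embeddings[idx[h]]`, which yields an array of shape `(n, k, d)`.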

FedKD: Communication Efficient Federated Learning via Knowledge Distillation

Aug 30, 2021 · Chuhan Wu, Fangzhao Wu, Ruixuan Liu, Lingjuan Lyu, Yongfeng Huang, Xing Xie

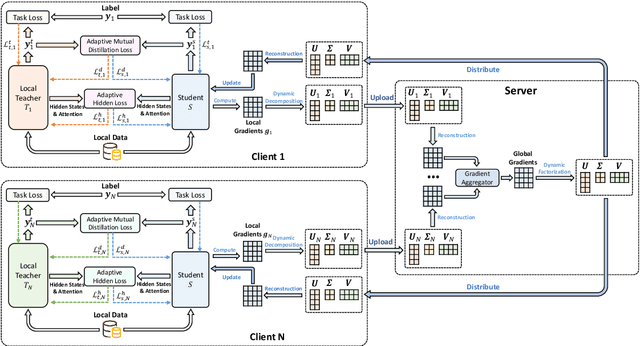

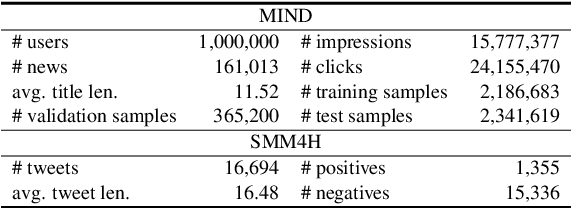

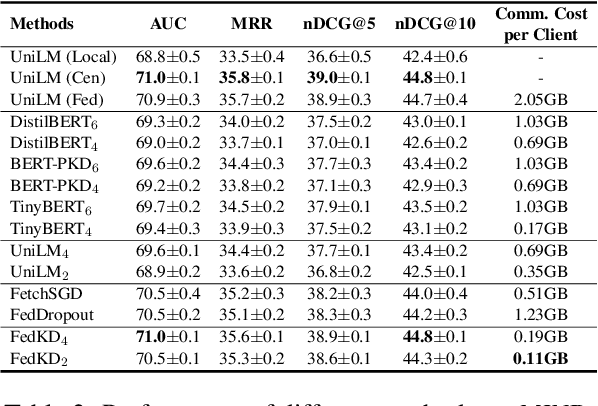

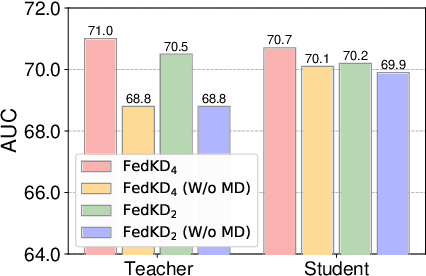

Federated learning is widely used to learn intelligent models from decentralized data. In federated learning, clients need to communicate their local model updates in each iteration of model learning. However, model updates are large if the model contains numerous parameters, and many rounds of communication are usually needed before the model converges. Thus, the communication cost in federated learning can be quite heavy. In this paper, we propose a communication-efficient federated learning method based on knowledge distillation. Instead of directly communicating the large models between clients and server, we propose an adaptive mutual distillation framework to reciprocally learn a student and a teacher model on each client, where only the student model is shared by different clients and updated collaboratively to reduce the communication cost. Both the teacher and the student on each client are learned from its local data and from the knowledge distilled from each other, with their distillation intensities controlled by their prediction quality. To further reduce the communication cost, we propose a dynamic gradient approximation method based on singular value decomposition to approximate the exchanged gradients with dynamic precision. Extensive experiments on benchmark datasets in different tasks show that our approach can effectively reduce the communication cost and achieve competitive results.
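As a rough illustration of approximating a gradient with singular value decomposition, the sketch below keeps only as many singular components as are needed to retain a chosen fraction of the squared singular values. The function names and the `energy` threshold are hypothetical, and the rule for choosing the rank is an assumption; the abstract only says the precision is dynamic, not how it is set.

```python
import numpy as np

def compress_gradient(grad, energy=0.95):
    """Low-rank approximation of a 2-D gradient via truncated SVD.

    energy: assumed knob in (0, 1]; fraction of squared singular values
            to retain. Assumes grad is not all zeros.
    Returns (u, s, vt) factors to transmit instead of the full matrix.
    """
    u, s, vt = np.linalg.svd(grad, full_matrices=False)
    cum = np.cumsum(s ** 2) / np.sum(s ** 2)
    # Smallest rank whose retained energy reaches the threshold.
    k = min(int(np.searchsorted(cum, energy)) + 1, len(s))
    return u[:, :k], s[:k], vt[:k]

def decompress_gradient(u, s, vt):
    """Rebuild the approximate gradient from the transmitted factors."""
    return (u * s) @ vt


# A rank-1 gradient of shape (6, 4) compresses to a single component.
grad = np.outer(np.arange(1.0, 7.0), np.arange(1.0, 5.0))
u, s, vt = compress_gradient(grad)
approx = decompress_gradient(u, s, vt)
```

For an `m` by `n` gradient kept at rank `k`, the factors hold `k * (m + n + 1)` numbers instead of `m * n`, so the saving grows as the retained rank shrinks. Raising or lowering `energy` over training would be one way to vary the precision.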

Is News Recommendation a Sequential Recommendation Task?

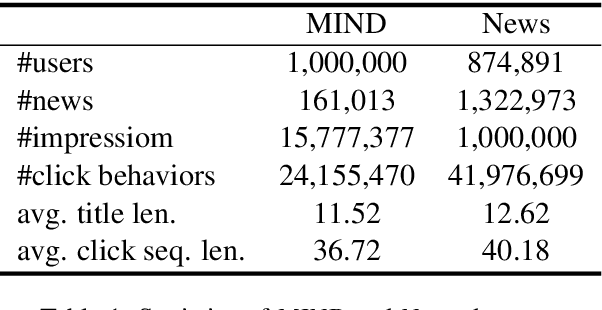

Aug 26, 2021 · Chuhan Wu, Fangzhao Wu, Tao Qi, Yongfeng Huang

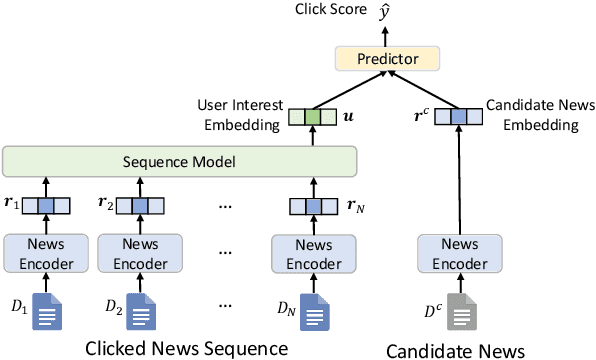

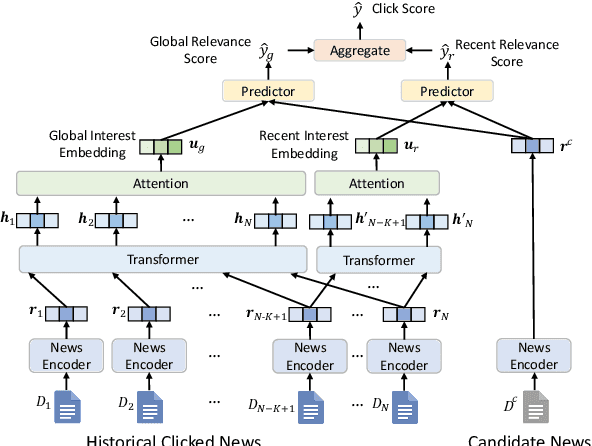

News recommendation is often modeled as a sequential recommendation task, which assumes that there are rich short-term dependencies among historically clicked news. However, in news recommendation scenarios, users usually have strong preferences for temporal diversity in news information and may not tend to click similar news successively, which is very different from many sequential recommendation scenarios such as e-commerce recommendation. In this paper, we study whether news recommendation can be regarded as a standard sequential recommendation problem. Through extensive experiments on two real-world datasets, we find that modeling news recommendation as a sequential recommendation problem is suboptimal. To handle this challenge, we further propose a temporal diversity-aware news recommendation method that promotes candidate news that differs from recently clicked news, which can help predict future clicks more accurately. Experiments show that our approach can consistently improve various news recommendation methods.

Fastformer: Additive Attention is All You Need

Aug 20, 2021 · Chuhan Wu, Fangzhao Wu, Tao Qi, Yongfeng Huang

Transformer is a powerful model for text understanding. However, it is inefficient due to its quadratic complexity with respect to the input sequence length. Although many methods for Transformer acceleration exist, they are still either inefficient on long sequences or not effective enough. In this paper, we propose Fastformer, an efficient Transformer model based on additive attention. In Fastformer, instead of modeling the pair-wise interactions between tokens, we first use an additive attention mechanism to model global contexts, and then further transform each token representation based on its interaction with the global context representations. In this way, Fastformer can achieve effective context modeling with linear complexity. Extensive experiments on five datasets show that Fastformer is much more efficient than many existing Transformer models while achieving comparable or even better long-text modeling performance.
