Yasemin Bekiroglu

Learning Dynamic Tasks on a Large-scale Soft Robot in a Handful of Trials

Nov 13, 2024

Abstract:Soft robots offer more flexibility, compliance, and adaptability than traditional rigid robots. They are also typically lighter and cheaper to manufacture. However, their use in real-world applications is limited due to modeling challenges and difficulties in integrating effective proprioceptive sensors. Large-scale soft robots ($\approx$ two meters in length) have greater modeling complexity due to increased inertia and related effects of gravity. Common efforts to ease these modeling difficulties, such as assuming simple kinematic and dynamic models, also limit the general capabilities of soft robots and are not applicable to tasks requiring fast, dynamic motion such as throwing and hammering. To overcome these challenges, we propose a data-efficient Bayesian optimization-based approach for learning control policies for dynamic tasks on a large-scale soft robot. Our approach optimizes the task objective function directly from commanded pressures, without requiring approximate kinematics or dynamics as an intermediate step. We demonstrate the effectiveness of our approach through both simulated and real-world experiments.
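
To make the idea of optimizing a task objective directly from commanded pressures concrete, here is a minimal sketch of a Bayesian optimization loop. Everything in it is an assumption for illustration: the one-dimensional normalized pressure, the `toy_task_reward` function standing in for a real robot trial, the RBF kernel, the fixed initial trials, and the upper-confidence-bound acquisition rule are not taken from the paper.

```python
import math

def rbf(a, b, length=0.2):
    """Squared-exponential kernel on scalar inputs (assumed kernel choice)."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)

def cholesky(A):
    """Lower-triangular L with L L^T = A for a symmetric positive definite A."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(max(s, 1e-12)) if i == j else s / L[j][j]
    return L

def forward_sub(L, b):
    """Solve L y = b."""
    y = []
    for i in range(len(b)):
        y.append((b[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i])
    return y

def backward_sub(L, y):
    """Solve L^T x = y."""
    n = len(y)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x

def fit_gp(X, y, noise=1e-4):
    """Factor the kernel matrix once and precompute K^{-1} y."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(X)]
         for i, a in enumerate(X)]
    L = cholesky(K)
    return L, backward_sub(L, forward_sub(L, y))

def predict(X, L, alpha, x_new):
    """Posterior mean and standard deviation of a zero-mean GP at x_new."""
    k = [rbf(a, x_new) for a in X]
    mean = sum(ki * ai for ki, ai in zip(k, alpha))
    v = forward_sub(L, k)
    return mean, math.sqrt(max(1.0 - sum(t * t for t in v), 0.0))

def toy_task_reward(pressure):
    """Hypothetical stand-in for one robot trial; best pressure is 0.63."""
    return -(pressure - 0.63) ** 2

def bayes_opt(objective, n_iter=12, beta=2.0):
    """Maximize `objective` over [0, 1] with a small trial budget."""
    grid = [i / 100 for i in range(101)]   # candidate pressures
    X = [0.1, 0.5, 0.9]                    # fixed initial trials
    y = [objective(x) for x in X]
    for _ in range(n_iter):
        L, alpha = fit_gp(X, y)

        def ucb(x):
            mean, std = predict(X, L, alpha, x)
            return mean + beta * std       # optimism under uncertainty

        x_next = max(grid, key=ucb)
        X.append(x_next)
        y.append(objective(x_next))
    best = max(range(len(X)), key=lambda i: y[i])
    return X[best], y[best], len(X)

best_pressure, best_reward, n_trials = bayes_opt(toy_task_reward)
print(best_pressure, best_reward, n_trials)
```

On a real robot, `toy_task_reward` would be replaced by a function that executes the commanded pressures and scores the outcome, and the pressure vector would usually have more than one dimension.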

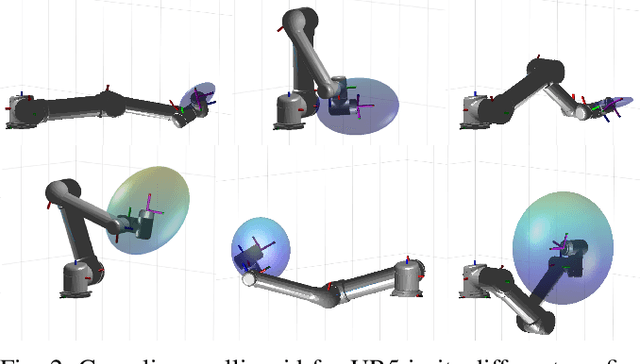

Differentiable Robot Neural Distance Function for Adaptive Grasp Synthesis on a Unified Robotic Arm-Hand System

Sep 28, 2023

Abstract:Grasping is a fundamental skill for robots to interact with their environment. While grasp execution requires coordinated movement of the hand and arm to achieve a collision-free and secure grip, many grasp synthesis studies address arm and hand motion planning independently, leading to potentially unreachable grasps in practical settings. The challenge of determining integrated arm-hand configurations arises from its computational complexity and high-dimensional nature. We address this challenge by presenting a novel differentiable robot neural distance function. Our approach excels in capturing intricate geometry across various joint configurations while preserving differentiability. This innovative representation proves instrumental in efficiently addressing downstream tasks with stringent contact constraints. Leveraging this, we introduce an adaptive grasp synthesis framework that exploits the full potential of the unified arm-hand system for diverse grasping tasks. Our neural joint space distance function achieves an 84.7% error reduction compared to baseline methods. We validated our approaches on a unified robotic arm-hand system that consists of a 7-DoF robot arm and a 16-DoF multi-fingered robotic hand. Results demonstrate that our approach empowers this high-DoF system to generate and execute various arm-hand grasp configurations that adapt to the size of the target objects while ensuring whole-body movements to be collision-free.

A Unifying Variational Framework for Gaussian Process Motion Planning

Sep 02, 2023

Abstract:To control how a robot moves, motion planning algorithms must compute paths in high-dimensional state spaces while accounting for physical constraints related to motors and joints, generating smooth and stable motions, avoiding obstacles, and preventing collisions. A motion planning algorithm must therefore balance competing demands, and should ideally incorporate uncertainty to handle noise, model errors, and facilitate deployment in complex environments. To address these issues, we introduce a framework for robot motion planning based on variational Gaussian Processes, which unifies and generalizes various probabilistic-inference-based motion planning algorithms. Our framework provides a principled and flexible way to incorporate equality-based, inequality-based, and soft motion-planning constraints during end-to-end training, is straightforward to implement, and provides both interval-based and Monte-Carlo-based uncertainty estimates. We conduct experiments using different environments and robots, comparing against baseline approaches based on the feasibility of the planned paths, and obstacle avoidance quality. Results show that our proposed approach yields a good balance between success rates and path quality.

Grasp Transfer based on Self-Aligning Implicit Representations of Local Surfaces

Aug 15, 2023

Abstract:Objects we interact with and manipulate often share similar parts, such as handles, that allow us to transfer our actions flexibly due to their shared functionality. This work addresses the problem of transferring a grasp experience or a demonstration to a novel object that shares shape similarities with objects the robot has previously encountered. Existing approaches for solving this problem are typically restricted to a specific object category or a parametric shape. Our approach, however, can transfer grasps associated with implicit models of local surfaces shared across object categories. Specifically, we employ a single expert grasp demonstration to learn an implicit local surface representation model from a small dataset of object meshes. At inference time, this model is used to transfer grasps to novel objects by identifying the most geometrically similar surfaces to the one on which the expert grasp is demonstrated. Our model is trained entirely in simulation and is evaluated on simulated and real-world objects that are not seen during training. Evaluations indicate that grasp transfer to unseen object categories using this approach can be successfully performed both in simulation and real-world experiments. The simulation results also show that the proposed approach leads to better spatial precision and grasp accuracy compared to a baseline approach.

Neural Field Movement Primitives for Joint Modelling of Scenes and Motions

Aug 15, 2023

Abstract:This paper presents a novel Learning from Demonstration (LfD) method that uses neural fields to learn new skills efficiently and accurately. It achieves this by utilizing a shared embedding to learn both scene and motion representations in a generative way. Our method smoothly maps each expert demonstration to a scene-motion embedding and learns to model them without requiring hand-crafted task parameters or large datasets. It achieves data efficiency by enforcing scene and motion generation to be smooth with respect to changes in the embedding space. At inference time, our method can retrieve scene-motion embeddings using test-time optimization and generate precise motion trajectories for novel scenes. The proposed method is versatile and can employ images, 3D shapes, and any other scene representations that can be modeled using neural fields. Additionally, it can generate both end-effector positions and joint angle-based trajectories. Our method is evaluated on tasks that require accurate motion trajectory generation, where the underlying task parametrization is based on object positions and geometric scene changes. Experimental results demonstrate that the proposed method outperforms the baseline approaches and generalizes to novel scenes. Furthermore, in real-world experiments, we show that our method can successfully model multi-valued trajectories, that it is robust to distractor objects introduced at inference time, and that it can generate 6D motions.

Sliding Touch-based Exploration for Modeling Unknown Object Shape with Multi-fingered Hands

Aug 01, 2023

Abstract:Efficient and accurate 3D object shape reconstruction contributes significantly to the success of a robot's physical interaction with its environment. Acquiring accurate shape information about unknown objects is challenging, especially in unstructured environments, e.g. the vision sensors may only be able to provide a partial view. To address this issue, tactile sensors could be employed to extract local surface information for more robust unknown object shape estimation. In this paper, we propose a novel approach for efficient unknown 3D object shape exploration and reconstruction using a multi-fingered hand equipped with tactile sensors and a depth camera only providing a partial view. We present a multi-finger sliding touch strategy for efficient shape exploration using a Bayesian Optimization approach and a single-leader-multi-follower strategy for multi-finger smooth local surface perception. We evaluate our proposed method by estimating the 3D shape of objects from the YCB and OCRTOC datasets based on simulation and real robot experiments. The proposed approach yields successful reconstruction results relying on only a few continuous sliding touches. Experimental results demonstrate that our method is able to model unknown objects in an efficient and accurate way.

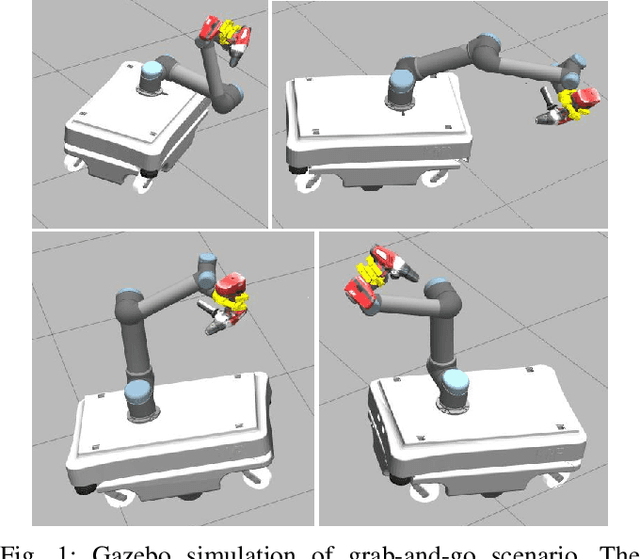

Benchmarking local motion planners for navigation of mobile manipulators

Nov 03, 2022

Abstract:There are various trajectory planners for mobile manipulators. It is often challenging to compare their performance under similar circumstances due to differences in hardware, dissimilarity of tasks and objectives, as well as uncertainties in measurements and operating environments. In this paper, we propose a simulation framework to evaluate how well local trajectory planners generate smooth, dynamically and kinematically feasible trajectories for mobile manipulators in the same environment. We focus on local planners as they are key components that provide smooth trajectories while carrying a load, react to dynamic obstacles, and avoid collisions. We evaluate two prominent local trajectory planners, Dynamic-Window Approach (DWA) and Timed Elastic Band (TEB), using the metrics that we introduce. Moreover, our software solution is applicable to any other local planner used in the Robot Operating System (ROS) framework, without additional programming effort.

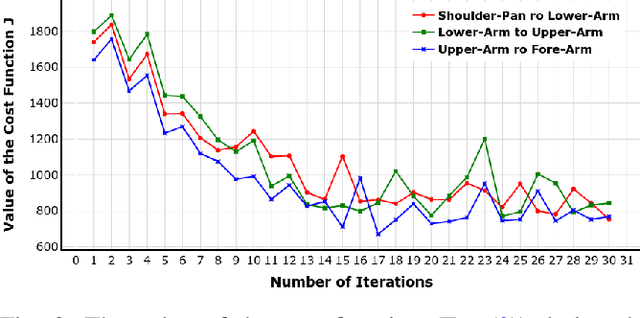

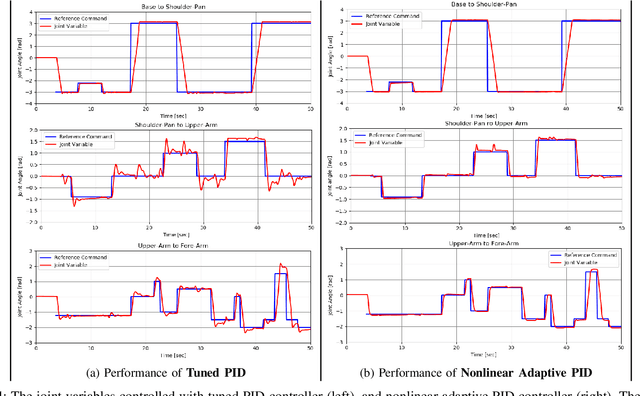

Bayesian Optimization-based Nonlinear Adaptive PID Controller Design for Robust Mobile Manipulation

Jul 04, 2022

Abstract:In this paper, we propose to use a nonlinear adaptive PID controller to regulate the joint variables of a mobile manipulator. The motion of the mobile base imposes undue disturbances on the joint controllers of the manipulator. In designing a conventional PID controller, one must trade off the performance and agility of the closed-loop system against its stability margins. The proposed nonlinear adaptive PID controller relaxes the need for such a compromise by adapting the gains according to the magnitude of the error, without expert tuning. As a result, the system can respond quickly with damped overshoot in the output and track the reference as closely as possible, even in the presence of external disturbances and modeling uncertainties. We employ a Bayesian optimization approach to choose the parameters of the nonlinear adaptive PID controller so as to achieve the best performance in tracking the reference input and rejecting disturbances. The results demonstrate that a well-designed nonlinear adaptive PID controller can effectively regulate a mobile manipulator's joint variables while the robot carries an unspecified heavy load and the base moves abruptly.
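
The following is a small sketch of what "adapting the gains according to the magnitude of the error" can look like. It is not the controller from the paper: the hyperbolic-secant gain shape, the choice to adapt only the proportional gain, the first-order toy plant, the step disturbance, and all numeric values are assumptions made for illustration. In the paper's setting, parameters such as `base`, `span`, and `sharpness` would be the quantities chosen by Bayesian optimization.

```python
import math

def adaptive_gain(base, span, sharpness, error):
    """Gain rises smoothly from `base` toward `base + span` as |error| grows.

    Assumed shape: base + span * (1 - sech(sharpness * error)).
    """
    return base + span * (1.0 - 1.0 / math.cosh(sharpness * error))

def simulate(t_end=10.0, dt=0.01, reference=1.0):
    """Track a step reference on a toy plant x' = -x + u + d (assumed model)."""
    x = 0.0
    integral = 0.0
    prev_error = reference - x
    ki, kd = 4.0, 0.05            # fixed integral and derivative gains
    history = []
    for step in range(int(round(t_end / dt))):
        t = step * dt
        # Step disturbance, loosely standing in for an abrupt base movement.
        disturbance = 0.5 if t >= 5.0 else 0.0
        error = reference - x
        integral += error * dt
        derivative = (error - prev_error) / dt
        # Large errors get a stiff response, small errors a gentle one.
        kp = adaptive_gain(base=2.0, span=4.0, sharpness=3.0, error=error)
        u = kp * error + ki * integral + kd * derivative
        x += dt * (-x + u + disturbance)   # explicit Euler integration
        prev_error = error
        history.append((t, x, kp))
    return history

history = simulate()
final_t, final_x, final_kp = history[-1]
print(final_x, final_kp)
```

The proportional gain is high at the start of the step and right after the disturbance, then relaxes toward its base value as the error shrinks, which is the mechanism that lets the loop be fast without keeping a high gain near the setpoint.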

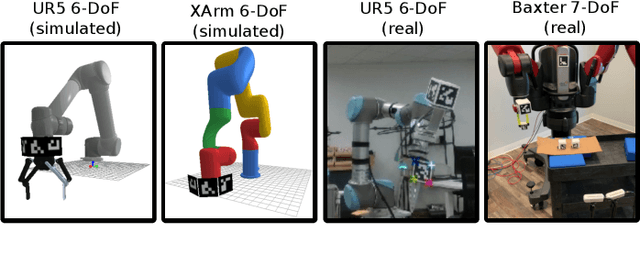

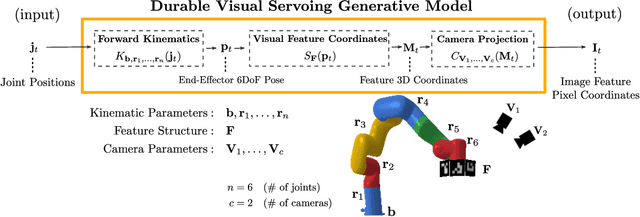

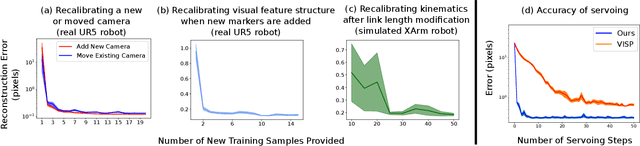

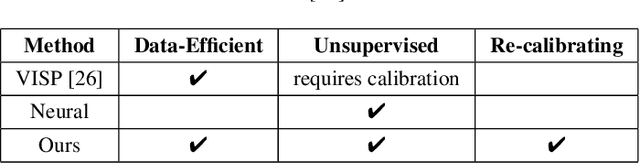

DURableVS: Data-efficient Unsupervised Recalibrating Visual Servoing via online learning in a structured generative model

Feb 08, 2022

Abstract:Visual servoing enables robotic systems to perform accurate closed-loop control, which is required in many applications. However, existing methods either require precise calibration of the robot kinematic model and cameras or use neural architectures that require large amounts of data to train. In this work, we present a method for unsupervised learning of visual servoing that does not require any prior calibration and is extremely data-efficient. Our key insight is that visual servoing does not depend on identifying the veridical kinematic and camera parameters, but instead only on an accurate generative model of image feature observations from the joint positions of the robot. We demonstrate that with our model architecture and learning algorithm, we can consistently learn accurate models from less than 50 training samples (which amounts to less than 1 min of unsupervised data collection), and that such data-efficient learning is not possible with standard neural architectures. Further, we show that by using the generative model in the loop and learning online, we can enable a robotic system to recover from calibration errors and to detect and quickly adapt to possibly unexpected changes in the robot-camera system (e.g. bumped camera, new objects).

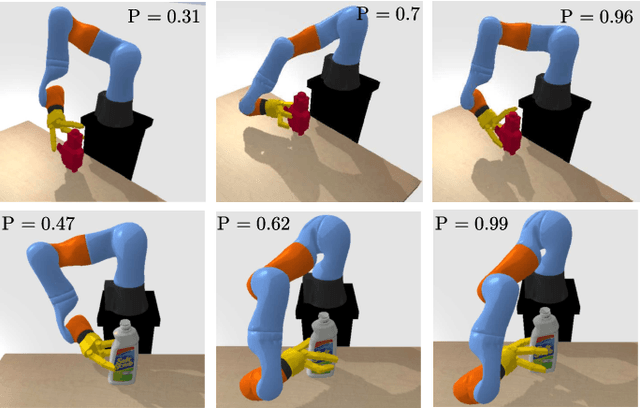

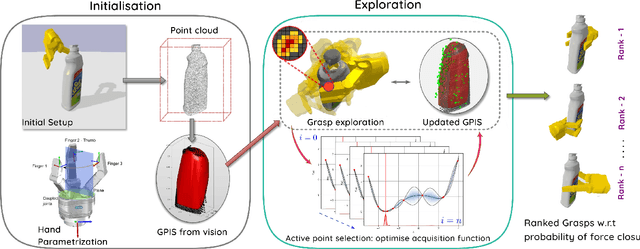

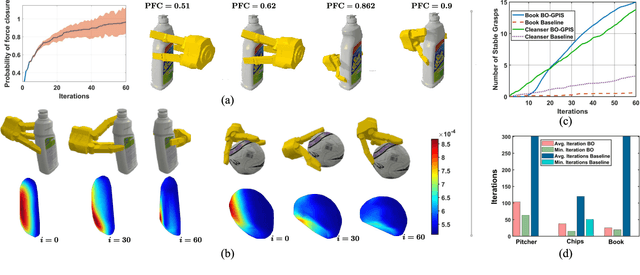

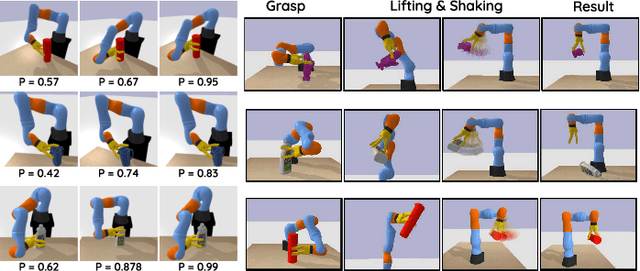

Simultaneous Tactile Exploration and Grasp Refinement for Unknown Objects

Feb 28, 2021

Abstract:This paper addresses the problem of simultaneously exploring an unknown object to model its shape, using tactile sensors on robotic fingers, while also improving finger placement to optimise grasp stability. In many situations, a robot will have only a partial camera view of the near side of an observed object, for which the far side remains occluded. We show how an initial grasp attempt, based on an initial guess of the overall object shape, yields tactile glances of the far side of the object which enable the shape estimate and consequently the successive grasps to be improved. We propose a grasp exploration approach using a probabilistic representation of shape, based on Gaussian Process Implicit Surfaces. This representation enables initial partial vision data to be augmented with additional data from successive tactile glances. This is combined with a probabilistic estimate of grasp quality to refine grasp configurations. When choosing the next set of finger placements, a bi-objective optimisation method is used to jointly maximise grasp quality and improve shape representation during successive grasp attempts. Experimental results show that the proposed approach yields stable grasp configurations more efficiently than a baseline method, while also yielding an improved shape estimate of the grasped object.
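
As a rough illustration of how a Gaussian Process Implicit Surface can combine partial vision data with a tactile glance, the sketch below models a 2D unit circle. The setup is assumed, not taken from the paper: surface points carry the value 0, one interior point carries -1, a few exterior points carry +1, the kernel is an RBF with a hand-picked length scale, and `far_side` is a hypothetical contact location on the occluded side.

```python
import math

def rbf(a, b, length=0.5):
    """Squared-exponential kernel on 2D points (assumed kernel choice)."""
    d2 = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-0.5 * d2 / length ** 2)

def cholesky(A):
    """Lower-triangular L with L L^T = A for a symmetric positive definite A."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(max(s, 1e-12)) if i == j else s / L[j][j]
    return L

def forward_sub(L, b):
    """Solve L y = b."""
    y = []
    for i in range(len(b)):
        y.append((b[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i])
    return y

def backward_sub(L, y):
    """Solve L^T x = y."""
    n = len(y)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x

def gpis_predict(points, values, query, noise=1e-4):
    """Posterior mean and std of the implicit function at `query`."""
    n = len(points)
    K = [[rbf(points[i], points[j]) + (noise if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    L = cholesky(K)
    alpha = backward_sub(L, forward_sub(L, values))
    k_q = [rbf(p, query) for p in points]
    mean = sum(k * a for k, a in zip(k_q, alpha))
    v = forward_sub(L, k_q)
    return mean, math.sqrt(max(1.0 - sum(t * t for t in v), 0.0))

# "Vision" data: surface points on the near side of a unit circle (value 0).
near_side = [(math.cos(math.radians(a)), math.sin(math.radians(a)))
             for a in (-60, -30, 0, 30, 60)]
points = near_side + [(0.0, 0.0)] + [(2.0, 0.0), (0.0, 2.0), (-2.0, 0.0), (0.0, -2.0)]
values = [0.0] * len(near_side) + [-1.0] + [1.0] * 4

far_side = (-1.0, 0.0)  # hypothetical tactile contact on the occluded side
_, std_before = gpis_predict(points, values, far_side)
_, std_after = gpis_predict(points + [far_side], values + [0.0], far_side)
print(std_before, std_after)
```

The drop in predictive standard deviation at `far_side` after the contact is added is the kind of signal an exploration strategy could trade off against grasp quality when choosing the next finger placements.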
