Yanxi Zhao

MemeReaCon: Probing Contextual Meme Understanding in Large Vision-Language Models

May 23, 2025

Abstract: Memes have emerged as a popular form of multimodal online communication, and their interpretation depends heavily on the specific context in which they appear. Current approaches predominantly focus on isolated meme analysis, either for harmful content detection or standalone interpretation, overlooking a fundamental challenge: the same meme can express different intents depending on its conversational context. This oversight creates an evaluation gap: although humans intuitively recognize how context shapes meme interpretation, Large Vision Language Models (LVLMs) struggle to understand context-dependent meme intent. To address this limitation, we introduce MemeReaCon, a novel benchmark specifically designed to evaluate how LVLMs understand memes in their original context. We collected memes from five different Reddit communities, keeping each meme's image, the post text, and user comments together. We carefully labeled how the text and meme work together, what the poster intended, how the meme is structured, and how the community responded. Our tests with leading LVLMs show a clear weakness: models either fail to interpret critical information in the context, or focus too heavily on visual details while overlooking communicative purpose. MemeReaCon thus serves both as a diagnostic tool that exposes current limitations and as a challenging benchmark to drive the development of LVLMs with more sophisticated context-aware understanding.

Positional-Spectral-Temporal Attention in 3D Convolutional Neural Networks for EEG Emotion Recognition

Nov 08, 2021

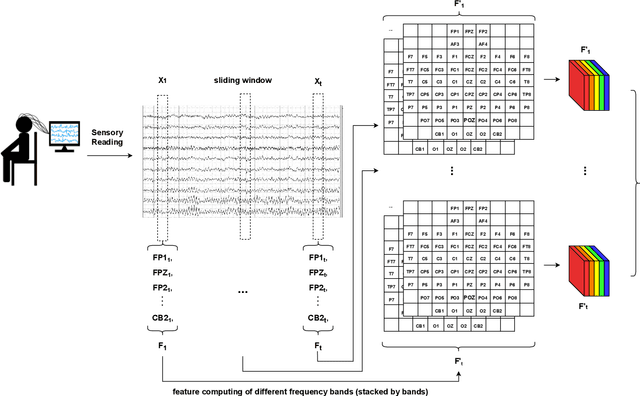

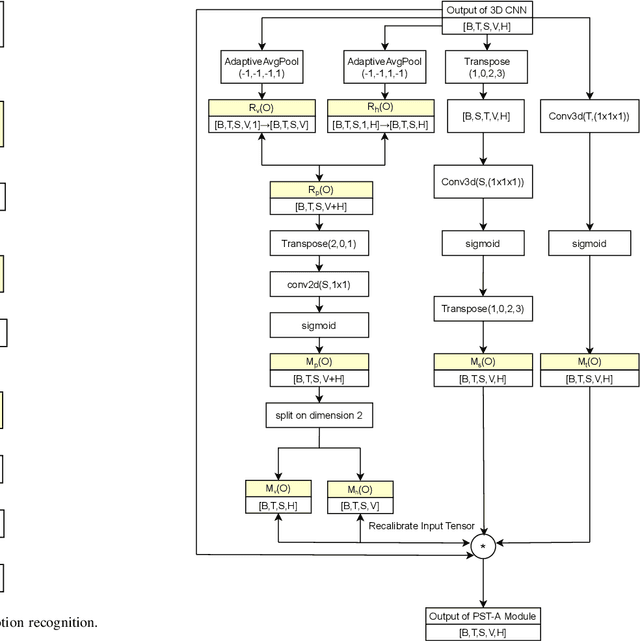

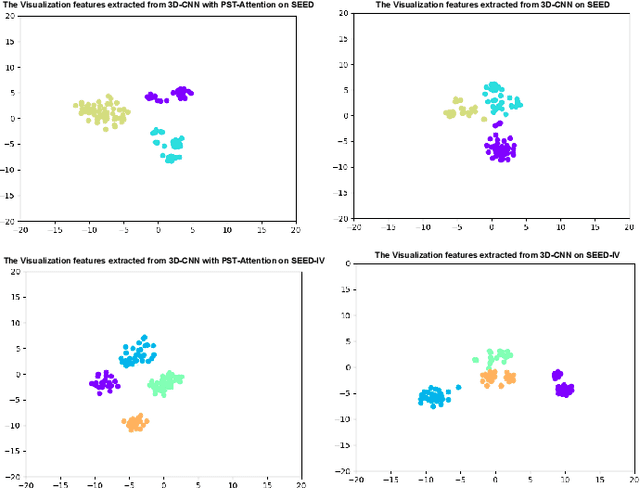

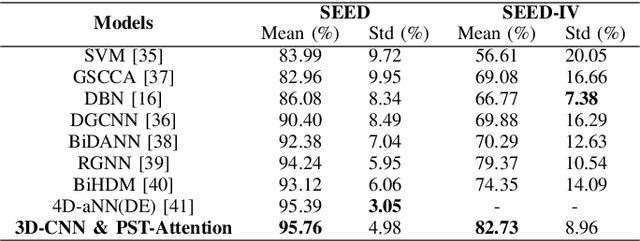

Abstract: Recognizing human emotions plays a critical role in daily communication. Neuroscience has demonstrated that different emotional states present different degrees of activation across brain regions, EEG frequency bands, and time stamps. In this paper, we propose a novel structure to explore informative EEG features for emotion recognition. The proposed module, denoted PST-Attention, consists of Positional, Spectral, and Temporal Attention modules that extract more discriminative EEG features. Specifically, the Positional Attention module captures the regions activated by different emotions in the spatial dimension. The Spectral and Temporal Attention modules assign weights to different frequency bands and temporal slices, respectively. Our method is adaptive and efficient, and can be fitted into 3D Convolutional Neural Networks (3D-CNN) as a plug-in module. We conduct experiments on two real-world datasets. A 3D-CNN combined with our module achieves promising results, demonstrating that PST-Attention is able to capture stable patterns for emotion recognition from EEG.
