Yanliang Zhu

Robust Trajectory Forecasting for Multiple Intelligent Agents in Dynamic Scene

May 27, 2020

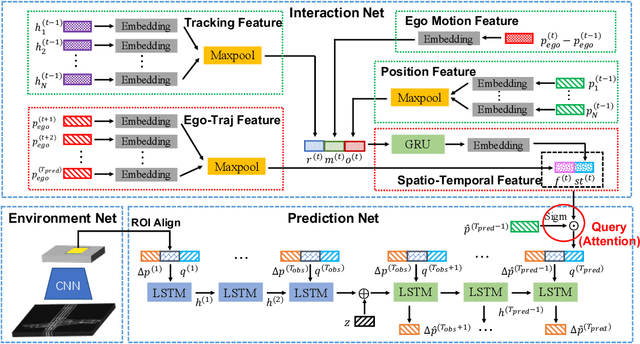

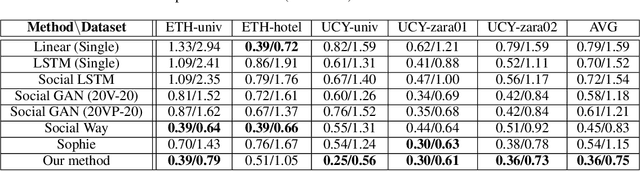

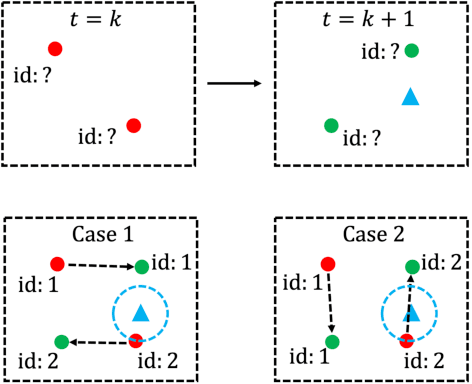

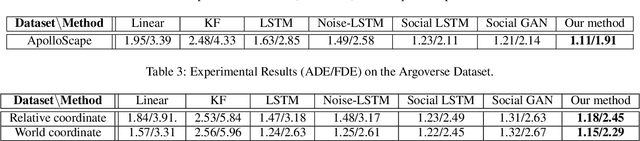

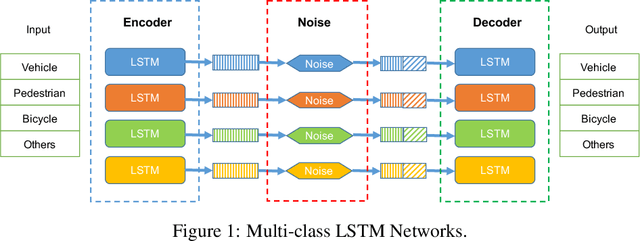

Abstract:Trajectory forecasting, or trajectory prediction, of multiple interacting agents in dynamic scenes, is an important problem for many applications, such as robotic systems and autonomous driving. The problem is a great challenge because of the complex interactions among the agents and their interactions with the surrounding scenes. In this paper, we present a novel method for the robust trajectory forecasting of multiple intelligent agents in dynamic scenes. The proposed method consists of three major interrelated components: an interaction net for global spatiotemporal interactive feature extraction, an environment net for decoding dynamic scenes (i.e., the surrounding road topology of an agent), and a prediction net that combines the spatiotemporal feature, the scene feature, the past trajectories of agents and some random noise for the robust trajectory prediction of agents. Experiments on pedestrian-walking and vehicle-pedestrian heterogeneous datasets demonstrate that the proposed method outperforms the state-of-the-art prediction methods in terms of prediction accuracy.

CVPR 2019 WAD Challenge on Trajectory Prediction and 3D Perception

Apr 06, 2020

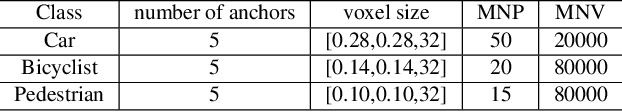

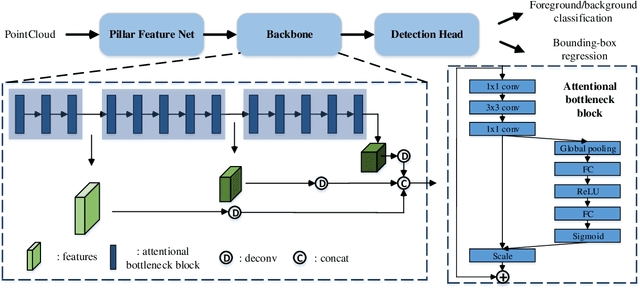

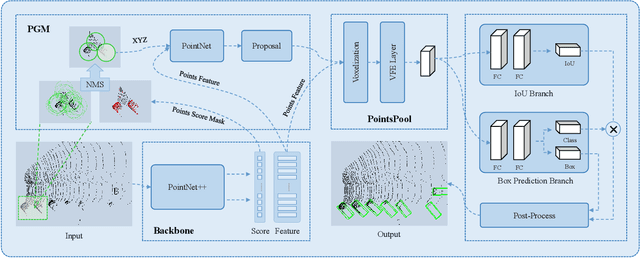

Abstract:This paper reviews the CVPR 2019 challenge on Autonomous Driving. Baidu's Robotics and Autonomous Driving Lab (RAL) providing 150 minutes labeled Trajectory and 3D Perception dataset including about 80k lidar point cloud and 1000km trajectories for urban traffic. The challenge has two tasks in (1) Trajectory Prediction and (2) 3D Lidar Object Detection. There are more than 200 teams submitted results on Leaderboard and more than 1000 participants attended the workshop.

VisionNet: A Drivable-space-based Interactive Motion Prediction Network for Autonomous Driving

Jan 08, 2020

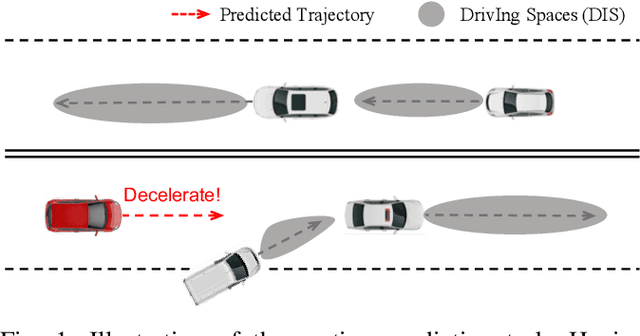

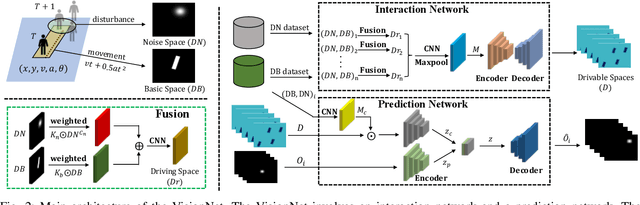

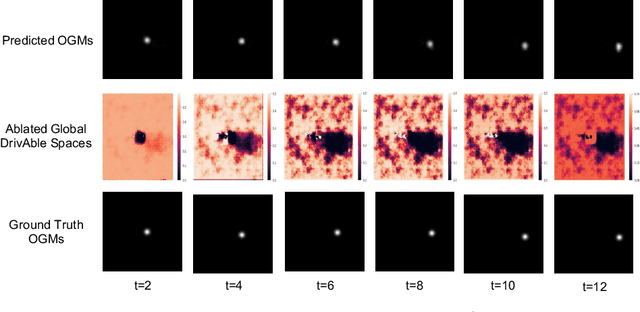

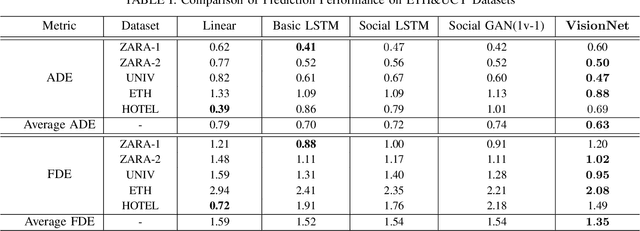

Abstract:The comprehension of environmental traffic situation largely ensures the driving safety of autonomous vehicles. Recently, the mission has been investigated by plenty of researches, while it is hard to be well addressed due to the limitation of collective influence in complex scenarios. These approaches model the interactions through the spatial relations between the target obstacle and its neighbors. However, they oversimplify the challenge since the training stage of the interactions lacks effective supervision. As a result, these models are far from promising. More intuitively, we transform the problem into calculating the interaction-aware drivable spaces and propose the CNN-based VisionNet for trajectory prediction. The VisionNet accepts a sequence of motion states, i.e., location, velocity, and acceleration, to estimate the future drivable spaces. The reified interactions significantly increase the interpretation ability of the VisionNet and refine the prediction. To further advance the performance, we propose an interactive loss to guide the generation of the drivable spaces. Experiments on multiple public datasets demonstrate the effectiveness of the proposed VisionNet.

StarNet: Pedestrian Trajectory Prediction using Deep Neural Network in Star Topology

Jun 05, 2019

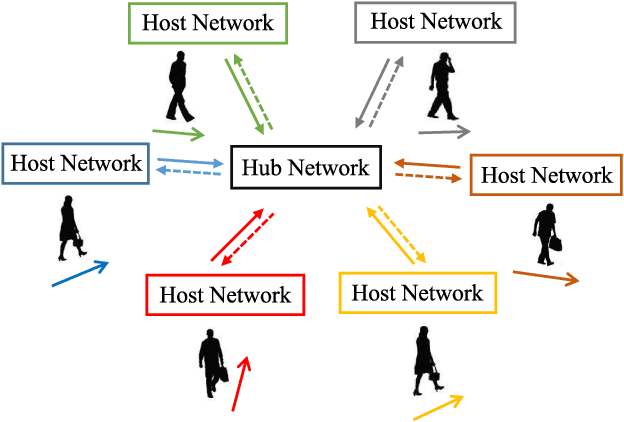

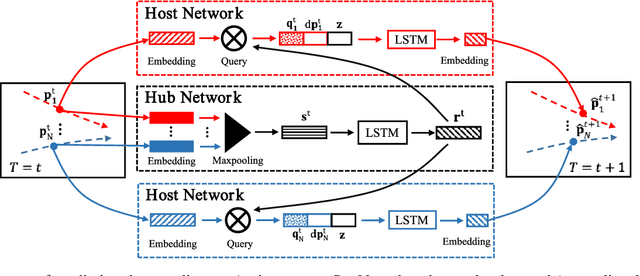

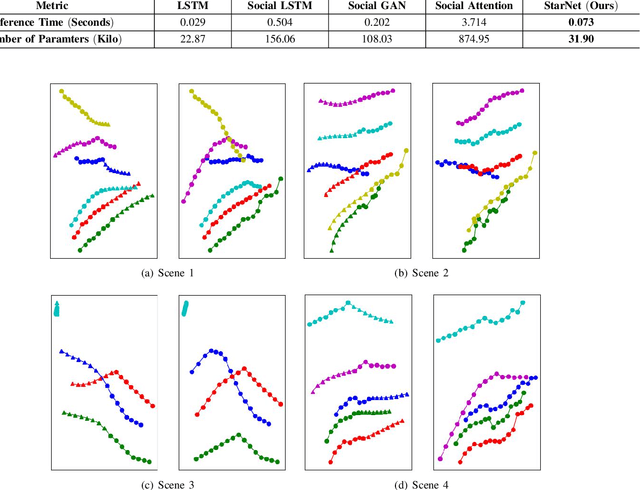

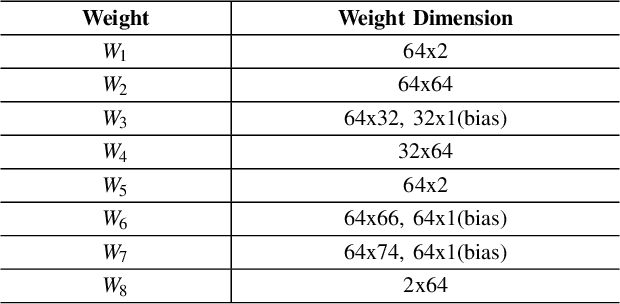

Abstract:Pedestrian trajectory prediction is crucial for many important applications. This problem is a great challenge because of complicated interactions among pedestrians. Previous methods model only the pairwise interactions between pedestrians, which not only oversimplifies the interactions among pedestrians but also is computationally inefficient. In this paper, we propose a novel model StarNet to deal with these issues. StarNet has a star topology which includes a unique hub network and multiple host networks. The hub network takes observed trajectories of all pedestrians to produce a comprehensive description of the interpersonal interactions. Then the host networks, each of which corresponds to one pedestrian, consult the description and predict future trajectories. The star topology gives StarNet two advantages over conventional models. First, StarNet is able to consider the collective influence among all pedestrians in the hub network, making more accurate predictions. Second, StarNet is computationally efficient since the number of host network is linear to the number of pedestrians. Experiments on multiple public datasets demonstrate that StarNet outperforms multiple state-of-the-arts by a large margin in terms of both accuracy and efficiency.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge