Yanfei Liu

Break Stylistic Sophon: Are We Really Meant to Confine the Imagination in Style Transfer?

Jun 18, 2025

Abstract: In this study, we introduce StyleWallfacer, a unified training and inference framework that addresses several issues encountered in the style transfer process of traditional methods and unifies the framework across different tasks. The framework is designed to enable artist-level style transfer and text-driven stylization. First, we propose a semantic-based style injection method that uses BLIP to generate text descriptions strictly aligned with the semantics of the style image in CLIP space. By leveraging a large language model to remove style-related content from these descriptions, we create a semantic gap. This gap is then used to fine-tune the model, enabling efficient and drift-free injection of style knowledge. Second, we propose a data augmentation strategy based on human feedback, incorporating high-quality samples generated early in the fine-tuning process into the training set to facilitate progressive learning and significantly reduce overfitting. Finally, we design a training-free triple diffusion process using the fine-tuned model, which manipulates the features of self-attention layers in a manner similar to the cross-attention mechanism. Specifically, in the generation process, the key and value of the content-related process are replaced with those of the style-related process to inject style while maintaining text control over the model. We also introduce query preservation to mitigate disruptions to the original content. Under such a design, we achieve high-quality image-driven style transfer and text-driven stylization, delivering artist-level style transfer results while preserving the original image content. Moreover, we achieve image color editing during the style transfer process for the first time.
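The key/value replacement with query preservation described in this abstract can be illustrated with a toy single-head attention in plain Python. This is only a sketch under stated assumptions, not the authors' implementation: the function names (`attention`, `style_injected_attention`), the use of plain lists instead of diffusion-model feature maps, and the scaled dot-product form are all assumptions made for illustration.

```python
import math

def softmax(row):
    # Numerically stable softmax over one row of attention scores.
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    # Scaled dot-product attention for small list-of-lists inputs.
    d = len(q[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        w = softmax(scores)
        out.append([sum(wj * vj[c] for wj, vj in zip(w, v))
                    for c in range(len(v[0]))])
    return out

def style_injected_attention(q_content, k_style, v_style):
    # Hypothetical helper: keep the content branch's queries ("query
    # preservation") and swap in the style branch's keys and values.
    return attention(q_content, k_style, v_style)

# Toy usage: three content tokens attend over two style tokens.
q_content = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
k_style = [[1.0, 0.0], [0.0, 1.0]]
v_style = [[10.0, 0.0], [0.0, 10.0]]
mixed = style_injected_attention(q_content, k_style, v_style)
```

Each output row is a weighted average of the style values, with weights determined by the content queries, so the spatial layout follows the content while the features come from the style branch.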

LE-NAS: Learning-based Ensemble with NAS for Dose Prediction

Jun 12, 2021

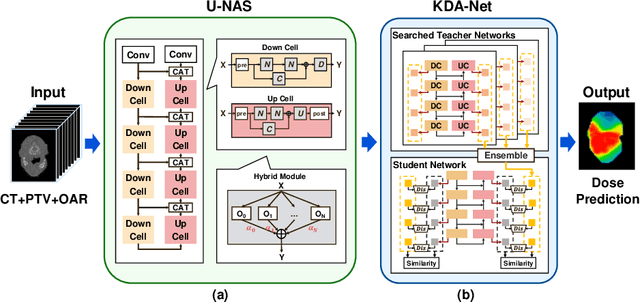

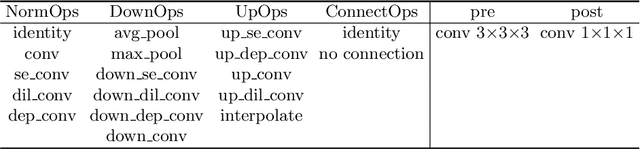

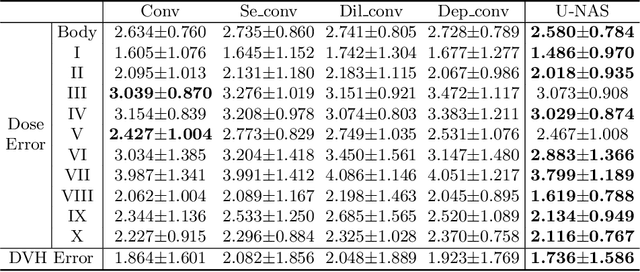

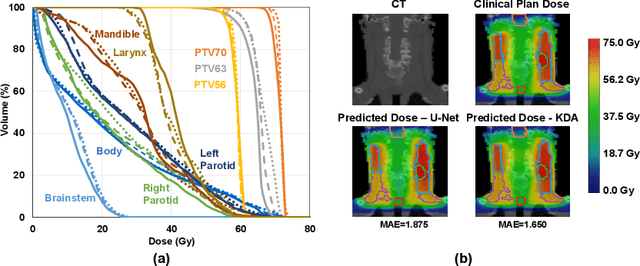

Abstract: Radiation therapy treatment planning is a complex process, as the target dose prescription and normal tissue sparing are conflicting objectives. Automated and accurate dose prediction for radiation therapy planning is in high demand. In this study, we propose a novel learning-based ensemble approach, named LE-NAS, which integrates neural architecture search (NAS) with knowledge distillation for 3D radiotherapy dose prediction. Specifically, the prediction network first exhaustively searches each block from an enormous architecture space. Then, multiple architectures with promising performance and diversity are selected. To reduce the inference time, we adopt the teacher-student paradigm, treating the combination of diverse outputs from multiple searched networks as supervision to guide the training of the student network. In addition, we apply adversarial learning to optimize the student network to recover the knowledge in the teacher networks. To the best of our knowledge, we are the first to investigate the combination of NAS and knowledge distillation. The proposed method has been evaluated on the public OpenKBP dataset, and experimental results demonstrate the effectiveness of our method and its superior performance to the state-of-the-art method.
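The teacher-student step in this abstract can be sketched as a loss that mixes ground-truth supervision with supervision from the combined teacher outputs. This is a minimal illustration under assumptions, not the paper's method: averaging the teachers, the mean-absolute-error terms, the weight `alpha`, and all function names are hypothetical, and the adversarial term mentioned in the abstract is omitted.

```python
def ensemble_target(teacher_preds):
    # Assumption: combine the teachers by a voxel-wise mean. The abstract
    # only says "combination of diverse outputs", not how they are combined.
    n = len(teacher_preds)
    return [sum(vals) / n for vals in zip(*teacher_preds)]

def mean_abs_error(pred, target):
    # Mean absolute error over flattened dose values.
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)

def student_loss(student_pred, ground_truth, teacher_preds, alpha=0.5):
    # Hypothetical weighting between the ground-truth term and the
    # distillation term; alpha is an illustrative parameter.
    soft = ensemble_target(teacher_preds)
    return (alpha * mean_abs_error(student_pred, ground_truth)
            + (1 - alpha) * mean_abs_error(student_pred, soft))

# Toy usage with two teachers and two "voxels".
teachers = [[1.0, 2.0], [3.0, 4.0]]
loss = student_loss([2.0, 3.0], [4.0, 3.0], teachers)
```

At inference time only the student would be evaluated, which is where the reduction in inference time comes from.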
