Xuequan Lu

Content and Context Features for Scene Image Representation

Jun 05, 2020

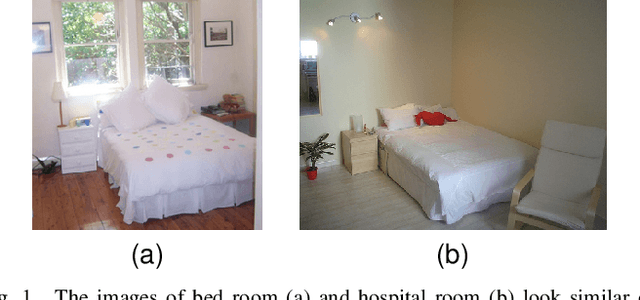

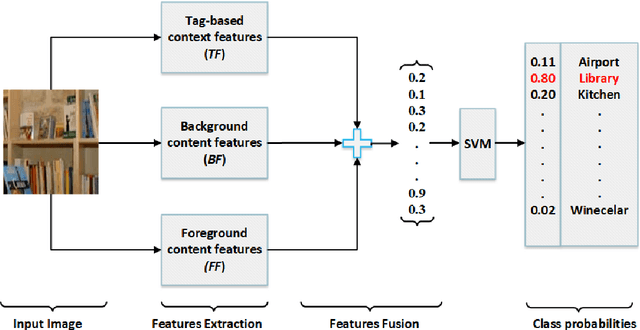

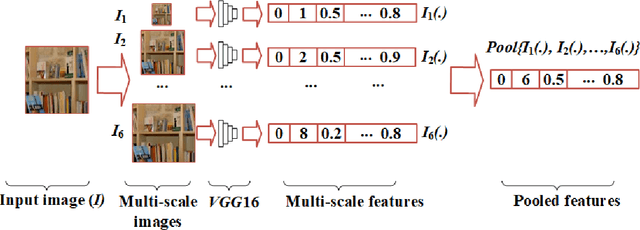

Abstract: Existing research in scene image classification has focused on either content features (e.g., visual information) or context features (e.g., annotations). Because the two capture different, potentially complementary information that helps discriminate images of different classes, we hypothesize that fusing them will improve classification results. In this paper, we propose new techniques to compute content features and context features, and then fuse them. For content features, we design multi-scale deep features based on background and foreground information in images. For context features, we use annotations of similar images available on the web to design filter words (a codebook). Our experiments on three widely used benchmark scene datasets, using a support vector machine classifier, show that our proposed context and content features produce better results than existing context and content features, respectively. The fusion of the two proposed feature types significantly outperforms numerous state-of-the-art features.

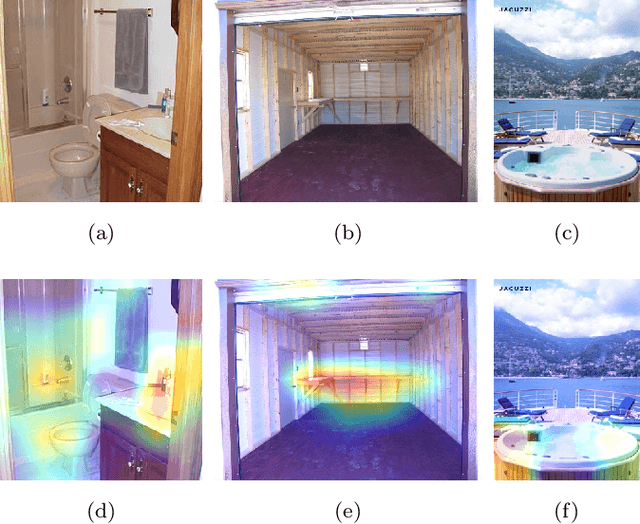

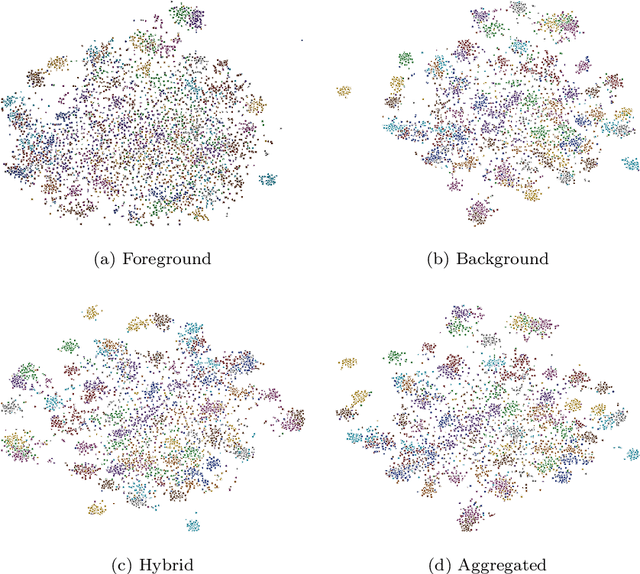

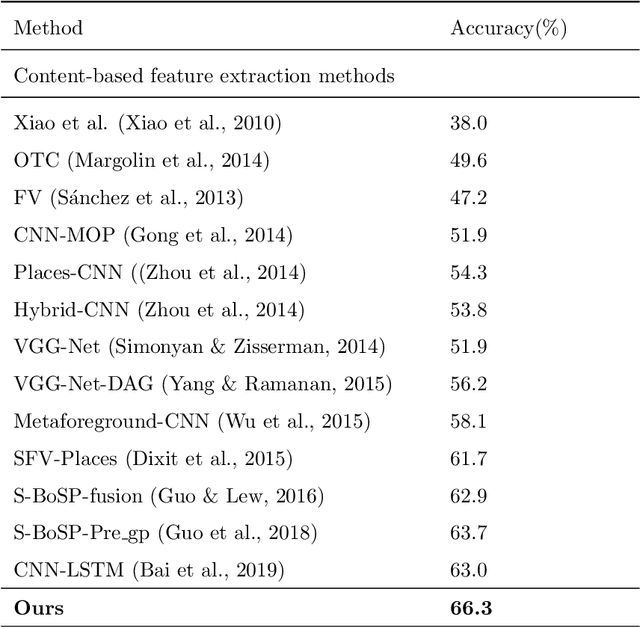

Scene Image Representation by Foreground, Background and Hybrid Features

Jun 05, 2020

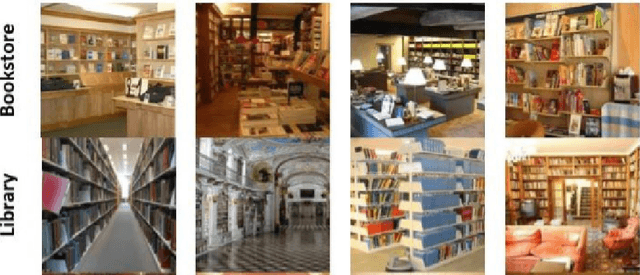

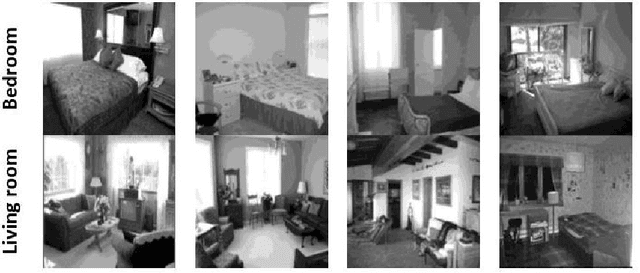

Abstract: Previous deep learning methods for representing scene images primarily consider either foreground or background information as the discriminating clue for the classification task. However, scene images also require additional (hybrid) information to cope with inter-class similarity and intra-class variation. In this paper, we propose to use hybrid features in addition to foreground and background features to represent scene images. We hypothesize that these three types of information can jointly represent scene images more accurately. To this end, we adopt three VGG-16 architectures pre-trained on the ImageNet, Places, and Hybrid (both ImageNet and Places) datasets to extract foreground, background, and hybrid information, respectively. These three types of deep features are then aggregated to form our final features for representing scene images. Extensive experiments on two large benchmark scene datasets (MIT-67 and SUN-397) show that our method produces state-of-the-art classification performance.

Spoof Face Detection Via Semi-Supervised Adversarial Training

May 22, 2020

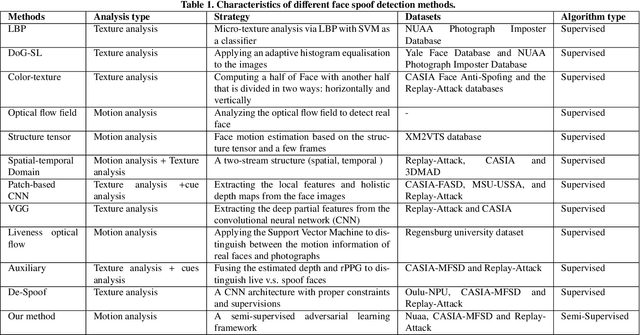

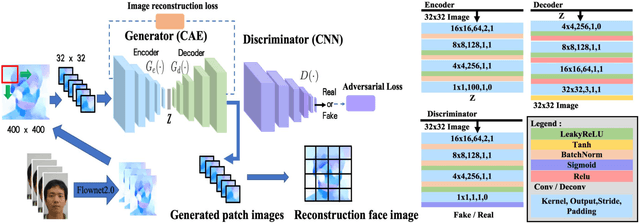

Abstract: Face spoofing poses severe security threats to face recognition systems. Previous anti-spoofing works focused on supervised techniques, typically with either binary or auxiliary supervision. Most of them suffer from limited robustness and generalization, especially in the cross-dataset setting. In this paper, we propose a semi-supervised adversarial learning framework for spoof face detection, which largely relaxes the supervision condition. To capture the underlying structure of live face data in the latent representation space, we propose to train on live face data only, with a convolutional Encoder-Decoder network acting as a Generator. Meanwhile, we add a second convolutional network serving as a Discriminator. The generator and discriminator are trained by competing with each other while collaborating to understand the underlying concept of the normal class (live faces). Since spoof face detection is video based (i.e., it involves temporal information), we take optical flow maps computed from consecutive video frames as input. Our approach requires no spoof faces for training, and is thus robust and general across different types of spoof, even unknown ones. Extensive experiments on intra- and cross-dataset tests show that our semi-supervised method achieves better or comparable results to state-of-the-art supervised techniques.

Deep Feature-preserving Normal Estimation for Point Cloud Filtering

Apr 24, 2020

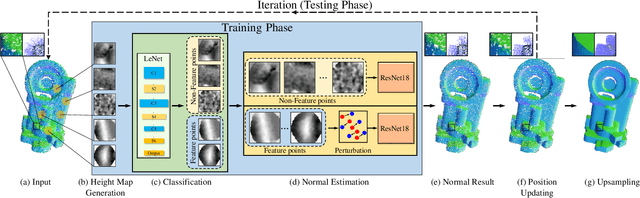

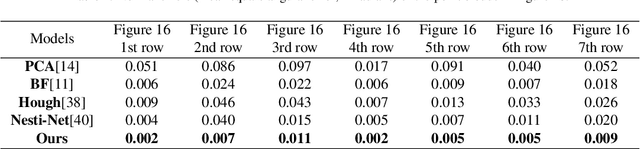

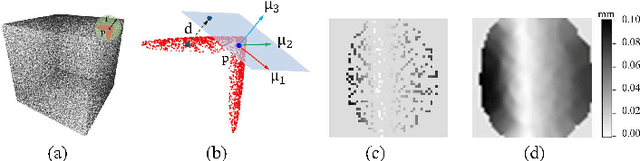

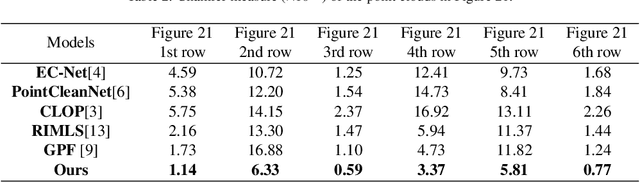

Abstract: Point cloud filtering, the main bottleneck of which is removing noise (outliers) while preserving geometric features, is a fundamental problem in the 3D field. Two-step schemes involving normal estimation and position update have been shown to produce promising results. Nevertheless, current normal estimation methods, including optimization-based and deep learning-based ones, often either have limited automation or cannot preserve sharp features. In this paper, we propose a novel feature-preserving normal estimation method for point cloud filtering. It is a learning method and thus predicts normals automatically. In the training phase, we first generate patch-based samples, which are then fed to a classification network to classify feature and non-feature points. We then train on the samples of feature and non-feature points separately to achieve decent results. At test time, given a noisy point cloud, its normals can be estimated automatically. For further point cloud filtering, we iterate the above normal estimation and an existing position update algorithm a few times. Various experiments demonstrate that our method outperforms state-of-the-art normal estimation methods and point cloud filtering techniques, both qualitatively and quantitatively.

SHX: Search History Driven Crossover for Real-Coded Genetic Algorithm

Mar 30, 2020

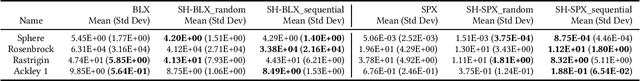

Abstract: In evolutionary algorithms, genetic operators iteratively generate new offspring, which constitute a potentially valuable search history. To boost the performance of crossover in the real-coded genetic algorithm (RCGA), in this paper we propose to exploit the search history cached so far, in an online fashion during the iteration. Specifically, surviving individuals over the past few generations are collected and stored in an archive to form the search history. We introduce a simple yet effective crossover model driven by the search history (abbreviated as SHX). In particular, the search history is clustered and each cluster is assigned a score for SHX. In essence, the proposed SHX is a data-driven method which exploits the search history to perform offspring selection after offspring generation. Since no additional fitness evaluations are needed, SHX is favorable for tasks with a limited budget or expensive fitness evaluations. We experimentally verify the effectiveness of SHX on 4 benchmark functions. Quantitative results show that SHX can significantly enhance the performance of RCGA in terms of accuracy.
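The offspring selection step described in this abstract can be illustrated with a minimal sketch. It assumes the archive has already been clustered into centroids and that each cluster already carries a score where higher is better; the abstract does not say how scores are computed, so `select_offspring`, `centroids`, and `scores` are hypothetical names used only for illustration, not the authors' implementation.

```python
import math

def select_offspring(candidates, centroids, scores):
    """Pick the candidate whose nearest history cluster has the best score.

    No fitness function is called here: the choice relies only on the
    clustered search history (centroids) and the per-cluster scores.
    Assumption: a higher score means a more promising cluster.
    """
    def cluster_score(x):
        # Index of the archive cluster closest to candidate x.
        nearest = min(range(len(centroids)),
                      key=lambda i: math.dist(x, centroids[i]))
        return scores[nearest]

    return max(candidates, key=cluster_score)

# Hypothetical archive summary: two clusters with assumed scores.
centroids = [[0.0, 0.0], [5.0, 5.0]]
scores = [0.9, 0.2]
candidates = [[4.0, 4.0], [1.0, 0.0], [6.0, 5.0]]
best = select_offspring(candidates, centroids, scores)
```

Because the selection reads only cached history, it adds no fitness evaluations, which is the property the abstract highlights for budget-limited tasks.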

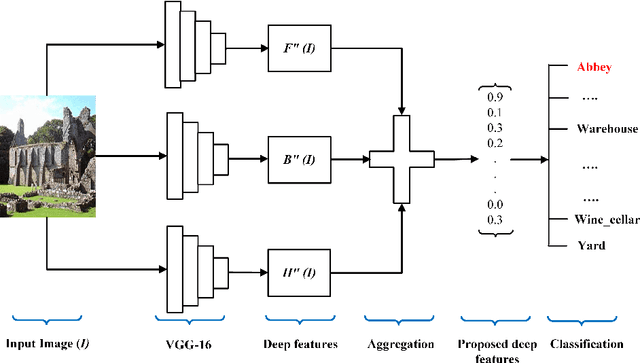

HDF: Hybrid Deep Features for Scene Image Representation

Mar 22, 2020

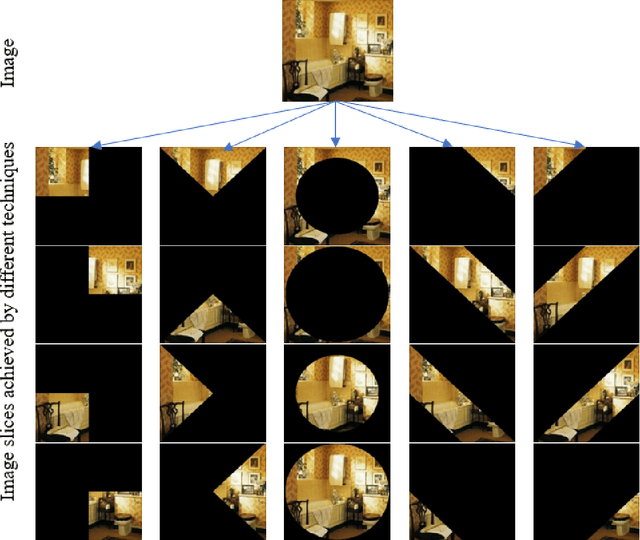

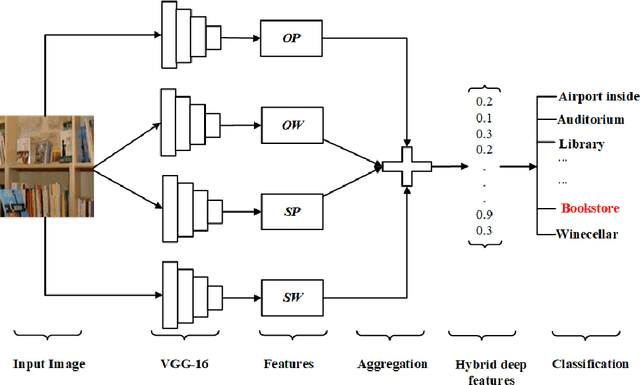

Abstract: It is now common to use features extracted from pre-trained deep learning models as image representations, which have achieved promising classification performance. Existing methods usually consider either object-based features or scene-based features only. However, both types of features are important for complex images such as scene images, as they can complement each other. In this paper, we propose a novel type of features, hybrid deep features, for scene images. Specifically, we exploit both object-based and scene-based features at two levels: the part image level (i.e., parts of an image) and the whole image level (i.e., a whole image), which produces a total of four types of deep features. At the part image level, we also propose two new slicing techniques to extract part-based features. Finally, we aggregate these four types of deep features via the concatenation operator. We demonstrate the effectiveness of our hybrid deep features on three commonly used scene datasets (MIT-67, Scene-15, and Event-8) for the scene image classification task. Extensive comparisons show that our features produce state-of-the-art classification accuracies that are more consistent and stable than the results of existing features across all datasets.
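The final aggregation step, concatenating four feature vectors, can be sketched as follows. The L2 normalization of each vector before concatenation is an assumption added here so that no single feature type dominates; the abstract only states that concatenation is used. The function names and the toy two-dimensional vectors are hypothetical.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length; leave an all-zero vector unchanged."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)

def hybrid_feature(object_whole, scene_whole, object_part, scene_part):
    """Concatenate the four feature types into one representation.

    Assumption: each vector is L2-normalized first (not stated in the abstract).
    """
    parts = (object_whole, scene_whole, object_part, scene_part)
    return [x for p in parts for x in l2_normalize(p)]

# Toy vectors standing in for real deep features.
feat = hybrid_feature([3.0, 4.0], [0.0, 0.0], [1.0, 0.0], [0.0, 2.0])
```

The resulting vector has the summed dimensionality of its four inputs and can be passed to any standard classifier.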

G2MF-WA: Geometric Multi-Model Fitting with Weakly Annotated Data

Jan 20, 2020

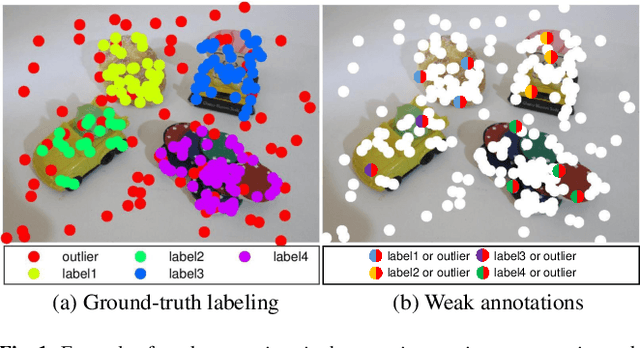

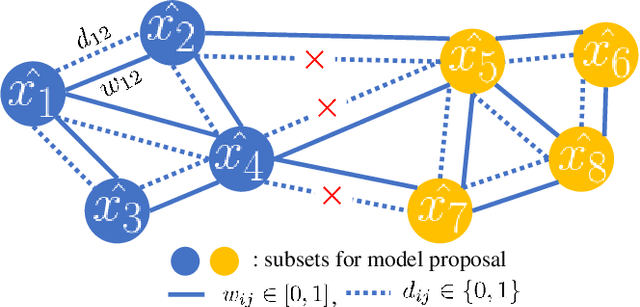

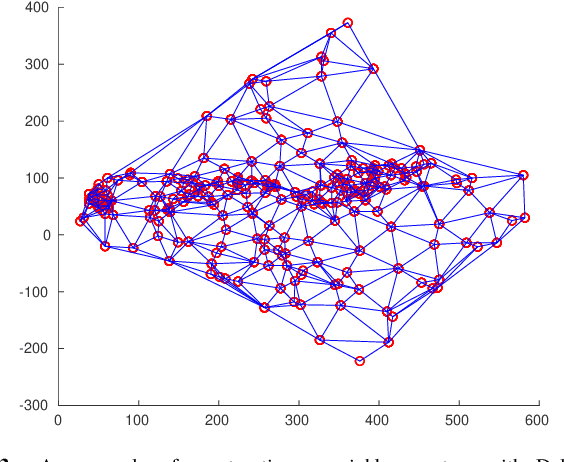

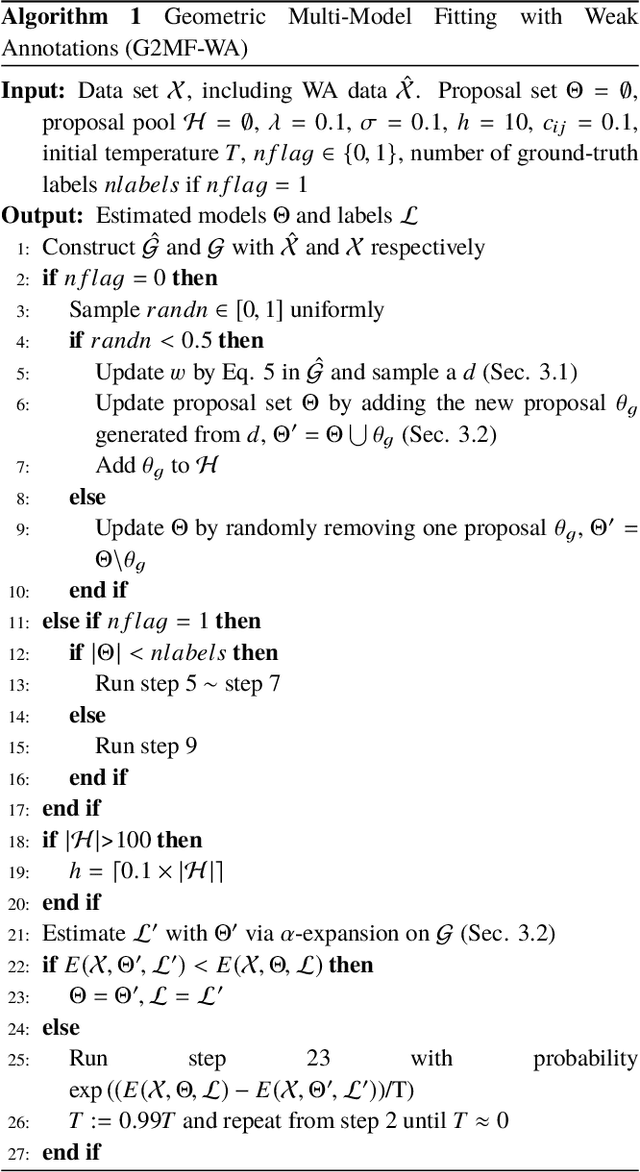

Abstract: In this paper we address the problem of geometric multi-model fitting by resorting to a few weakly annotated (WA) data points, a setting that has been sparsely studied so far. In weak annotation, most of the manual annotations are supposed to be correct, yet they are inevitably mixed with incorrect ones. WA data can be naturally obtained in an interactive way for specific tasks. For example, in homography estimation, one can easily annotate points on the same plane or object with a single label by observing the image. Motivated by this, we propose a novel method that makes full use of the WA data to boost multi-model fitting performance. Specifically, a graph for model proposal sampling is first constructed using the WA data, given the prior that WA data annotated with the same weak label have a high probability of being assigned to the same model. By incorporating this prior knowledge into the calculation of edge probabilities, vertices (i.e., data points) that lie on or near the latent model are likely to connect together and form a subset (cluster) for effective proposal generation. With the proposals generated, $\alpha$-expansion is adopted for labeling, and our method in turn updates the proposals. This process works iteratively. Extensive experiments validate our method and show that it produces noticeably better results than state-of-the-art techniques in most cases.

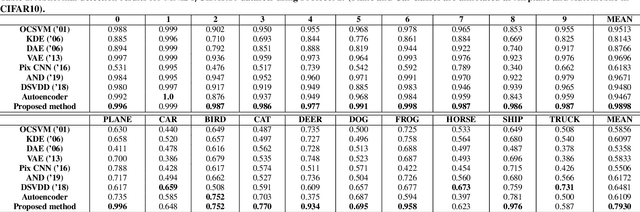

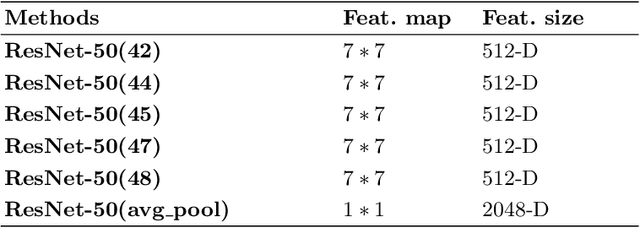

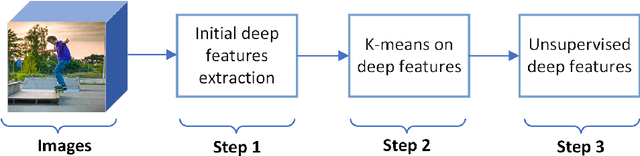

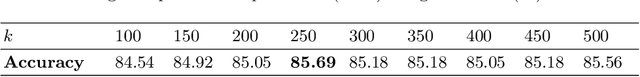

Unsupervised Deep Features for Privacy Image Classification

Sep 24, 2019

Abstract: Sharing images online poses security threats to a wide range of users, owing to a lack of awareness of the private information involved. Deep features have been demonstrated to be a powerful representation for images. However, deep features usually suffer from a large size and require a huge amount of data for fine-tuning. In contrast to normal images (e.g., scene images), privacy images are often limited in number because of their sensitive information. In this paper, we propose a novel approach that can work on limited data and generate deep features of smaller size. For training images, we first extract the initial deep features from the pre-trained model and then employ the K-means clustering algorithm to learn the centroids of these initial deep features. We use the centroids learned from the training features to extract the final features for each testing image, and encode our final features with triangle encoding. To improve the discriminability of the features, we further fuse two proposed unsupervised deep features obtained from different layers. Experimental results show that the proposed features outperform state-of-the-art deep features in terms of both classification accuracy and testing time.
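The centroid learning and triangle encoding steps can be sketched in plain Python. This is a minimal illustration, not the authors' code: `kmeans` is a basic Lloyd-style loop with a deterministic initialization (the first `k` points), chosen here only for reproducibility, and `triangle_encode` uses the common definition of triangle encoding, in which each output is `max(0, mean_distance - distance_to_centroid_k)`. The toy two-dimensional points stand in for real deep features.

```python
import math

def kmeans(points, k, iters=20):
    """Basic Lloyd-style K-means; initial centroids are the first k points (an assumption)."""
    centroids = [list(p) for p in points[:k]]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            # Assign each point to its nearest centroid.
            j = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[j].append(p)
        for j, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster is empty
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return centroids

def triangle_encode(feature, centroids):
    """Encode a feature as max(0, mean distance - distance to each centroid)."""
    dists = [math.dist(feature, c) for c in centroids]
    mean_dist = sum(dists) / len(dists)
    return [max(0.0, mean_dist - d) for d in dists]

# Toy "training features" and one "testing feature".
train = [[0.0, 0.0], [10.0, 10.0], [0.0, 1.0], [10.0, 11.0]]
centroids = kmeans(train, k=2)
code = triangle_encode([0.0, 0.5], centroids)
```

The encoded vector has one entry per centroid, so its size is set by `k` rather than by the dimensionality of the original deep feature, which is consistent with the abstract's goal of smaller features.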

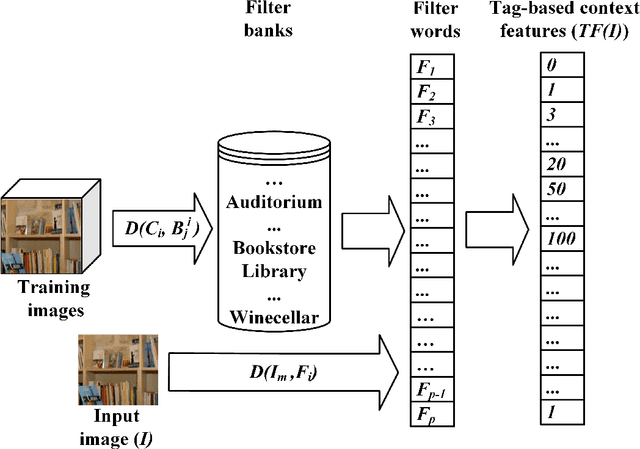

Tag-based Semantic Features for Scene Image Classification

Sep 22, 2019

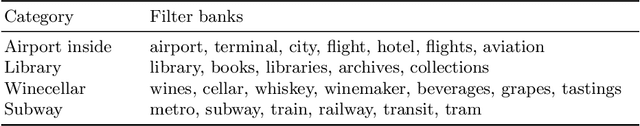

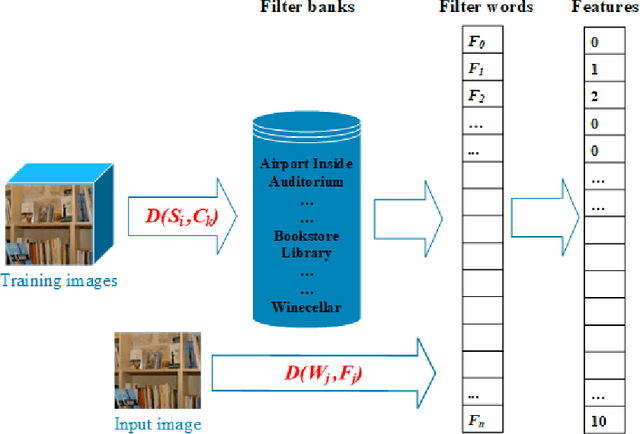

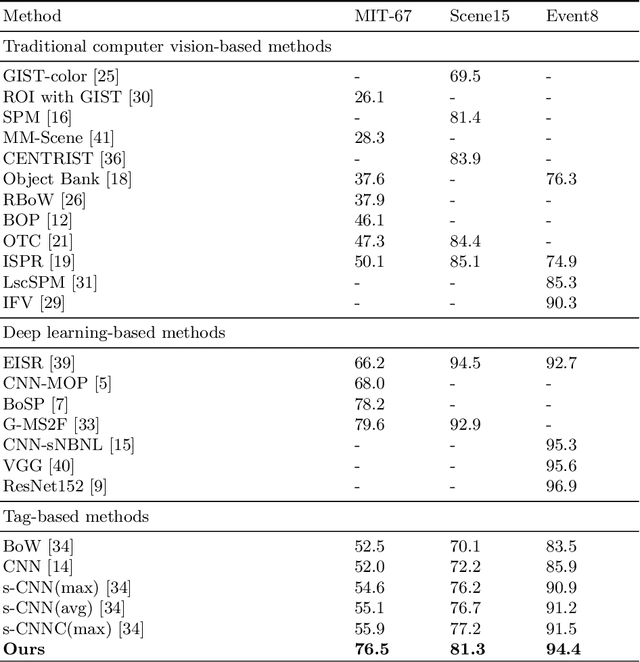

Abstract: Existing image feature extraction methods are primarily based on the content and structure information of images, and rarely consider contextual semantic information. For some types of images, such as scenes and objects, the annotations and descriptions available on the web may provide reliable contextual semantic information for feature extraction. In this paper, we introduce novel semantic features of an image based on the annotations and descriptions of its similar images available on the web. Specifically, we propose a new method consisting of two consecutive steps to extract our semantic features. For each image in the training set, we first search for the top $k$ most similar images on the internet and extract their annotations/descriptions (e.g., tags or keywords). The annotation information is then used to design a filter bank for each image category and generate filter words (a codebook). Finally, each image is represented by the histogram of the occurrences of filter words in all categories. We evaluate the performance of the proposed features in scene image classification on three commonly used scene image datasets (i.e., MIT-67, Scene15 and Event8). Our method typically produces a lower feature dimension than existing feature extraction methods. Experimental results show that the proposed features yield better classification accuracies than vision-based and tag-based features, and results comparable to deep learning-based features.
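The codebook and histogram representation can be illustrated with a small sketch. The abstract does not specify how the filter bank selects words, so picking the `top_n` most frequent tags per category is an assumption made purely for illustration; `build_filter_words`, `tag_histogram`, and the sample tags are hypothetical.

```python
from collections import Counter

def build_filter_words(tags_by_category, top_n=2):
    """Keep the top_n most frequent tags of each category as its filter words.

    Assumption: frequency-based selection stands in for the paper's filter bank.
    """
    return {cat: [word for word, _ in Counter(tags).most_common(top_n)]
            for cat, tags in tags_by_category.items()}

def tag_histogram(image_tags, filter_words):
    """Count occurrences of every filter word, categories in sorted order."""
    counts = Counter(image_tags)
    return [counts[word]
            for cat in sorted(filter_words)
            for word in filter_words[cat]]

# Hypothetical tags gathered from similar web images, grouped by category.
tags_by_category = {
    "beach": ["sand", "sea", "sand", "sun", "sea", "sand"],
    "office": ["desk", "chair", "desk", "desk", "chair", "lamp"],
}
codebook = build_filter_words(tags_by_category)
hist = tag_histogram(["sea", "sand", "sea", "desk"], codebook)
```

The feature dimension equals the total number of filter words across categories, which is why such a representation can be much smaller than typical deep features.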

Explicit Facial Expression Transfer via Fine-Grained Semantic Representations

Sep 06, 2019

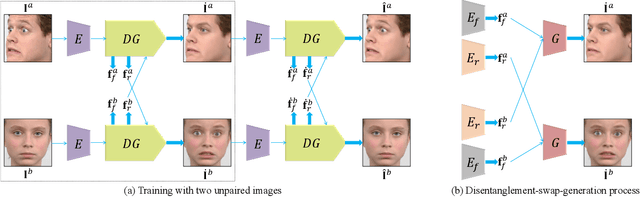

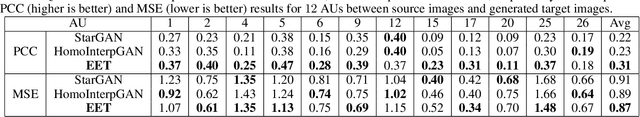

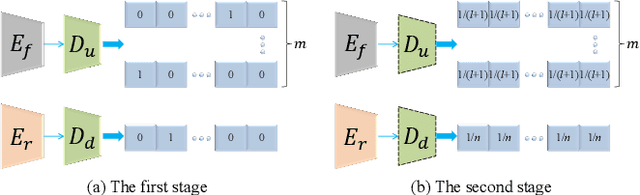

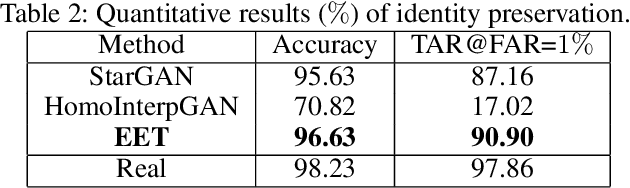

Abstract: Facial expression transfer between two unpaired images is a challenging problem, as fine-grained expressions are typically entangled with other facial attributes such as identity and pose. Most existing methods treat expression transfer as an application of expression manipulation, and use predicted facial expressions, landmarks or action units (AUs) of a source image to guide the expression editing of a target image. However, the prediction of expressions, landmarks and especially AUs may be inaccurate, which limits the accuracy of transferring fine-grained expressions. Instead of using intermediate estimated guidance, we propose to explicitly transfer expressions by directly mapping two unpaired images to two synthesized images with swapped expressions. Since each AU semantically describes local expression details, we can synthesize new images with preserved identities and swapped expressions by combining AU-free features with swapped AU-related features. To disentangle the images into AU-related features and AU-free features, we propose a novel adversarial training method which can solve the adversarial learning of multi-class classification problems. Moreover, to obtain reliable expression transfer results for unpaired input, we introduce a swap consistency loss to make the synthesized images and self-reconstructed images indistinguishable. Extensive experiments on the RaFD, MMI and CFD datasets show that our approach can generate photo-realistic expression transfer results between unpaired images with different expression appearances, including different genders, ages, races and poses.
