Xiaobin Zhu

Stationarity Exploration for Multivariate Time Series Forecasting

Aug 12, 2025

Abstract:Deep learning-based time series forecasting has found widespread applications. Recently, converting time series data into the frequency domain for forecasting has become popular for accurately exploring periodic patterns. However, existing methods often cannot effectively explore stationary information from complex intertwined frequency components. In this paper, we propose a simple yet effective Amplitude-Phase Reconstruct Network (APRNet) that models the inter-relationships of amplitude and phase, which prevents the amplitude and phase from being constrained by different physical quantities, thereby decoupling the distinct characteristics of signals for capturing stationary information. Specifically, we represent the multivariate time series input across sequence and channel dimensions, highlighting the correlation between amplitude and phase at multiple interaction frequencies. We propose a novel Kolmogorov-Arnold-Network-based Local Correlation (KLC) module to adaptively fit local functions using univariate functions, enabling more flexible characterization of stationary features across different amplitudes and phases. This significantly enhances the model's capability to capture time-varying patterns. Extensive experiments demonstrate the superiority of our APRNet over state-of-the-art (SOTA) methods.
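The amplitude and phase that the abstract refers to are the polar components of a series' frequency-domain representation. The sketch below is not APRNet; it only illustrates, on a toy sinusoid, how a series can be split into amplitude and phase with a naive discrete Fourier transform and then rebuilt from the two. The function names and the toy series are assumptions made for illustration.

```python
import cmath
import math


def dft(signal):
    """Naive O(n^2) discrete Fourier transform of a real-valued sequence."""
    n = len(signal)
    return [
        sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal))
        for k in range(n)
    ]


def idft(spectrum):
    """Inverse of dft(); returns complex samples."""
    n = len(spectrum)
    return [
        sum(c * cmath.exp(2j * math.pi * k * t / n) for k, c in enumerate(spectrum)) / n
        for t in range(n)
    ]


def split_amplitude_phase(spectrum):
    """Polar decomposition: magnitude and angle of every frequency bin."""
    amplitude = [abs(c) for c in spectrum]
    phase = [cmath.phase(c) for c in spectrum]
    return amplitude, phase


def recombine(amplitude, phase):
    """Rebuild the complex spectrum from its amplitude and phase."""
    return [cmath.rect(a, p) for a, p in zip(amplitude, phase)]


# Toy series (assumed for illustration): one sinusoid with period 8, 16 samples.
series = [math.sin(2 * math.pi * t / 8) for t in range(16)]
amplitude, phase = split_amplitude_phase(dft(series))
reconstructed = [c.real for c in idft(recombine(amplitude, phase))]
```

In this toy case the amplitude peaks at bin 2 (16 samples divided by a period of 8), and recombining amplitude and phase recovers the original series up to floating-point error.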

Semantic-Enhanced Time-Series Forecasting via Large Language Models

Aug 11, 2025

Abstract:Time series forecasting plays a significant role in finance, energy, meteorology, and IoT applications. Recent studies have leveraged the generalization capabilities of large language models (LLMs) to adapt to time series forecasting, achieving promising performance. However, existing studies focus on token-level modal alignment, instead of bridging the intrinsic modality gap between linguistic knowledge structures and time series data patterns, greatly limiting the semantic representation. To address this issue, we propose a novel Semantic-Enhanced LLM (SE-LLM) that explores the inherent periodicity and anomalous characteristics of time series and embeds them into the semantic space to enhance the token embedding. This process enhances the interpretability of tokens for LLMs, thereby activating the potential of LLMs for temporal sequence analysis. Moreover, existing Transformer-based LLMs excel at capturing long-range dependencies but are weak at modeling short-term anomalies in time-series data. Hence, we propose a plugin module embedded within self-attention that models long-term and short-term dependencies to effectively adapt LLMs to time-series analysis. Our approach freezes the LLM and reduces the sequence dimensionality of tokens, greatly reducing computational consumption. Experiments demonstrate the superior performance of our SE-LLM against the state-of-the-art (SOTA) methods.

Unsupervised Real-World Super-Resolution via Rectified Flow Degradation Modelling

Aug 10, 2025

Abstract:Unsupervised real-world super-resolution (SR) faces critical challenges due to the complex, unknown degradation distributions in practical scenarios. Existing methods struggle to generalize from synthetic low-resolution (LR) and high-resolution (HR) image pairs to real-world data due to a significant domain gap. In this paper, we propose an unsupervised real-world SR method based on rectified flow to effectively capture and model real-world degradation, synthesizing LR-HR training pairs with realistic degradation. Specifically, given unpaired LR and HR images, we propose a novel Rectified Flow Degradation Module (RFDM) that introduces degradation-transformed LR (DT-LR) images as intermediaries. By modeling the degradation trajectory in a continuous and invertible manner, RFDM better captures real-world degradation and enhances the realism of generated LR images. Additionally, we propose a Fourier Prior Guided Degradation Module (FGDM) that leverages structural information embedded in Fourier phase components to ensure more precise modeling of real-world degradation. Finally, the LR images are processed by both FGDM and RFDM, producing final synthetic LR images with real-world degradation. The synthetic LR images are paired with the given HR images to train the off-the-shelf SR networks. Extensive experiments on real-world datasets demonstrate that our method significantly enhances the performance of existing SR approaches in real-world scenarios.
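For readers unfamiliar with rectified flow, the generic recipe is to connect two samples with a straight line, regress a velocity field onto the constant displacement between them, and then integrate that field as an ODE. The sketch below shows only this generic recipe on toy vectors; it is not the RFDM described in the abstract, the "patches" are made-up numbers, and the `oracle` function is a hypothetical stand-in for a trained velocity network.

```python
def interpolate(x0, x1, t):
    """Point on the straight path from x0 (t = 0) to x1 (t = 1)."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]


def velocity_target(x0, x1):
    """Regression target for the velocity field: the constant displacement."""
    return [b - a for a, b in zip(x0, x1)]


def euler_integrate(x0, velocity_fn, steps=10):
    """Integrate dx/dt = velocity_fn(x, t) from t = 0 to t = 1 with Euler steps."""
    x = list(x0)
    dt = 1.0 / steps
    for i in range(steps):
        v = velocity_fn(x, i * dt)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


# Toy flattened "patches" (assumed values, not real image data).
hr_patch = [0.9, 0.1, 0.5, 0.7]
lr_patch = [0.6, 0.3, 0.5, 0.4]

target = velocity_target(hr_patch, lr_patch)


def oracle(x, t):
    """Hypothetical stand-in for a trained network that predicts the target exactly."""
    return target


midpoint = interpolate(hr_patch, lr_patch, 0.5)
degraded = euler_integrate(hr_patch, oracle, steps=10)
```

Because the path is a straight line, a perfect velocity predictor carries the starting sample to the end sample regardless of the number of Euler steps, which is the property that makes the trajectory easy to follow in either direction.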

Similarity Matters: A Novel Depth-guided Network for Image Restoration and A New Dataset

Aug 10, 2025

Abstract:Image restoration has seen substantial progress in recent years. However, existing methods often neglect depth information, which hurts similarity matching, causes attention distraction in shallow depth-of-field (DoF) scenarios, and leads to excessive enhancement of background content in deep DoF settings. To overcome these limitations, we propose a novel Depth-Guided Network (DGN) for image restoration, together with a novel large-scale high-resolution dataset. Specifically, the network consists of two interactive branches: a depth estimation branch that provides structural guidance, and an image restoration branch that performs the core restoration task. In addition, the image restoration branch exploits intra-object similarity through progressive window-based self-attention and captures inter-object similarity via sparse non-local attention. Through joint training, depth features contribute to improved restoration quality, while the enhanced visual features from the restoration branch in turn help refine depth estimation. Notably, we also introduce a new dataset for training and evaluation, consisting of 9,205 high-resolution images from 403 plant species, with diverse depth and texture variations. Extensive experiments show that our method achieves state-of-the-art performance on several standard benchmarks and generalizes well to unseen plant images, demonstrating its effectiveness and robustness.

VCapsBench: A Large-scale Fine-grained Benchmark for Video Caption Quality Evaluation

May 29, 2025

Abstract:Video captions play a crucial role in text-to-video generation tasks, as their quality directly influences the semantic coherence and visual fidelity of the generated videos. Although large vision-language models (VLMs) have demonstrated significant potential in caption generation, existing benchmarks inadequately address fine-grained evaluation, particularly in capturing spatial-temporal details critical for video generation. To address this gap, we introduce the Fine-grained Video Caption Evaluation Benchmark (VCapsBench), the first large-scale fine-grained benchmark comprising 5,677 (5K+) videos and 109,796 (100K+) question-answer pairs. These QA pairs are systematically annotated across 21 fine-grained dimensions (e.g., camera movement and shot type) that are empirically proven critical for text-to-video generation. We further introduce three metrics (Accuracy (AR), Inconsistency Rate (IR), Coverage Rate (CR)), and an automated evaluation pipeline leveraging a large language model (LLM) to verify caption quality via contrastive QA-pair analysis. By providing actionable insights for caption optimization, our benchmark can advance the development of robust text-to-video models. The dataset and code are available at https://github.com/GXYM/VCapsBench.

DPFlow: Adaptive Optical Flow Estimation with a Dual-Pyramid Framework

Mar 19, 2025

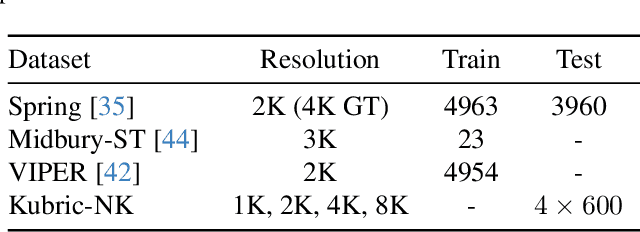

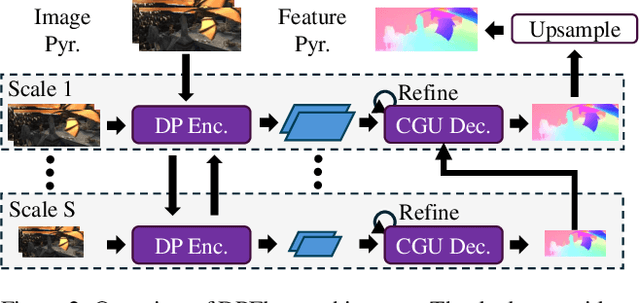

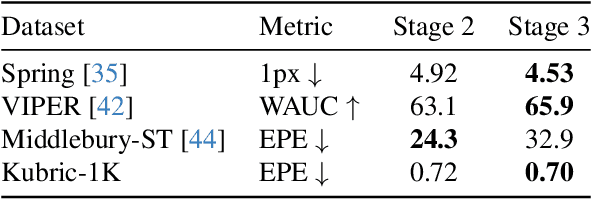

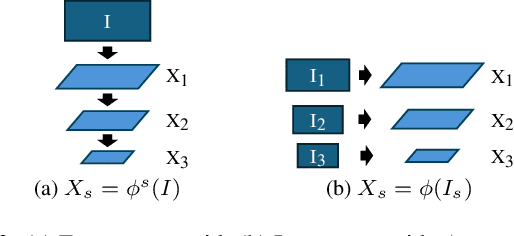

Abstract:Optical flow estimation is essential for video processing tasks, such as restoration and action recognition. The quality of videos is constantly increasing, with current standards reaching 8K resolution. However, optical flow methods are usually designed for low resolution and do not generalize to large inputs due to their rigid architectures. They adopt downscaling or input tiling to reduce the input size, causing a loss of details and global information. There is also a lack of optical flow benchmarks to judge the actual performance of existing methods on high-resolution samples. Previous works only conducted qualitative high-resolution evaluations on hand-picked samples. This paper fills this gap in optical flow estimation in two ways. We propose DPFlow, an adaptive optical flow architecture capable of generalizing up to 8K resolution inputs while trained with only low-resolution samples. We also introduce Kubric-NK, a new benchmark for evaluating optical flow methods with input resolutions ranging from 1K to 8K. Our high-resolution evaluation pushes the boundaries of existing methods and reveals new insights about their generalization capabilities. Extensive experimental results show that DPFlow achieves state-of-the-art results on the MPI-Sintel, KITTI 2015, Spring, and other high-resolution benchmarks.

Video-Language Alignment Pre-training via Spatio-Temporal Graph Transformer

Jul 16, 2024

Abstract:Video-language alignment is a crucial multi-modal task that benefits various downstream applications, e.g., video-text retrieval and video question answering. Existing methods either utilize multi-modal information in video-text pairs or apply global and local alignment techniques to promote alignment precision. However, these methods often fail to fully explore the spatio-temporal relationships among vision tokens within a video and across different video-text pairs. In this paper, we propose a novel Spatio-Temporal Graph Transformer module to uniformly learn spatial and temporal contexts for video-language alignment pre-training (dubbed STGT). Specifically, our STGT combines spatio-temporal graph structure information with attention in the transformer block, effectively utilizing the spatio-temporal contexts. In this way, we can model the relationships between vision tokens, promoting video-text alignment precision for benefiting downstream tasks. In addition, we propose a self-similarity alignment loss to explore the inherent self-similarity in the video and text. With the initial optimization achieved by contrastive learning, it can further promote the alignment accuracy between video and text. Experimental results on challenging downstream tasks, including video-text retrieval and video question answering, verify the superior performance of our method.

Arbitrary Time Information Modeling via Polynomial Approximation for Temporal Knowledge Graph Embedding

May 01, 2024

Abstract:Distinguished from traditional knowledge graphs (KGs), temporal knowledge graphs (TKGs) must explore and reason over temporally evolving facts adequately. However, existing TKG approaches still face two main challenges, i.e., the limited capability to model arbitrary timestamps continuously and the lack of rich inference patterns under temporal constraints. In this paper, we propose an innovative TKGE method (PTBox) via polynomial decomposition-based temporal representation and box embedding-based entity representation to tackle the above-mentioned problems. Specifically, we decompose time information by polynomials and then enhance the model's capability to represent arbitrary timestamps flexibly by incorporating the learnable temporal basis tensor. In addition, we model every entity as a hyperrectangle box and define each relation as a transformation on the head and tail entity boxes. The entity boxes can capture complex geometric structures and learn robust representations, improving the model's inductive capability for rich inference patterns. Theoretically, our PTBox can encode arbitrary time information or even unseen timestamps while capturing rich inference patterns and higher-arity relations of the knowledge base. Extensive experiments on real-world datasets demonstrate the effectiveness of our method.
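The two ingredients named in the abstract, a polynomial representation of time and hyperrectangle boxes for entities, can be pictured with a small toy. The sketch below is not PTBox: the coefficient table, the use of a plain translation as the transformation, and all names (`time_embedding`, `Box`, `translate`, `contains`) are assumptions chosen only to make the two ideas concrete.

```python
from dataclasses import dataclass


def time_embedding(t, coefficients):
    """Evaluate sum_k coefficients[k] * t**k per dimension.

    coefficients[k] is a vector for polynomial degree k. Because the result is
    a continuous function of t, any real-valued timestamp can be embedded,
    including ones never seen before.
    """
    dim = len(coefficients[0])
    return [sum(c[d] * t**k for k, c in enumerate(coefficients)) for d in range(dim)]


@dataclass(frozen=True)
class Box:
    """Axis-aligned hyperrectangle given by its lower and upper corners."""
    lower: tuple
    upper: tuple

    def translate(self, offset):
        """Shift the box by an offset vector (a hypothetical transformation)."""
        return Box(
            tuple(lo + o for lo, o in zip(self.lower, offset)),
            tuple(up + o for up, o in zip(self.upper, offset)),
        )

    def contains(self, other):
        """True if `other` lies entirely inside this box."""
        return all(
            sl <= ol and ou <= su
            for sl, su, ol, ou in zip(self.lower, self.upper, other.lower, other.upper)
        )


# Assumed toy parameters: a degree-2 polynomial in two dimensions.
coefficients = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.0]]
shift = time_embedding(2.0, coefficients)

head = Box((0.0, 0.0), (1.0, 1.0))
tail = Box((2.0, 1.0), (5.0, 4.0))
moved_head = head.translate(shift)
```

With these toy values the time-dependent shift moves the head box inside the tail box, so `tail.contains(moved_head)` holds while `tail.contains(head)` does not; a scoring function in a real model would be built on a soft version of this kind of geometric relation.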

Transformer-based Reasoning for Learning Evolutionary Chain of Events on Temporal Knowledge Graph

May 01, 2024

Abstract:Temporal Knowledge Graph (TKG) reasoning often involves completing missing factual elements along the timeline. Although existing methods can learn good embeddings for each factual element in quadruples by integrating temporal information, they often fail to infer the evolution of temporal facts. This is mainly because of (1) insufficiently exploring the internal structure and semantic relationships within individual quadruples and (2) inadequately learning a unified representation of the contextual and temporal correlations among different quadruples. To overcome these limitations, we propose a novel Transformer-based reasoning model (dubbed ECEformer) for TKG to learn the Evolutionary Chain of Events (ECE). Specifically, we unfold the neighborhood subgraph of an entity node in chronological order, forming an evolutionary chain of events as the input for our model. Subsequently, we utilize a Transformer encoder to learn the embeddings of intra-quadruples for ECE. We then craft a mixed-context reasoning module based on the multi-layer perceptron (MLP) to learn the unified representations of inter-quadruples for ECE while accomplishing temporal knowledge reasoning. In addition, to enhance the timeliness of the events, we devise an additional time prediction task to complete effective temporal information within the learned unified representation. Extensive experiments on six benchmark datasets verify the state-of-the-art performance and the effectiveness of our method.
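The step of unfolding an entity's neighborhood subgraph in chronological order can be sketched as a filter followed by a sort. This is a minimal illustration under assumed conventions, not the ECEformer input pipeline: facts are taken to be `(subject, relation, object, timestamp)` tuples, and the function name and toy facts are hypothetical.

```python
def evolutionary_chain(quadruples, entity):
    """Return every fact that touches `entity`, ordered by timestamp.

    Each quadruple is (subject, relation, object, timestamp). Python's sort is
    stable, so facts sharing a timestamp keep their original relative order.
    """
    neighbourhood = [q for q in quadruples if entity in (q[0], q[2])]
    return sorted(neighbourhood, key=lambda q: q[3])


# Toy facts (assumed for illustration).
facts = [
    ("A", "visits", "B", 3),
    ("C", "meets", "D", 1),
    ("B", "hosts", "A", 1),
    ("A", "calls", "C", 2),
]

chain = evolutionary_chain(facts, "A")
```

Here `chain` contains the three facts involving `A` at timestamps 1, 2, and 3, and the fact between `C` and `D` is dropped; a sequence model would then consume the chain as an ordered list of tokens.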

Inverse-like Antagonistic Scene Text Spotting via Reading-Order Estimation and Dynamic Sampling

Jan 08, 2024

Abstract:Scene text spotting is a challenging task, especially for inverse-like scene text, which has complex layouts, e.g., mirrored, symmetrical, or retro-flexed. In this paper, we propose a unified end-to-end trainable inverse-like antagonistic text spotting framework dubbed IATS, which can effectively spot inverse-like scene texts without sacrificing general ones. Specifically, we propose an innovative reading-order estimation module (REM) that extracts reading-order information from the initial text boundary generated by an initial boundary module (IBM). To optimize and train REM, we propose a joint reading-order estimation loss consisting of a classification loss, an orthogonality loss, and a distribution loss. With the help of IBM, we can divide the initial text boundary into two symmetric control points and iteratively refine the new text boundary using a lightweight boundary refinement module (BRM) for adapting to various shapes and scales. To alleviate the incompatibility between text detection and recognition, we propose a dynamic sampling module (DSM) with a thin-plate spline that can dynamically sample appropriate features for recognition in the detected text region. Without extra supervision, the DSM can proactively learn to sample appropriate features for text recognition through the gradient returned by the recognition module. Extensive experiments on both challenging scene text and inverse-like scene text datasets demonstrate that our method achieves superior performance on both irregular and inverse-like text spotting.
