Wu-Jun Li

On the Optimal Batch Size for Byzantine-Robust Distributed Learning

May 23, 2023

Abstract: Byzantine-robust distributed learning (BRDL), in which computing devices are likely to behave abnormally due to accidental failures or malicious attacks, has recently become a hot research topic. However, even in the independent and identically distributed (i.i.d.) case, existing BRDL methods suffer from a significant drop in model accuracy due to the large variance of stochastic gradients. Increasing the batch size is a simple yet effective way to reduce the variance. However, when the total number of gradient computations is fixed, an overly large batch size leads to too few iterations (updates), which may also degrade model accuracy. In view of this challenge, we study the optimal batch size when the total number of gradient computations is fixed. In particular, we theoretically and empirically show that, under this fixed budget, the optimal batch size in BRDL increases with the fraction of Byzantine workers. Therefore, compared to the case without attacks, the batch size should be set larger under Byzantine attacks. However, for existing BRDL methods, large batch sizes lead to a drop in model accuracy, even if there is no Byzantine attack. To deal with this problem, we propose a novel BRDL method, called Byzantine-robust stochastic gradient descent with normalized momentum (ByzSGDnm), which can alleviate the drop in model accuracy in large-batch cases. Moreover, we theoretically prove the convergence of ByzSGDnm for general non-convex cases under Byzantine attacks. Empirical results show that ByzSGDnm has comparable performance to existing BRDL methods under bit-flipping failure, but can outperform existing BRDL methods under deliberately crafted attacks.
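The batch-size trade-off and the idea of a normalized update can be illustrated with a small sketch. The abstract does not give the exact ByzSGDnm update or name its aggregator, so everything here is an assumption made for illustration: the budget is counted per worker, the coordinate-wise median stands in for the robust aggregator, and the function names `iterations_for_budget`, `coordinate_median` and `normalized_step` are hypothetical.

```python
import math
import statistics


def iterations_for_budget(total_gradients, batch_size):
    """Updates available when each update consumes `batch_size` gradients.

    Assumption: the gradient budget is counted per worker.
    """
    return total_gradients // batch_size


def coordinate_median(vectors):
    """Coordinate-wise median, used here as a stand-in robust aggregator."""
    return [statistics.median(coords) for coords in zip(*vectors)]


def normalized_step(weights, worker_momenta, lr):
    """Aggregate worker momenta robustly, then move a fixed distance `lr`.

    Normalizing the aggregate makes the step length independent of its
    magnitude, which is the general idea behind a normalized-momentum update.
    """
    agg = coordinate_median(worker_momenta)
    norm = math.sqrt(sum(a * a for a in agg))
    if norm == 0.0:
        return list(weights)  # no direction to move in
    return [w - lr * a / norm for w, a in zip(weights, agg)]


# A larger batch leaves fewer updates under the same budget.
small_batch_updates = iterations_for_budget(1_000_000, 32)
large_batch_updates = iterations_for_budget(1_000_000, 512)

# Two honest workers agree; the third sends an extreme vector.
new_weights = normalized_step(
    [0.0, 0.0],
    [[3.0, 4.0], [3.0, 4.0], [100.0, -100.0]],
    lr=0.5,
)
```

In this toy run the median discards the outlying vector, so the update follows the direction reported by the two agreeing workers and has length exactly `lr`.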

GNNFormer: A Graph-based Framework for Cytopathology Report Generation

Mar 17, 2023

Abstract: Cytopathology report generation is a necessary step for the standardized examination of pathology images. However, manually writing detailed reports brings heavy workloads for pathologists. To improve efficiency, some existing works have studied automatic generation of cytopathology reports, mainly by applying image caption generation frameworks with visual encoders originally proposed for natural images. A common weakness of these works is that they do not explicitly model the structural information among cells, which is a key feature of pathology images and provides significant information for making diagnoses. In this paper, we propose a novel graph-based framework called GNNFormer, which seamlessly integrates graph neural network (GNN) and Transformer into the same framework, for cytopathology report generation. To the best of our knowledge, GNNFormer is the first report generation method that explicitly models the structural information among cells in pathology images. It also effectively fuses structural information among cells, fine-grained morphology features of cells and background features to generate high-quality reports. Experimental results on the NMI-WSI dataset show that GNNFormer can outperform other state-of-the-art baselines.

FedREP: A Byzantine-Robust, Communication-Efficient and Privacy-Preserving Framework for Federated Learning

Mar 09, 2023

Abstract: Federated learning (FL) has recently become a hot research topic, in which Byzantine robustness, communication efficiency and privacy preservation are three important aspects. However, the tension among these three aspects makes it hard to simultaneously take all of them into account. In view of this challenge, we theoretically analyze the conditions that a communication compression method should satisfy to be compatible with existing Byzantine-robust methods and privacy-preserving methods. Motivated by the analysis results, we propose a novel communication compression method called consensus sparsification (ConSpar). To the best of our knowledge, ConSpar is the first communication compression method that is designed to be compatible with both Byzantine-robust methods and privacy-preserving methods. Based on ConSpar, we further propose a novel FL framework called FedREP, which is Byzantine-robust, communication-efficient and privacy-preserving. We theoretically prove the Byzantine robustness and the convergence of FedREP. Empirical results show that FedREP can significantly outperform communication-efficient privacy-preserving baselines. Furthermore, compared with Byzantine-robust communication-efficient baselines, FedREP can achieve comparable accuracy with the extra advantage of privacy preservation.

Asymmetric Learning for Graph Neural Network based Link Prediction

Mar 01, 2023

Abstract: Link prediction is a fundamental problem in many graph-based applications, such as protein-protein interaction prediction. Graph neural networks (GNNs) have recently been widely used for link prediction. However, existing GNN-based link prediction (GNN-LP) methods suffer from a scalability problem during training on large-scale graphs, which has received little attention from researchers. In this paper, we first give a computation complexity analysis of existing GNN-LP methods, which reveals that the scalability problem stems from their symmetric learning strategy of adopting the same class of GNN models to learn representations for both head and tail nodes. Then we propose a novel method, called asymmetric learning (AML), for GNN-LP. The main idea of AML is to adopt a GNN model for learning head node representations while using a multi-layer perceptron (MLP) model for learning tail node representations. Furthermore, AML proposes a row-wise sampling strategy to generate mini-batches for training, which is a necessary component to make the asymmetric learning strategy work for training speedup. To the best of our knowledge, AML is the first GNN-LP method adopting an asymmetric learning strategy for node representation learning. Experiments on three real large-scale datasets show that AML is 1.7x to 7.3x faster in training than baselines with a symmetric learning strategy, while having almost no accuracy loss.

Optimizing Class Distribution in Memory for Multi-Label Online Continual Learning

Sep 23, 2022

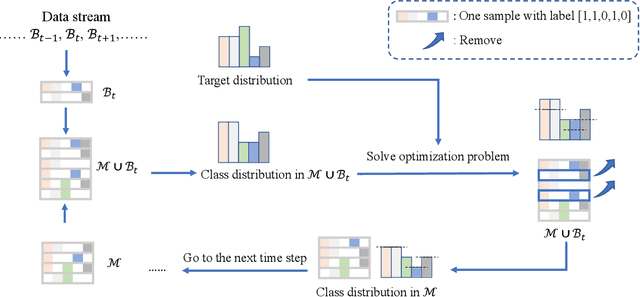

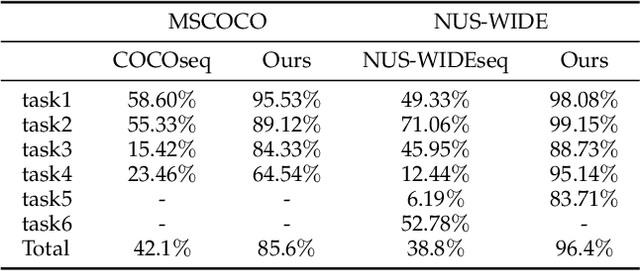

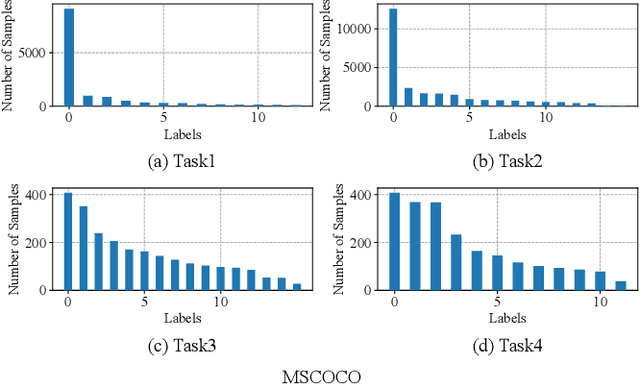

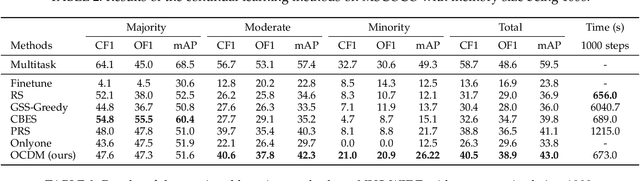

Abstract: Online continual learning, especially when task identities and task boundaries are unavailable, is a challenging continual learning setting. One representative family of methods for online continual learning is replay-based methods, in which a replay buffer called memory is maintained to keep a small part of past samples for overcoming catastrophic forgetting. When tackling online continual learning, most existing replay-based methods focus on single-label problems, in which each sample in the data stream has only one label. But multi-label problems, in which each sample may have more than one label, may also occur in the online continual learning setting. In the online setting with multi-label samples, the class distribution in the data stream is typically highly imbalanced, and it is challenging to control the class distribution in memory, since changing the number of samples belonging to one class may affect the number of samples belonging to other classes. But the class distribution in memory is critical for replay-based memory to get good performance, especially when the class distribution in the data stream is highly imbalanced. In this paper, we propose a simple but effective method, called optimizing class distribution in memory (OCDM), for multi-label online continual learning. OCDM formulates the memory update mechanism as an optimization problem and updates the memory by solving this problem. Experiments on two widely used multi-label datasets show that OCDM can control the class distribution in memory well and can outperform other state-of-the-art methods.

State-based Episodic Memory for Multi-Agent Reinforcement Learning

Oct 19, 2021

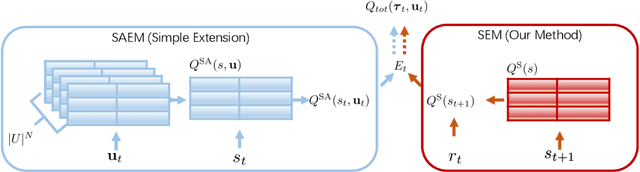

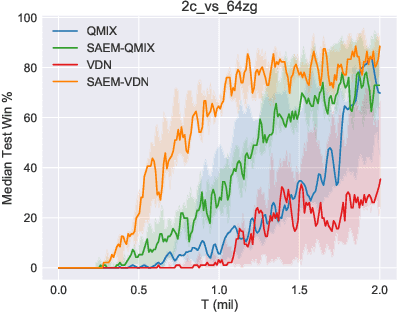

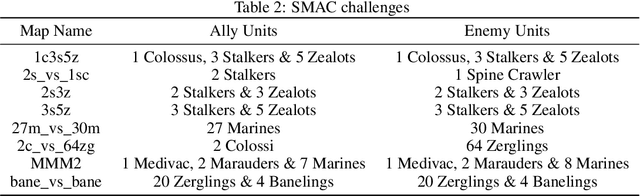

Abstract: Multi-agent reinforcement learning (MARL) algorithms have made promising progress in recent years by leveraging the centralized training and decentralized execution (CTDE) paradigm. However, existing MARL algorithms still suffer from the sample inefficiency problem. In this paper, we propose a simple yet effective approach, called state-based episodic memory (SEM), to improve sample efficiency in MARL. SEM adopts episodic memory (EM) to supervise the centralized training procedure of CTDE in MARL. To the best of our knowledge, SEM is the first work to introduce EM into MARL. We theoretically prove that, when used for MARL, SEM has lower space complexity and time complexity than state and action based EM (SAEM), which was originally proposed for single-agent reinforcement learning. Experimental results on the StarCraft multi-agent challenge (SMAC) show that introducing episodic memory into MARL can improve sample efficiency and that SEM can reduce storage cost and time cost compared with SAEM.

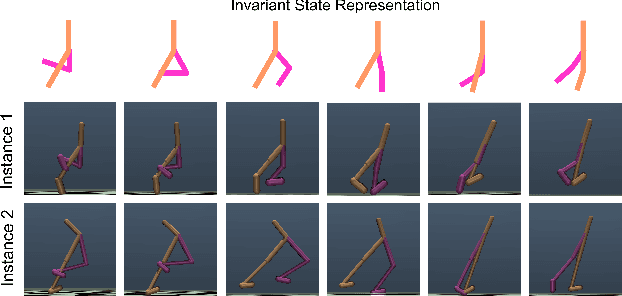

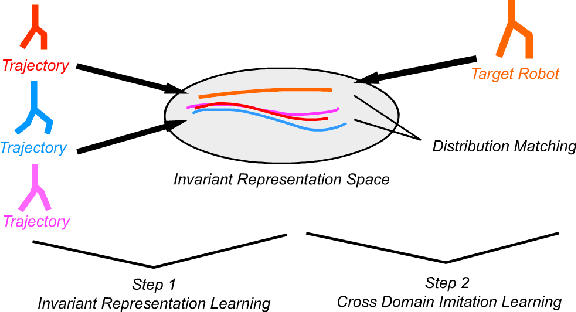

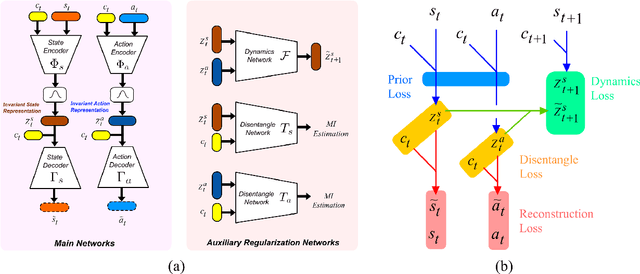

Cross Domain Robot Imitation with Invariant Representation

Sep 13, 2021

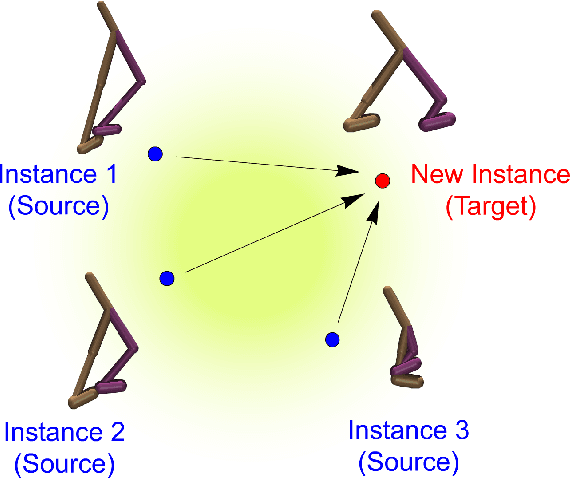

Abstract: Animals are able to imitate each other's behavior, despite their differences in biomechanics. In contrast, imitating other similar robots is a much more challenging task in robotics. This problem is called cross domain imitation learning (CDIL). In this paper, we consider CDIL on a class of similar robots. We tackle this problem by introducing an imitation learning algorithm based on invariant representation. We propose to learn invariant state and action representations, which align the behavior of multiple robots so that CDIL becomes possible. Compared with previous invariant representation learning methods for similar purposes, our method does not require human-labeled pairwise data for training. Instead, we use cycle-consistency and domain confusion to align the representation and increase its robustness. We test the algorithm on multiple robots in a simulator and show that unseen new robot instances can be trained with existing expert demonstrations successfully. Qualitative results also demonstrate that the proposed method is able to learn similar representations for different robots with similar behaviors, which is essential for successful CDIL.

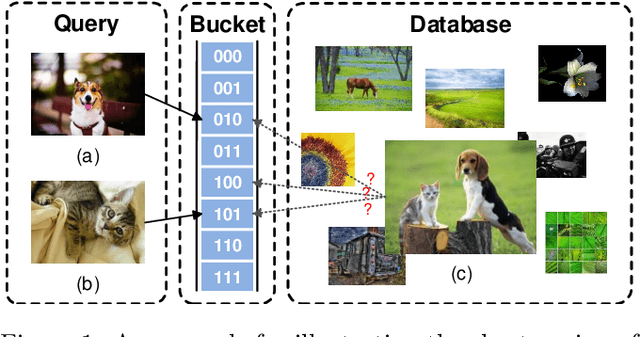

Multiple Code Hashing for Efficient Image Retrieval

Aug 04, 2020

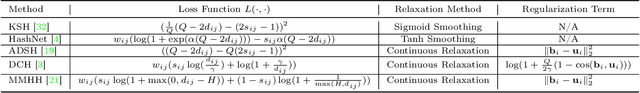

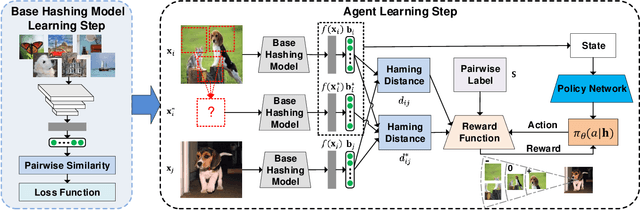

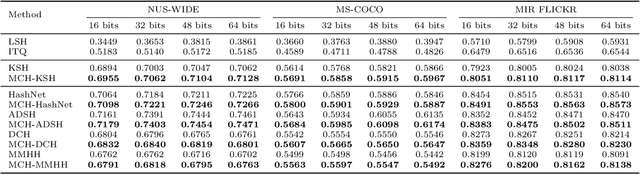

Abstract: Due to its low storage cost and fast query speed, hashing has been widely used in large-scale image retrieval tasks. Hash bucket search returns data points within a given Hamming radius of each query, which can enable search at a constant or sub-linear time cost. However, existing hashing methods cannot achieve satisfactory retrieval performance for hash bucket search in complex scenarios, since they learn only one hash code for each image. More specifically, by using one hash code to represent one image, existing methods might fail to put similar image pairs into buckets with a small Hamming distance to the query when the semantic information of images is complex. As a result, a large number of hash buckets need to be visited to retrieve similar images based on the learned codes. This deteriorates the efficiency of hash bucket search. In this paper, we propose a novel hashing framework, called multiple code hashing (MCH), to improve the performance of hash bucket search. The main idea of MCH is to learn multiple hash codes for each image, with each code representing a different region of the image. Furthermore, we propose a deep reinforcement learning algorithm to learn the parameters in MCH. To the best of our knowledge, this is the first work that proposes to learn multiple hash codes for each image in image retrieval. Experiments demonstrate that MCH can achieve a significant improvement in hash bucket search, compared with existing methods that learn only one hash code for each image.
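Hash bucket search within a Hamming radius, and the effect of storing more than one code per image, can be sketched as follows. This is only an illustration of the lookup side: how MCH learns its codes is not shown, the codes below are made up, and the function names `build_index` and `bucket_search` are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def build_index(codes_per_image):
    """Map each hash code (an int) to the set of images stored in that bucket.

    An image with several codes is simply registered in several buckets.
    """
    index = defaultdict(set)
    for image_id, codes in codes_per_image.items():
        for code in codes:
            index[code].add(image_id)
    return index


def bucket_search(index, query_code, num_bits, radius):
    """Return images in every bucket within `radius` bit flips of the query."""
    hits = set()
    for k in range(radius + 1):
        # Flip every possible choice of k bit positions in the query code.
        for positions in combinations(range(num_bits), k):
            probe = query_code
            for p in positions:
                probe ^= 1 << p
            hits |= index.get(probe, set())
    return hits


# Toy 4-bit codes; image "b" has two codes, one of them close to the query.
index = build_index({
    "a": [0b0000],
    "b": [0b0011, 0b1000],
    "c": [0b1111],
})
exact_hits = bucket_search(index, 0b0000, num_bits=4, radius=0)
near_hits = bucket_search(index, 0b0000, num_bits=4, radius=1)
```

The number of probed buckets grows combinatorially with the radius, so an image that owns a second code near the query (like "b" here) can be found without widening the search.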

ExchNet: A Unified Hashing Network for Large-Scale Fine-Grained Image Retrieval

Aug 04, 2020

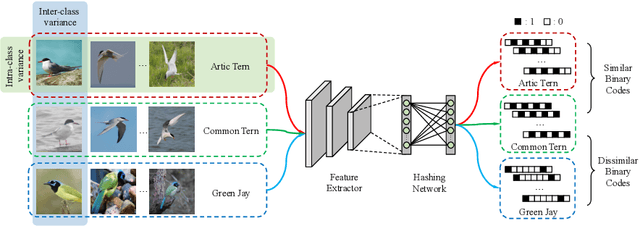

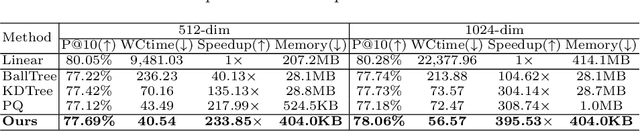

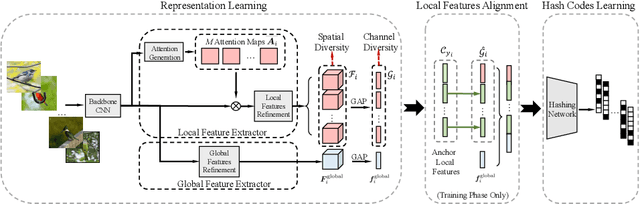

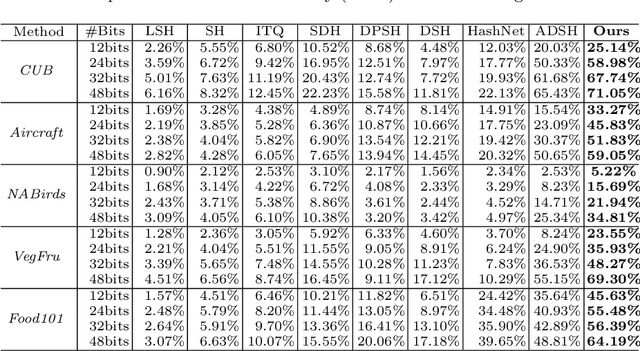

Abstract: Retrieving content-relevant images from a large-scale fine-grained dataset can suffer from intolerably slow query speed and highly redundant storage cost, due to the high-dimensional real-valued embeddings that aim to distinguish subtle visual differences between fine-grained objects. In this paper, we study the novel topic of fine-grained hashing, which generates compact binary codes for fine-grained images and leverages the search and storage efficiency of hash learning to alleviate the aforementioned problems. Specifically, we propose a unified end-to-end trainable network, termed ExchNet. Based on attention mechanisms and the proposed attention constraints, it first obtains both local and global features to represent object parts and whole fine-grained objects, respectively. Furthermore, to ensure the discriminative ability and semantic consistency of these part-level features across images, we design a local feature alignment approach that performs a feature exchanging operation. Then, an alternating learning algorithm is employed to optimize the whole ExchNet and generate the final binary hash codes. Validated by extensive experiments, our proposal consistently outperforms state-of-the-art generic hashing methods on five fine-grained datasets, which shows its effectiveness. Moreover, compared with other approximate nearest neighbor methods, ExchNet achieves the best speed-up and storage reduction, revealing its efficiency and practicality.

Stochastic Normalized Gradient Descent with Momentum for Large Batch Training

Jul 28, 2020

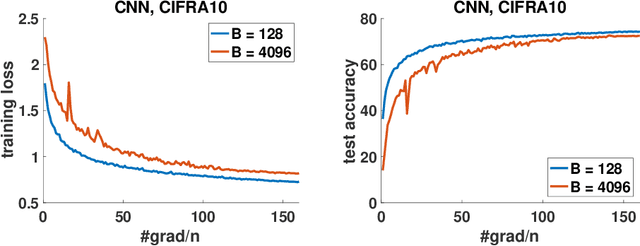

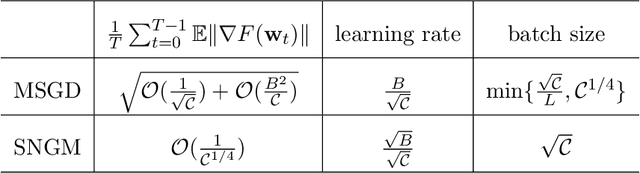

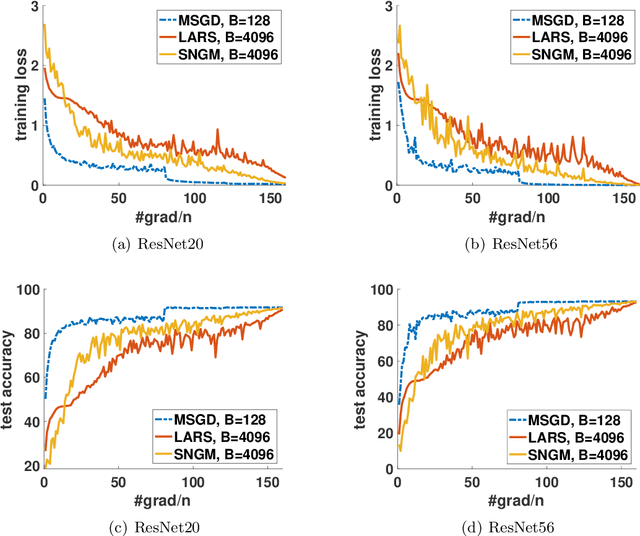

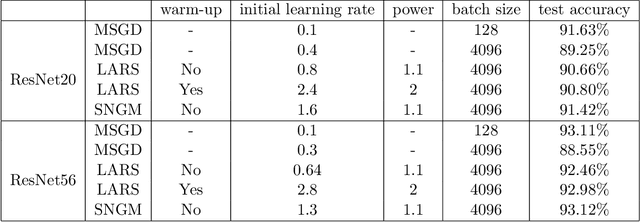

Abstract: Stochastic gradient descent (SGD) and its variants have been the dominant optimization methods in machine learning. Compared with small batch training, SGD with large batch training can better utilize the computational power of current multi-core systems like GPUs and can reduce the number of communication rounds in distributed training. Hence, SGD with large batch training has attracted more and more attention. However, existing empirical results show that large batch training typically leads to a drop in generalization accuracy. As a result, large batch training has also become a challenging topic. In this paper, we propose a novel method, called stochastic normalized gradient descent with momentum (SNGM), for large batch training. We theoretically prove that, compared to momentum SGD (MSGD), which is one of the most widely used variants of SGD, SNGM can adopt a larger batch size to converge to the $\epsilon$-stationary point with the same computation complexity (total number of gradient computations). Empirical results on deep learning also show that SNGM can achieve state-of-the-art accuracy with a large batch size.
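A normalized-gradient update with momentum can be sketched in a few lines. The abstract does not state the update rule, so the form used here (normalize the stochastic gradient, fold it into a momentum buffer, then step along the buffer) is an assumption about the general technique rather than a statement of the exact SNGM algorithm; `sngm_style_step` and its parameters are hypothetical names.

```python
import math


def sngm_style_step(weights, grad, momentum, lr, beta):
    """One update of normalized gradient descent with momentum (assumed form).

    u_new = beta * u + g / ||g||
    w_new = w - lr * u_new
    """
    norm = math.sqrt(sum(g * g for g in grad))
    # Guard against a zero gradient, where no direction is defined.
    unit = [g / norm for g in grad] if norm > 0.0 else [0.0] * len(grad)
    new_momentum = [beta * u + d for u, d in zip(momentum, unit)]
    new_weights = [w - lr * u for w, u in zip(weights, new_momentum)]
    return new_weights, new_momentum


# The step is unchanged when the gradient is rescaled, since only its
# direction enters the update.
w1, u1 = sngm_style_step([1.0, 1.0], [3.0, 4.0], [0.0, 0.0], lr=0.1, beta=0.9)
w2, u2 = sngm_style_step([1.0, 1.0], [3000.0, 4000.0], [0.0, 0.0], lr=0.1, beta=0.9)
```

Because only the gradient direction is used, the step size is governed by `lr` and `beta` rather than by the gradient magnitude, which is one common motivation for normalization when tuning learning rates at large batch sizes.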
