Victor S. Bursztyn

Northwestern University

Disambiguation in Conversational Question Answering in the Era of LLM: A Survey

May 18, 2025

Abstract: Ambiguity remains a fundamental challenge in Natural Language Processing (NLP) due to the inherent complexity and flexibility of human language. With the advent of Large Language Models (LLMs), addressing ambiguity has become even more critical due to their expanded capabilities and applications. In the context of Conversational Question Answering (CQA), this paper explores the definition, forms, and implications of ambiguity for language-driven systems, particularly in the context of LLMs. We define key terms and concepts, categorize various disambiguation approaches enabled by LLMs, and provide a comparative analysis of their advantages and disadvantages. We also explore publicly available datasets for benchmarking ambiguity detection and resolution techniques and highlight their relevance for ongoing research. Finally, we identify open problems and future research directions, proposing areas for further investigation. By offering a comprehensive review of current research on ambiguities and disambiguation with LLMs, we aim to contribute to the development of more robust and reliable language systems.

Learning to Perform Complex Tasks through Compositional Fine-Tuning of Language Models

Oct 23, 2022

Abstract: How to usefully encode compositional task structure has long been a core challenge in AI. Recent work in chain of thought prompting has shown that for very large neural language models (LMs), explicitly demonstrating the inferential steps involved in a target task may improve performance over end-to-end learning that focuses on the target task alone. However, chain of thought prompting has significant limitations due to its dependency on huge pretrained LMs. In this work, we present compositional fine-tuning (CFT): an approach based on explicitly decomposing a target task into component tasks, and then fine-tuning smaller LMs on a curriculum of such component tasks. We apply CFT to recommendation tasks in two domains, world travel and local dining, as well as a previously studied inferential task (sports understanding). We show that CFT outperforms end-to-end learning even with equal amounts of data, and gets consistently better as more component tasks are modeled via fine-tuning. Compared with chain of thought prompting, CFT performs at least as well using LMs only 7.4% of the size, and is moreover applicable to task domains for which data are not available during pretraining.

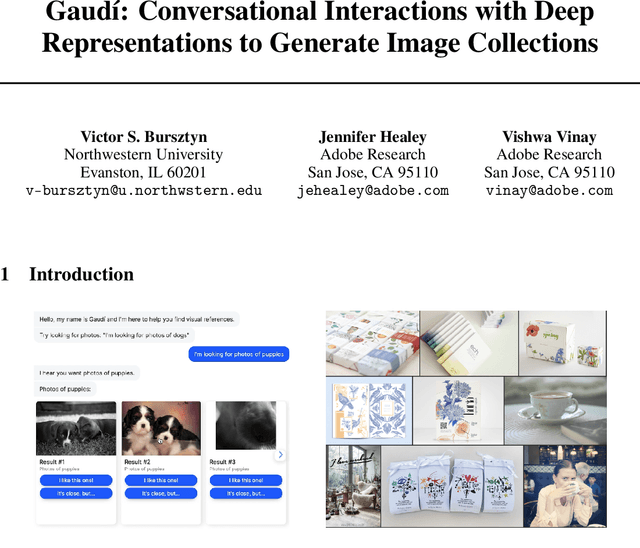

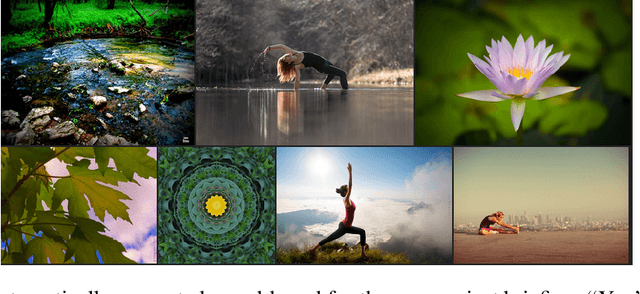

Gaudí: Conversational Interactions with Deep Representations to Generate Image Collections

Dec 05, 2021

Abstract: Based on recent advances in realistic language modeling (GPT-3) and cross-modal representations (CLIP), Gaudí was developed to help designers search for inspirational images using natural language. In the early stages of the design process, with the goal of eliciting a client's preferred creative direction, designers will typically create thematic collections of inspirational images called "mood-boards". Creating a mood-board involves sequential image searches which are currently performed using keywords or images. Gaudí transforms this process into a conversation where the user is gradually detailing the mood-board's theme. This representation allows our AI to generate new search queries from scratch, straight from a project briefing, following a theme hypothesized by GPT-3. Compared to previous computational approaches to mood-board creation, to the best of our knowledge, ours is the first attempt to represent mood-boards as the stories that designers tell when presenting a creative direction to a client.

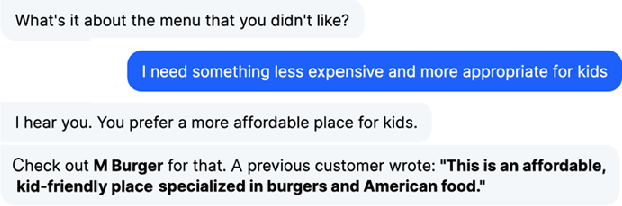

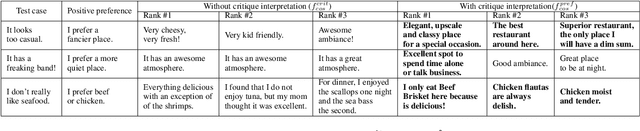

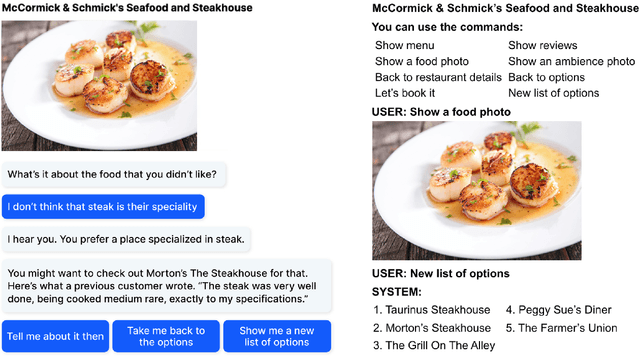

"It doesn't look good for a date": Transforming Critiques into Preferences for Conversational Recommendation Systems

Sep 15, 2021

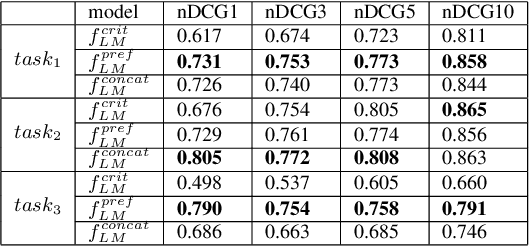

Abstract: Conversations aimed at determining good recommendations are iterative in nature. People often express their preferences in terms of a critique of the current recommendation (e.g., "It doesn't look good for a date"), requiring some degree of common sense for a preference to be inferred. In this work, we present a method for transforming a user critique into a positive preference (e.g., "I prefer more romantic") in order to retrieve reviews pertaining to potentially better recommendations (e.g., "Perfect for a romantic dinner"). We leverage a large neural language model (LM) in a few-shot setting to perform critique-to-preference transformation, and we test two methods for retrieving recommendations: one that matches embeddings, and another that fine-tunes an LM for the task. We instantiate this approach in the restaurant domain and evaluate it using a new dataset of restaurant critiques. In an ablation study, we show that utilizing critique-to-preference transformation improves recommendations, and that there are at least three general cases that explain this improved performance.

Developing a Conversational Recommendation System for Navigating Limited Options

Apr 13, 2021

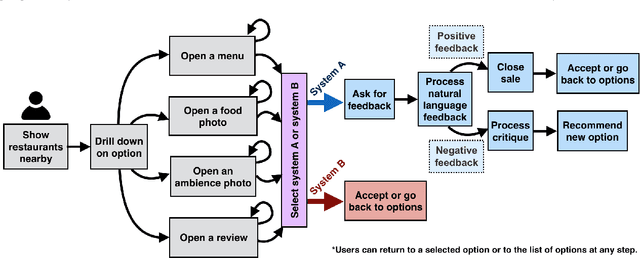

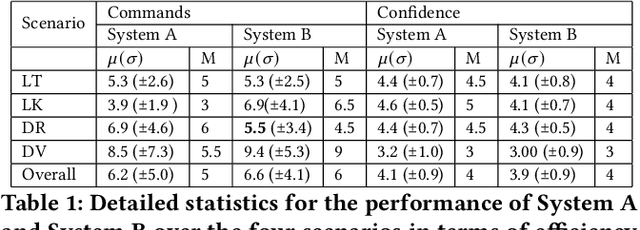

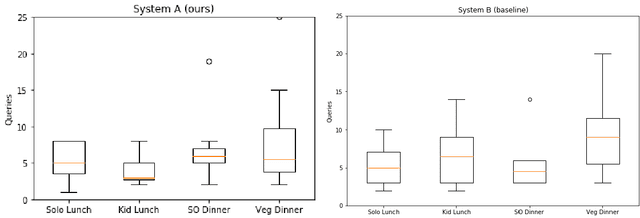

Abstract: We have developed a conversational recommendation system designed to help users navigate through a set of limited options to find the best choice. Unlike many internet-scale systems that use a singular set of search terms and return a ranked list of options from amongst thousands, our system uses multi-turn user dialog to deeply understand the user's preferences. The system responds in context to the user's specific and immediate feedback to make sequential recommendations. We envision our system would be highly useful in situations with intrinsic constraints, such as finding the right restaurant within walking distance or the right retail item within a limited inventory. Our research prototype instantiates the former use case, leveraging real data from Google Places, Yelp, and Zomato. We evaluated our system against a similar system that did not incorporate user feedback in a 16-person remote study, generating 64 scenario-based search journeys. When our recommendation system was successfully triggered, we saw both an increase in efficiency and a higher confidence rating with respect to final user choice. We also found that users preferred our system (75%) compared with the baseline.
