Timothy Bretl

Estimating Force Interactions of Deformable Linear Objects from their Shapes

Feb 01, 2026

Abstract: This work introduces an analytical approach for detecting and estimating external forces acting on deformable linear objects (DLOs) using only their observed shapes. In many robot-wire interaction tasks, contact occurs not at the end-effector but at other points along the robot's body. Such scenarios arise when robots manipulate wires indirectly (e.g., by nudging) or when wires act as passive obstacles in the environment. Accurately identifying these interactions is crucial for safe and efficient trajectory planning, helping to prevent wire damage, avoid restricted robot motions, and mitigate potential hazards. Existing approaches often rely on expensive external force-torque sensors or assume that contacts occur at the end-effector for accurate force estimation. Using wire shape information acquired from a depth camera and under the assumption that the wire is in or near its static equilibrium, our method estimates both the location and magnitude of external forces without additional prior knowledge. This is achieved by exploiting derived consistency conditions and solving a system of linear equations based on force-torque balance along the wire. The approach was validated through simulation, where it achieved high accuracy, and through real-world experiments, where accurate estimation was demonstrated in selected interaction scenarios.

DLO-Splatting: Tracking Deformable Linear Objects Using 3D Gaussian Splatting

May 13, 2025

Abstract: This work presents DLO-Splatting, an algorithm for estimating the 3D shape of Deformable Linear Objects (DLOs) from multi-view RGB images and gripper state information through prediction-update filtering. The DLO-Splatting algorithm uses a position-based dynamics model with shape smoothness and rigidity dampening corrections to predict the object shape. Optimization with a 3D Gaussian Splatting-based rendering loss iteratively renders and refines the prediction to align it with the visual observations in the update step. Initial experiments demonstrate promising results in a knot tying scenario, which is challenging for existing vision-only methods.

GSFeatLoc: Visual Localization Using Feature Correspondence on 3D Gaussian Splatting

May 01, 2025

Abstract: In this paper, we present a method for localizing a query image with respect to a precomputed 3D Gaussian Splatting (3DGS) scene representation. First, the method uses 3DGS to render a synthetic RGBD image at some initial pose estimate. Second, it establishes 2D-2D correspondences between the query image and this synthetic image. Third, it uses the depth map to lift the 2D-2D correspondences to 2D-3D correspondences and solves a perspective-n-point (PnP) problem to produce a final pose estimate. Results from evaluation across three existing datasets with 38 scenes and over 2,700 test images show that our method significantly reduces both inference time (by over two orders of magnitude, from more than 10 seconds to as fast as 0.1 seconds) and estimation error compared to baseline methods that use photometric loss minimization. Results also show that our method tolerates large errors in the initial pose estimate of up to 55° in rotation and 1.1 units in translation (normalized by scene scale), achieving final pose errors of less than 5° in rotation and 0.05 units in translation on 90% of images from the Synthetic NeRF and Mip-NeRF360 datasets and on 42% of images from the more challenging Tanks and Temples dataset.
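
The lifting step described in this abstract can be illustrated with a minimal sketch. It assumes a pinhole camera model with intrinsics `fx`, `fy`, `cx`, `cy` and a depth map indexed as `depth_map[row][col]`; the function names and data layout are hypothetical and are not taken from the paper's implementation.

```python
from typing import List, Sequence, Tuple

Point2 = Tuple[float, float]
Point3 = Tuple[float, float, float]


def backproject(pixel: Point2, depth: float,
                fx: float, fy: float, cx: float, cy: float) -> Point3:
    """Back-project one pixel with known depth into the camera frame (pinhole model)."""
    u, v = pixel
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def lift_correspondences(matches: Sequence[Tuple[Point2, Point2]],
                         depth_map: Sequence[Sequence[float]],
                         fx: float, fy: float, cx: float, cy: float
                         ) -> List[Tuple[Point2, Point3]]:
    """Turn 2D-2D matches (query pixel, rendered pixel) into 2D-3D matches.

    The 3D points are expressed in the frame of the rendered (synthetic) camera.
    Matches whose rendered pixel has no valid depth are dropped.
    """
    lifted = []
    for query_px, rendered_px in matches:
        col = int(round(rendered_px[0]))
        row = int(round(rendered_px[1]))
        depth = depth_map[row][col]
        if depth <= 0.0:
            continue  # assumption: non-positive depth marks a missing value
        lifted.append((query_px, backproject(rendered_px, depth, fx, fy, cx, cy)))
    return lifted
```

The resulting 2D-3D pairs could then be passed to a PnP solver such as OpenCV's `cv2.solvePnP`. Because the points live in the rendered camera's frame, the solved pose would be relative to the initial pose estimate and would need to be composed with it.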

Efficient Extrinsic Self-Calibration of Multiple IMUs using Measurement Subset Selection

Jul 02, 2024

Abstract: This paper addresses the problem of choosing a sparse subset of measurements for quick calibration parameter estimation. A standard solution to this is selecting a measurement only if its utility -- the difference between posterior (with the measurement) and prior information (without the measurement) -- exceeds some threshold. Theoretically, utility, a function of the parameter estimate, should be evaluated at the estimate obtained with all measurements selected so far, hence necessitating a recalibration with each new measurement. However, we hypothesize that utility is insensitive to changes in the parameter estimate for many systems of interest, suggesting that evaluating utility at some initial parameter guess would yield equivalent results in practice. We provide evidence supporting this hypothesis for extrinsic calibration of multiple inertial measurement units (IMUs), showing a reduction in calibration time of two orders of magnitude by forgoing recalibration for each measurement.
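
The selection rule described in this abstract can be sketched for a toy case. The example below assumes a single scalar parameter, Gaussian measurement noise, and a utility defined as the log-information gain; the paper's exact utility measure is not stated here, so this choice, along with every function and parameter name, is hypothetical. The measurement Jacobian is always evaluated at the fixed initial guess `theta0`, so no recalibration happens inside the loop.

```python
import math
from typing import Callable, List, Sequence, Tuple


def select_measurements(inputs: Sequence[float],
                        jacobian: Callable[[float, float], float],
                        theta0: float,
                        noise_var: float,
                        prior_info: float,
                        threshold: float) -> Tuple[List[int], float]:
    """Greedily keep measurements whose utility exceeds a threshold.

    Utility (an assumed definition) is 0.5 * log(posterior_info / prior_info)
    for a scalar parameter, with the Jacobian evaluated at the initial guess.
    Returns the indices of the selected measurements and the final information.
    """
    info = prior_info
    selected: List[int] = []
    for index, x in enumerate(inputs):
        j = jacobian(theta0, x)          # sensitivity at the initial guess
        gain = j * j / noise_var         # Fisher information of this measurement
        utility = 0.5 * math.log((info + gain) / info)
        if utility > threshold:
            selected.append(index)
            info += gain                 # accumulate information, no re-estimation
    return selected, info
```

Evaluating the Jacobian at the running estimate instead of `theta0` would require re-solving the calibration after each accepted measurement, which is the cost the abstract proposes to avoid.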

The Impact of Time Step Frequency on the Realism of Robotic Manipulation Simulation for Objects of Different Scales

Oct 12, 2023

Abstract: This work evaluates the impact of time step frequency and component scale on robotic manipulation simulation accuracy. Increasing the time step frequency for small-scale objects is shown to improve simulation accuracy. This simulation, demonstrating pre-assembly part picking for two object geometries, serves as a starting point for discussing how to improve Sim2Real transfer in robotic assembly processes.

Dynamic Manipulation of a Deformable Linear Object: Simulation and Learning

Oct 02, 2023

Abstract: We show that it is possible to learn an open-loop policy in simulation for the dynamic manipulation of a deformable linear object (DLO) -- e.g., a rope, wire, or cable -- that can be executed by a real robot without additional training. Our method is enabled by integrating an existing state-of-the-art DLO model (Discrete Elastic Rods) with MuJoCo, a robot simulator. We describe how this integration was done, check that validation results produced in simulation match what we expect from analysis of the physics, and apply policy optimization to train an open-loop policy from data collected only in simulation that uses a robot arm to fling a wire precisely between two obstacles. This policy achieves a success rate of 76.7% when executed by a real robot in hardware experiments without additional training on the real task.

The Use of Multi-Scale Fiducial Markers To Aid Takeoff and Landing Navigation by Rotorcraft

Sep 15, 2023

Abstract: This paper quantifies the impact of adverse environmental conditions on the detection of fiducial markers (i.e., artificial landmarks) by color cameras mounted on rotorcraft. We restrict our attention to square markers with a black-and-white pattern of grid cells that can be nested to allow detection at multiple scales. These markers have the potential to enhance the reliability of precision takeoff and landing at vertiports by flying vehicles in urban settings. Prior work has shown, in particular, that these markers can be detected with high precision (i.e., few false positives) and high recall (i.e., few false negatives). However, most of this prior work has been based on image sequences collected indoors with hand-held cameras. Our work is based on image sequences collected outdoors with cameras mounted on a quadrotor during semi-autonomous takeoff and landing operations under adverse environmental conditions that include variations in temperature, illumination, wind speed, humidity, visibility, and precipitation. In addition to precision and recall, performance measures include continuity, availability, robustness, resiliency, and coverage volume. We release both our dataset and the code we used for analysis to the public as open source.

Comparative Study of Visual SLAM-Based Mobile Robot Localization Using Fiducial Markers

Sep 08, 2023

Abstract: This paper presents a comparative study of three modes for mobile robot localization based on visual SLAM using fiducial markers (i.e., square-shaped artificial landmarks with a black-and-white grid pattern): SLAM, SLAM with a prior map, and localization with a prior map. We compare SLAM-based approaches that leverage fiducial markers because previous work has shown their superior performance over feature-only methods, with less computational burden than methods that use both feature and marker detection and without compromising localization performance. The evaluation is conducted using indoor image sequences captured with a hand-held camera containing multiple fiducial markers in the environment. The performance metrics include absolute trajectory error and runtime for the optimization process per frame. In particular, for the last two modes (SLAM with a prior map and localization with a prior map), we evaluate their performance by perturbing the quality of the prior map to study the extent to which each mode is tolerant to such perturbations. Hardware experiments show consistent trajectory error levels across the three modes, with the localization mode exhibiting the shortest runtime among them. Yet, with map perturbations, SLAM with a prior map maintains performance, while localization mode degrades in both aspects.

Learning from Integral Losses in Physics Informed Neural Networks

May 27, 2023

Abstract: This work proposes a solution for the problem of training physics informed networks under partial integro-differential equations. These equations require an infinite or a large number of neural evaluations to construct a single residual for training. As a result, accurate evaluation may be impractical, and we show that naive approximations that replace these integrals with unbiased estimates lead to biased loss functions and solutions. To overcome this bias, we investigate three types of solutions: the deterministic sampling approach, the double-sampling trick, and the delayed target method. We consider three classes of PDEs for benchmarking: one defining a Poisson problem with singular charges and weak solutions, another involving weak solutions on electro-magnetic fields and a Maxwell equation, and a third one defining a Smoluchowski coagulation problem. Our numerical results confirm the existence of the aforementioned bias in practice, and also show that our proposed delayed target approach can lead to accurate solutions with comparable quality to ones estimated with a large number of samples. Our implementation is open-source and available at https://github.com/ehsansaleh/btspinn.
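
The source of the bias mentioned in this abstract can be seen in a short worked derivation. The notation is assumed for illustration and is not taken from the paper: the residual is written as $a_\theta - I_\theta$, where $I_\theta = \mathbb{E}_y[g_\theta(y)]$ is the integral term and $\hat{I}_\theta = \frac{1}{N}\sum_{i=1}^{N} g_\theta(y_i)$ is its unbiased Monte Carlo estimate from $N$ independent samples.

```latex
% Squaring a residual built from an unbiased estimate adds a variance term:
\mathbb{E}\big[(a_\theta - \hat{I}_\theta)^2\big]
  = (a_\theta - I_\theta)^2 + \operatorname{Var}(\hat{I}_\theta)
  = (a_\theta - I_\theta)^2 + \frac{1}{N}\operatorname{Var}_y\big(g_\theta(y)\big).

% With two independent estimates (double sampling), the product is unbiased:
\mathbb{E}\big[(a_\theta - \hat{I}^{(1)}_\theta)(a_\theta - \hat{I}^{(2)}_\theta)\big]
  = (a_\theta - I_\theta)^2 .
```

Because the extra variance term depends on $\theta$, minimizing the naive squared loss also pushes the network toward low-variance integrands rather than only toward a zero residual. Under these assumptions, that is one way to read the bias the abstract describes.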

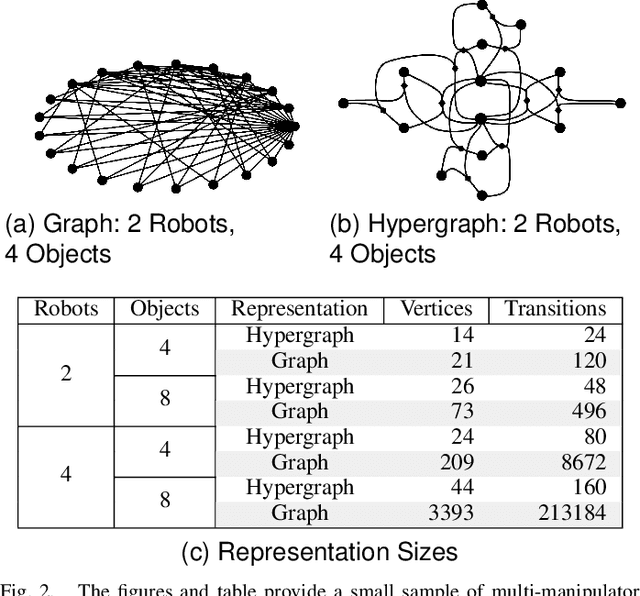

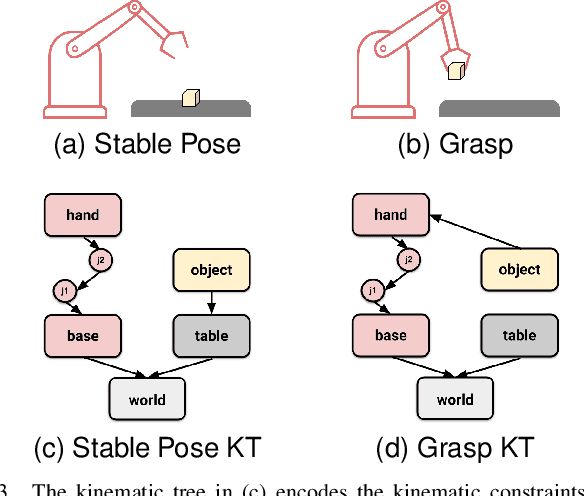

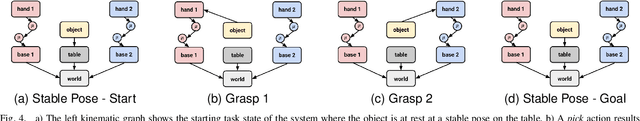

Hypergraph-based Multi-Robot Task and Motion Planning

Oct 09, 2022

Abstract: We present a multi-robot task and motion planning method that, when applied to the rearrangement of objects by manipulators, produces solution times up to three orders of magnitude faster than existing methods. We achieve this improvement by decomposing the planning space into subspaces for independent manipulators, objects, and manipulators holding objects. We represent this decomposition with a hypergraph where vertices are substates and hyperarcs are transitions between substates. Existing methods use graph-based representations where vertices are full states and edges are transitions between states. Using the hypergraph reduces the size of the planning space: for multi-manipulator object rearrangement, the number of hypergraph vertices scales linearly with the number of either robots or objects, while the number of hyperarcs scales quadratically with the number of robots and linearly with the number of objects. In contrast, the number of vertices and edges in graph-based representations scales exponentially in the number of robots and objects. Additionally, the hypergraph provides a structure to reason over varying levels of (de)coupled spaces and transitions between them, enabling a hybrid search of the planning space. We show that similar gains can be achieved for other multi-robot task and motion planning problems.
