Tianchen Ji

Learning Coordinated Bimanual Manipulation Policies using State Diffusion and Inverse Dynamics Models

Mar 30, 2025

Abstract:When performing tasks like laundry, humans naturally coordinate both hands to manipulate objects and anticipate how their actions will change the state of the clothes. However, achieving such coordination in robotics remains challenging due to the need to model object movement, predict future states, and generate precise bimanual actions. In this work, we address these challenges by infusing the predictive nature of human manipulation strategies into robot imitation learning. Specifically, we disentangle task-related state transitions from agent-specific inverse dynamics modeling to enable effective bimanual coordination. Using a demonstration dataset, we train a diffusion model to predict future states given historical observations, envisioning how the scene evolves. Then, we use an inverse dynamics model to compute robot actions that achieve the predicted states. Our key insight is that modeling object movement can help learning policies for bimanual coordination manipulation tasks. Evaluating our framework across diverse simulation and real-world manipulation setups, including multimodal goal configurations, bimanual manipulation, deformable objects, and multi-object setups, we find that it consistently outperforms state-of-the-art state-to-action mapping policies. Our method demonstrates a remarkable capacity to navigate multimodal goal configurations and action distributions, maintain stability across different control modes, and synthesize a broader range of behaviors than those present in the demonstration dataset.

An Expert Ensemble for Detecting Anomalous Scenes, Interactions, and Behaviors in Autonomous Driving

Feb 23, 2025

Abstract:As automated vehicles enter public roads, safety in a near-infinite number of driving scenarios becomes one of the major concerns for the widespread adoption of fully autonomous driving. The ability to detect anomalous situations outside of the operational design domain is a key component in self-driving cars, enabling us to mitigate the impact of abnormal ego behaviors and to realize trustworthy driving systems. On-road anomaly detection in egocentric videos remains a challenging problem due to the difficulties introduced by complex and interactive scenarios. We conduct a holistic analysis of common on-road anomaly patterns, from which we propose three unsupervised anomaly detection experts: a scene expert that focuses on frame-level appearances to detect abnormal scenes and unexpected scene motions; an interaction expert that models normal relative motions between two road participants and raises alarms whenever anomalous interactions emerge; and a behavior expert which monitors abnormal behaviors of individual objects by future trajectory prediction. To combine the strengths of all the modules, we propose an expert ensemble (Xen) using a Kalman filter, in which the final anomaly score is absorbed as one of the states and the observations are generated by the experts. Our experiments employ a novel evaluation protocol for realistic model performance, demonstrate superior anomaly detection performance than previous methods, and show that our framework has potential in classifying anomaly types using unsupervised learning on a large-scale on-road anomaly dataset.
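
The idea of fusing expert outputs with a Kalman filter can be illustrated with a deliberately simplified sketch. This is not the Xen formulation from the paper: it assumes a single scalar state (the anomaly score) that follows a random walk, and treats each expert's score as an independent noisy observation of that state. The function name `fuse_expert_scores`, the noise variances, and the initial values are all hypothetical choices made for illustration.

```python
def fuse_expert_scores(score_frames, obs_vars, process_var=0.01,
                       init_mean=0.0, init_var=1.0):
    """Fuse per-frame expert scores with a scalar Kalman filter.

    score_frames: list of frames, each a list with one score per expert.
    obs_vars:     assumed observation noise variance for each expert.
    Returns the filtered anomaly score for every frame.
    """
    mean, var = init_mean, init_var
    fused = []
    for scores in score_frames:
        # Predict step: random-walk model, so only the uncertainty grows.
        var += process_var
        # Update step: apply each expert's observation sequentially.
        for z, r in zip(scores, obs_vars):
            gain = var / (var + r)
            mean += gain * (z - mean)
            var *= (1.0 - gain)
        fused.append(mean)
    return fused


if __name__ == "__main__":
    # Three hypothetical experts that all report a score of 1.0 for 20 frames.
    frames = [[1.0, 1.0, 1.0] for _ in range(20)]
    print(fuse_expert_scores(frames, obs_vars=[0.1, 0.2, 0.4])[-1])
```

Under these assumptions, an expert with a smaller observation variance pulls the fused score more strongly, which is one simple way to weight more reliable experts.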

Interaction-aware Conformal Prediction for Crowd Navigation

Feb 10, 2025

Abstract:During crowd navigation, robot motion plan needs to consider human motion uncertainty, and the human motion uncertainty is dependent on the robot motion plan. We introduce Interaction-aware Conformal Prediction (ICP) to alternate uncertainty-aware robot motion planning and decision-dependent human motion uncertainty quantification. ICP is composed of a trajectory predictor to predict human trajectories, a model predictive controller to plan robot motion with confidence interval radii added for probabilistic safety, a human simulator to collect human trajectory calibration dataset conditioned on the planned robot motion, and a conformal prediction module to quantify trajectory prediction error on the decision-dependent calibration dataset. Crowd navigation simulation experiments show that ICP strikes a good balance of performance among navigation efficiency, social awareness, and uncertainty quantification compared to previous works. ICP generalizes well to navigation tasks under various crowd densities. The fast runtime and efficient memory usage make ICP practical for real-world applications. Code is available at https://github.com/tedhuang96/icp.
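
As a rough illustration of how a conformal prediction module can turn calibration errors into a confidence radius, the sketch below implements the standard split conformal quantile. It is not taken from the ICP repository: the function name `conformal_radius` and the example numbers are hypothetical, and the sketch assumes the calibration errors are exchangeable with the test-time error.

```python
import math


def conformal_radius(calibration_errors, alpha):
    """Return a radius covering a new error with probability >= 1 - alpha.

    calibration_errors: prediction errors (e.g. distances in meters)
                        measured on a held-out calibration set.
    alpha:              allowed miscoverage rate, strictly between 0 and 1.
    """
    n = len(calibration_errors)
    if n == 0:
        raise ValueError("calibration set must not be empty")
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie strictly between 0 and 1")
    # Finite-sample corrected rank: the ceil((n + 1)(1 - alpha))-th smallest.
    rank = math.ceil((n + 1) * (1.0 - alpha))
    if rank > n:
        # Too few calibration samples to certify this coverage level.
        return math.inf
    return sorted(calibration_errors)[rank - 1]


if __name__ == "__main__":
    errors = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
    print(conformal_radius(errors, alpha=0.2))  # 9th smallest error
```

A planner could then inflate each predicted human position by this radius when checking collision constraints; how ICP does this in detail is described in the paper and its code.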

Towards Real-Time Generation of Delay-Compensated Video Feeds for Outdoor Mobile Robot Teleoperation

Sep 16, 2024

Abstract:Teleoperation is an important technology to enable supervisors to control agricultural robots remotely. However, environmental factors in dense crop rows and limitations in network infrastructure hinder the reliability of data streamed to teleoperators. These issues result in delayed and variable frame rate video feeds that often deviate significantly from the robot's actual viewpoint. We propose a modular learning-based vision pipeline to generate delay-compensated images in real-time for supervisors. Our extensive offline evaluations demonstrate that our method generates more accurate images compared to state-of-the-art approaches in our setting. Additionally, we are one of the few works to evaluate a delay-compensation method in outdoor field environments with complex terrain on data from a real robot in real-time. Additional videos are provided at https://sites.google.com/illinois.edu/comp-teleop.

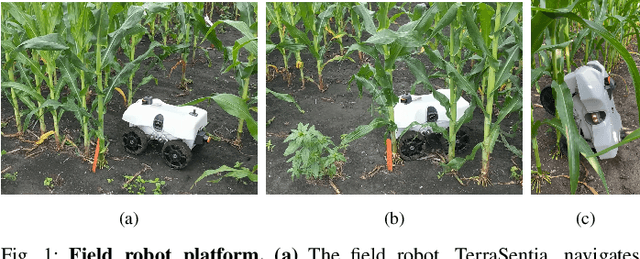

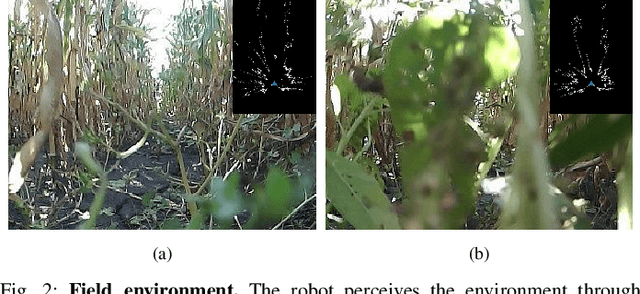

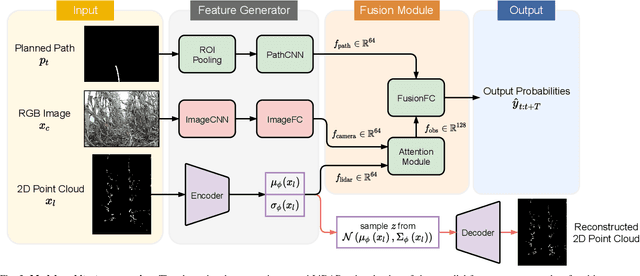

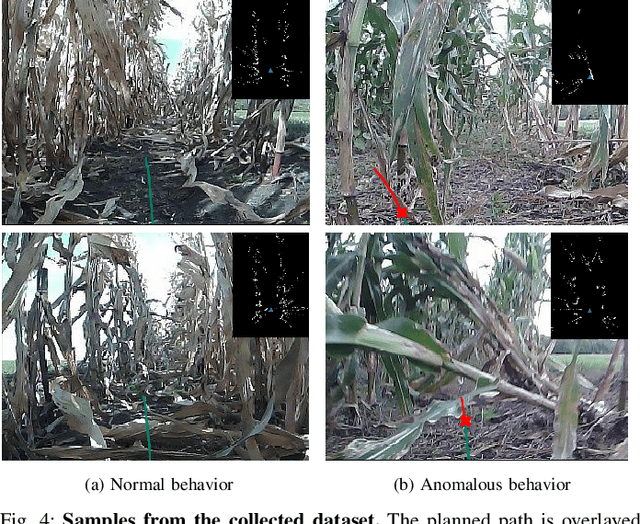

An Attentional Recurrent Neural Network for Occlusion-Aware Proactive Anomaly Detection in Field Robot Navigation

Sep 28, 2023

Abstract:The use of mobile robots in unstructured environments like the agricultural field is becoming increasingly common. The ability for such field robots to proactively identify and avoid failures is thus crucial for ensuring efficiency and avoiding damage. However, the cluttered field environment introduces various sources of noise (such as sensor occlusions) that make proactive anomaly detection difficult. Existing approaches can show poor performance in sensor occlusion scenarios as they typically do not explicitly model occlusions and only leverage current sensory inputs. In this work, we present an attention-based recurrent neural network architecture for proactive anomaly detection that fuses current sensory inputs and planned control actions with a latent representation of prior robot state. We enhance our model with an explicitly-learned model of sensor occlusion that is used to modulate the use of our latent representation of prior robot state. Our method shows improved anomaly detection performance and enables mobile field robots to display increased resilience to predicting false positives regarding navigation failure during periods of sensor occlusion, particularly in cases where all sensors are briefly occluded. Our code is available at: https://github.com/andreschreiber/roar

Learning Rewards and Skills to Follow Commands with A Data Efficient Visual-Audio Representation

Jan 23, 2023

Abstract:Based on the recent advancements in representation learning, we propose a novel framework for command-following robots with raw sensor inputs. Previous RL-based methods are either difficult to continuously improve after the deployment or require a large number of new labels during the fine-tuning. Motivated by (self-)supervised contrastive learning literature, we propose a novel representation, named VAR++, that generates an intrinsic reward function for command-following robot tasks by associating images with sound commands. After the robot is deployed in a new domain, the representation can be updated intuitively and data-efficiently by non-experts, and the robot is able to fulfill sound commands without any hand-crafted reward functions. We demonstrate our approach on various sound types and robotic tasks, including navigation and manipulation with raw sensor inputs. In the simulated experiments, we show that our system can continually self-improve in previously unseen scenarios given fewer new labeled data, yet achieves better performance, compared with previous methods.

Structural Attention-Based Recurrent Variational Autoencoder for Highway Vehicle Anomaly Detection

Jan 09, 2023

Abstract:In autonomous driving, detection of abnormal driving behaviors is essential to ensure the safety of vehicle controllers. Prior works in vehicle anomaly detection have shown that modeling interactions between agents improves detection accuracy, but certain abnormal behaviors where structured road information is paramount are poorly identified, such as wrong-way and off-road driving. We propose a novel unsupervised framework for highway anomaly detection named Structural Attention-based Recurrent VAE (SABeR-VAE), which explicitly uses the structure of the environment to aid anomaly identification. Specifically, we use a vehicle self-attention module to learn the relations among vehicles on a road, and a separate lane-vehicle attention module to model the importance of permissible lanes to aid in trajectory prediction. Conditioned on the attention modules' outputs, a recurrent encoder-decoder architecture with a stochastic Koopman operator-propagated latent space predicts the next states of vehicles. Our model is trained end-to-end to minimize prediction loss on normal vehicle behaviors, and is deployed to detect anomalies in (ab)normal scenarios. By combining the heterogeneous vehicle and lane information, SABeR-VAE and its deterministic variant, SABeR-AE, improve abnormal AUPR by 18% and 25% respectively on the simulated MAAD highway dataset. Furthermore, we show that the learned Koopman operator in SABeR-VAE enforces interpretable structure in the variational latent space. The results of our method indeed show that modeling environmental factors is essential to detecting a diverse set of anomalies in deployment.
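
For readers unfamiliar with the AUPR metric mentioned above, the following sketch computes average precision, one common estimator of the area under the precision-recall curve. The abstract does not say how AUPR was computed for SABeR-VAE, so this is only an illustrative assumption; the function name `average_precision` and the toy labels and scores are hypothetical, and tied scores are not given special handling.

```python
def average_precision(labels, scores):
    """Average precision for binary labels (1 = anomaly) and anomaly scores.

    Samples are ranked by descending score; precision is accumulated at
    the rank of every positive sample and averaged over all positives.
    """
    if len(labels) != len(scores):
        raise ValueError("labels and scores must have the same length")
    n_pos = sum(labels)
    if n_pos == 0:
        raise ValueError("at least one positive label is required")
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    true_pos = 0
    total = 0.0
    for rank, idx in enumerate(order, start=1):
        if labels[idx] == 1:
            true_pos += 1
            total += true_pos / rank  # precision at this recall level
    return total / n_pos


if __name__ == "__main__":
    print(average_precision([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.1]))
```

Treating the abnormal class as positive, as done here, corresponds to the "abnormal AUPR" phrasing in the abstract.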

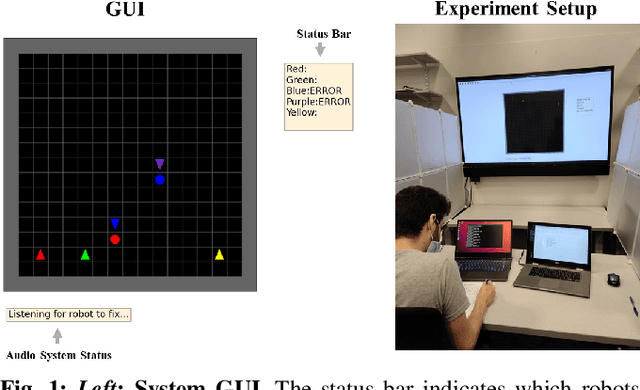

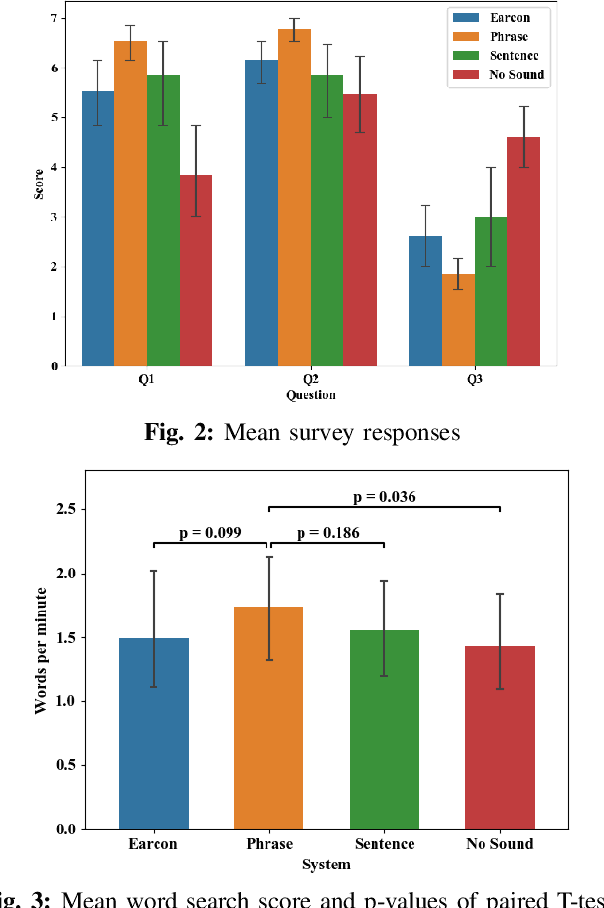

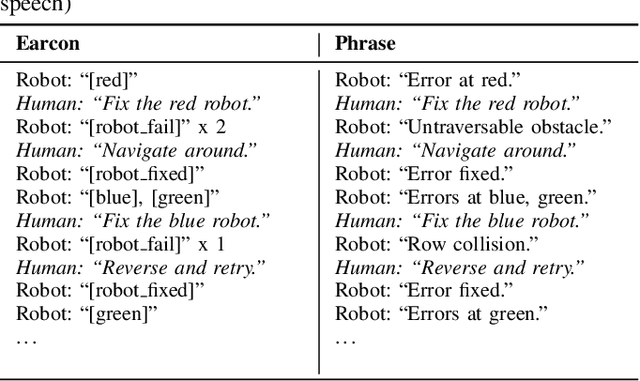

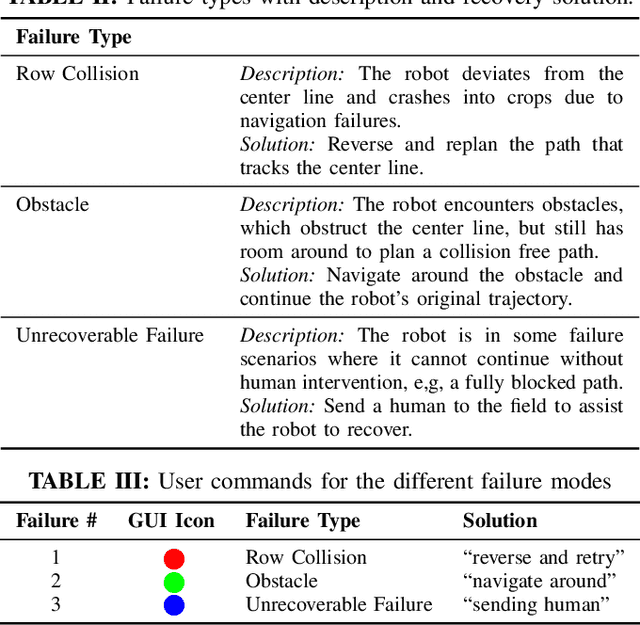

Examining Audio Communication Mechanisms for Supervising Fleets of Agricultural Robots

Aug 22, 2022

Abstract:Agriculture is facing a labor crisis, leading to increased interest in fleets of small, under-canopy robots (agbots) that can perform precise, targeted actions (e.g., crop scouting, weeding, fertilization), while being supervised by human operators remotely. However, farmers are not necessarily experts in robotics technology and will not adopt technologies that add to their workload or do not provide an immediate payoff. In this work, we explore methods for communication between a remote human operator and multiple agbots and examine the impact of audio communication on the operator's preferences and productivity. We develop a simulation platform where agbots are deployed across a field, randomly encounter failures, and call for help from the operator. As the agbots report errors, various audio communication mechanisms are tested to convey which robot failed and what type of failure occurs. The human is tasked with verbally diagnosing the failure while completing a secondary task. A user study was conducted to test three audio communication methods: earcons, single-phrase commands, and full sentence communication. Each participant completed a survey to determine their preferences and each method's overall effectiveness. Our results suggest that the system using single phrases is the most positively perceived by participants and may allow for the human to complete the secondary task more efficiently. The code is available at: https://github.com/akamboj2/Agbot-Sim.

Traversing Supervisor Problem: An Approximately Optimal Approach to Multi-Robot Assistance

May 03, 2022

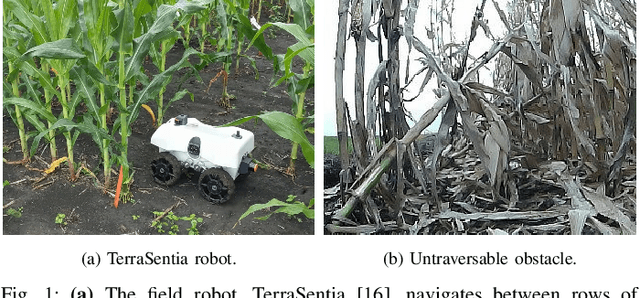

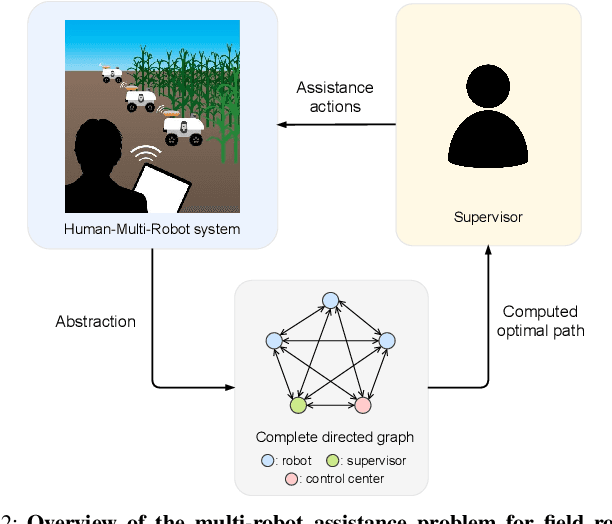

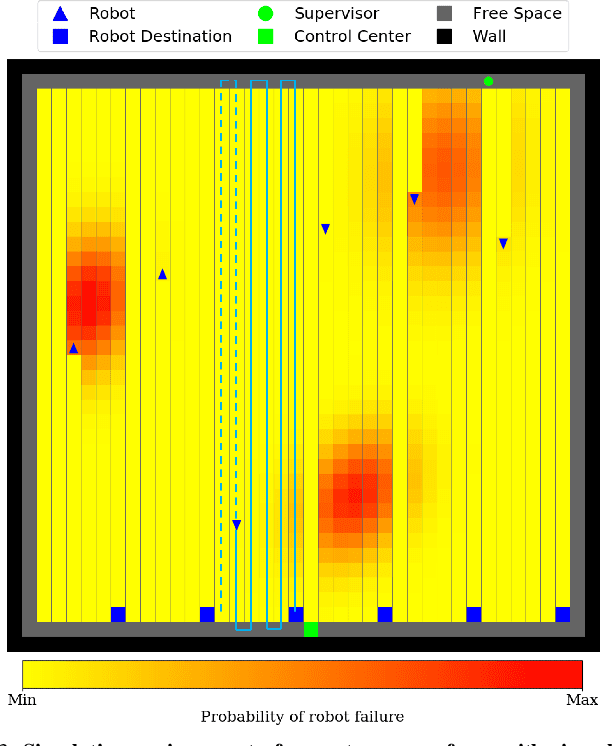

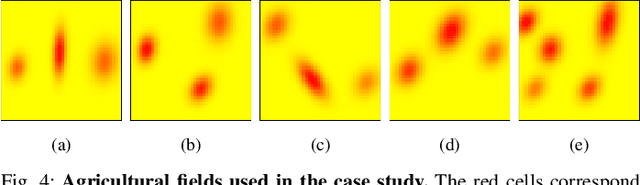

Abstract:The number of multi-robot systems deployed in field applications has increased dramatically over the years. Despite the recent advancement of navigation algorithms, autonomous robots often encounter challenging situations where the control policy fails and the human assistance is required to resume robot tasks. Human-robot collaboration can help achieve high-levels of autonomy, but monitoring and managing multiple robots at once by a single human supervisor remains a challenging problem. Our goal is to help a supervisor decide which robots to assist in which order such that the team performance can be maximized. We formulate the one-to-many supervision problem in uncertain environments as a dynamic graph traversal problem. An approximation algorithm based on the profitable tour problem on a static graph is developed to solve the original problem, and the approximation error is bounded and analyzed. Our case study on a simulated autonomous farm demonstrates superior team performance than baseline methods in task completion time and human working time, and that our method can be deployed in real-time for robot fleets with moderate size.

Proactive Anomaly Detection for Robot Navigation with Multi-Sensor Fusion

Apr 03, 2022

Abstract:Despite the rapid advancement of navigation algorithms, mobile robots often produce anomalous behaviors that can lead to navigation failures. The ability to detect such anomalous behaviors is a key component in modern robots to achieve high-levels of autonomy. Reactive anomaly detection methods identify anomalous task executions based on the current robot state and thus lack the ability to alert the robot before an actual failure occurs. Such an alert delay is undesirable due to the potential damage to both the robot and the surrounding objects. We propose a proactive anomaly detection network (PAAD) for robot navigation in unstructured and uncertain environments. PAAD predicts the probability of future failure based on the planned motions from the predictive controller and the current observation from the perception module. Multi-sensor signals are fused effectively to provide robust anomaly detection in the presence of sensor occlusion as seen in field environments. Our experiments on field robot data demonstrates superior failure identification performance than previous methods, and that our model can capture anomalous behaviors in real-time while maintaining a low false detection rate in cluttered fields. Code, dataset, and video are available at https://github.com/tianchenji/PAAD
