Tatsuya Harada

Sketch-based Medical Image Retrieval

Mar 07, 2023

Abstract:The amount of medical images stored in hospitals is increasing faster than ever; however, utilizing the accumulated medical images has been limited. This is because existing content-based medical image retrieval (CBMIR) systems usually require example images to construct query vectors; nevertheless, example images cannot always be prepared. Besides, there can be images with rare characteristics that make it difficult to find similar example images, which we call isolated samples. Here, we introduce a novel sketch-based medical image retrieval (SBMIR) system that enables users to find images of interest without example images. The key idea lies in feature decomposition of medical images, whereby the entire feature of a medical image can be decomposed into and reconstructed from normal and abnormal features. By extending this idea, our SBMIR system provides an easy-to-use two-step graphical user interface: users first select a template image to specify a normal feature and then draw a semantic sketch of the disease on the template image to represent an abnormal feature. Subsequently, it integrates the two kinds of input to construct a query vector and retrieves reference images with the closest reference vectors. Using two datasets, ten healthcare professionals with various clinical backgrounds participated in the user test for evaluation. As a result, our SBMIR system enabled users to overcome previous challenges, including image retrieval based on fine-grained image characteristics, image retrieval without example images, and image retrieval for isolated samples. Our SBMIR system achieves flexible medical image retrieval on demand, thereby expanding the utility of medical image databases.
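The query-and-retrieve step described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the element-wise sum used to combine the normal and abnormal features, the Euclidean distance, and the names `build_query` and `retrieve` are all assumptions made for illustration.

```python
import math


def build_query(normal_feature, abnormal_feature):
    # Assumption: the two features are combined by element-wise addition.
    # The abstract only says they are "integrated" into one query vector.
    return [n + a for n, a in zip(normal_feature, abnormal_feature)]


def retrieve(query, reference_vectors, k=3):
    # Rank reference images by Euclidean distance to the query (assumed metric)
    # and return the names of the k closest ones.
    ranked = sorted(reference_vectors.items(),
                    key=lambda item: math.dist(query, item[1]))
    return [name for name, _ in ranked[:k]]


# Hypothetical toy data: three reference images with 2-D feature vectors.
references = {"a": [0.0, 0.0], "b": [1.0, 1.0], "c": [5.0, 5.0]}
query = build_query([1.0, 0.0], [0.0, 1.0])
closest = retrieve(query, references, k=2)
```

In this toy setting the template image supplies the first vector and the sketch supplies the second, so no example image is needed to form the query.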

Interpretable Medical Image Visual Question Answering via Multi-Modal Relationship Graph Learning

Feb 19, 2023

Abstract:Medical visual question answering (VQA) aims to answer clinically relevant questions regarding input medical images. This technique has the potential to improve the efficiency of medical professionals while relieving the burden on the public health system, particularly in resource-poor countries. Existing medical VQA methods tend to encode medical images and learn the correspondence between visual features and questions without exploiting the spatial, semantic, or medical knowledge behind them. This is partially because of the small size of the current medical VQA dataset, which often includes simple questions. Therefore, we first collected a comprehensive and large-scale medical VQA dataset, focusing on chest X-ray images. The questions involved detailed relationships, such as disease names, locations, levels, and types in our dataset. Based on this dataset, we also propose a novel baseline method by constructing three different relationship graphs: spatial relationship, semantic relationship, and implicit relationship graphs on the image regions, questions, and semantic labels. The answer and graph reasoning paths are learned for different questions.

Name Your Colour For the Task: Artificially Discover Colour Naming via Colour Quantisation Transformer

Dec 07, 2022

Abstract:The long-standing theory that a colour-naming system evolves under the dual pressure of efficient communication and perceptual mechanism is supported by more and more linguistic studies, including the analysis of four decades' diachronic data from the Nafaanra language. This inspires us to explore whether artificial intelligence could evolve and discover a similar colour-naming system by optimising the communication efficiency represented by high-level recognition performance. Here, we propose a novel colour quantisation transformer, CQFormer, that quantises colour space while maintaining the accuracy of machine recognition on the quantised images. Given an RGB image, the Annotation Branch maps it into an index map before generating the quantised image with a colour palette, while the Palette Branch uses a key-point detection approach to find suitable palette colours within the whole colour space. By interacting with colour annotation, CQFormer is able to balance machine vision accuracy and colour perceptual structure, such as a distinct and stable colour distribution for the discovered colour system. Very interestingly, we even observe a consistent evolution pattern between our artificial colour system and basic colour terms across human languages. Besides, our method also offers efficient colour quantisation that effectively compresses the image storage while maintaining high performance in high-level recognition tasks such as classification and detection. Extensive experiments demonstrate the superior performance of our method with extremely low bit-rate colours. We will release the source code soon.
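The index-map and palette representation mentioned in this abstract can be sketched as follows. This is only an illustration of palette-based quantisation: CQFormer learns both the index assignment and the palette, whereas the sketch below assumes a fixed, hand-picked palette and nearest-colour assignment, and the function name `quantise` is hypothetical.

```python
def quantise(image, palette):
    """Return (index_map, quantised_image) for an image given as rows of RGB tuples."""

    def nearest(pixel):
        # Index of the palette colour with the smallest squared RGB distance.
        return min(range(len(palette)),
                   key=lambda i: sum((p - c) ** 2 for p, c in zip(pixel, palette[i])))

    # The index map stores one palette index per pixel.
    index_map = [[nearest(px) for px in row] for row in image]
    # The quantised image is rebuilt by looking each index up in the palette.
    quantised = [[palette[i] for i in row] for row in index_map]
    return index_map, quantised


# Hypothetical 1x2 image and a two-colour (1-bit) palette.
palette = [(0, 0, 0), (255, 255, 255)]
image = [[(10, 10, 10), (250, 240, 245)]]
index_map, quantised = quantise(image, palette)
```

With a palette of 2^b colours, each pixel of the index map needs only b bits, which is where the storage saving comes from.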

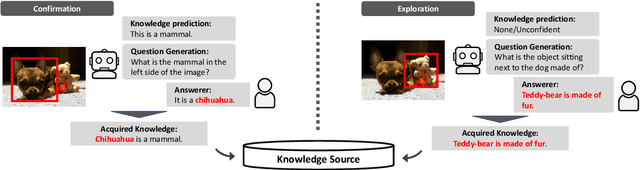

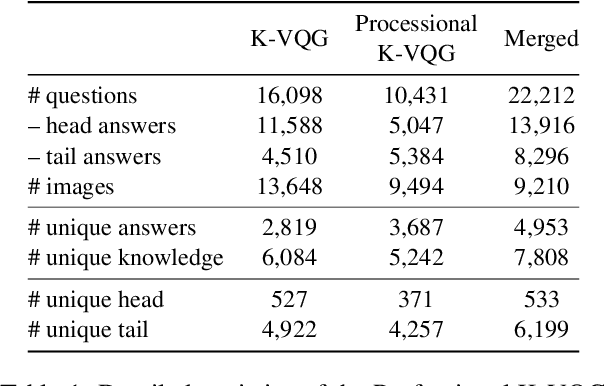

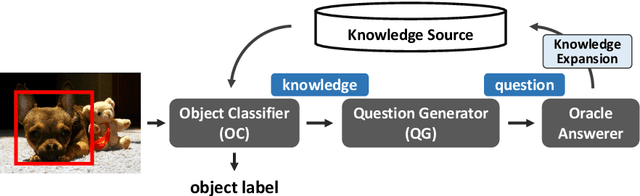

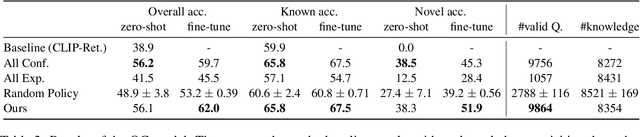

Learning by Asking Questions for Knowledge-based Novel Object Recognition

Oct 12, 2022

Abstract:In real-world object recognition, there are numerous object classes to be recognized. Conventional image recognition based on supervised learning can only recognize object classes that exist in the training data, and thus has limited applicability in the real world. On the other hand, humans can recognize novel objects by asking questions and acquiring knowledge about them. Inspired by this, we study a framework for acquiring external knowledge through question generation that would help the model instantly recognize novel objects. Our pipeline consists of two components: the Object Classifier, which performs knowledge-based object recognition, and the Question Generator, which generates knowledge-aware questions to acquire novel knowledge. We also propose a question generation strategy based on the confidence of the knowledge-aware prediction of the Object Classifier. To train the Question Generator, we construct a dataset that contains knowledge-aware questions about objects in the images. Our experiments show that the proposed pipeline effectively acquires knowledge about novel objects compared to several baselines.

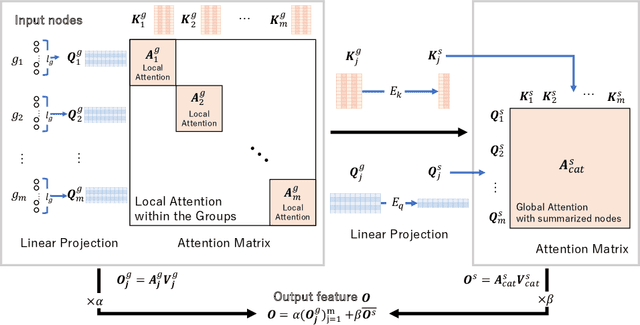

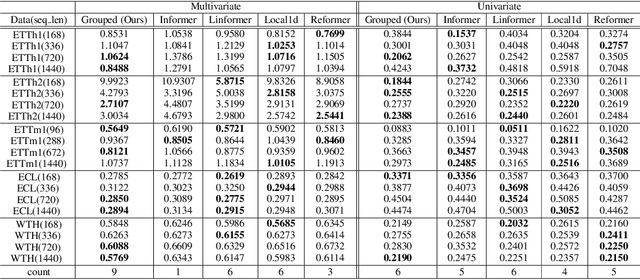

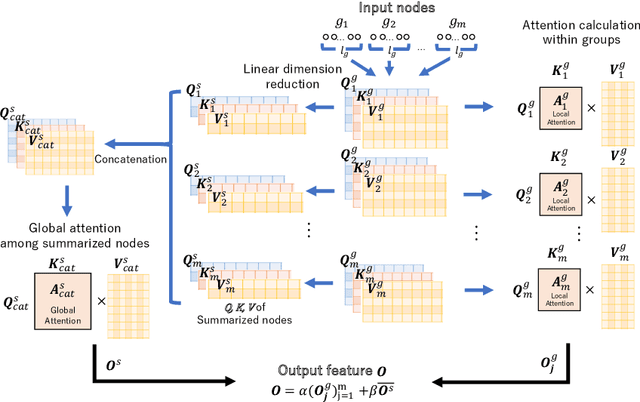

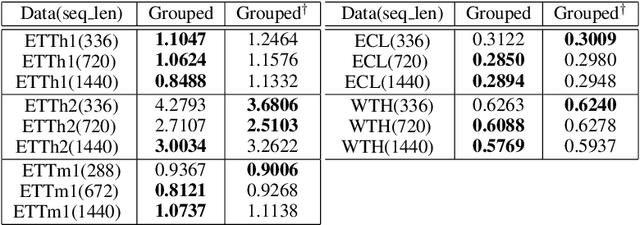

Grouped self-attention mechanism for a memory-efficient Transformer

Oct 06, 2022

Abstract:Time-series data analysis is important because numerous real-world tasks such as forecasting weather, electricity consumption, and stock market involve predicting data that vary over time. Time-series data are generally recorded over a long period of observation with long sequences owing to their periodic characteristics and long-range dependencies over time. Thus, capturing long-range dependency is an important factor in time-series data forecasting. To solve these problems, we proposed two novel modules, Grouped Self-Attention (GSA) and Compressed Cross-Attention (CCA). With both modules, we achieved a computational space and time complexity of order $O(l)$ with a sequence length $l$ under small hyperparameter limitations, and can capture locality while considering global information. The results of experiments conducted on time-series datasets show that our proposed model efficiently exhibited reduced computational complexity and performance comparable to or better than existing methods.

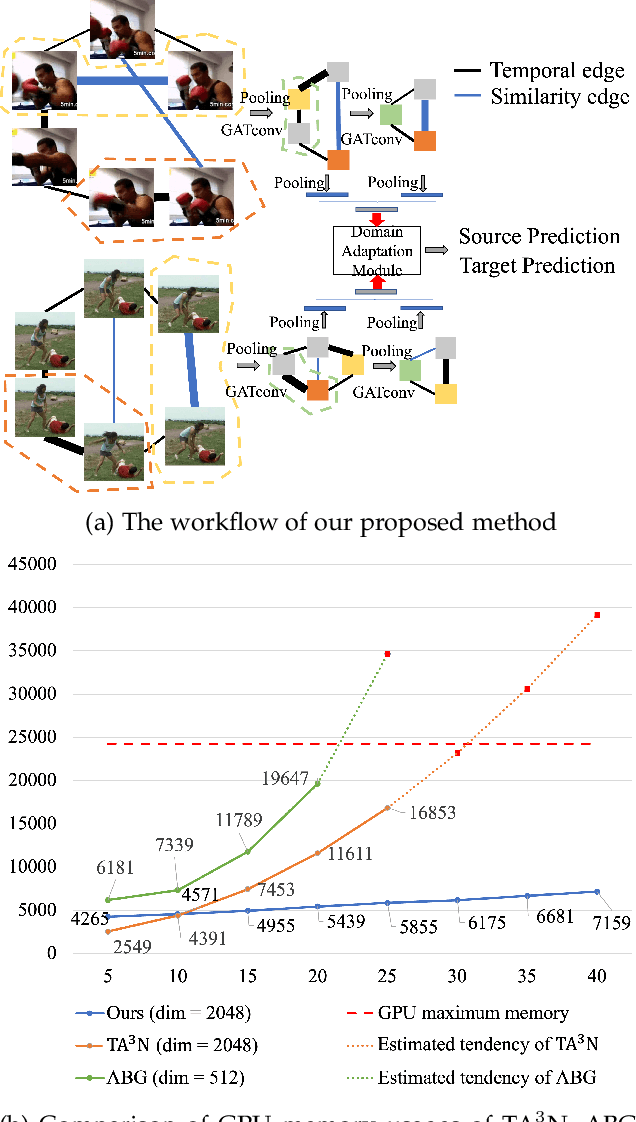

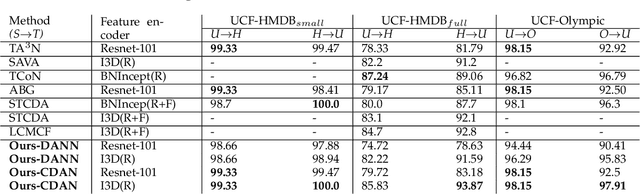

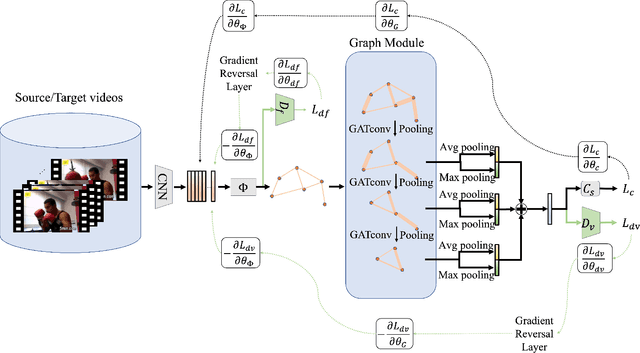

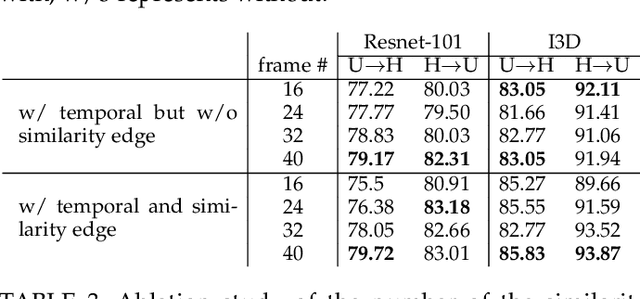

Memory Efficient Temporal & Visual Graph Model for Unsupervised Video Domain Adaptation

Aug 13, 2022

Abstract:Existing video domain adaptation (DA) methods need to store all temporal combinations of video frames or pair the source and target videos, which is memory-expensive and cannot scale to long videos. To address these limitations, we propose a memory-efficient graph-based video DA approach. First, our method models each source or target video as a graph: nodes represent video frames and edges represent the temporal or visual similarity relationship between frames. We use a graph attention network to learn the weight of individual frames and simultaneously align the source and target video into a domain-invariant graph feature space. Instead of storing a large number of sub-videos, our method constructs only one graph with a graph attention mechanism for each video, reducing the memory cost substantially. Extensive experiments show that, compared with state-of-the-art methods, we achieve superior performance while reducing the memory cost significantly.
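The per-video graph described in this abstract (frames as nodes, temporal or visual-similarity edges) can be sketched roughly as below. The abstract does not say how edges are chosen, so the rules here are assumptions: consecutive frames get a temporal edge, and non-adjacent frames get a visual edge when the cosine similarity of their features reaches a hypothetical threshold. The graph attention network itself is not shown.

```python
import math


def cosine(u, v):
    # Cosine similarity between two feature vectors (0.0 if either is all zeros).
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def build_video_graph(frame_features, sim_threshold=0.9):
    # Nodes are frame indices; each edge is (i, j, kind) with i < j.
    edges = set()
    n = len(frame_features)
    for i in range(n):
        for j in range(i + 1, n):
            if j == i + 1:
                edges.add((i, j, "temporal"))  # assumed rule: adjacent frames
            elif cosine(frame_features[i], frame_features[j]) >= sim_threshold:
                edges.add((i, j, "visual"))    # assumed rule: similar features
    return edges


# Hypothetical 2-D features for a three-frame video.
graph = build_video_graph([[1.0, 0.0], [0.0, 1.0], [1.0, 0.01]])
```

One such edge set is built per video, in contrast to storing many sub-videos or source-target pairs.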

Exploring Resolution and Degradation Clues as Self-supervised Signal for Low Quality Object Detection

Aug 05, 2022

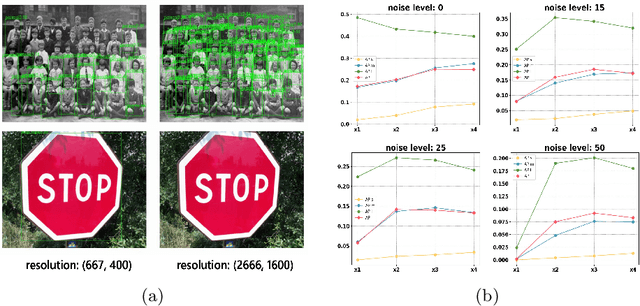

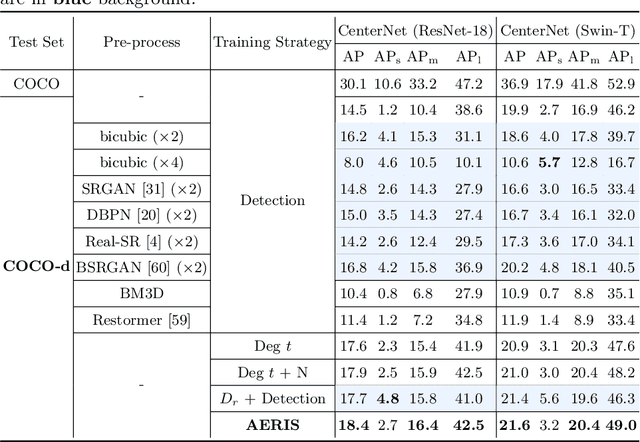

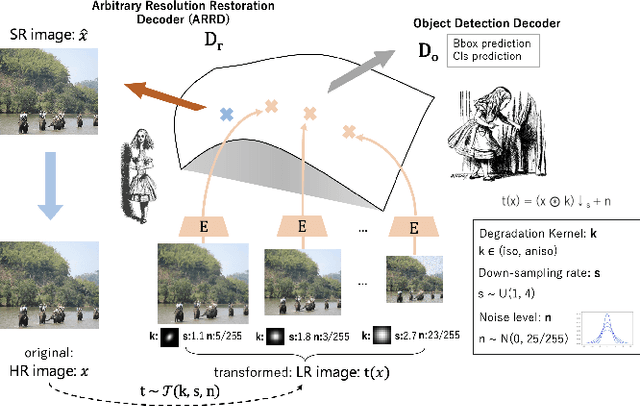

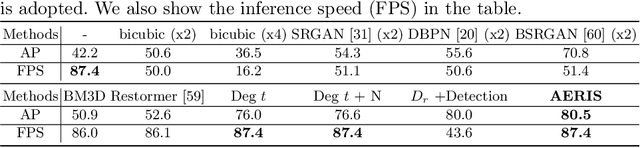

Abstract:Image restoration algorithms such as super resolution (SR) are indispensable pre-processing modules for object detection in low quality images. Most of these algorithms assume the degradation is fixed and known a priori. However, in practice, either the real degradation or the optimal up-sampling ratio is unknown or differs from the assumption, leading to deteriorating performance for both the pre-processing module and the subsequent high-level task such as object detection. Here, we propose a novel self-supervised framework to detect objects in degraded low resolution images. We utilize the downsampling degradation as a kind of transformation for self-supervised signals to explore the equivariant representation against various resolutions and other degradation conditions. The Auto Encoding Resolution in Self-supervision (AERIS) framework could further take advantage of advanced SR architectures with an arbitrary resolution restoring decoder to reconstruct the original correspondence from the degraded input image. Both the representation learning and object detection are optimized jointly in an end-to-end training fashion. The generic AERIS framework could be implemented on various mainstream object detection architectures with different backbones. Extensive experiments show that our method achieves superior performance compared with existing methods when facing various degradation situations. Code will be released at https://github.com/cuiziteng/ECCV_AERIS.

Deforming Radiance Fields with Cages

Jul 25, 2022

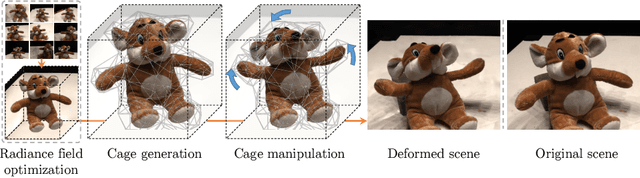

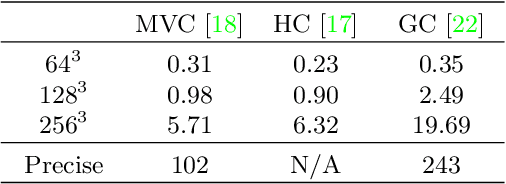

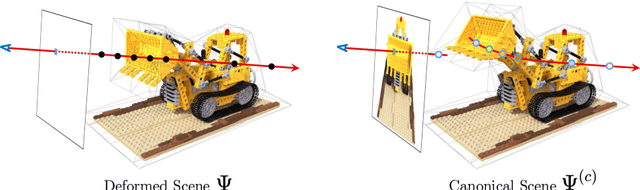

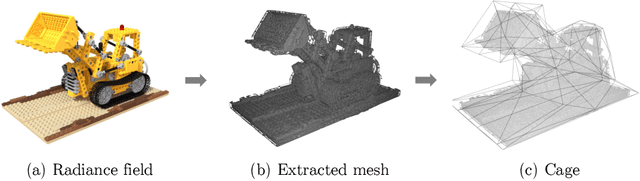

Abstract:Recent advances in radiance fields enable photorealistic rendering of static or dynamic 3D scenes, but still do not support the explicit deformation that is used for scene manipulation or animation. In this paper, we propose a method that enables a new type of deformation of the radiance field: free-form radiance field deformation. We use a triangular mesh that encloses the foreground object, called a cage, as an interface, and by manipulating the cage vertices, our approach enables the free-form deformation of the radiance field. The core of our approach is cage-based deformation, which is commonly used in mesh deformation. We propose a novel formulation to extend it to the radiance field, which maps the position and the view direction of the sampling points from the deformed space to the canonical space, thus enabling the rendering of the deformed scene. The deformation results on synthetic and real-world datasets demonstrate the effectiveness of our approach.

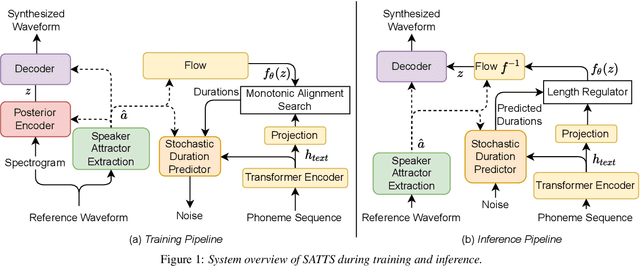

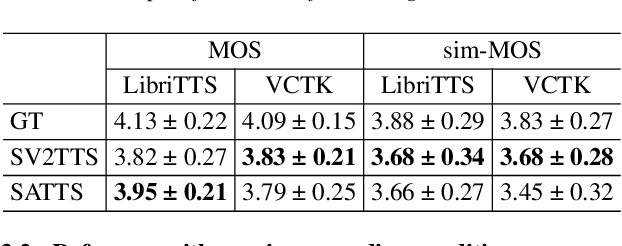

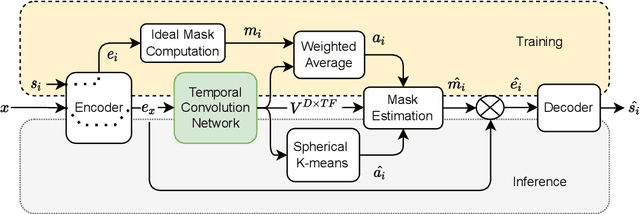

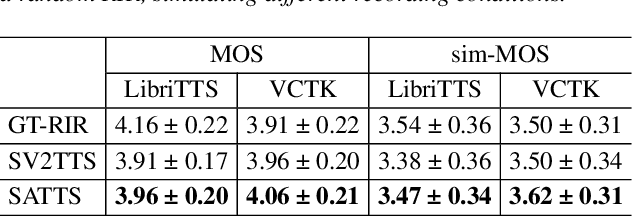

SATTS: Speaker Attractor Text to Speech, Learning to Speak by Learning to Separate

Jul 13, 2022

Abstract:The mapping of text to speech (TTS) is non-deterministic: letters may be pronounced differently based on context, and phonemes can vary depending on various physiological and stylistic factors like gender, age, accent, emotions, etc. Neural speaker embeddings, trained to identify or verify speakers, are typically used to represent and transfer such characteristics from reference speech to synthesized speech. Speech separation, on the other hand, is the challenging task of separating individual speakers from an overlapping mixed signal of various speakers. Speaker attractors are high-dimensional embedding vectors that pull the time-frequency bins of each speaker's speech towards themselves while repelling those belonging to other speakers. In this work, we explore the possibility of using these powerful speaker attractors for zero-shot speaker adaptation in multi-speaker TTS synthesis and propose speaker attractor text to speech (SATTS). Through various experiments, we show that SATTS can synthesize natural speech from text using an unseen target speaker's reference signal, which might have less than ideal recording conditions, i.e. reverberation or mixing with other speakers.

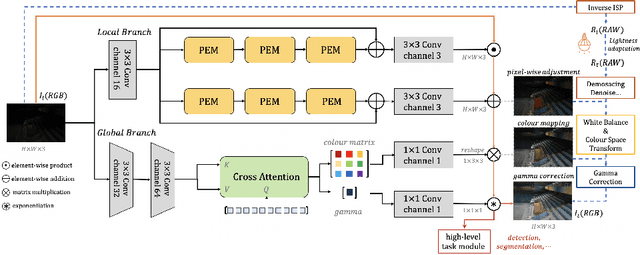

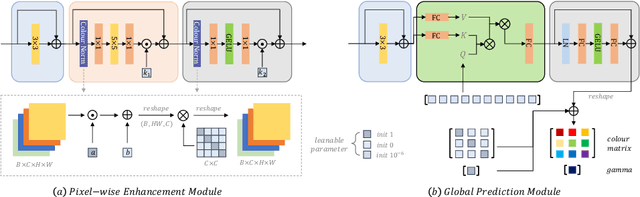

Illumination Adaptive Transformer

May 30, 2022

Abstract:Challenging illumination conditions (low light, underexposure and overexposure) in the real world not only cast an unpleasant visual appearance but also taint computer vision tasks. Existing light adaptive methods often deal with each condition individually. What is more, most of them operate on a RAW image or over-simplify the camera image signal processing (ISP) pipeline. By decomposing the light transformation pipeline into local and global ISP components, we propose a lightweight fast Illumination Adaptive Transformer (IAT) which comprises two transformer-style branches: a local estimation branch and a global ISP branch. While the local branch estimates the pixel-wise local components relevant to illumination, the global branch defines learnable queries that attend to the whole image to decode the parameters. Our IAT could also conduct both object detection and semantic segmentation under various light conditions. We have extensively evaluated IAT on multiple real-world datasets on 2 low-level tasks and 3 high-level tasks. With only 90k parameters and 0.004s processing speed (excluding high-level module), our IAT has consistently achieved superior performance over SOTA. Code is available at https://github.com/cuiziteng/IlluminationAdaptive-Transformer.
