Stefanos Zafeiriou

AvatarMe: Realistically Renderable 3D Facial Reconstruction "in-the-wild"

Mar 30, 2020

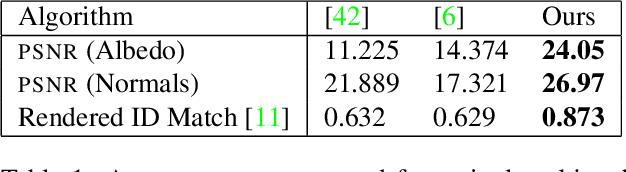

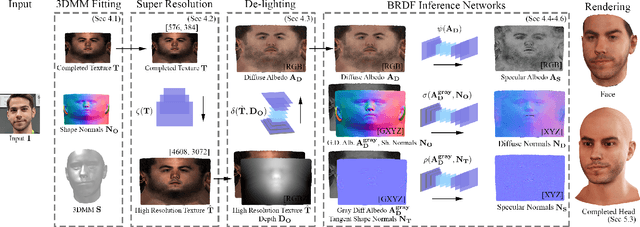

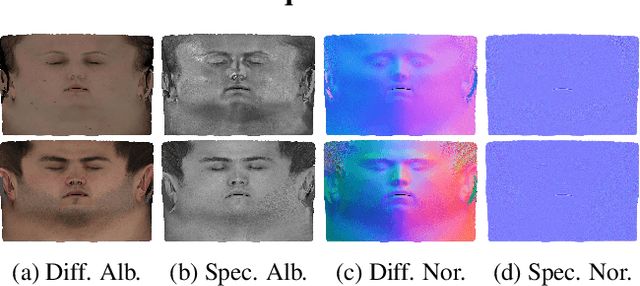

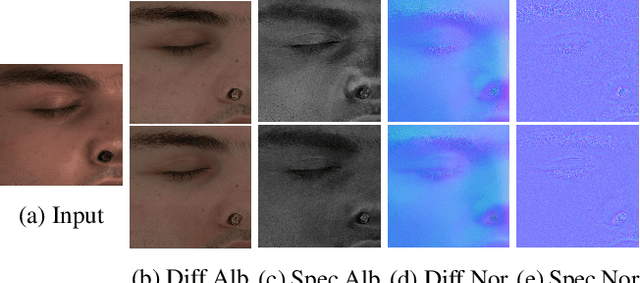

Abstract: Over the last years, with the advent of Generative Adversarial Networks (GANs), many face analysis tasks have achieved astounding performance, with applications including, but not limited to, face generation and 3D face reconstruction from a single "in-the-wild" image. Nevertheless, to the best of our knowledge, there is no method which can produce high-resolution photorealistic 3D faces from "in-the-wild" images, and this can be attributed to (a) the scarcity of available data for training, and (b) the lack of robust methodologies that can successfully be applied to very high-resolution data. In this paper, we introduce AvatarMe, the first method that is able to reconstruct photorealistic 3D faces from a single "in-the-wild" image with an increasing level of detail. To achieve this, we capture a large dataset of facial shape and reflectance, build on a state-of-the-art 3D texture and shape reconstruction method, and successively refine its results, while generating the per-pixel diffuse and specular components that are required for realistic rendering. As we demonstrate in a series of qualitative and quantitative experiments, AvatarMe outperforms existing methods by a significant margin and reconstructs authentic, 4K by 6K-resolution 3D faces from a single low-resolution image that, for the first time, bridges the uncanny valley.

$\Pi$-nets: Deep Polynomial Neural Networks

Mar 26, 2020

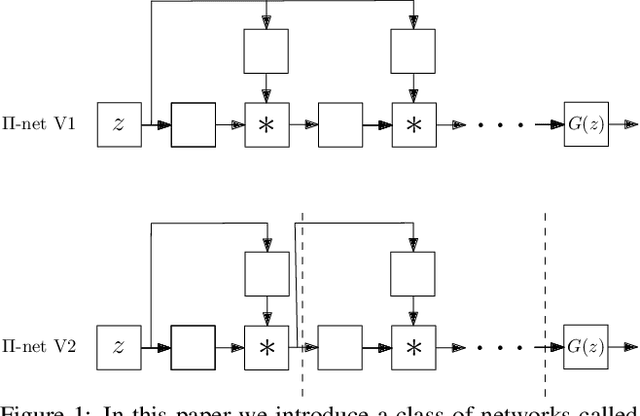

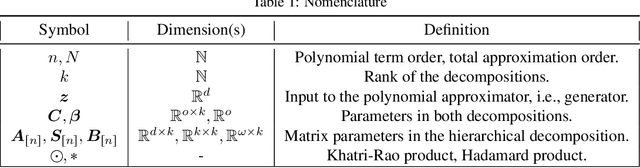

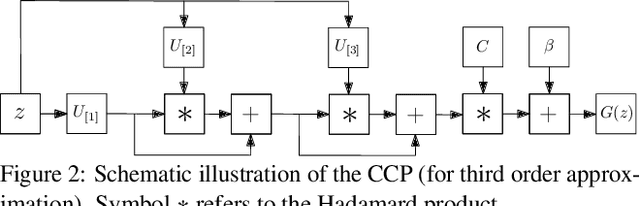

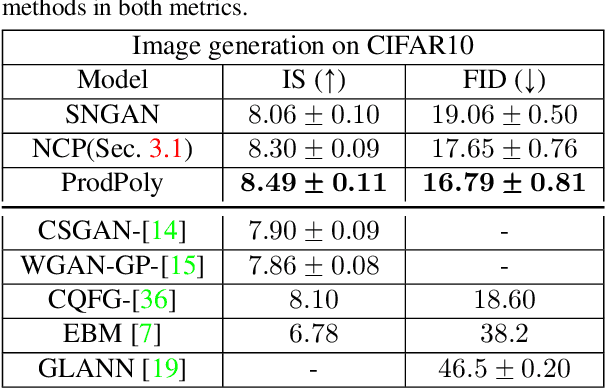

Abstract: Deep Convolutional Neural Networks (DCNNs) are currently the method of choice both for generative and for discriminative learning in computer vision and machine learning. The success of DCNNs can be attributed to the careful selection of their building blocks (e.g., residual blocks, rectifiers, sophisticated normalization schemes, to mention but a few). In this paper, we propose $\Pi$-Nets, a new class of DCNNs. $\Pi$-Nets are polynomial neural networks, i.e., the output is a high-order polynomial of the input. $\Pi$-Nets can be implemented using a special kind of skip connection, and their parameters can be represented via high-order tensors. We empirically demonstrate that $\Pi$-Nets have better representation power than standard DCNNs and that they even produce good results without the use of non-linear activation functions in a large battery of tasks and signals, i.e., images, graphs, and audio. When used in conjunction with activation functions, $\Pi$-Nets produce state-of-the-art results in challenging tasks, such as image generation. Lastly, our framework elucidates why recent generative models, such as StyleGAN, improve upon their predecessors, e.g., ProGAN.
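To make the idea of "a high-order polynomial of the input built from skip connections" concrete, here is a minimal pure-Python sketch. It assumes one possible recursion, in which each layer multiplies a fresh linear projection of the input elementwise with the running state and adds the state back as a skip term. The function names, the list-of-lists weight layout, and the exact recursion are illustrative assumptions; the abstract does not specify the parameterization.

```python
def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def pi_net_forward(z, U_list, C, beta):
    """Hypothetical polynomial forward pass with no activation functions.

    z      : input vector
    U_list : one projection matrix per polynomial order (assumed layout)
    C, beta: final linear layer and bias
    """
    # First-order term: a plain linear projection of the input.
    x = matvec(U_list[0], z)
    for U in U_list[1:]:
        proj = matvec(U, z)
        # Elementwise product raises the polynomial degree by one;
        # adding x back is the multiplicative skip connection.
        x = [p * xi + xi for p, xi in zip(proj, x)]
    out = matvec(C, x)
    return [o + b for o, b in zip(out, beta)]


# Scalar example with three layers: the output is z * (z + 1)**2,
# a degree-3 polynomial in z.
identity = [[1.0]]
result = pi_net_forward([2.0], [identity, identity, identity], identity, [0.0])
```

With `len(U_list)` layers the output is a polynomial of degree `len(U_list)` in the input, even though no non-linear activation appears anywhere in the sketch.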

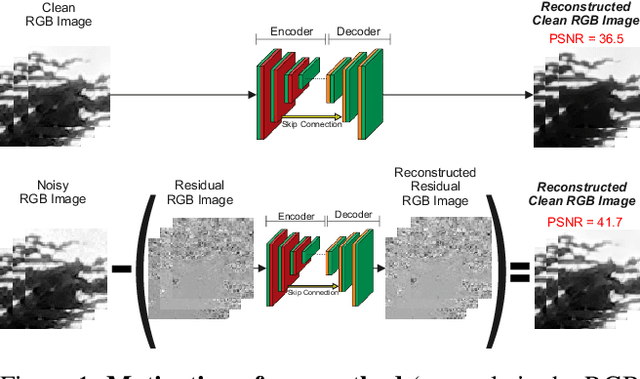

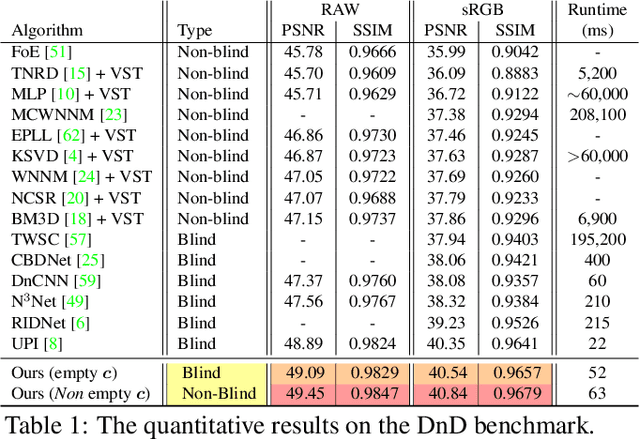

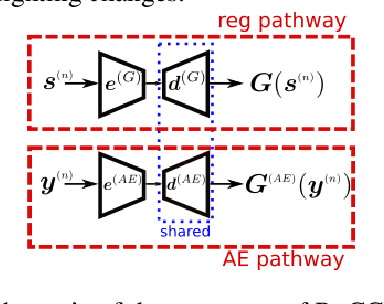

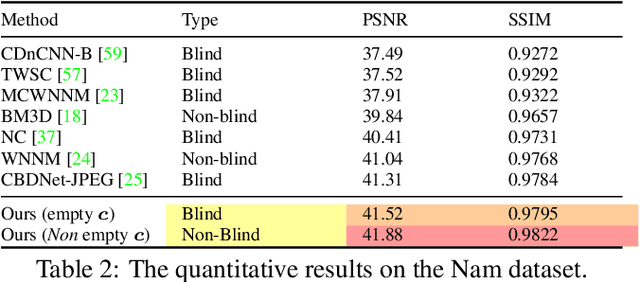

Reconstructing the Noise Manifold for Image Denoising

Mar 07, 2020

Abstract: Deep Convolutional Neural Networks (CNNs) have been successfully used in many low-level vision problems like image denoising. Although conditional image generation techniques have led to large improvements in this task, there has been little effort in providing conditional generative adversarial networks (cGAN) [42] with an explicit way of understanding the image noise for object-independent denoising that is reliable for real-world applications. The task of leveraging structures in the target space is unstable due to the complexity of patterns in natural scenes, so the presence of unnatural artifacts or over-smoothed image areas cannot be avoided. To fill the gap, in this work we introduce the idea of a cGAN which explicitly leverages structure in the image noise space. By directly learning a low-dimensional manifold of the image noise, the generator promotes the removal from the noisy image of only that information which spans this manifold. This idea brings many advantages, and it can be appended at the end of any denoiser to significantly improve its performance. Based on our experiments, our model substantially outperforms existing state-of-the-art architectures, resulting in denoised images with less oversmoothing and better detail.

Analysing Affective Behavior in the First ABAW 2020 Competition

Jan 30, 2020

Abstract: The Affective Behavior Analysis in-the-wild (ABAW) 2020 Competition is the first Competition aiming at automatic analysis of the three main behavior tasks: valence-arousal estimation, basic expression recognition and action unit detection. It is split into three Challenges, each one addressing a respective behavior task. For the Challenges, we provide a common benchmark database, Aff-Wild2, which is a large-scale in-the-wild database and the first one annotated for all three tasks. In this paper, we describe this Competition, to be held in conjunction with the IEEE Conference on Face and Gesture Recognition, May 2020, in Buenos Aires, Argentina. We present the three Challenges, with the utilized Competition corpora. We outline the evaluation metrics and present the baseline methodologies and the results obtained when these are applied to each Challenge. More information regarding the Competition, and details on how to access the utilized database, are provided on the Competition site: http://ibug.doc.ic.ac.uk/resources/fg-2020-competition-affective-behavior-analysis.

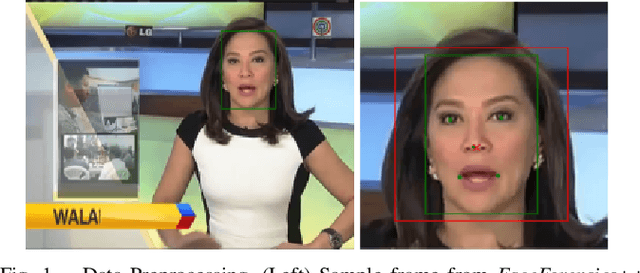

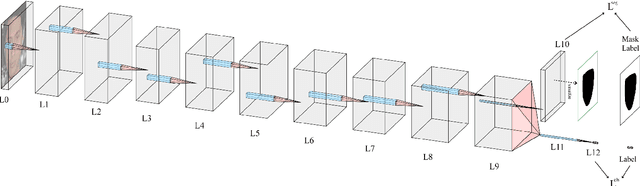

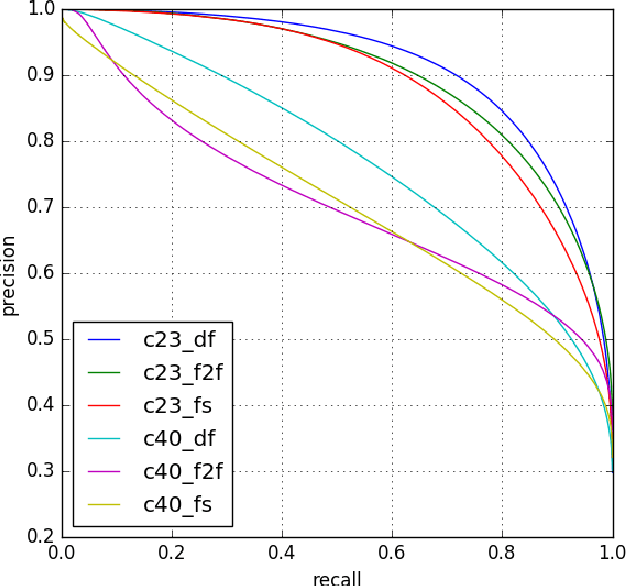

Using Fully Convolutional Neural Networks to detect manipulated images in videos

Nov 29, 2019

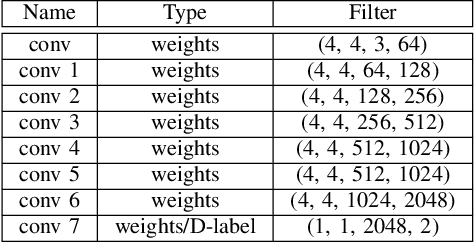

Abstract: We propose a compact architecture based on fully convolutional neural networks (FCN) to detect manipulated images of human faces. In contrast to existing FCN architectures for classification, here the final layer feature map exhibits large spatial dimensions with a non-global receptive field. The final layer features are spatially averaged using global average pooling (GAP) to provide more robust features. We leverage the structure of the FCN to derive a straightforward way for joint classification and forgery localization training and show that the network's classification performance improves significantly by the addition of a pixelwise classification loss. The trained networks achieve state-of-the-art results in binary classification on the FaceForensics++ dataset and competitive performance in other tasks using a significantly reduced number of parameters and small-resolution input images. Additionally, we examine how well the proposed architecture can detect fully generated images using faces from the recently proposed PGAN and StyleGAN methods. We show that this task is easier to learn than detecting manipulated images and that for both cases there is only a small drop in performance when the network is trained using more than one manipulation technique in the training data.
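The combination of global average pooling with a pixelwise classification loss can be illustrated with a small pure-Python sketch. It makes simplifying assumptions: the final feature map is taken to be a single channel of per-pixel logits, the image-level logit is the spatial average of that map, and the two binary cross-entropy terms are summed with a hypothetical `weight` factor. None of these details are stated in the abstract.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def bce(p, y, eps=1e-12):
    """Binary cross-entropy for one probability p and one target y."""
    return -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))


def global_average_pool(fmap):
    """Average a 2D map (list of rows) down to a single value."""
    values = [v for row in fmap for v in row]
    return sum(values) / len(values)


def joint_loss(logit_map, mask, label, weight=1.0):
    """Image-level classification loss plus pixelwise localization loss.

    logit_map: per-pixel logits from the final layer (assumed single channel)
    mask     : per-pixel ground truth, 1 where the pixel is manipulated
    label    : image-level ground truth, 1 if the image is manipulated
    weight   : hypothetical balance factor between the two terms
    """
    # Classification branch: pool the map, then score the whole image.
    cls_loss = bce(sigmoid(global_average_pool(logit_map)), label)
    # Localization branch: score every pixel against the mask.
    pixel_losses = [
        bce(sigmoid(l), m)
        for logit_row, mask_row in zip(logit_map, mask)
        for l, m in zip(logit_row, mask_row)
    ]
    pix_loss = sum(pixel_losses) / len(pixel_losses)
    return cls_loss + weight * pix_loss
```

Because both terms are computed from the same logit map, the pixelwise loss supervises the very features that the pooled classifier relies on, which is one plausible reading of why the abstract reports improved classification.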

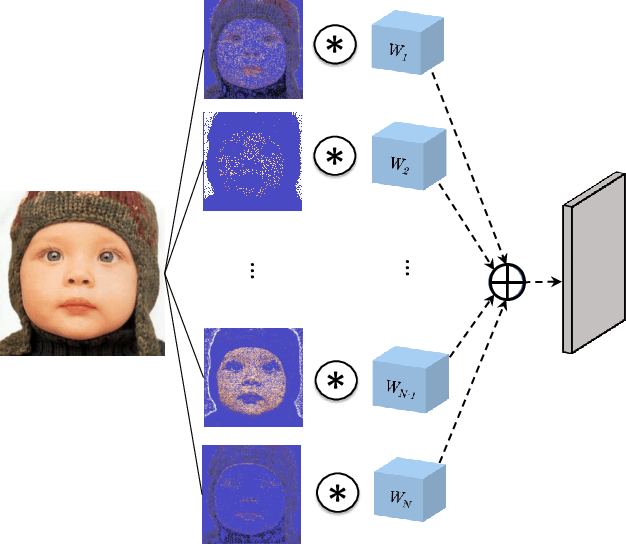

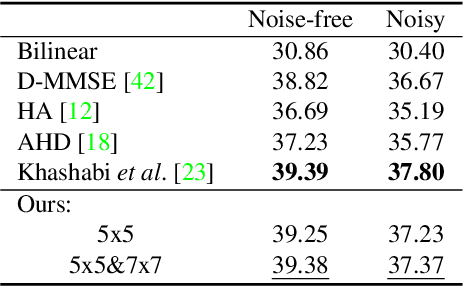

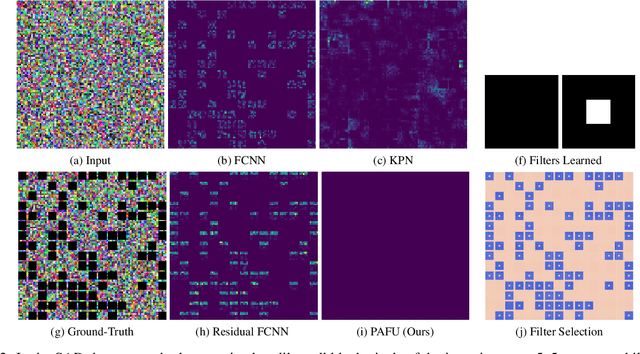

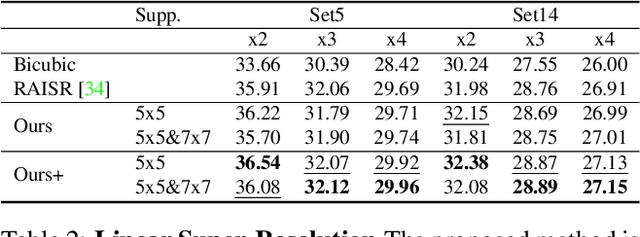

Pixel Adaptive Filtering Units

Nov 24, 2019

Abstract: State-of-the-art methods for computer vision rely heavily on the translation equivariance and spatial sharing properties of convolutional layers without explicitly taking into consideration the input content. Modern techniques employ deep, sophisticated architectures in order to circumvent this issue. In this work, we propose a Pixel Adaptive Filtering Unit (PAFU) which introduces a differentiable kernel selection mechanism paired with a discrete, learnable and decorrelated group of kernels to allow for content-based spatial adaptation. First, we demonstrate the applicability of the technique in applications where runtime is of importance. Next, we employ PAFU in deep neural networks as a replacement of standard convolutional layers to enhance the original architectures with spatially varying computations to achieve considerable performance improvements. Finally, diverse and extensive experimentation provides strong empirical evidence in favor of the proposed content-adaptive processing scheme across different image processing and high-level computer vision tasks.

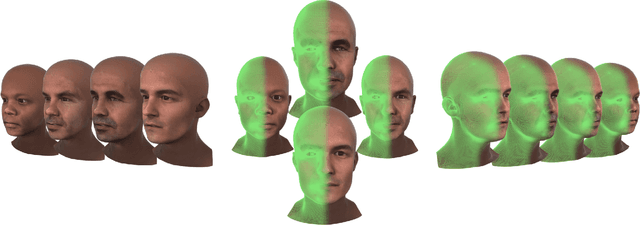

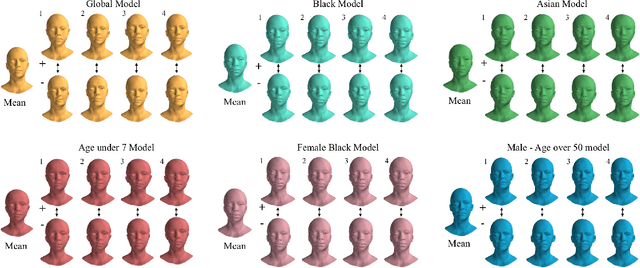

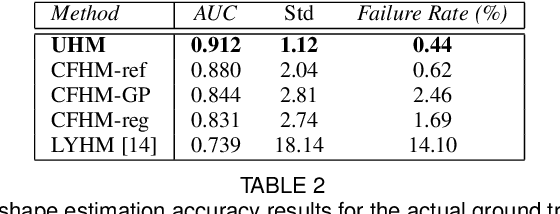

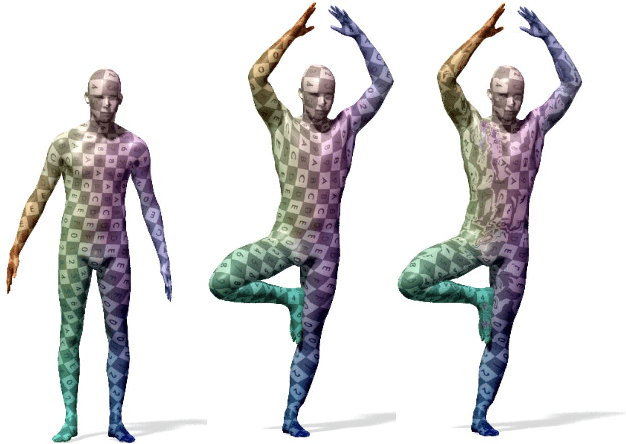

Towards a complete 3D morphable model of the human head

Nov 18, 2019

Abstract: Three-dimensional Morphable Models (3DMMs) are powerful statistical tools for representing the 3D shapes and textures of an object class. Here we present the most complete 3DMM of the human head to date, which includes face, cranium, ears, eyes, teeth and tongue. To achieve this, we propose two methods for combining existing 3DMMs of different overlapping head parts: (i) using a regressor to complete missing parts of one model using the other, and (ii) using the Gaussian Process framework to blend covariance matrices from multiple models. Thus we build a new combined face-and-head shape model that blends the variability and facial detail of an existing face model (the LSFM) with the full head modelling capability of an existing head model (the LYHM). Then we construct and fuse a highly detailed ear model to extend the variation of the ear shape. Eye and eye region models are incorporated into the head model, along with basic models of the teeth, tongue and inner mouth cavity. The new model achieves state-of-the-art performance. We use our model to reconstruct full head representations from single, unconstrained images, allowing us to parameterize craniofacial shape and texture, along with the ear shape, eye gaze and eye color.

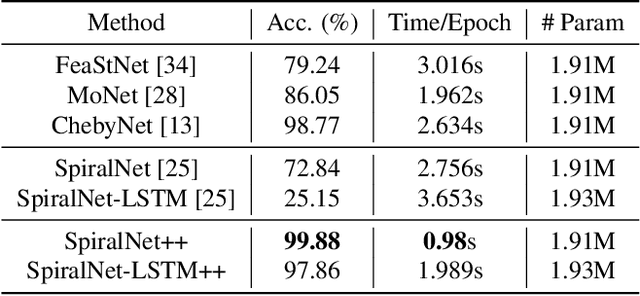

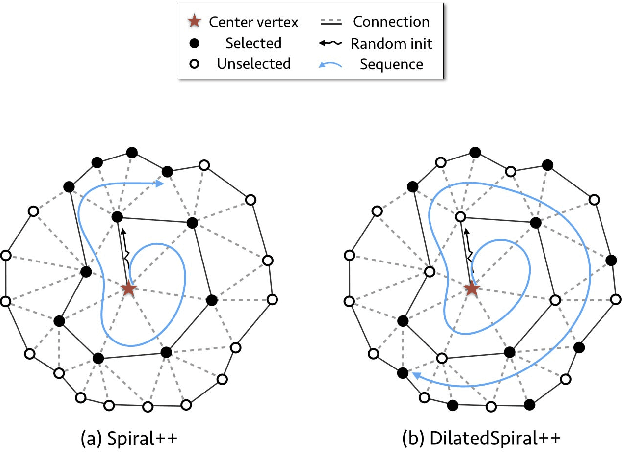

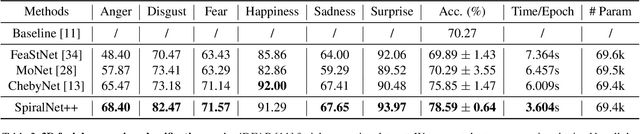

SpiralNet++: A Fast and Highly Efficient Mesh Convolution Operator

Nov 13, 2019

Abstract: Intrinsic graph convolution operators with differentiable kernel functions play a crucial role in analyzing 3D shape meshes. In this paper, we present a fast and efficient intrinsic mesh convolution operator that does not rely on an intricate kernel function design. We explicitly formulate the order of aggregating neighboring vertices, instead of learning weights between nodes, and then a fully connected layer follows to fuse local geometric structure information with vertex features. We provide extensive evidence showing that models based on this convolution operator are easier to train, and can efficiently learn invariant shape features. Specifically, we evaluate our method on three different types of tasks: dense shape correspondence, 3D facial expression classification, and 3D shape reconstruction, and show that it significantly outperforms state-of-the-art approaches while being significantly faster, without relying on shape descriptors. Our source code is available on GitHub.
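The operator described above, a fixed ordering of neighboring vertices followed by a fully connected layer, can be sketched in a few lines of pure Python. The sketch assumes that the ordered neighbor sequence for every vertex (called `spirals` here) has already been computed and has the same length for all vertices. The function name, argument layout, and toy data are illustrative assumptions and are not taken from the released source code.

```python
def spiral_conv(features, spirals, weight, bias):
    """Apply one ordered-neighborhood convolution to every vertex.

    features: per-vertex feature vectors, features[v] is a list of floats
    spirals : spirals[v] is the fixed, ordered list of vertex indices
              aggregated for vertex v (assumed precomputed, equal length)
    weight  : fully connected layer, one row per output channel, with
              len(row) == len(spirals[v]) * len(features[v])
    bias    : one value per output channel
    """
    out = []
    for spiral in spirals:
        # Concatenate neighbor features in the predefined order, so the
        # layer can assign a distinct weight to each position.
        concat = [f for idx in spiral for f in features[idx]]
        out.append([
            sum(w * c for w, c in zip(row, concat)) + b
            for row, b in zip(weight, bias)
        ])
    return out


# Toy mesh with three vertices, one feature each, and sequences of length 3.
features = [[1.0], [2.0], [3.0]]
spirals = [[0, 1, 2], [1, 2, 0], [2, 0, 1]]
weight = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]
bias = [0.0, 0.5]
output = spiral_conv(features, spirals, weight, bias)
```

Since the ordering is fixed in advance, the only learned parameters are those of the fully connected layer, which is consistent with the abstract's claim that no weights between nodes need to be learned.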

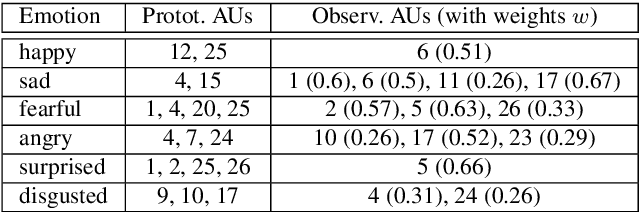

Face Behavior à la carte: Expressions, Affect and Action Units in a Single Network

Oct 15, 2019

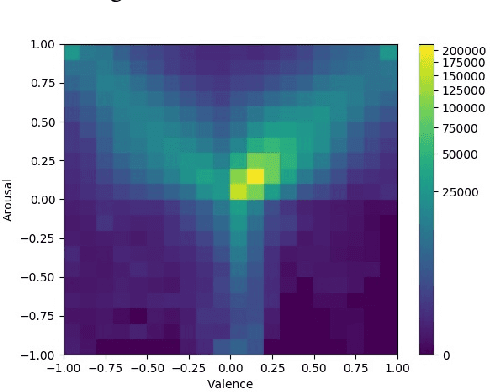

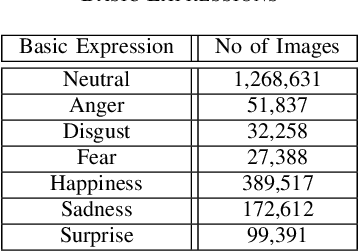

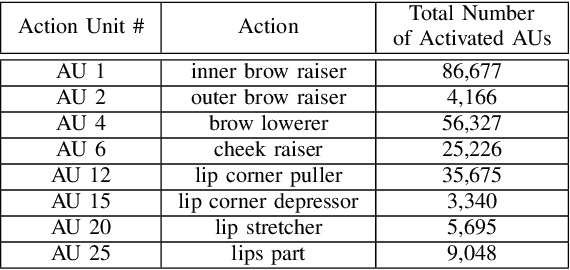

Abstract: Automatic facial behavior analysis has a long history of studies at the intersection of computer vision, physiology and psychology. However, it is only recently, with the collection of large-scale datasets and powerful machine learning methods such as deep neural networks, that automatic facial behavior analysis started to thrive. Three of its iconic tasks are automatic recognition of basic expressions (e.g., happy, sad, surprised), estimation of continuous emotions (e.g., valence and arousal), and detection of facial action units (activations of, e.g., upper/inner eyebrows, nose wrinkles). Up until now these tasks have mostly been studied independently, with a separate dataset collected for each task. We present the first and the largest study of all facial behaviour tasks learned jointly in a single multi-task, multi-domain and multi-label network, which we call FaceBehaviorNet. For this we utilize all publicly available datasets in the community (around 5M images) that study facial behaviour tasks in-the-wild. We demonstrate that jointly training an end-to-end network for all tasks has consistently better performance than training each of the single-task networks. Furthermore, we propose two simple strategies for coupling the tasks during training, co-annotation and distribution matching, and show the advantages of this approach. Finally, we show that FaceBehaviorNet has learned features that encapsulate all aspects of facial behaviour, and can be successfully applied to perform tasks (compound emotion recognition) beyond the ones it has been trained on, in a zero- and few-shot learning setting.

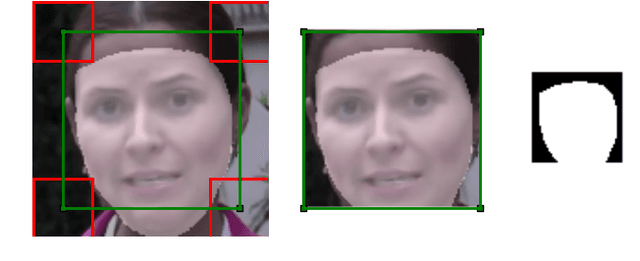

Complement Face Forensic Detection and Localization with Facial Landmarks

Oct 12, 2019

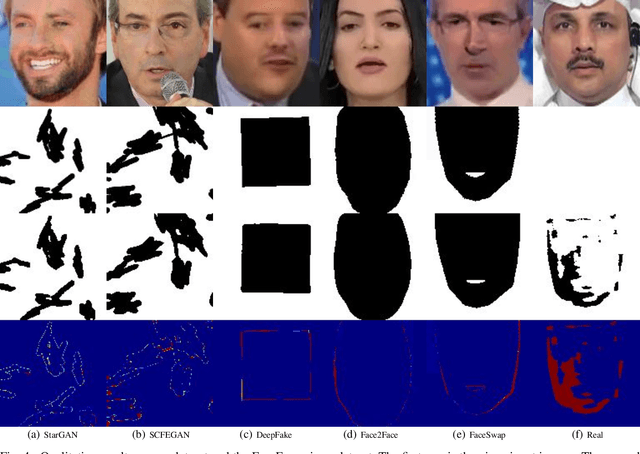

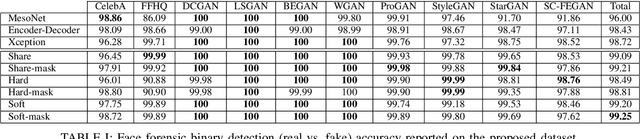

Abstract: Recently, Generative Adversarial Networks (GANs) and image manipulation methods have become more powerful and can produce highly realistic face images beyond human recognition, which has raised significant concerns regarding the authenticity of digital media. Although there have been some prior works that tackle the face forensic classification problem, it is not trivial to estimate edited locations from classification predictions. In this paper, we propose, to the best of our knowledge, the first rigorous face forensic localization dataset, which consists of genuine, generated, and manipulated face images. In particular, the pristine part contains face images from the CelebA and FFHQ datasets. The fake images are generated by various GAN methods, namely DCGANs, LSGANs, BEGANs, WGAN-GP, ProGANs, and StyleGANs. Lastly, the edited subset is generated by StarGAN and SEFCGAN based on free-form masks. In total, the dataset contains about 1.3 million facial images labelled with corresponding binary masks. Based on the proposed dataset, we demonstrate that explicitly adding facial landmark information in addition to input images improves the performance. In addition, our proposed method consists of two branches and can coherently predict face forensic detection and localization, outperforming the previous state-of-the-art techniques on the newly proposed dataset as well as on the FaceForensics++ dataset, especially on low-quality videos.
