Somesh Kumar

Real-time and Autonomous Detection of Helipad for Landing Quad-Rotors by Visual Servoing

Aug 05, 2020

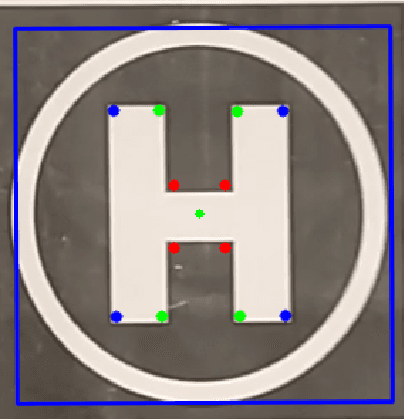

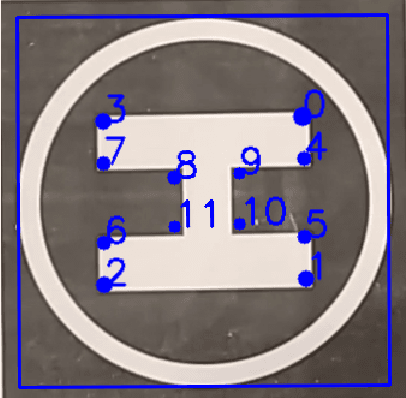

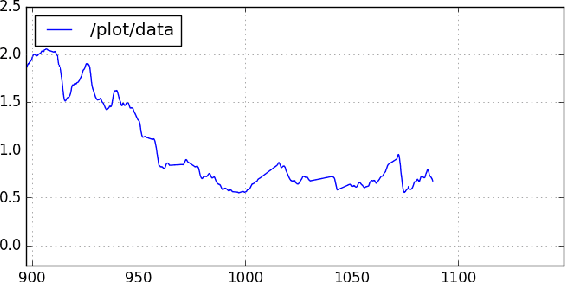

Abstract: In this paper, we present a method for autonomously detecting helipads in real time. The method does not rely on machine learning, so it can run in real time on the computational resources of an average quad-rotor. After the initial detection, we use image tracking to further reduce the computational load. Once tracking starts, our modified IBVS (Image-Based Visual Servoing) method begins publishing velocity commands to guide the quad-rotor onto the helipad. The modified IBVS scheme is designed for the four degrees of freedom of a quad-rotor and can land the quad-rotor in a specific orientation.
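The abstract does not spell out the modified IBVS scheme, so the following is only a minimal sketch of the classical textbook IBVS law, v = -λ L⁺ (s - s*), reduced to the four controllable degrees of freedom of a quad-rotor (vx, vy, vz, yaw rate). It is not the authors' method. It assumes point features in normalized image coordinates, a single known depth for all points, and a camera frame aligned with the body frame; the function names, the gain, and the depth value are hypothetical.

```python
import numpy as np


def interaction_matrix_4dof(points, depth):
    """Stack the point-feature interaction rows for (vx, vy, vz, yaw rate).

    Assumption: these are the classical 6-DOF point interaction rows with
    the roll and pitch rate columns dropped, and one shared depth value.
    """
    rows = []
    for x, y in points:
        rows.append([-1.0 / depth, 0.0, x / depth, y])
        rows.append([0.0, -1.0 / depth, y / depth, -x])
    return np.array(rows)


def ibvs_velocity(current, desired, depth, gain=0.5):
    """Return [vx, vy, vz, yaw_rate] from the classical IBVS control law."""
    current = np.asarray(current, dtype=float)
    desired = np.asarray(desired, dtype=float)
    # Feature error stacked as [x1, y1, x2, y2, ...] to match the row order.
    error = (current - desired).reshape(-1)
    L = interaction_matrix_4dof(current, depth)
    # Least-squares velocity that drives the error toward zero exponentially.
    return -gain * np.linalg.pinv(L) @ error


# Hypothetical example: four helipad corners seen shifted along image x.
desired = [(-0.1, -0.1), (0.1, -0.1), (0.1, 0.1), (-0.1, 0.1)]
current = [(x + 0.1, y) for x, y in desired]
velocity = ibvs_velocity(current, desired, depth=2.0, gain=0.5)
print(velocity)
```

In this example the features are offset purely along the image x axis, so the commanded velocity is a pure translation along the camera x axis; a real controller would also need a depth estimate and a mapping from the camera frame to the vehicle frame.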

IROS 2019 Lifelong Robotic Vision Challenge -- Lifelong Object Recognition Report

Apr 26, 2020

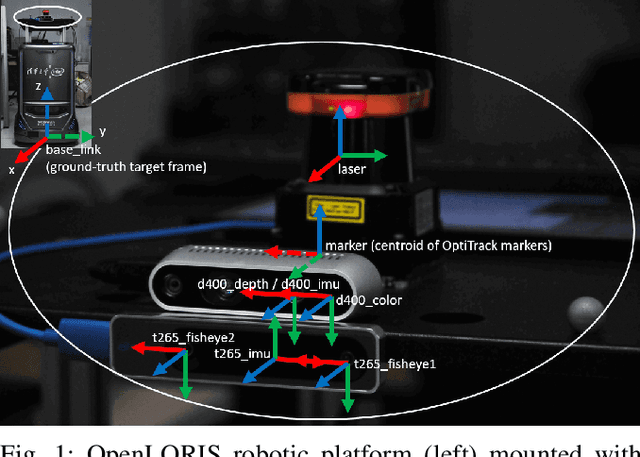

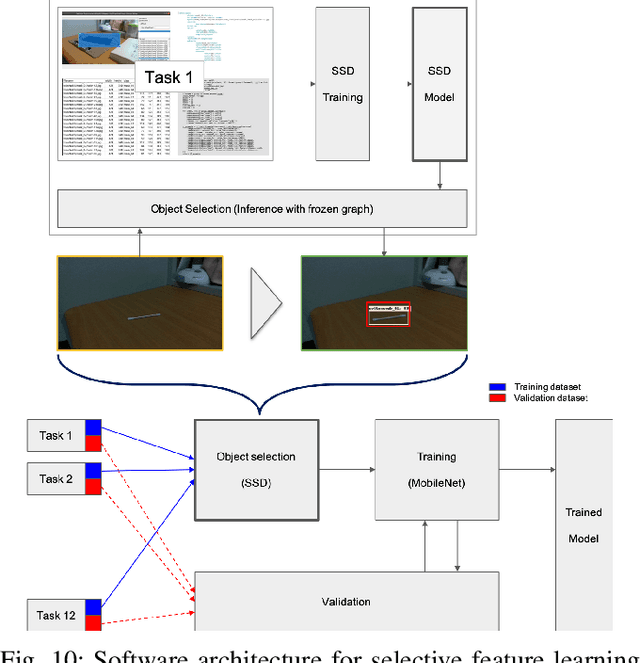

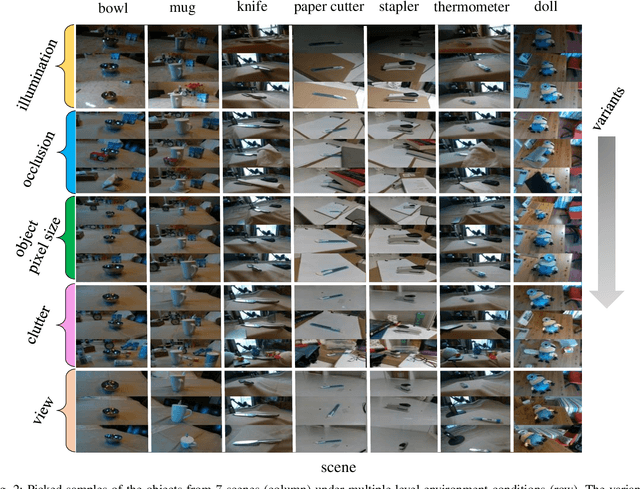

Abstract: This report summarizes the IROS 2019 Lifelong Robotic Vision Competition (Lifelong Object Recognition Challenge), with methods and results from the top 8 finalists (out of over 150 teams). The competition dataset, (L)ifel(O)ng (R)obotic V(IS)ion (OpenLORIS) - Object Recognition (OpenLORIS-object), is designed to drive lifelong/continual learning research and applications in the robotic vision domain, with everyday objects in home, office, campus, and mall scenarios. The dataset explicitly quantifies variations in illumination, object occlusion, object size, camera-object distance/angles, and clutter. The competition rules are designed to quantify the learning capability of a robotic vision system when it encounters objects in dynamic environments. Individual reports, dataset information, rules, and released source code can be found at the project homepage: "https://lifelong-robotic-vision.github.io/competition/".
