Simon Watson

Object-Reconstruction-Aware Whole-body Control of Mobile Manipulators

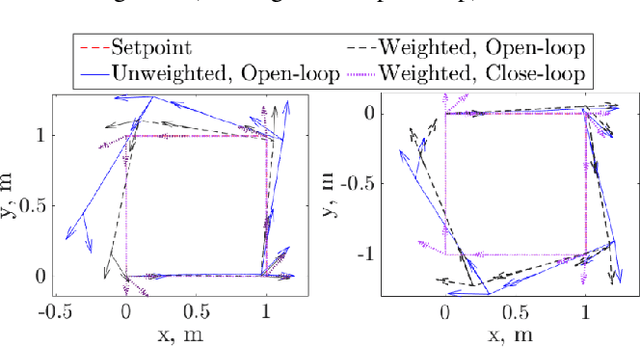

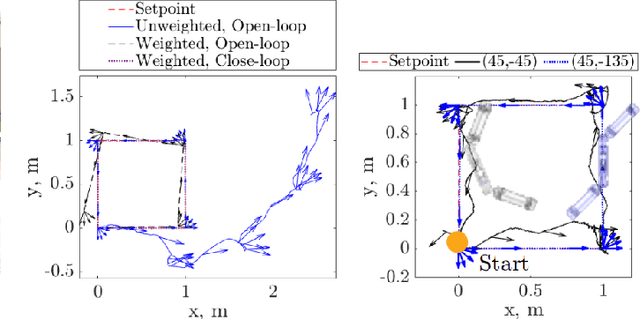

Sep 04, 2025

Abstract:Object reconstruction and inspection tasks play a crucial role in various robotics applications. Identifying paths that reveal the most unknown areas of the object becomes paramount in this context, as it directly affects efficiency, and this problem is known as the view path planning problem. Current methods often use sampling-based path planning techniques, evaluating potential views along the path to enhance reconstruction performance. However, these methods are computationally expensive as they require evaluating several candidate views on the path. To this end, we propose a computationally efficient solution that relies on calculating a focus point in the most informative (unknown) region and having the robot maintain this point in the camera field of view along the path. We incorporated this strategy into the whole-body control of a mobile manipulator employing a visibility constraint without the need for an additional path planner. We conducted comprehensive and realistic simulations using a large dataset of 114 diverse objects of varying sizes from 57 categories to compare our method with a sampling-based planning strategy using Bayesian data analysis. Furthermore, we performed real-world experiments with an 8-DoF mobile manipulator to demonstrate the proposed method's performance in practice. Our results suggest that there is no significant difference in object coverage and entropy. In contrast, our method is approximately nine times faster than the baseline sampling-based method in terms of the average time the robot spends between views.
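The idea of keeping a focus point inside the camera field of view can be illustrated with a simple geometric test. The sketch below is not the paper's visibility constraint: it assumes a conical field of view around the optical axis, and the function name `in_field_of_view` and its parameters are hypothetical.

```python
import math


def in_field_of_view(cam_pos, cam_axis, focus_point, half_fov_deg):
    """Return True if focus_point lies inside a cone around the camera axis.

    Assumption: the field of view is modelled as a cone with half-angle
    half_fov_deg; a real camera frustum is rectangular.
    """
    # Vector from the camera position to the focus point.
    d = [f - c for f, c in zip(focus_point, cam_pos)]
    d_norm = math.sqrt(sum(x * x for x in d))
    a_norm = math.sqrt(sum(x * x for x in cam_axis))
    if d_norm == 0.0 or a_norm == 0.0:
        raise ValueError("focus point coincides with camera or axis is zero")

    # Angle between the optical axis and the direction to the focus point.
    cos_angle = sum(x * y for x, y in zip(d, cam_axis)) / (d_norm * a_norm)
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding
    angle_deg = math.degrees(math.acos(cos_angle))
    return angle_deg <= half_fov_deg


visible = in_field_of_view((0, 0, 0), (0, 0, 1), (0.1, 0.0, 1.0), 30.0)
hidden = in_field_of_view((0, 0, 0), (0, 0, 1), (1.0, 0.0, 0.1), 30.0)
```

A whole-body controller would typically enforce such a condition as a constraint on the robot's motion rather than check it after the fact; the test here only shows the geometry involved.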

Resonant Inductive Coupling Power Transfer for Mid-Sized Inspection Robot

Nov 26, 2024

Abstract:This paper presents a wireless power transfer (WPT) system for a mid-sized inspection mobile robot. The objective is to transmit 100 W of power over a distance of 1 meter, achieved through lightweight Litz wire coils weighing 320 g held together with a coil structure of 3.54 kg. The Wireless Power Transfer System (WPTS) is mounted onto an unmanned ground vehicle (UGV). The study investigates coil design, accounting for misalignment and tolerance issues in resonance-coupled coils. In experimental validation, the system effectively transmits 109.7 W of power over a 1-meter distance, with obstacles present. This yields a system efficiency of 47.14%, a value that is remarkably close to the maximum power transfer point (50%) when the WPTS utilises the full voltage allowance of the capacitor. The paper shows a WPTS charging time of 5 minutes for 12 V, 0.8 Ah lead acid batteries.
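The figures quoted in this abstract can be cross-checked with a short calculation. The sketch below assumes that efficiency is delivered power divided by input power, and that all delivered power charges the battery at a constant rate with no losses. These are simplifying assumptions rather than the paper's charging model, and the function names are hypothetical.

```python
def input_power_w(output_w, efficiency):
    """Input power implied by a delivered power and an efficiency (0 to 1)."""
    return output_w / efficiency


def ideal_charge_minutes(voltage_v, capacity_ah, power_w):
    """Time to deliver the battery's nominal energy at constant power.

    Assumption: lossless, constant-power charging of the full nominal
    capacity, which real lead acid charging does not follow.
    """
    energy_wh = voltage_v * capacity_ah  # nominal stored energy in Wh
    return energy_wh / power_w * 60.0


# Values taken from the abstract: 109.7 W delivered at 47.14% efficiency,
# charging a 12 V, 0.8 Ah battery.
p_in = input_power_w(109.7, 0.4714)
t_min = ideal_charge_minutes(12.0, 0.8, 109.7)
print(f"implied input power: {p_in:.1f} W")
print(f"ideal charge time: {t_min:.2f} min")
```

Under these assumptions the implied input power is roughly 233 W, and the ideal charge time of a little over 5 minutes is consistent with the 5 minutes reported.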

Fin ray-inspired, Origami, Small Scale Actuator for Fin Manipulation in Aquatic Bioinspired Robots

Jul 23, 2024

Abstract:Fish locomotion is enabled by fin rays, actively deformable bony rods that manipulate the fin to facilitate complex interaction with surrounding water and enable propulsion. Replicating the performance and kinematics of the biological fin ray from an engineering perspective is a challenging task and has not been realised thus far. This work introduces a prototype of a fin ray-inspired origami electromagnetic tendon-driven (FOLD) actuator, designed to emulate the functional dynamics of fish fin rays. Constructed in minutes using origami/kirigami and paper joinery techniques from flat laser-cut polypropylene film, this actuator is low-cost at £0.80 ($1), simple to assemble, and durable for over one million cycles. We leverage its small size to embed eight into two fin membranes of a 135 mm long cuttlefish robot capable of four degrees of freedom swimming. We present an extensive kinematic and swimming parametric study with 1015 data points from 7.6 hours of video, which has been used to determine optimal kinematic parameters and validate theoretical constants observed in aquatic animals. Notably, the study explores the nuanced interplay between undulation patterns, power distribution, and locomotion efficiency, underscoring the potential of the actuator as a model system for the investigation of energy-efficient propulsion and control of bioinspired systems. The versatility of the actuator is further demonstrated by its integration into a fish and a jellyfish.

Integrating knowledge-guided symbolic regression and model-based design of experiments to automate process flow diagram development

May 07, 2024

Abstract:New products must be formulated rapidly to succeed in the global formulated product market; however, key product indicators (KPIs) can be complex, poorly understood functions of the chemical composition and processing history. Consequently, scale-up must currently undergo expensive trial-and-error campaigns. To accelerate process flow diagram (PFD) optimisation and knowledge discovery, this work proposed a novel digital framework to automatically quantify process mechanisms by integrating symbolic regression (SR) within model-based design of experiments (MBDoE). In each iteration, SR proposed a Pareto front of interpretable mechanistic expressions, and then MBDoE designed a new experiment to discriminate between them while balancing PFD optimisation. To investigate the framework's performance, a new process model capable of simulating general formulated product synthesis was constructed to generate in-silico data for different case studies. The framework could effectively discover ground-truth process mechanisms within a few iterations, indicating its great potential for use within the general chemical industry for digital manufacturing and product innovation.

Fully Distributed Cooperative Multi-agent Underwater Obstacle Avoidance Under Dog Walking Paradigm

Mar 16, 2024

Abstract:Navigation in cluttered underwater environments is challenging, especially when there are constraints on communication and self-localisation. Part of the fully distributed underwater navigation problem has been resolved by introducing multi-agent robot teams; however, when the environment becomes cluttered, the problem remains unresolved. In this paper, we first studied the connection between the everyday activity of dog walking and the cooperative underwater obstacle avoidance problem. Inspired by this analogy, we propose a novel dog walking paradigm and implement it in a multi-agent underwater system. Simulations were conducted across various scenarios, with performance benchmarked against traditional methods utilising Image-Based Visual Servoing in a multi-agent setup. Results indicate that our dog walking-inspired paradigm significantly enhances cooperative behavior among agents and outperforms the existing approach in navigating through obstacles.

Virtual Elastic Tether: a New Approach for Multi-agent Navigation in Confined Aquatic Environments

Mar 15, 2024

Abstract:Underwater navigation is a challenging area in the field of mobile robotics due to inherent constraints in self-localisation and communication in underwater environments. Some of these challenges can be mitigated by using collaborative multi-agent teams. However, when applied underwater, the robustness of traditional multi-agent collaborative control approaches is highly limited due to the unavailability of reliable measurements. In this paper, the concept of a Virtual Elastic Tether (VET) is introduced in the context of incomplete state measurements, which represents an innovative approach to underwater navigation in confined spaces. The concept of VET is formulated and validated using the Cooperative Aquatic Vehicle Exploration System (CAVES), which is a sim-to-real multi-agent aquatic robotic platform. Within this framework, a vision-based Autonomous Underwater Vehicle-Autonomous Surface Vehicle leader-follower formulation is developed. Experiments were conducted both in simulation and on a physical platform, benchmarked against a traditional Image-Based Visual Servoing approach. Results indicate that the formation of the baseline approach fails under discrete disturbances when induced distances between the robots exceed 0.6 m in simulation and 0.3 m in the real world. In contrast, the VET-enhanced system recovers to pre-perturbation distances within 5 seconds. Furthermore, results illustrate the successful navigation of VET-enhanced CAVES in a confined water pond where the baseline approach fails to perform adequately.

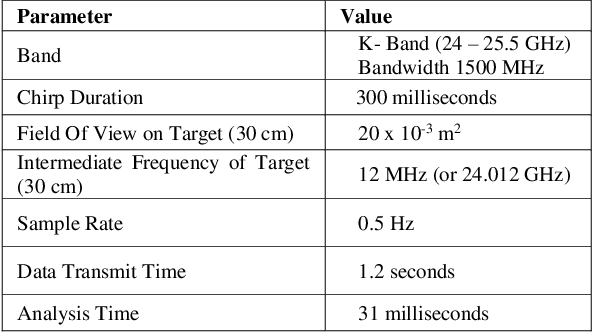

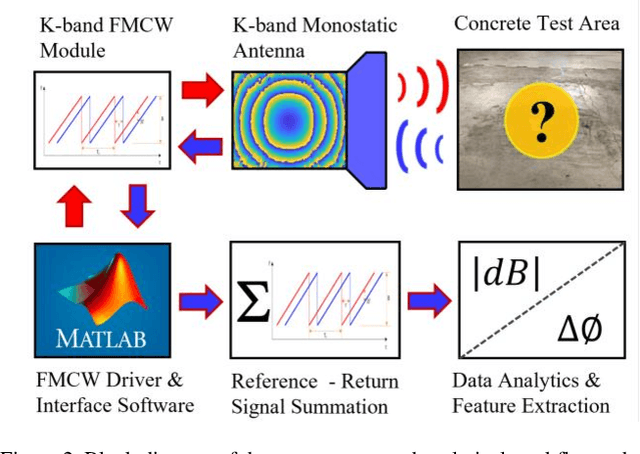

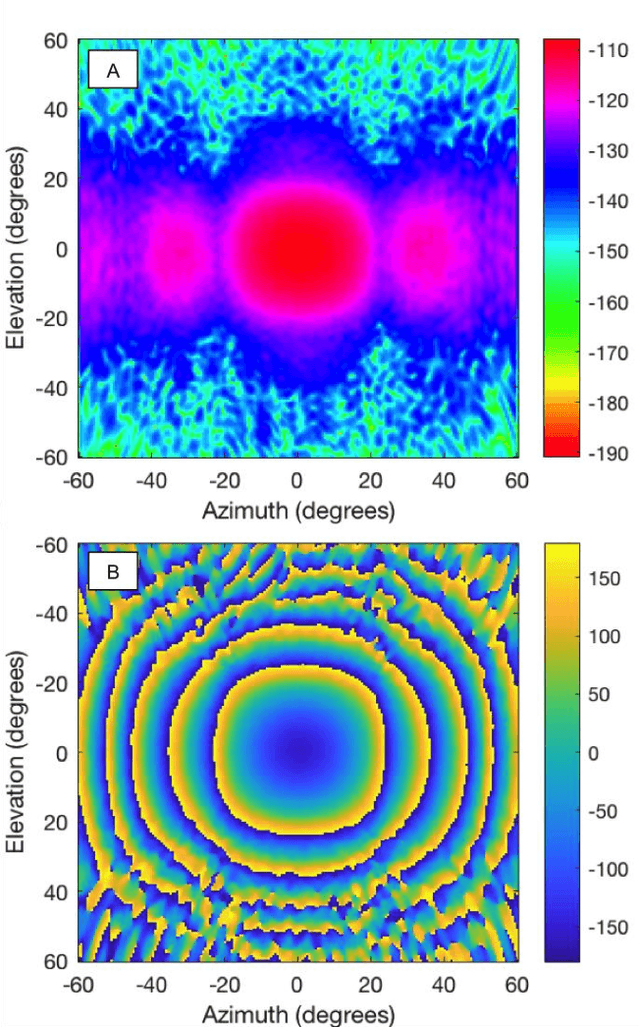

Millimeter-Wave Sensing for Avoidance of High-Risk Ground Conditions for Mobile Robots

Mar 30, 2022

Abstract:Mobile robot autonomy has made significant advances in recent years, with navigation algorithms well developed and used commercially in certain well-defined environments, such as warehouses. The common link in usage scenarios is that the environments in which the robots are utilized have a high degree of certainty. Operating environments are often designed to be robot friendly, for example augmented reality markers are strategically placed and the ground is typically smooth, level, and clear of debris. For robots to be useful in a wider range of environments, especially environments that are not sanitized for their use, robots must be able to handle uncertainty. This requires a robot to incorporate new sensors and sources of information, and to be able to use this information to make decisions regarding navigation and the overall mission. When using autonomous mobile robots in unstructured and poorly defined environments, such as a natural disaster site or in a rural environment, ground condition is of critical importance and is a common cause of failure. Examples include loss of traction due to high levels of ground water, hidden cavities, or material boundary failures. To evaluate a non-contact sensing method to mitigate these risks, Frequency Modulated Continuous Wave (FMCW) radar is integrated with an Unmanned Ground Vehicle (UGV), representing a novel application of FMCW to detect new measurands for Robotic Autonomous Systems (RAS) navigation, informing on terrain integrity and adding to the state-of-the-art in sensing for optimized autonomous path planning. In this paper, the FMCW is first evaluated in a desktop setting to determine its performance in anticipated ground conditions. The FMCW is then fixed to a UGV and the sensor system is tested and validated in a representative environment containing regions with significant levels of ground water saturation.

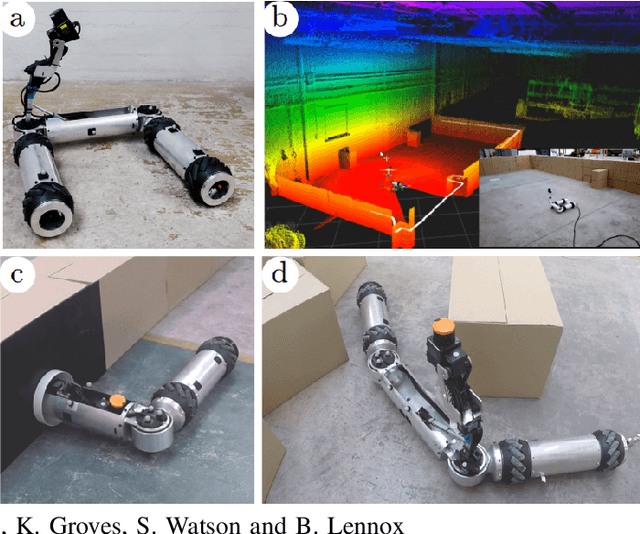

MIRRAX: A Reconfigurable Robot for Limited Access Environments

Mar 01, 2022

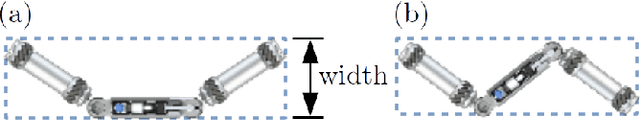

Abstract:The development of mobile robot platforms for inspection has gained traction in recent years with the rapid advancement in hardware and software. However, conventional mobile robots are unable to address the challenge of operating in extreme environments where the robot is required to traverse narrow gaps in highly cluttered areas with restricted access. This paper presents MIRRAX, a robot that has been designed to meet these challenges with the capability of re-configuring itself to both access restricted environments through narrow ports and navigate through tightly spaced obstacles. Controllers for the robot are detailed, along with an analysis of the controllability of the robot given the use of Mecanum wheels in a variable configuration. Characterisation of the robot's performance identified suitable configurations for operating in narrow environments. The minimum lateral footprint width achievable for a stable configuration ($<2^\circ$ roll) was 0.19 m. Experimental validation of the robot's controllability shows good agreement with the theoretical analysis. A further series of experiments shows the feasibility of the robot in addressing the challenges above: the capability to reconfigure itself for restricted entry through ports as small as 150 mm in diameter, and navigating through cluttered environments. The paper also presents results from a deployment in a Magnox facility at the Sellafield nuclear site in the UK -- the first robot to ever do so, for remote inspection and mapping.

A Review: Challenges and Opportunities for Artificial Intelligence and Robotics in the Offshore Wind Sector

Dec 13, 2021

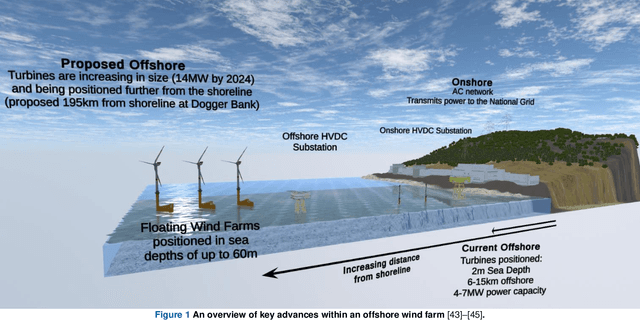

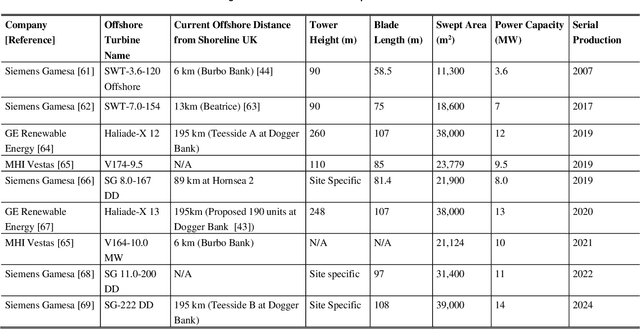

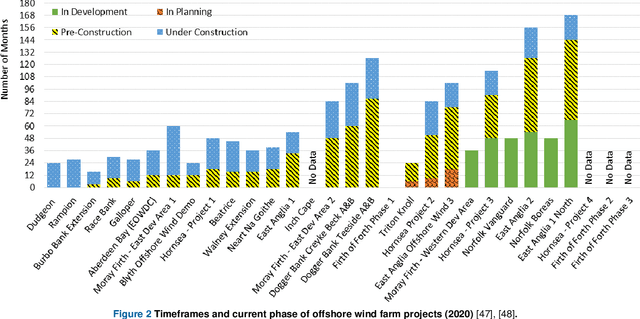

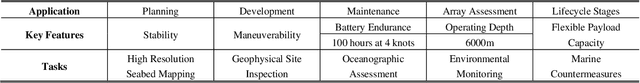

Abstract:A global trend in increasing wind turbine size and distances from shore is emerging within the rapidly growing offshore wind farm market. In the UK, the offshore wind sector produced its highest amount of electricity in 2019, a 19.6% increase on the year before. Currently, the UK is set to increase production further, targeting a 74.7% increase of installed turbine capacity as reflected in recent Crown Estate leasing rounds. With such tremendous growth, the sector is now looking to Robotics and Artificial Intelligence (RAI) in order to tackle lifecycle service barriers so as to support sustainable and profitable offshore wind energy production. Today, RAI applications are predominantly being used to support short term objectives in operation and maintenance. However, moving forward, RAI has the potential to play a critical role throughout the full lifecycle of offshore wind infrastructure, from surveying, planning, design, logistics, operational support, training and decommissioning. This paper presents one of the first systematic reviews of RAI for the offshore renewable energy sector. The state-of-the-art in RAI is analyzed with respect to offshore energy requirements, from both industry and academia, in terms of current and future requirements. Our review also includes a detailed evaluation of investment, regulation and skills development required to support the adoption of RAI. The key trends identified through a detailed analysis of patent and academic publication databases provide insights to barriers such as certification of autonomous platforms for safety compliance and reliability, the need for digital architectures for scalability in autonomous fleets, adaptive mission planning for resilient resident operations and optimization of human machine interaction for trusted partnerships between people and autonomous assistants.

CNN-Based Semantic Change Detection in Satellite Imagery

Jun 10, 2020

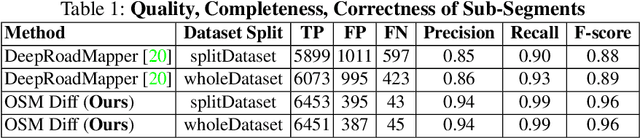

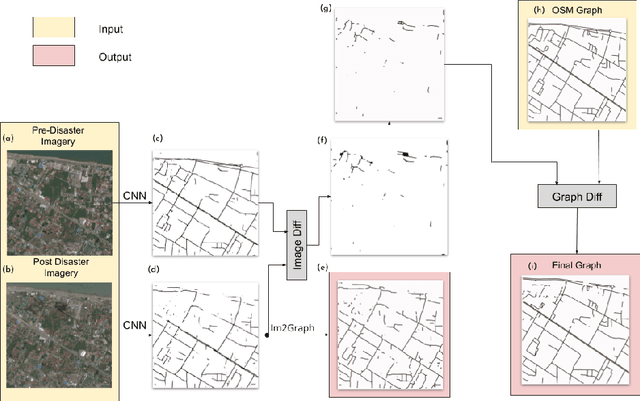

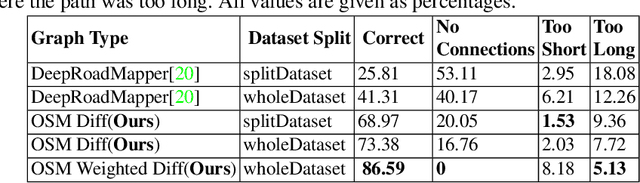

Abstract:Timely disaster risk management requires accurate road maps and prompt damage assessment. Currently, this is done by volunteers manually marking satellite imagery of affected areas but this process is slow and often error-prone. Segmentation algorithms can be applied to satellite images to detect road networks. However, existing methods are unsuitable for disaster-struck areas as they make assumptions about the road network topology which may no longer be valid in these scenarios. Herein, we propose a CNN-based framework for identifying accessible roads in post-disaster imagery by detecting changes from pre-disaster imagery. Graph theory is combined with the CNN output for detecting semantic changes in road networks with OpenStreetMap data. Our results are validated with data of a tsunami-affected region in Palu, Indonesia acquired from DigitalGlobe.
