Shijian Lu

Nanyang Technological University

Domain Adaptive Video Segmentation via Temporal Consistency Regularization

Jul 23, 2021

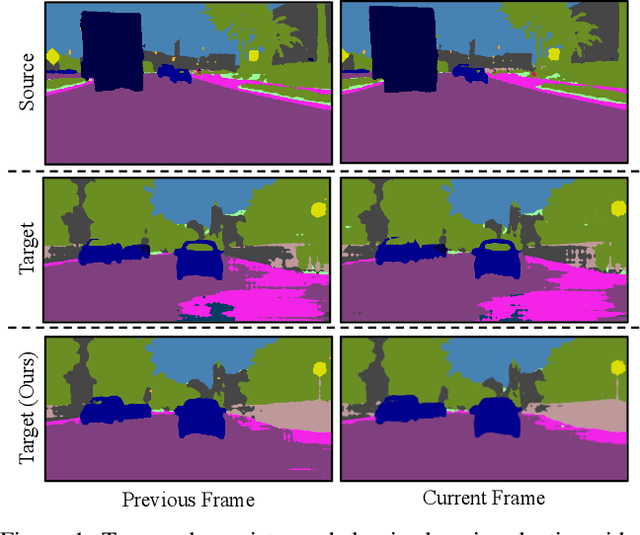

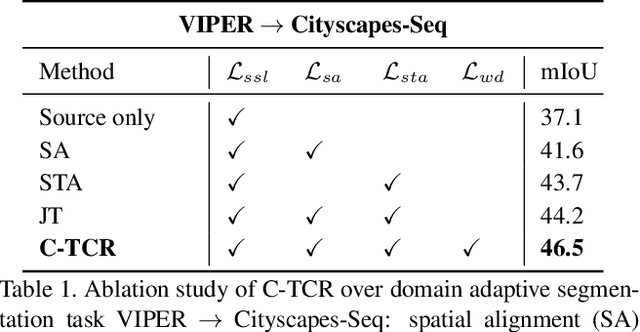

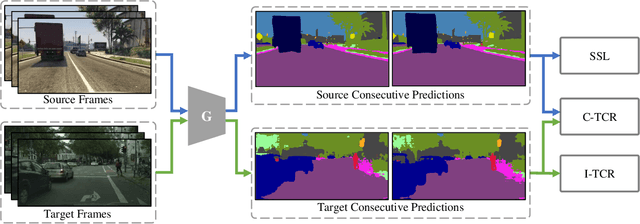

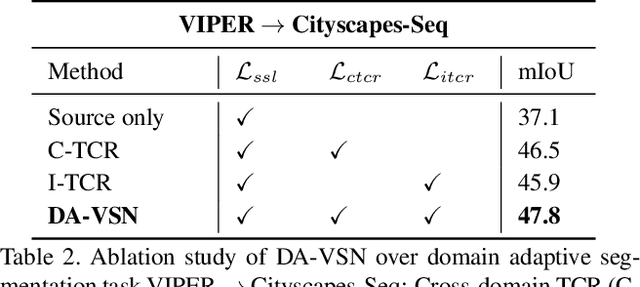

Abstract: Video semantic segmentation is an essential task for the analysis and understanding of videos. Recent efforts largely focus on supervised video segmentation by learning from fully annotated data, but the learnt models often suffer a clear performance drop when applied to videos of a different domain. This paper presents DA-VSN, a domain adaptive video segmentation network that addresses domain gaps in videos by temporal consistency regularization (TCR) for consecutive frames of target-domain videos. DA-VSN consists of two novel and complementary designs. The first is cross-domain TCR, which uses adversarial learning to guide the predictions of target frames to have temporal consistency similar to that of source frames (learnt from annotated source data). The second is intra-domain TCR, which guides unconfident predictions of target frames to have temporal consistency similar to that of confident predictions of target frames. Extensive experiments show that DA-VSN consistently outperforms multiple baselines by large margins.
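To make the intra-domain idea above concrete, here is a minimal sketch of how confident and unconfident pixels could be separated and how temporal discrepancy between two consecutive frames could be measured. It is an illustration under stated assumptions, not the DA-VSN implementation: confidence is approximated by prediction entropy, consistency by a per-pixel L1 distance, and motion between frames is ignored (no optical-flow warping). The names `entropy`, `temporal_discrepancy`, and `split_by_confidence` are hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy of one pixel's class distribution (higher means less confident)."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def temporal_discrepancy(prev_frame, curr_frame):
    """Per-pixel L1 distance between class probabilities of two consecutive frames.

    Assumption: pixels are compared at the same location, without motion compensation.
    """
    return [
        sum(abs(p - q) for p, q in zip(prev_px, curr_px))
        for prev_px, curr_px in zip(prev_frame, curr_frame)
    ]


def split_by_confidence(frame, threshold):
    """Return (confident, unconfident) pixel indices using an entropy threshold."""
    confident = [i for i, px in enumerate(frame) if entropy(px) <= threshold]
    unconfident = [i for i, px in enumerate(frame) if entropy(px) > threshold]
    return confident, unconfident


# Two "frames" with two pixels each and two classes per pixel (toy data).
prev_frame = [[0.90, 0.10], [0.50, 0.50]]
curr_frame = [[0.95, 0.05], [0.55, 0.45]]

discrepancy = temporal_discrepancy(prev_frame, curr_frame)
confident, unconfident = split_by_confidence(curr_frame, threshold=0.5)
```

In a training loop, the discrepancy statistics of the confident pixels would serve as the reference that the unconfident pixels are pushed towards; how that is done in the paper is not specified in the abstract.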

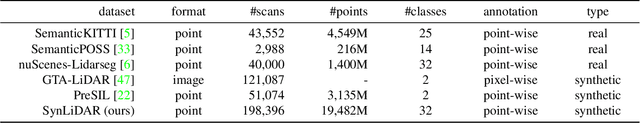

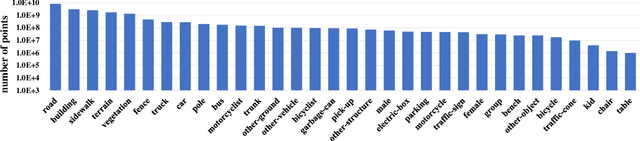

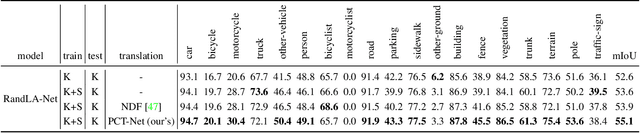

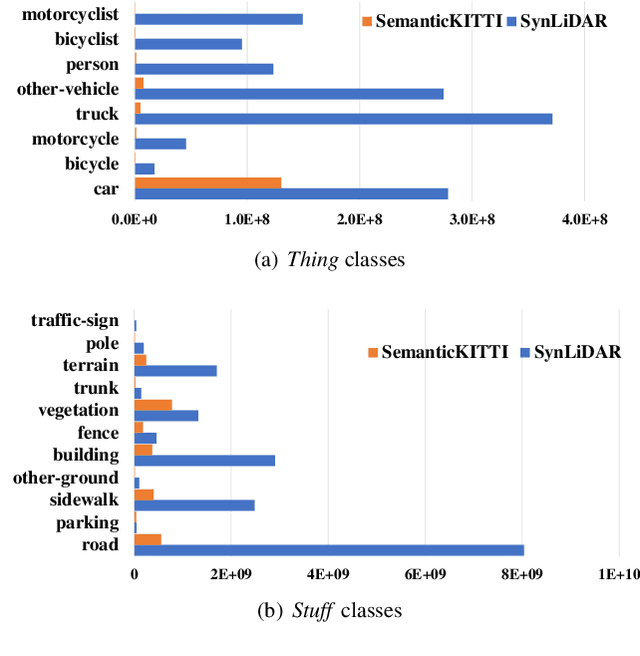

SynLiDAR: Learning From Synthetic LiDAR Sequential Point Cloud for Semantic Segmentation

Jul 12, 2021

Abstract: Transfer learning from synthetic to real data has proven an effective way of mitigating data annotation constraints in various computer vision tasks. However, progress has focused on 2D images and lags far behind for 3D point clouds due to the lack of large-scale, high-quality synthetic point cloud data and effective transfer methods. We address this issue by collecting SynLiDAR, a synthetic LiDAR point cloud dataset that contains large-scale point-wise annotated point clouds with accurate geometric shapes and comprehensive semantic classes, and by designing PCT-Net, a point cloud translation network that aims to narrow the gap with real-world point cloud data. For SynLiDAR, we leverage graphic tools and professionals who construct multiple realistic virtual environments with rich scene types and layouts where annotated LiDAR points can be generated automatically. On top of that, PCT-Net disentangles synthetic-to-real gaps into an appearance component and a sparsity component and translates SynLiDAR by aligning the two components with real-world data separately. Extensive experiments over multiple data augmentation and semi-supervised semantic segmentation tasks show that SynLiDAR can either train better models or reduce the amount of real-world annotated data required without sacrificing performance, and that PCT-Net-translated data consistently improves model performance further.

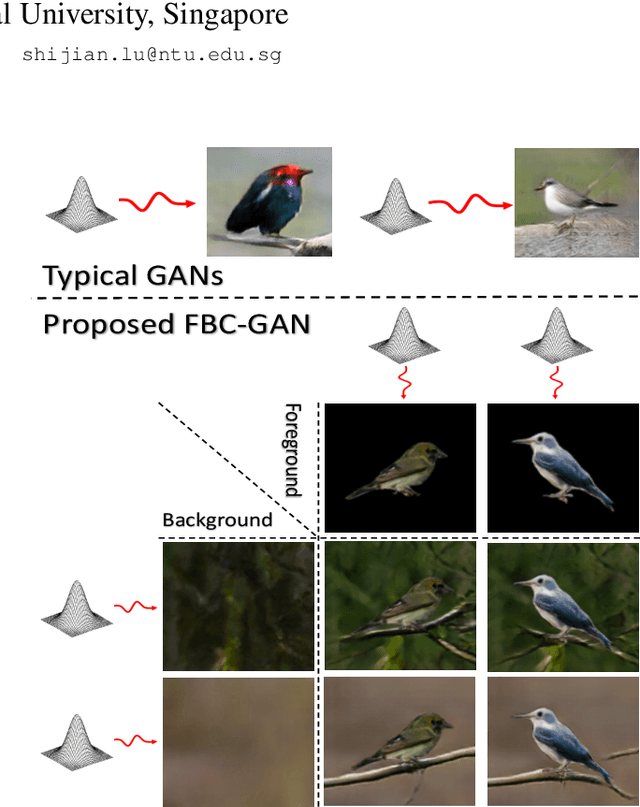

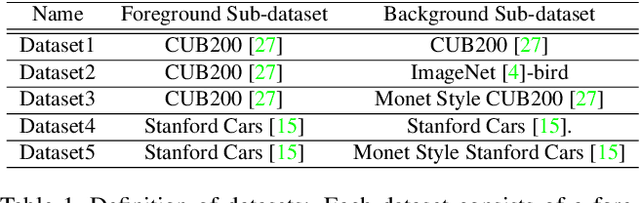

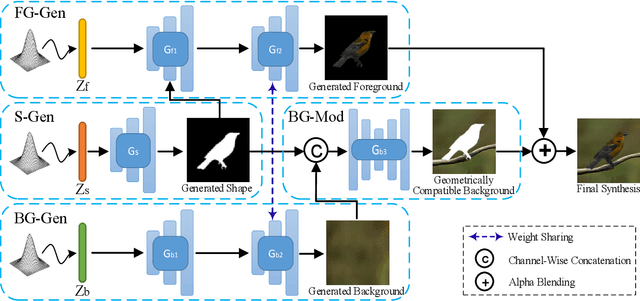

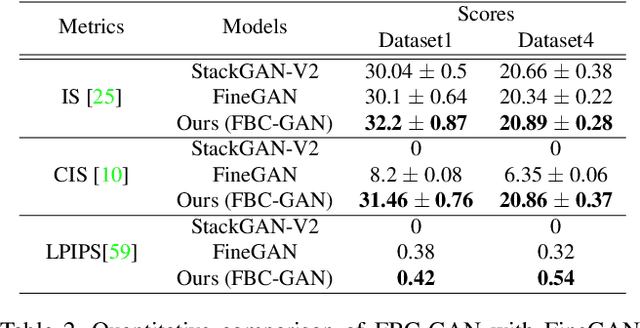

FBC-GAN: Diverse and Flexible Image Synthesis via Foreground-Background Composition

Jul 07, 2021

Abstract: Generative Adversarial Networks (GANs) have become the de facto standard in image synthesis. However, without considering the foreground-background decomposition, existing GANs tend to capture excessive content correlation between foreground and background, thus constraining the diversity in image generation. This paper presents a novel Foreground-Background Composition GAN (FBC-GAN) that performs image generation by generating foreground objects and background scenes concurrently and independently, followed by composing them with style and geometrical consistency. With this explicit design, FBC-GAN can generate images with foregrounds and backgrounds that are mutually independent in content, thus lifting the undesirably learned content correlation constraint and achieving superior diversity. It also provides excellent flexibility by allowing the same foreground object to be combined with different background scenes, the same background scene with varying foreground objects, or the same foreground object and background scene with different object positions, sizes and poses. It can compose foreground objects and background scenes sampled from different datasets as well. Extensive experiments over multiple datasets show that FBC-GAN achieves competitive visual realism and superior diversity as compared with state-of-the-art methods.

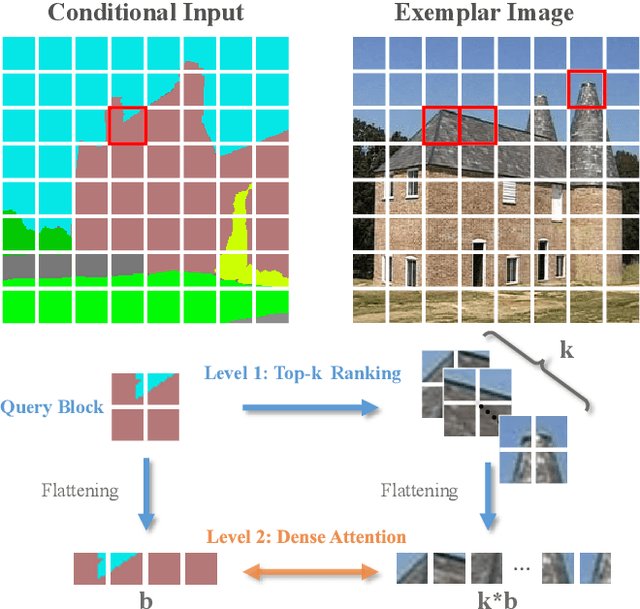

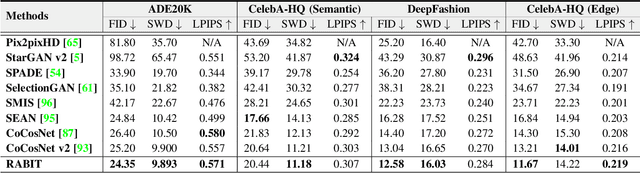

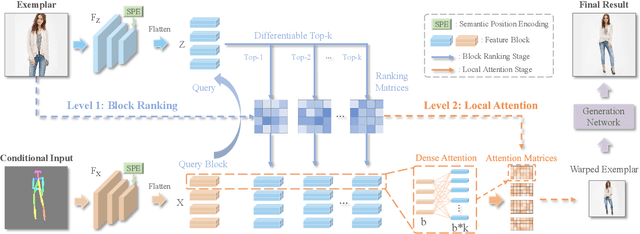

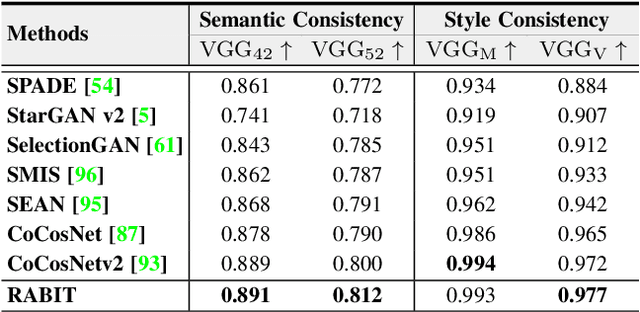

Bi-level Feature Alignment for Versatile Image Translation and Manipulation

Jul 07, 2021

Abstract: Generative adversarial networks (GANs) have achieved great success in image translation and manipulation. However, high-fidelity image generation with faithful style control remains a grand challenge in computer vision. This paper presents a versatile image translation and manipulation framework that achieves accurate semantic and style guidance in image generation by explicitly building a correspondence. To handle the quadratic complexity incurred by building dense correspondences, we introduce a bi-level feature alignment strategy that adopts a top-$k$ operation to rank block-wise features, followed by dense attention between block features, which reduces memory cost substantially. As the top-$k$ operation involves index swapping, which precludes gradient propagation, we propose to approximate the non-differentiable top-$k$ operation with a regularized earth mover's problem so that its gradient can be effectively back-propagated. In addition, we design a novel semantic position encoding mechanism that builds coordinates for each individual semantic region to preserve texture structures while building correspondences. Further, we design a novel confidence feature injection module which mitigates the mismatch problem by fusing features adaptively according to the reliability of the built correspondences. Extensive experiments show that our method achieves superior performance qualitatively and quantitatively as compared with the state-of-the-art. The code is available at https://github.com/fnzhan/RABIT.
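The two-level scheme described above (coarse top-$k$ block ranking, then dense attention inside the selected blocks) can be sketched in a few lines. This is a simplified illustration, not the RABIT code: it uses a hard, non-differentiable top-$k$ over block mean features, whereas the paper approximates top-$k$ with a regularized earth mover's problem. The function name `bilevel_align` and the use of block means for ranking are assumptions made for this example.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def mean_vec(vectors):
    """Element-wise mean of a list of equal-length feature vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def bilevel_align(query_blocks, key_blocks, k):
    """Coarse level: keep the k key blocks most similar to each query block.
    Fine level: dense softmax attention over the features of those blocks only."""
    key_means = [mean_vec(block) for block in key_blocks]
    aligned = []
    for q_block in query_blocks:
        q_mean = mean_vec(q_block)
        ranked = sorted(
            range(len(key_blocks)),
            key=lambda j: dot(q_mean, key_means[j]),
            reverse=True,
        )
        candidates = [feat for j in ranked[:k] for feat in key_blocks[j]]
        out_block = []
        for q in q_block:
            weights = softmax([dot(q, c) for c in candidates])
            out_block.append(
                [sum(w * c[d] for w, c in zip(weights, candidates)) for d in range(len(q))]
            )
        aligned.append(out_block)
    return aligned


query_blocks = [[[1.0, 0.0]]]
key_blocks = [[[5.0, 0.0], [4.0, 0.0]], [[0.0, 5.0]], [[-5.0, 0.0]]]
aligned = bilevel_align(query_blocks, key_blocks, k=1)
```

With `k=1`, each query feature attends to the features of a single key block instead of all key features, which is where the memory saving comes from.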

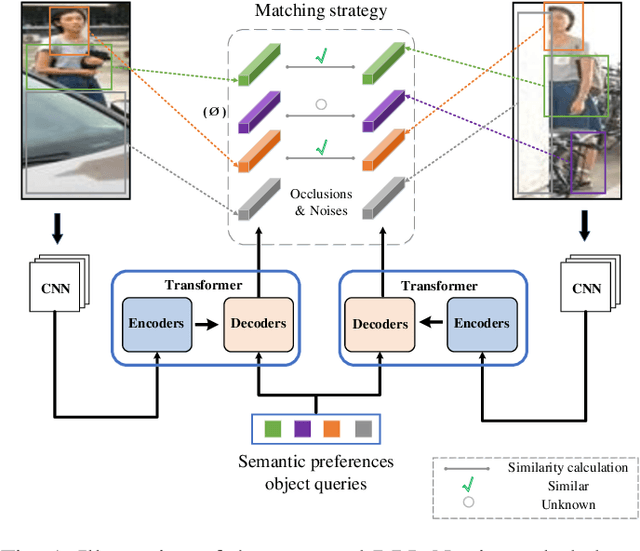

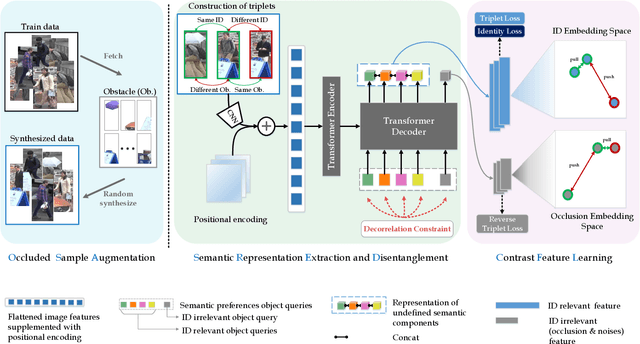

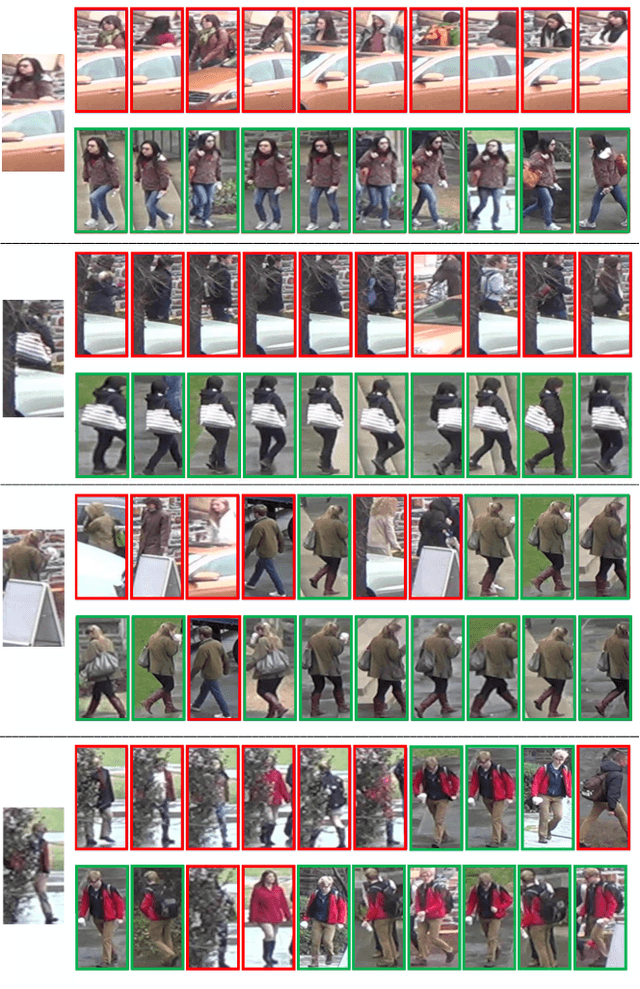

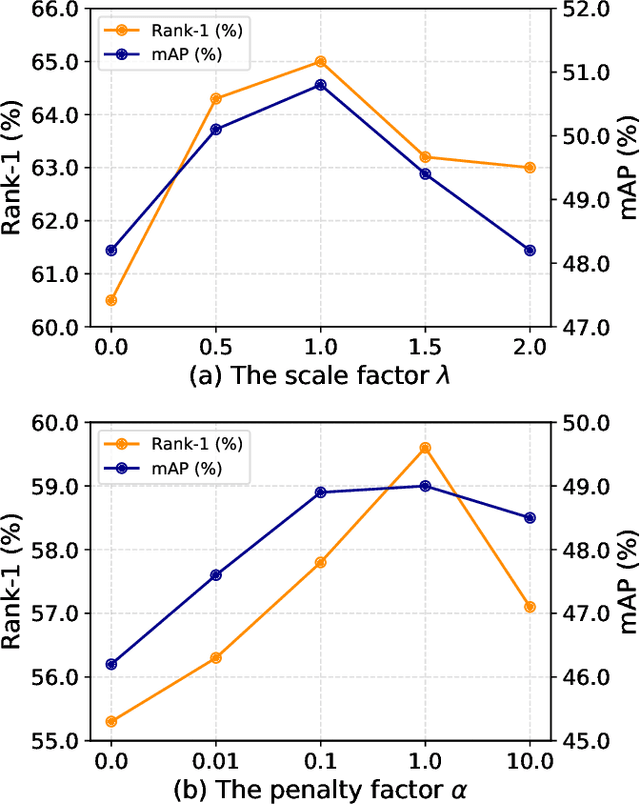

Learning Disentangled Representation Implicitly via Transformer for Occluded Person Re-Identification

Jul 06, 2021

Abstract: Person re-identification (re-ID) under various occlusions has been a long-standing challenge, as person images with different types of occlusions often suffer from misalignment in image matching and ranking. Most existing methods tackle this challenge by aligning spatial features of body parts according to external semantic cues or feature similarities, but this alignment approach is complicated and sensitive to noise. We design DRL-Net, a disentangled representation learning network that handles occluded re-ID without requiring strict person image alignment or any additional supervision. Leveraging transformer architectures, DRL-Net achieves alignment-free re-ID via global reasoning of local features of occluded person images. It measures image similarity by automatically disentangling the representation of undefined semantic components, e.g., human body parts or obstacles, under the guidance of semantic preference object queries in the transformer. In addition, we design a decorrelation constraint in the transformer decoder and impose it over object queries for better focus on different semantic components. To better eliminate interference from occlusions, we design a contrast feature learning technique (CFL) for better separation of occlusion features and discriminative ID features. Extensive experiments over occluded and holistic re-ID benchmarks (Occluded-DukeMTMC, Market1501 and DukeMTMC) show that DRL-Net achieves superior re-ID performance consistently and outperforms the state-of-the-art by large margins on Occluded-DukeMTMC.

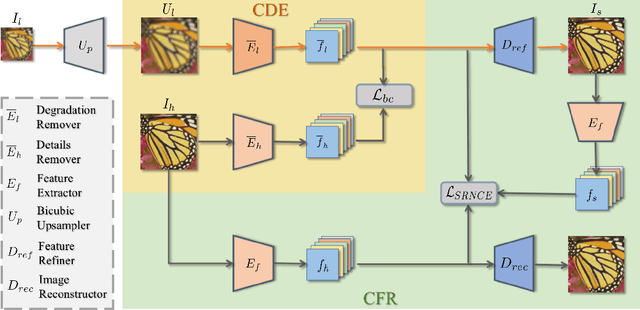

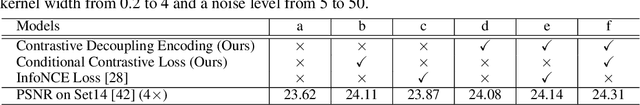

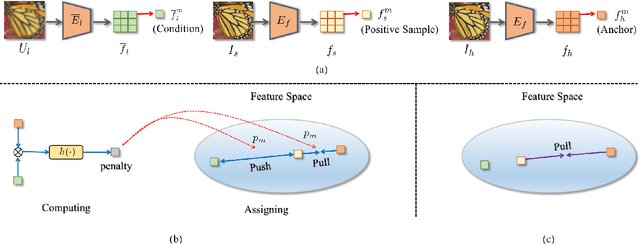

Blind Image Super-Resolution via Contrastive Representation Learning

Jul 01, 2021

Abstract: Image super-resolution (SR) research has witnessed impressive progress in recent years thanks to advances in convolutional neural networks (CNNs). However, most existing SR methods are non-blind and assume that degradation has a single fixed and known distribution (e.g., bicubic), so they struggle with degradation in real-world data, which usually follows a multi-modal, spatially variant, and unknown distribution. Recent blind SR studies address this issue via degradation estimation, but they do not generalize well to multi-source degradation and cannot handle spatially variant degradation. We design CRL-SR, a contrastive representation learning network that focuses on blind SR of images with multi-modal and spatially variant distributions. CRL-SR addresses the blind SR challenges from two perspectives. The first is contrastive decoupling encoding, which introduces contrastive learning to extract resolution-invariant embedding and discard resolution-variant embedding under the guidance of a bidirectional contrastive loss. The second is contrastive feature refinement, which generates lost or corrupted high-frequency details under the guidance of a conditional contrastive loss. Extensive experiments on synthetic datasets and real images show that the proposed CRL-SR can handle multi-modal and spatially variant degradation effectively under blind settings and that it outperforms state-of-the-art SR methods qualitatively and quantitatively.
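For readers unfamiliar with contrastive objectives, the following is a minimal sketch of a generic InfoNCE-style loss, the common building block behind many contrastive methods. It is not the bidirectional or conditional contrastive loss of CRL-SR, whose exact form the abstract does not give; the function name `info_nce`, the cosine similarity, and the temperature value are assumptions chosen for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    num = sum(a * b for a, b in zip(u, v))
    den = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return num / den


def info_nce(anchor, positive, negatives, temperature=0.1):
    """Generic InfoNCE loss: -log of the softmax probability of the positive pair."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, neg) / temperature for neg in negatives]
    m = max(logits)  # log-sum-exp with max subtraction for stability
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum - logits[0]


anchor = [1.0, 0.0]
negatives = [[0.0, 1.0], [-1.0, 0.0]]
loss_aligned = info_nce(anchor, [0.9, 0.1], negatives)     # positive close to anchor
loss_misaligned = info_nce(anchor, [0.1, 0.9], negatives)  # positive far from anchor
```

The loss is small when the anchor is closer to its positive than to any negative, and grows as the positive drifts towards the negatives.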

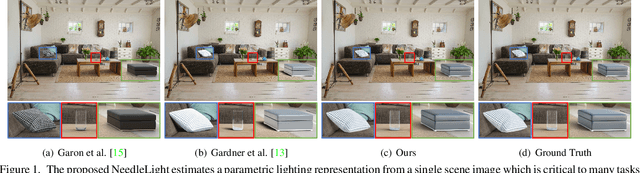

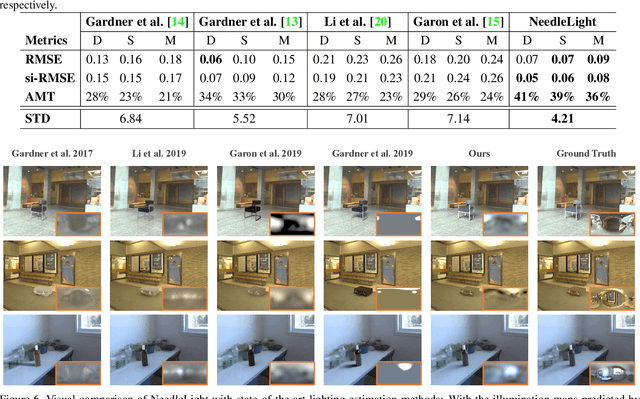

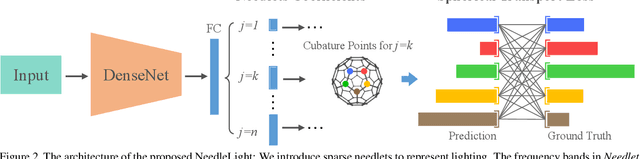

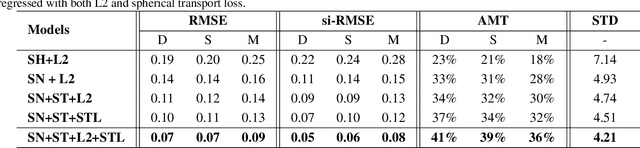

Sparse Needlets for Lighting Estimation with Spherical Transport Loss

Jun 24, 2021

Abstract: Accurate lighting estimation is challenging yet critical to many computer vision and computer graphics tasks such as high-dynamic-range (HDR) relighting. Existing approaches model lighting in either the frequency domain or the spatial domain, which is insufficient to represent the complex lighting conditions in scenes and tends to produce inaccurate estimation. This paper presents NeedleLight, a new lighting estimation model that represents illumination with needlets and allows lighting estimation in the frequency domain and the spatial domain jointly. An optimal thresholding function is designed to achieve sparse needlets, which trims redundant lighting parameters and demonstrates superior localization properties for illumination representation. In addition, a novel spherical transport loss is designed based on optimal transport theory, which guides the regression of lighting representation parameters while taking spatial information into account. Furthermore, we propose a new metric that is concise yet effective, directly evaluating the estimated illumination maps rather than rendered images. Extensive experiments show that NeedleLight achieves superior lighting estimation consistently across multiple evaluation metrics as compared with state-of-the-art methods.

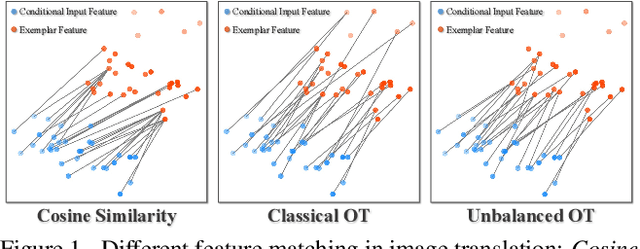

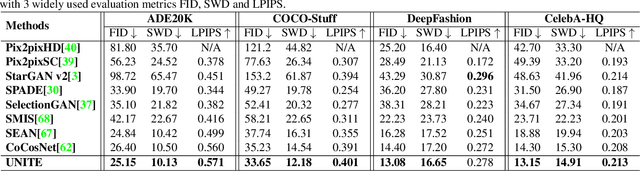

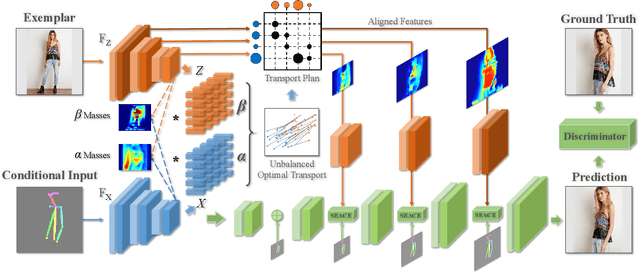

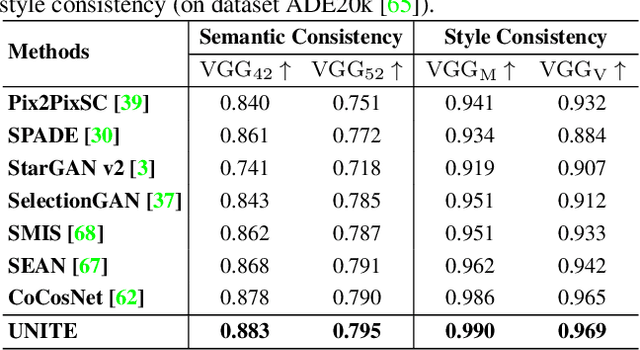

Unbalanced Feature Transport for Exemplar-based Image Translation

Jun 19, 2021

Abstract: Despite the great success of GANs in image translation with different conditioned inputs such as semantic segmentation and edge maps, generating high-fidelity realistic images with reference styles remains a grand challenge in conditional image-to-image translation. This paper presents a general image translation framework that incorporates optimal transport for feature alignment between conditional inputs and style exemplars in image translation. The introduction of optimal transport significantly mitigates the constraint of many-to-one feature matching while building up accurate semantic correspondences between conditional inputs and exemplars. We design a novel unbalanced optimal transport to address the transport between features with deviational distributions, which exist widely between conditional inputs and exemplars. In addition, we design a semantic-activation normalization scheme that injects style features of exemplars into the image translation process successfully. Extensive experiments over multiple image translation tasks show that our method achieves superior image translation qualitatively and quantitatively as compared with the state-of-the-art.
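As background for the unbalanced optimal transport mentioned above, here is a minimal sketch of entropic Sinkhorn scaling with an optional KL relaxation of the marginal constraints, following the standard scaling iterations for unbalanced transport. It is a generic textbook-style routine and not the formulation used in the paper; the function name `sinkhorn` and the parameters `eps` (entropic regularization) and `rho` (marginal relaxation strength) are assumptions for this example.

```python
import math


def sinkhorn(cost, a, b, eps=0.5, rho=None, iters=500):
    """Entropic optimal transport via Sinkhorn scaling.

    rho=None enforces both marginals (balanced OT). A finite rho relaxes them
    with KL penalties, so the source mass a and target mass b may differ.
    """
    n, m = len(a), len(b)
    kernel = [[math.exp(-cost[i][j] / eps) for j in range(m)] for i in range(n)]
    power = 1.0 if rho is None else rho / (rho + eps)
    u, v = [1.0] * n, [1.0] * m
    for _ in range(iters):
        u = [(a[i] / sum(kernel[i][j] * v[j] for j in range(m))) ** power for i in range(n)]
        v = [(b[j] / sum(kernel[i][j] * u[i] for i in range(n))) ** power for j in range(m)]
    # Transport plan: plan[i][j] is the mass moved from source i to target j.
    return [[u[i] * kernel[i][j] * v[j] for j in range(m)] for i in range(n)]


cost = [[0.0, 1.0], [1.0, 0.0]]

# Balanced case: both marginals carry total mass 1.
balanced = sinkhorn(cost, a=[0.7, 0.3], b=[0.4, 0.6])

# Unbalanced case: the target carries more mass than the source.
unbalanced = sinkhorn(cost, a=[0.7, 0.3], b=[0.4, 1.2], rho=1.0)
```

In the balanced case the rows and columns of the plan sum to `a` and `b`. With a finite `rho` the plan is only encouraged to match them, which is what lets it cope with feature sets whose total mass or distribution differs.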

Domain Consistency Regularization for Unsupervised Multi-source Domain Adaptive Classification

Jun 16, 2021

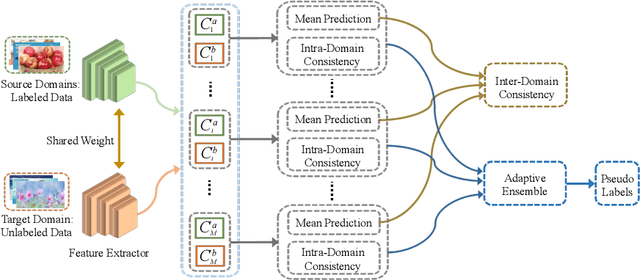

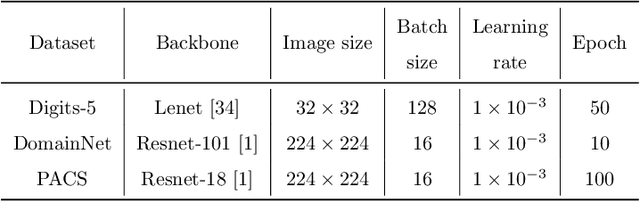

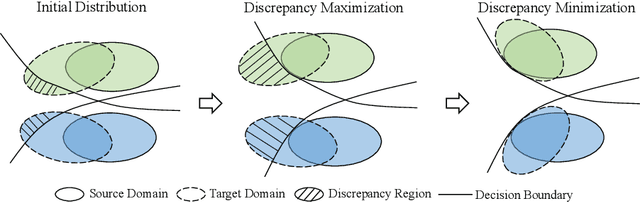

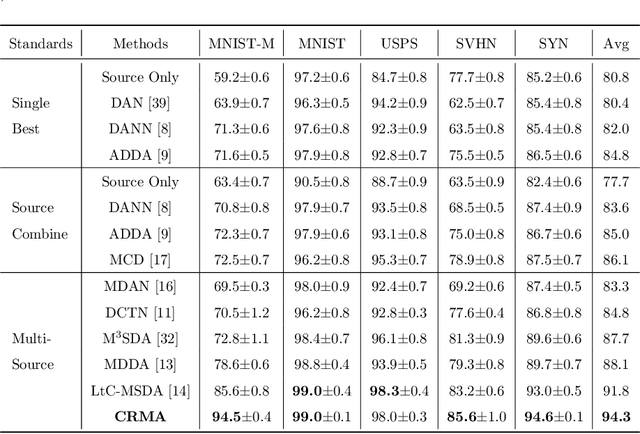

Abstract: Deep learning-based multi-source unsupervised domain adaptation (MUDA) has been actively studied in recent years. Compared with single-source unsupervised domain adaptation (SUDA), domain shift in MUDA exists not only between the source and target domains but also among multiple source domains. Most existing MUDA algorithms focus on extracting domain-invariant representations among all domains, whereas the task-specific decision boundaries among classes are largely neglected. In this paper, we propose an end-to-end trainable network that exploits domain Consistency Regularization for unsupervised Multi-source domain Adaptive classification (CRMA). CRMA aligns not only the distributions of each pair of source and target domains but also those of all domains. For each pair of source and target domains, we employ an intra-domain consistency to regularize a pair of domain-specific classifiers to achieve intra-domain alignment. In addition, we design an inter-domain consistency that targets joint inter-domain alignment among all domains. To address different similarities between multiple source domains and the target domain, we design an authorization strategy that assigns different authorities to domain-specific classifiers adaptively for optimal pseudo label prediction and self-training. Extensive experiments show that CRMA tackles unsupervised domain adaptation effectively under a multi-source setup and achieves superior adaptation consistently across multiple MUDA datasets.

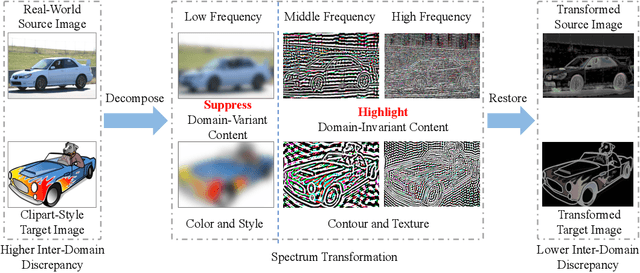

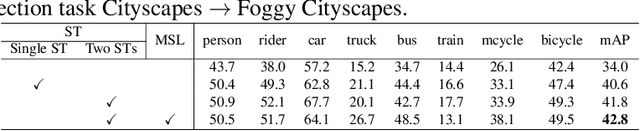

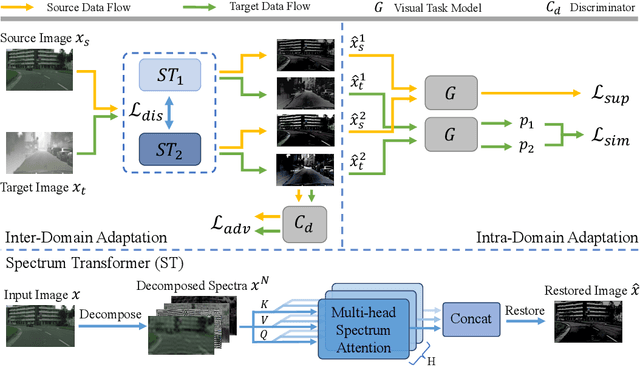

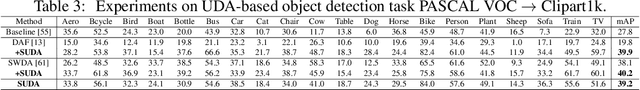

Spectral Unsupervised Domain Adaptation for Visual Recognition

Jun 11, 2021

Abstract: Unsupervised domain adaptation (UDA) aims to learn a well-performing model in an unlabeled target domain by leveraging labeled data from one or multiple related source domains. It remains a great challenge due to 1) the lack of annotations in the target domain and 2) the rich discrepancy between the distributions of source and target data. We propose Spectral UDA (SUDA), an efficient yet effective UDA technique that works in the spectral space and is generic across different visual recognition tasks in detection, classification and segmentation. SUDA addresses UDA challenges from two perspectives. First, it mitigates inter-domain discrepancies with a spectrum transformer (ST) that maps source and target images into spectral space and learns to enhance domain-invariant spectra while suppressing domain-variant spectra. To this end, we design a novel adversarial multi-head spectrum attention that leverages contextual information to identify domain-variant and domain-invariant spectra effectively. Second, it mitigates the lack of annotations in the target domain by introducing multi-view spectral learning, which aims to learn comprehensive yet confident target representations by maximizing the mutual information among multiple ST augmentations capturing different spectral views of each target sample. Extensive experiments over different visual tasks (e.g., detection, classification and segmentation) show that SUDA achieves superior accuracy and that it is complementary to state-of-the-art UDA methods, giving consistent performance boosts with little extra computation.
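The idea of enhancing some spectra while suppressing others can be illustrated with a toy one-dimensional example: transform a signal to the frequency domain, scale each frequency component, and transform back. This sketch only shows the mechanics of spectral reweighting. In SUDA the weights are learned by an attention module on images, whereas the weights here are fixed by hand, and the names `dft`, `idft`, and `reweight_spectrum` are hypothetical.

```python
import cmath


def dft(signal):
    """Naive discrete Fourier transform of a real or complex sequence."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def idft(spectrum):
    """Inverse DFT; returns the real part, assuming the result is a real signal."""
    n = len(spectrum)
    return [
        (sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n).real
        for t in range(n)
    ]


def reweight_spectrum(signal, weights):
    """Scale each frequency component by its weight, then map back to the signal domain.

    For a real-valued result the weights should be symmetric: weights[k] == weights[n - k].
    """
    spectrum = dft(signal)
    return idft([w * c for w, c in zip(weights, spectrum)])


signal = [1.0, 2.0, 3.0, 4.0]
unchanged = reweight_spectrum(signal, [1.0, 1.0, 1.0, 1.0])  # keep every frequency
dc_only = reweight_spectrum(signal, [1.0, 0.0, 0.0, 0.0])    # keep only the mean component
```

Keeping every weight at 1 reproduces the input, while keeping only the zero-frequency component collapses the signal to its mean, which shows how a weight vector selects which spectral content survives.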
