Qiyu Sun

Augment Features Beyond Color for Domain Generalized Segmentation

Jul 04, 2023

Abstract: Domain generalized semantic segmentation (DGSS) is an essential but highly challenging task, in which the model is trained only on source data and no target data is available. Previous DGSS methods can be partitioned into augmentation-based and normalization-based ones. The former either introduce extra biased data or only conduct channel-wise adjustments for data augmentation, and the latter may discard beneficial visual information, both of which lead to limited performance in DGSS. In contrast, our method performs inter-channel transformation while avoiding domain-specific biases, thus diversifying data and enhancing model generalization performance. Specifically, our method consists of two modules: random image color augmentation (RICA) and random feature distribution augmentation (RFDA). RICA converts images from RGB to the CIELAB color model and randomizes color maps in a perception-based way for image enhancement purposes. We extend this augmentation beyond color to the feature space using a CycleGAN-based generative network, which complements RICA and further boosts generalization capability. We conduct extensive experiments, and the generalization results from the synthetic GTAV and SYNTHIA datasets to the real Cityscapes, BDDS, and Mapillary datasets show that our method achieves state-of-the-art performance in DGSS.

RoMa: Revisiting Robust Losses for Dense Feature Matching

May 24, 2023

Abstract: Dense feature matching is an important computer vision task that involves estimating all correspondences between two images of a 3D scene. In this paper, we revisit robust losses for matching from a Markov chain perspective, yielding theoretical insights and large gains in performance. We begin by constructing a unifying formulation of matching as a Markov chain, based on which we identify two key stages which we argue should be decoupled for matching. The first is the coarse stage, where the estimated result needs to be globally consistent. The second is the refinement stage, where the model needs precise localization capabilities. Inspired by the insight that these stages concern distinct issues, we propose a coarse matcher following the regression-by-classification paradigm that provides excellent globally consistent, albeit not exactly localized, matches. This is followed by a local feature refinement stage using well-motivated robust regression losses, yielding extremely precise matches. Our proposed approach, which we call RoMa, achieves significant improvements compared to the state-of-the-art. Code is available at https://github.com/Parskatt/RoMa

Molecular Joint Representation Learning via Multi-modal Information

Nov 25, 2022

Abstract: In recent years, artificial intelligence has played an important role in accelerating the whole process of drug discovery. Various molecular representation schemes of different modalities (e.g., textual sequences or graphs) have been developed. By digitally encoding them, different chemical information can be learned through corresponding network structures. Molecular graphs and the Simplified Molecular Input Line Entry System (SMILES) are currently popular means for molecular representation learning. Previous works have attempted to combine both of them to solve the problem of specific information loss in single-modal representation on various tasks. To further fuse such multi-modal information, the correspondence between the chemical features learned from different representations should be considered. To realize this, we propose a novel framework of molecular joint representation learning via Multi-Modal information of SMILES and molecular Graphs, called MMSG. We improve the self-attention mechanism by introducing bond-level graph representation as attention bias in the Transformer to reinforce feature correspondence between multi-modal information. We further propose a Bidirectional Message Communication Graph Neural Network (BMC GNN) to strengthen the information flow aggregated from graphs for further combination. Numerous experiments on public property prediction datasets have demonstrated the effectiveness of our model.
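The idea of adding a graph-derived bias to self-attention can be illustrated with a minimal sketch. The abstract does not say how the bond-level representation is turned into a bias, so the `bonded` mask and the resulting `bias` matrix below are hypothetical stand-ins, and `biased_self_attention` is an illustrative name rather than anything from MMSG. The sketch only shows the generic mechanism: the bias is added to the scaled dot-product logits before the softmax.

```python
import numpy as np

def biased_self_attention(q, k, v, bias):
    """Scaled dot-product attention with an additive bias on the logits.

    q, k, v: arrays of shape (n_tokens, d); bias: array of shape (n_tokens, n_tokens).
    """
    d = q.shape[-1]
    logits = q @ k.T / np.sqrt(d) + bias
    logits = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(logits)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(0)
n_tokens, d = 4, 8
q, k, v = (rng.standard_normal((n_tokens, d)) for _ in range(3))

# Hypothetical bias (an assumption): 0 for token pairs linked by a bond
# (or identical), strongly negative for all other pairs.
bonded = np.array([[1, 1, 0, 0],
                   [1, 1, 1, 0],
                   [0, 1, 1, 1],
                   [0, 0, 1, 1]], dtype=bool)
bias = np.where(bonded, 0.0, -1e9)

out, weights = biased_self_attention(q, k, v, bias)
```

With this hard mask, each token attends only to itself and its bonded neighbours. A learned, real-valued bias would instead shift attention softly toward bonded pairs.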

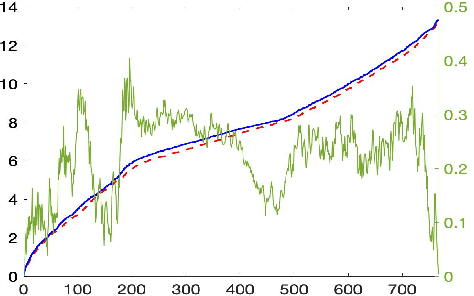

Learning Nonlinear Couplings in Network of Agents from a Single Sample Trajectory

Nov 20, 2022

Abstract: We consider a class of stochastic dynamical networks whose governing dynamics can be modeled using a coupling function. It is shown that the dynamics of such networks can generate geometrically ergodic trajectories under some reasonable assumptions. We show that a general class of coupling functions can be learned using only one sample trajectory from the network. This is practically plausible as in numerous applications it is desired to run an experiment only once but for a longer period of time, rather than repeating the same experiment multiple times from different initial conditions. Building upon ideas from the concentration inequalities for geometrically ergodic Markov chains, we formulate several results about the convergence of the empirical estimator to the true coupling function. Our theoretical findings are supported by extensive simulation results.

Towards Generalization on Real Domain for Single Image Dehazing via Meta-Learning

Nov 14, 2022

Abstract: Learning-based image dehazing methods are essential to assist autonomous systems in enhancing reliability. Due to the domain gap between synthetic and real domains, the internal information learned from synthesized images is usually sub-optimal in real domains, leading to a severe performance drop of dehazing models. Driven by its ability to explore internal information from a few unseen-domain samples, meta-learning is commonly adopted to address this issue via test-time training, which is hyperparameter-sensitive and time-consuming. In contrast, we present a domain generalization framework based on meta-learning to dig out representative and discriminative internal properties of real hazy domains without test-time training. To obtain representative domain-specific information, we attach two entities, termed the adaptation network and the distance-aware aggregator, to our dehazing network. The adaptation network assists in distilling domain-relevant information from a few hazy samples and caching it into a collection of features. The distance-aware aggregator strives to summarize the generated features and filter out misleading information for more representative internal properties. To enhance the discrimination of distilled internal information, we present a novel loss function called domain-relevant contrastive regularization, which encourages internal features generated from the same domain to be more similar and those from different domains to be more distinct. The generated representative and discriminative features are regarded as external variables of our dehazing network to regress a particular and powerful function for a given domain. Extensive experiments on real hazy datasets, such as RTTS and URHI, validate that our proposed method has better generalization ability than the state-of-the-art competitors.

Visual Semantic Segmentation Based on Few/Zero-Shot Learning: An Overview

Nov 13, 2022

Abstract: Visual semantic segmentation aims at separating a visual sample into diverse blocks with specific semantic attributes and identifying the category for each block, and it plays a crucial role in environmental perception. Conventional learning-based visual semantic segmentation approaches rely heavily on large-scale training data with dense annotations and consistently fail to estimate accurate semantic labels for unseen categories. This limitation has spurred strong interest in studying visual semantic segmentation with the assistance of few/zero-shot learning. The emergence and rapid progress of few/zero-shot visual semantic segmentation make it possible to learn unseen categories from a few labeled or zero-labeled samples, which advances the extension to practical applications. Therefore, this paper focuses on the recently published few/zero-shot visual semantic segmentation methods varying from 2D to 3D space and explores the commonalities and discrepancies of technical solutions under different segmentation circumstances. Specifically, the preliminaries on few/zero-shot visual semantic segmentation, including the problem definitions, typical datasets, and technical remedies, are briefly reviewed and discussed. Moreover, three typical instantiations are covered to uncover the interactions of few/zero-shot learning with visual semantic segmentation, including image semantic segmentation, video object segmentation, and 3D segmentation. Finally, the future challenges of few/zero-shot visual semantic segmentation are discussed.

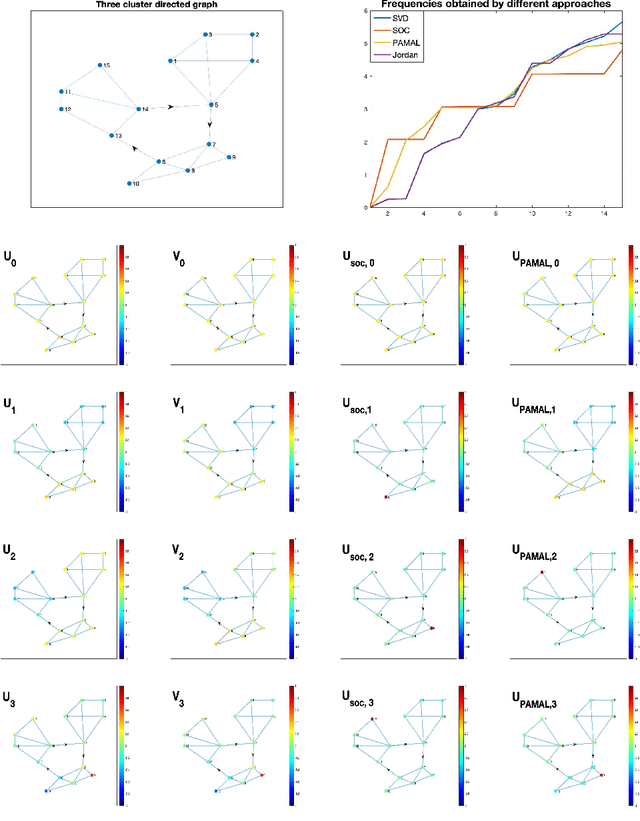

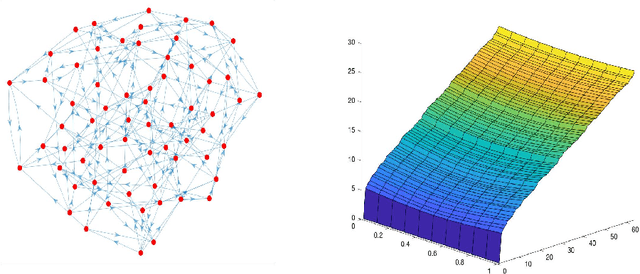

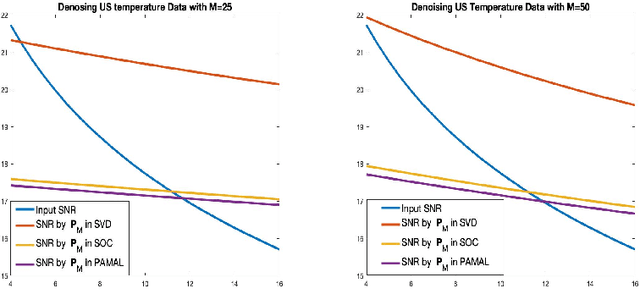

Graph Fourier transforms on directed product graphs

Sep 07, 2022

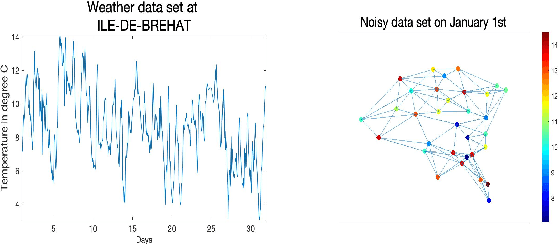

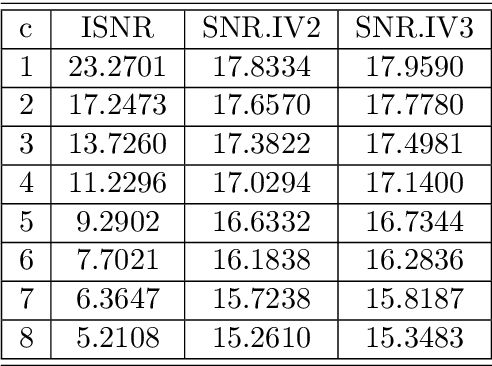

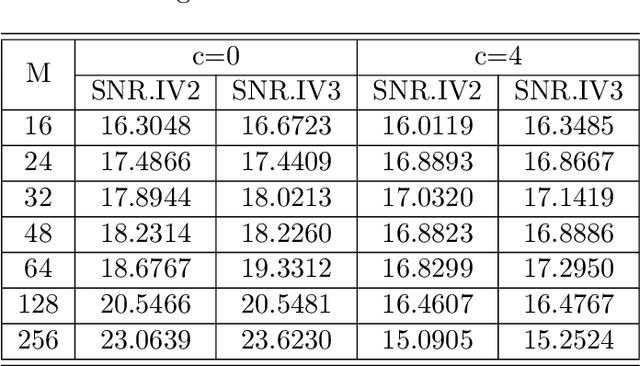

Abstract: Graph Fourier transform (GFT) is one of the fundamental tools in graph signal processing to decompose graph signals into different frequency components and to represent graph signals with strong correlation by different modes of variation effectively. The GFT on undirected graphs has been well studied and several approaches have been proposed to define GFTs on directed graphs. In this paper, based on the singular value decompositions of some graph Laplacians, we propose two GFTs on the Cartesian product graph of two directed graphs. We show that the proposed GFTs could represent spatial-temporal data sets on directed networks with strong correlation efficiently, and in the undirected graph setting they are essentially the joint GFT in the literature. In this paper, we also consider the bandlimiting procedure in the spectral domain of the proposed GFTs, and demonstrate its performance in denoising the temperature data set in the region of Brest (France) in January 2014.
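As a rough illustration of the setting, the sketch below builds the Laplacian of a Cartesian product of two small directed graphs and computes its singular values. The abstract does not specify which Laplacians the two proposed GFTs decompose, so the out-degree convention in `laplacian`, the example graphs, and the helper names are all assumptions. The two checks rely only on standard facts: the product Laplacian equals the Kronecker sum of the factor Laplacians, and in the undirected case its eigenvalues are the pairwise sums of the factor eigenvalues, which is the structure behind a joint GFT.

```python
import numpy as np

def laplacian(adj):
    """L = D - A, with D holding the row sums of A (an assumed degree convention)."""
    adj = np.asarray(adj, dtype=float)
    return np.diag(adj.sum(axis=1)) - adj

def kronecker_sum(m1, m2):
    """Kronecker sum m1 (+) m2 = m1 (x) I + I (x) m2."""
    n1, n2 = m1.shape[0], m2.shape[0]
    return np.kron(m1, np.eye(n2)) + np.kron(np.eye(n1), m2)

# Directed example: a 3-node directed cycle (space) and a 4-node directed path (time).
cycle = np.roll(np.eye(3), 1, axis=1)   # node i points to node (i + 1) % 3
path = np.eye(4, k=1)                   # node i points to node i + 1

# The adjacency of the Cartesian product graph is the Kronecker sum of the adjacencies,
# and its Laplacian is the Kronecker sum of the factor Laplacians.
lap_product = laplacian(kronecker_sum(cycle, path))
lap_kron_sum = kronecker_sum(laplacian(cycle), laplacian(path))
laplacian_matches = np.allclose(lap_product, lap_kron_sum)

# Singular values of the directed product Laplacian, sorted ascending.
singular_values = np.sort(np.linalg.svd(lap_product, compute_uv=False))

# Undirected check: eigenvalues of the product Laplacian are pairwise sums.
und1 = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
und2 = np.array([[0, 1], [1, 0]], dtype=float)
eig1 = np.linalg.eigvalsh(laplacian(und1))
eig2 = np.linalg.eigvalsh(laplacian(und2))
eig_product = np.linalg.eigvalsh(kronecker_sum(laplacian(und1), laplacian(und2)))
eigenvalues_add = np.allclose(eig_product, np.sort(np.add.outer(eig1, eig2).ravel()))
```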

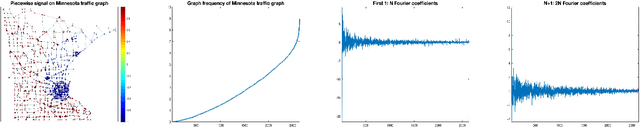

Graph Fourier transform based on singular value decomposition of directed Laplacian

May 12, 2022

Abstract: Graph Fourier transform (GFT) is a fundamental concept in graph signal processing. In this paper, based on singular value decomposition of the Laplacian, we introduce a novel definition of GFT on directed graphs, and use singular values of the Laplacian to carry the notion of graph frequencies. The proposed GFT is consistent with the conventional GFT in the undirected graph setting, and on directed circulant graphs, the proposed GFT is the classical discrete Fourier transform, up to some rotation, permutation and phase adjustment. We show that frequencies and frequency components of the proposed GFT can be evaluated by solving some constrained minimization problems with low computational cost. Numerical demonstrations indicate that the proposed GFT could represent graph signals with different modes of variation efficiently.
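The notion of singular values as graph frequencies can be checked numerically on small examples. The following is a minimal sketch, not the paper's definition of the transform itself: the Laplacian convention (`D - A` with row-sum degrees) and the function names are assumptions. It verifies two facts in line with the abstract's claims. For an undirected graph, the singular values of the Laplacian coincide with its eigenvalues. For a directed cycle, the singular values are `2|sin(pi k / n)|`, the magnitudes associated with the discrete Fourier modes.

```python
import numpy as np

def directed_laplacian(adj):
    """L = D - A, with D the diagonal matrix of row sums (an assumed convention)."""
    adj = np.asarray(adj, dtype=float)
    return np.diag(adj.sum(axis=1)) - adj

def svd_frequencies(lap):
    """Return (U, s, Vt) with singular values sorted ascending, lowest frequency first."""
    u, s, vt = np.linalg.svd(lap)
    order = np.argsort(s)
    return u[:, order], s[order], vt[order, :]

# Directed cycle on n nodes: node i points to node (i + 1) % n.
n = 6
cycle = np.roll(np.eye(n), 1, axis=1)
_, cycle_freqs, _ = svd_frequencies(directed_laplacian(cycle))
dft_magnitudes = np.sort(2 * np.abs(np.sin(np.pi * np.arange(n) / n)))

# Undirected path on 5 nodes: the Laplacian is symmetric positive semidefinite.
path = np.eye(5, k=1) + np.eye(5, k=-1)
path_lap = directed_laplacian(path)
_, path_freqs, _ = svd_frequencies(path_lap)
path_eigs = np.linalg.eigvalsh(path_lap)  # returned in ascending order
```

Comparing `cycle_freqs` with `dft_magnitudes`, and `path_freqs` with `path_eigs`, shows agreement up to floating-point error.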

Wiener filters on graphs and distributed polynomial approximation algorithms

May 09, 2022

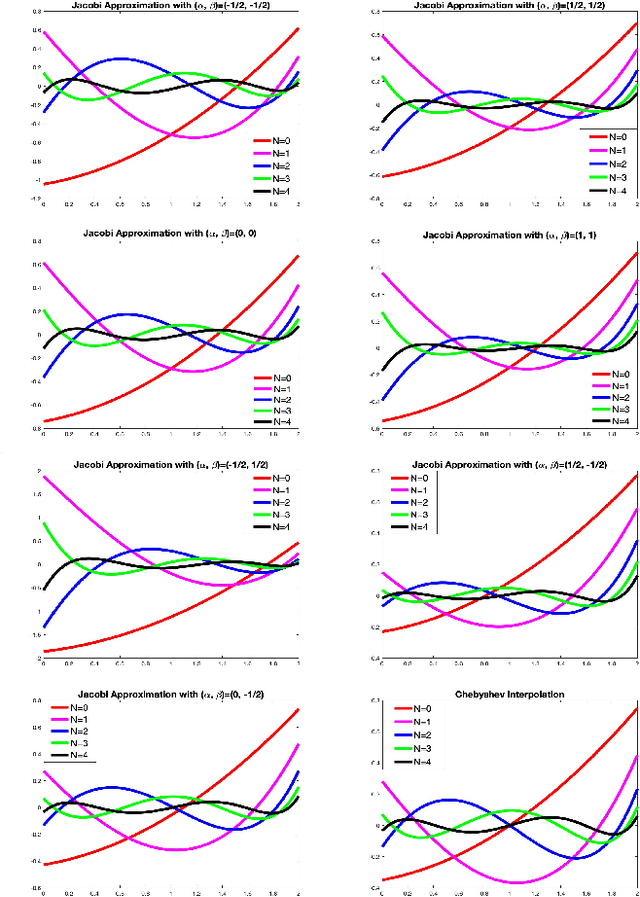

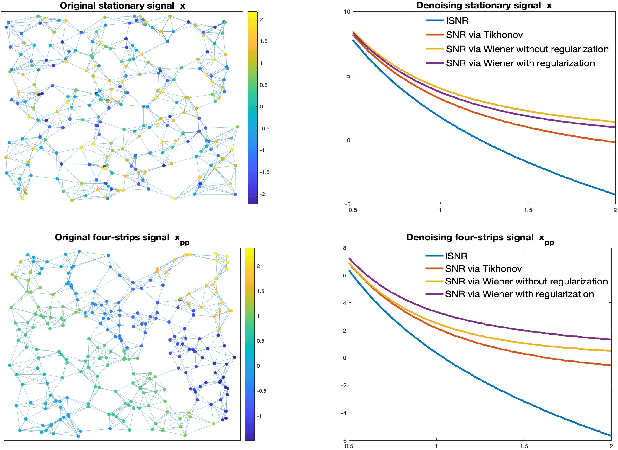

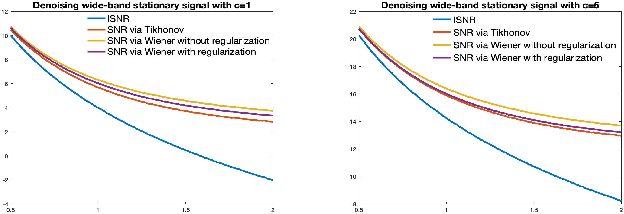

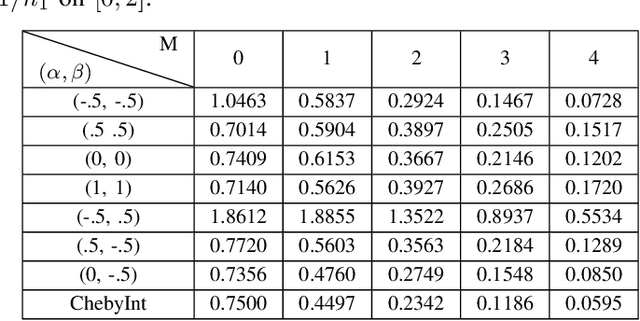

Abstract: In this paper, we consider Wiener filters to reconstruct deterministic and (wide-band) stationary graph signals from their observations corrupted by random noises, and we propose distributed algorithms to implement Wiener filters and inverse filters on networks in which agents are equipped with a data processing subsystem for limited data storage and computation power, and with a one-hop communication subsystem for direct data exchange only with their adjacent agents. The proposed distributed polynomial approximation algorithm is an exponentially convergent quasi-Newton method based on Jacobi polynomial approximation and Chebyshev interpolation polynomial approximation to analytic functions on a cube. Our numerical simulations show that the Wiener filtering procedure performs better on denoising (wide-band) stationary signals than the Tikhonov regularization approach does, and that the proposed polynomial approximation algorithms converge faster than the Chebyshev polynomial approximation algorithm and the gradient descent algorithm do in the implementation of an inverse filtering procedure associated with a polynomial filter of commutative graph shifts.
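For orientation, here is a minimal sketch of the gradient descent baseline mentioned in the abstract, applied to an inverse filtering problem. It is not the proposed quasi-Newton polynomial approximation algorithm. The graph, the single shift `shift`, the filter `I + 0.5 S`, the step size, and the function name `inverse_filter_gd` are all illustrative assumptions. Because the filter is a polynomial in the graph shift, each multiplication by it can in principle be carried out through one-hop exchanges between adjacent agents, although the code below simply uses dense matrices.

```python
import numpy as np

# Undirected path graph on 8 nodes; its symmetric normalized Laplacian
# serves as the graph shift S (an assumed choice), with spectrum in [0, 2].
n = 8
adj = np.eye(n, k=1) + np.eye(n, k=-1)
deg = adj.sum(axis=1)
shift = np.eye(n) - adj / np.sqrt(np.outer(deg, deg))

# Polynomial filter H = h(S) = I + 0.5 S, whose eigenvalues lie in [1, 2].
filt = np.eye(n) + 0.5 * shift

rng = np.random.default_rng(0)
x_true = rng.standard_normal(n)
y = filt @ x_true  # noiseless filtered observation

def inverse_filter_gd(filt, y, step, n_iter):
    """Recover x from y = H x by gradient descent on 0.5 * ||H x - y||^2."""
    x = np.zeros_like(y)
    for _ in range(n_iter):
        x = x - step * (filt.T @ (filt @ x - y))
    return x

# A step size of 1 / ||H||_2^2 keeps the iteration contractive.
step = 1.0 / np.linalg.norm(filt, 2) ** 2
x_rec = inverse_filter_gd(filt, y, step, n_iter=200)
error = np.linalg.norm(x_rec - x_true)
```

With a well-conditioned filter like this one, the reconstruction error shrinks geometrically. The abstract's point is that the proposed polynomial approximation algorithms converge faster than this baseline.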

Aggressive Quadrotor Flight Using Curiosity-Driven Reinforcement Learning

Mar 26, 2022

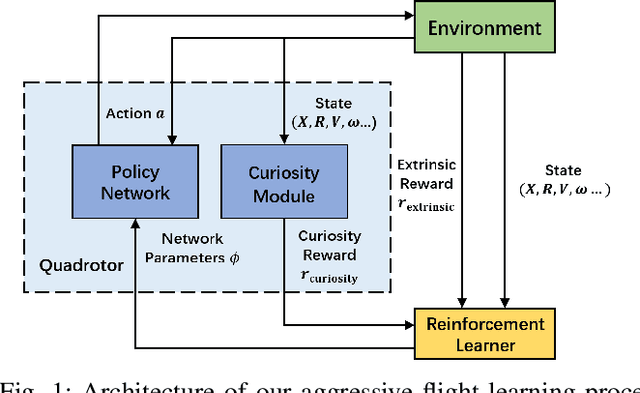

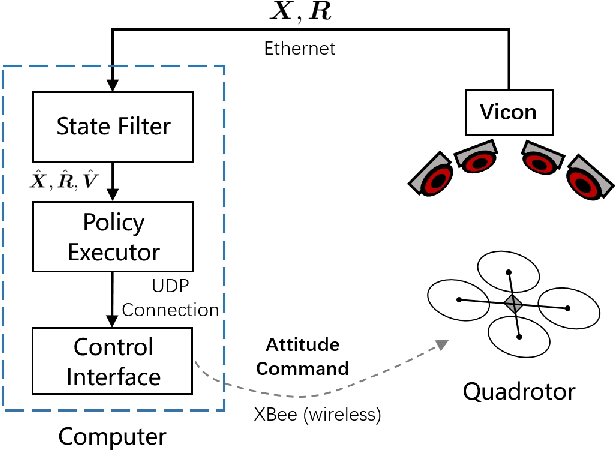

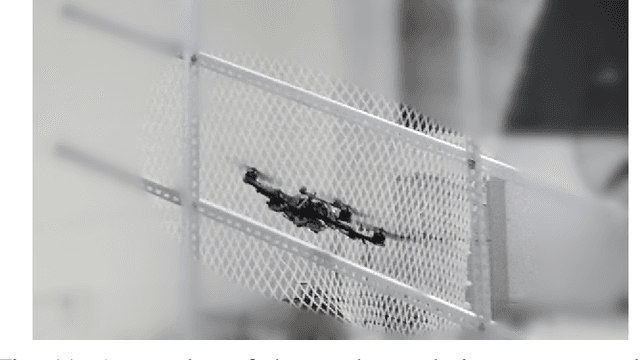

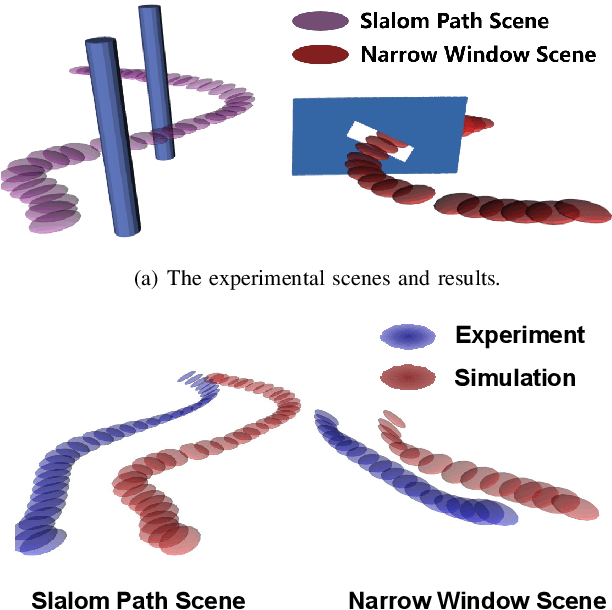

Abstract: The ability to perform aggressive movements, which are called aggressive flights, is important for quadrotors during navigation. However, aggressive quadrotor flights are still a great challenge to practical applications. The existing solutions to aggressive flights heavily rely on a predefined trajectory, which is a time-consuming preprocessing step. To avoid such path planning, we propose a curiosity-driven reinforcement learning method for aggressive flight missions, and a similarity-based curiosity module is introduced to speed up the training procedure. A branch structure exploration (BSE) strategy is also applied to guarantee the robustness of the policy and to ensure that the policy trained in simulations can be performed in real-world experiments directly. The experimental results in simulations demonstrate that our reinforcement learning algorithm performs well in aggressive flight tasks, speeds up the convergence process and improves the robustness of the policy. Besides, our algorithm shows satisfactory simulation-to-real transferability and performs well in real-world experiments.
