Pushpak Bhattacharyya

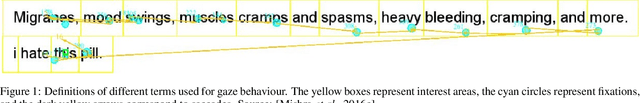

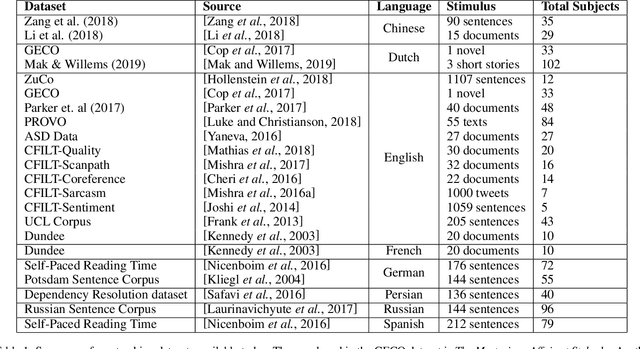

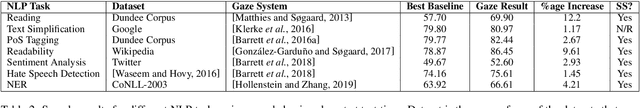

A Survey on Using Gaze Behaviour for Natural Language Processing

Jan 03, 2022

Abstract:Gaze behaviour has been used as a way to gather cognitive information for a number of years. In this paper, we discuss the use of gaze behaviour in solving different tasks in natural language processing (NLP) without having to record it at test time. This is because the collection of gaze behaviour is a costly task, both in terms of time and money. Hence, in this paper, we focus on research done to alleviate the need for recording gaze behaviour at run time. We also mention different eye tracking corpora in multiple languages, which are currently available and can be used in natural language processing. We conclude our paper by discussing applications in a domain - education - and how learning gaze behaviour can help in solving the tasks of complex word identification and automatic essay grading.

Utilizing Wordnets for Cognate Detection among Indian Languages

Dec 30, 2021

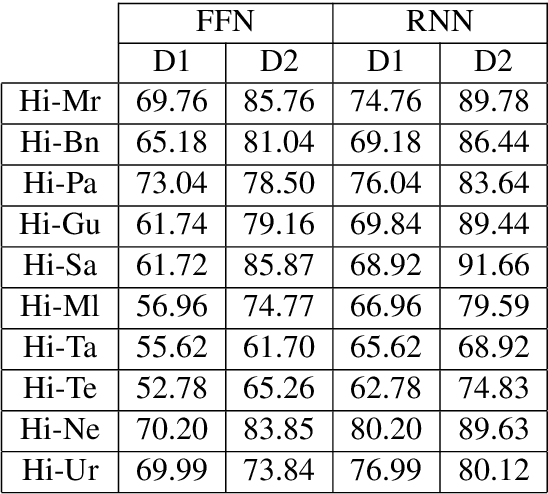

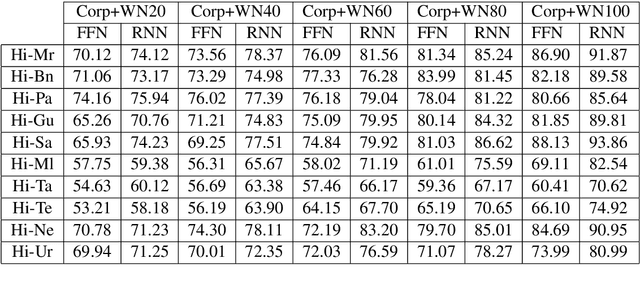

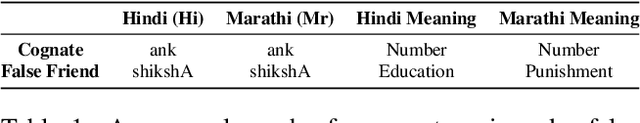

Abstract:Automatic Cognate Detection (ACD) is a challenging task which has been utilized to help NLP applications like Machine Translation, Information Retrieval and Computational Phylogenetics. Unidentified cognate pairs can pose a challenge to these applications and result in a degradation of performance. In this paper, we detect cognate word pairs among ten Indian languages with Hindi and use deep learning methodologies to predict whether a word pair is cognate or not. We identify IndoWordnet as a potential resource to detect cognate word pairs based on orthographic similarity-based methods and train neural network models using the data obtained from it. We identify parallel corpora as another potential resource and perform the same experiments for them. We also validate the contribution of Wordnets through further experimentation and report improved performance of up to 26%. We discuss the nuances of cognate detection among closely related Indian languages and release the lists of detected cognates as a dataset. We also observe the behaviour of, to an extent, unrelated Indian language pairs and release the lists of detected cognates among them as well.
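
The abstract mentions orthographic similarity-based methods without naming a specific measure. As a minimal sketch, assuming normalised edit distance as the measure and assuming both words have already been transliterated into a common script, a candidate filter could look like the following. The function names and the `threshold` value are hypothetical and do not come from the paper.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance between two strings via row-by-row dynamic programming."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def orthographic_similarity(a: str, b: str) -> float:
    """Normalised edit similarity in [0, 1]; 1.0 means identical strings."""
    if not a and not b:
        return 1.0
    return 1.0 - levenshtein(a, b) / max(len(a), len(b))


def is_candidate_cognate(a: str, b: str, threshold: float = 0.5) -> bool:
    # `threshold` is an illustrative cut-off, not a value reported in the paper.
    return orthographic_similarity(a, b) >= threshold


if __name__ == "__main__":
    # Word pair borrowed from another abstract on this page.
    print(orthographic_similarity("hund", "hound"))
    print(is_candidate_cognate("fonema", "phoneme"))
```

A filter like this only proposes candidates; the abstract describes training neural network models on the resulting data to make the final cognate decision.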

"A Passage to India": Pre-trained Word Embeddings for Indian Languages

Dec 27, 2021

Abstract:Dense word vectors or 'word embeddings' which encode semantic properties of words, have now become integral to NLP tasks like Machine Translation (MT), Question Answering (QA), Word Sense Disambiguation (WSD), and Information Retrieval (IR). In this paper, we use various existing approaches to create multiple word embeddings for 14 Indian languages. We place these embeddings for all these languages, viz., Assamese, Bengali, Gujarati, Hindi, Kannada, Konkani, Malayalam, Marathi, Nepali, Odiya, Punjabi, Sanskrit, Tamil, and Telugu in a single repository. Relatively newer approaches that emphasize catering to context (BERT, ELMo, etc.) have shown significant improvements, but require a large amount of resources to generate usable models. We release pre-trained embeddings generated using both contextual and non-contextual approaches. We also use MUSE and XLM to train cross-lingual embeddings for all pairs of the aforementioned languages. To show the efficacy of our embeddings, we evaluate our embedding models on XPOS, UPOS and NER tasks for all these languages. We release a total of 436 models using 8 different approaches. We hope they are useful for the resource-constrained Indian language NLP. The title of this paper refers to the famous novel 'A Passage to India' by E.M. Forster, published initially in 1924.

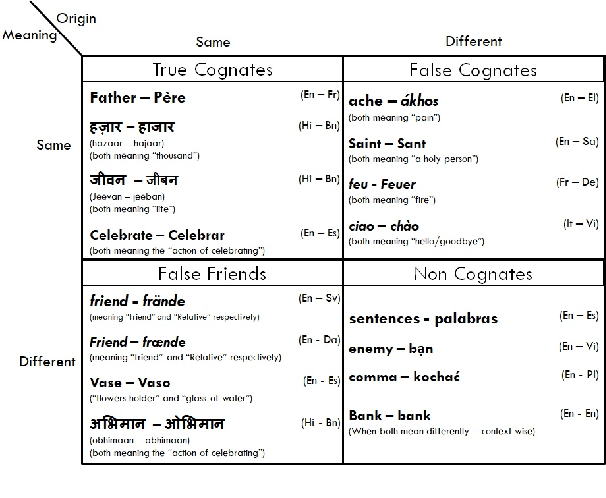

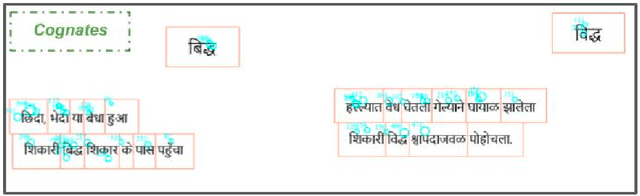

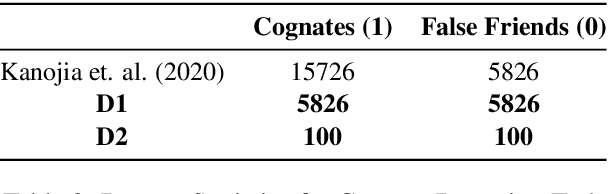

Challenge Dataset of Cognates and False Friend Pairs from Indian Languages

Dec 17, 2021

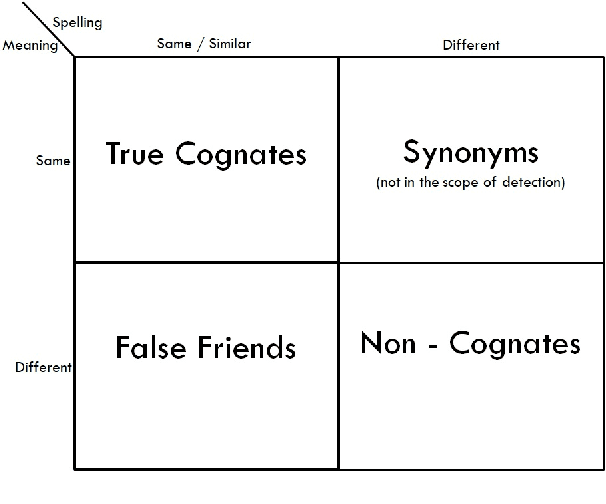

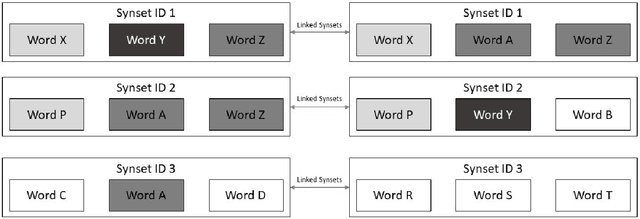

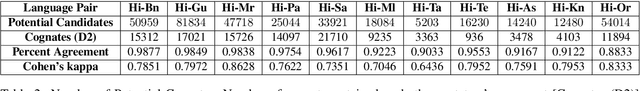

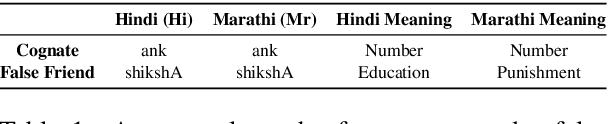

Abstract:Cognates are present in multiple variants of the same text across different languages (e.g., "hund" in German and "hound" in English both mean "dog"). They pose a challenge to various Natural Language Processing (NLP) applications such as Machine Translation, Cross-lingual Sense Disambiguation, Computational Phylogenetics, and Information Retrieval. A possible solution to address this challenge is to identify cognates across language pairs. In this paper, we describe the creation of two cognate datasets for twelve Indian languages, namely Sanskrit, Hindi, Assamese, Oriya, Kannada, Gujarati, Tamil, Telugu, Punjabi, Bengali, Marathi, and Malayalam. We digitize the cognate data from an Indian language cognate dictionary and utilize linked Indian language Wordnets to generate cognate sets. Additionally, we use the Wordnet data to create a False Friends' dataset for eleven language pairs. We evaluate the efficacy of our dataset using previously available baseline cognate detection approaches. We also perform a manual evaluation with the help of lexicographers and release the curated gold-standard dataset with this paper.

Harnessing Cross-lingual Features to Improve Cognate Detection for Low-resource Languages

Dec 16, 2021

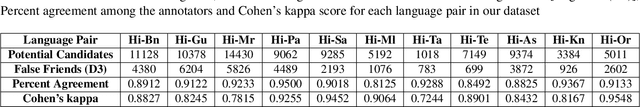

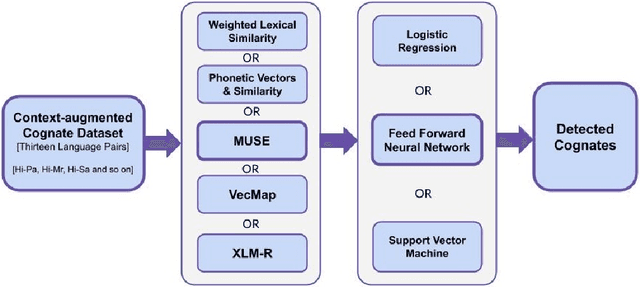

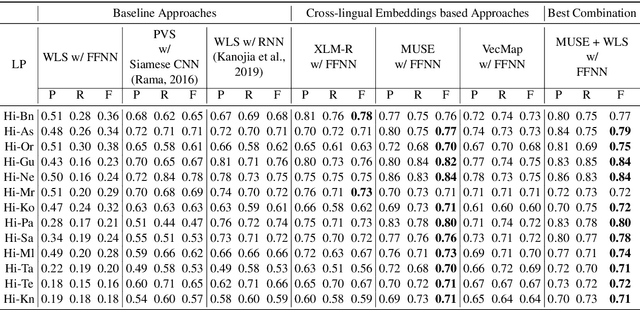

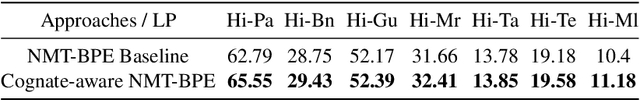

Abstract:Cognates are variants of the same lexical form across different languages; for example, 'fonema' in Spanish and 'phoneme' in English are cognates, both of which mean 'a unit of sound'. The task of automatic detection of cognates between any two languages can help downstream NLP tasks such as Cross-lingual Information Retrieval, Computational Phylogenetics, and Machine Translation. In this paper, we demonstrate the use of cross-lingual word embeddings for detecting cognates among fourteen Indian languages. Our approach introduces the use of context from a knowledge graph to generate improved feature representations for cognate detection. We then evaluate the impact of our cognate detection mechanism on neural machine translation (NMT) as a downstream task. We evaluate our methods to detect cognates on a challenging dataset of twelve Indian languages, namely, Sanskrit, Hindi, Assamese, Oriya, Kannada, Gujarati, Tamil, Telugu, Punjabi, Bengali, Marathi, and Malayalam. Additionally, we create evaluation datasets for two more Indian languages, Konkani and Nepali. We observe an improvement of up to 18 percentage points in F-score for cognate detection. Furthermore, we observe that cognates extracted using our method help improve NMT quality by up to 2.76 BLEU. We also release our code, newly constructed datasets and cross-lingual models publicly.

Cognition-aware Cognate Detection

Dec 15, 2021

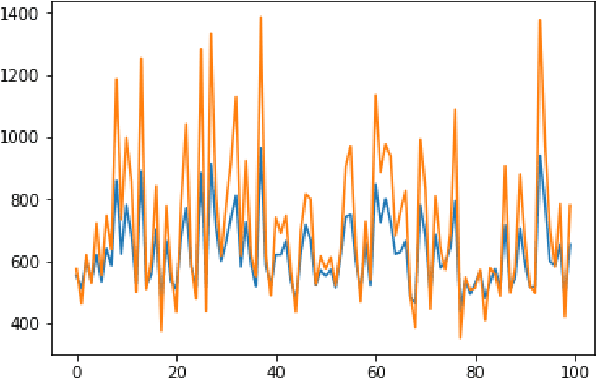

Abstract:Automatic detection of cognates helps downstream NLP tasks of Machine Translation, Cross-lingual Information Retrieval, Computational Phylogenetics and Cross-lingual Named Entity Recognition. Previous approaches for the task of cognate detection use feature sets based on orthographic, phonetic and semantic similarity. In this paper, we propose a novel method for enriching the feature sets with cognitive features extracted from human readers' gaze behaviour. We collect gaze behaviour data for a small sample of cognates and show that extracted cognitive features help the task of cognate detection. However, gaze data collection and annotation is a costly task. We use the collected gaze behaviour data to predict cognitive features for a larger sample and show that predicted cognitive features also significantly improve the task performance. We report improvements of 10% with the collected gaze features, and 12% using the predicted gaze features, over the previously proposed approaches. Furthermore, we release the collected gaze behaviour data along with our code and cross-lingual models.

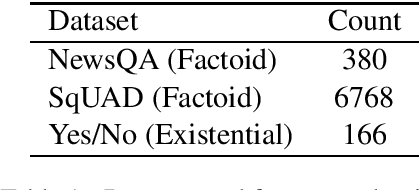

Natural Answer Generation: From Factoid Answer to Full-length Answer using Grammar Correction

Dec 07, 2021

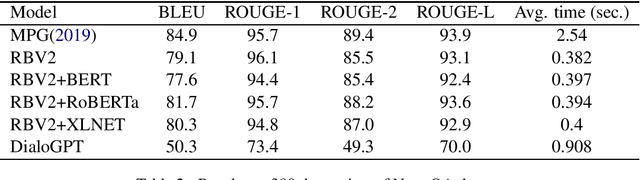

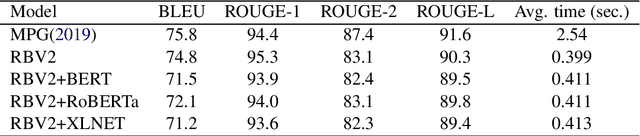

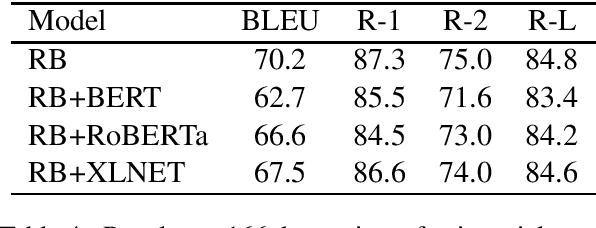

Abstract:Question Answering systems these days typically use template-based language generation. Though adequate for a domain-specific task, these systems are too restrictive and predefined for domain-independent systems. This paper proposes a system that outputs a full-length answer given a question and the extracted factoid answer (short spans such as named entities) as the input. Our system uses constituency and dependency parse trees of questions. A transformer-based Grammar Error Correction model, GECToR (2020), is used as a post-processing step for better fluency. We compare our system with (i) Modified Pointer Generator (SOTA) and (ii) Fine-tuned DialoGPT for factoid questions. We also test our approach on existential (yes-no) questions with better results. Our model generates more accurate and fluent answers than the state-of-the-art (SOTA) approaches. The evaluation is done on the NewsQA and SQuAD datasets, with an increment of 0.4 and 0.9 percentage points in ROUGE-1 score respectively. Also, the inference time is reduced by 85% as compared to the SOTA. The improved datasets used for our evaluation will be released as part of the research contribution.

Tapping BERT for Preposition Sense Disambiguation

Nov 27, 2021

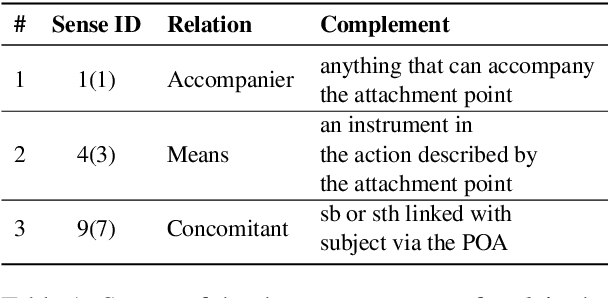

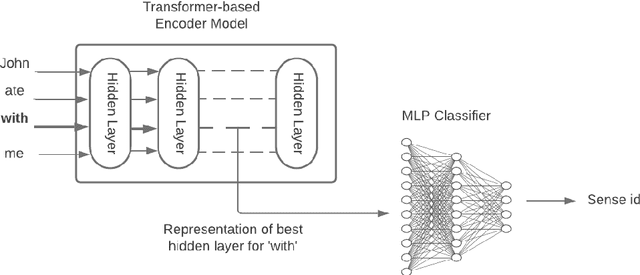

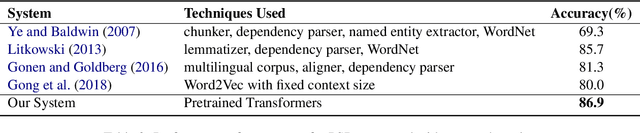

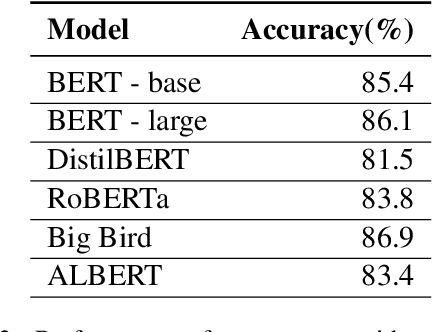

Abstract:Prepositions are frequently occurring polysemous words. Disambiguation of prepositions is crucial in tasks like semantic role labelling, question answering, text entailment, and noun compound paraphrasing. In this paper, we propose a novel methodology for preposition sense disambiguation (PSD), which does not use any linguistic tools. In a supervised setting, the machine learning model is presented with sentences wherein prepositions have been annotated with senses. These senses are IDs in what is called The Preposition Project (TPP). We use the hidden layer representations from pre-trained BERT and BERT variants. The latent representations are then classified into the correct sense ID using a Multi Layer Perceptron. The dataset used for this task is from SemEval-2007 Task-6. Our methodology gives an accuracy of 86.85% which is better than the state-of-the-art.

"So You Think You're Funny?": Rating the Humour Quotient in Standup Comedy

Oct 25, 2021

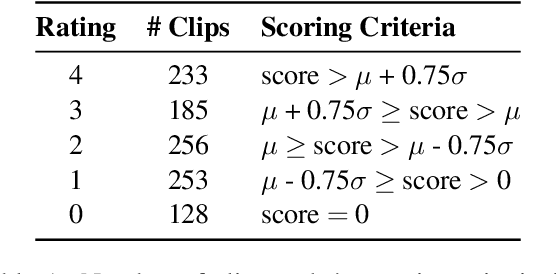

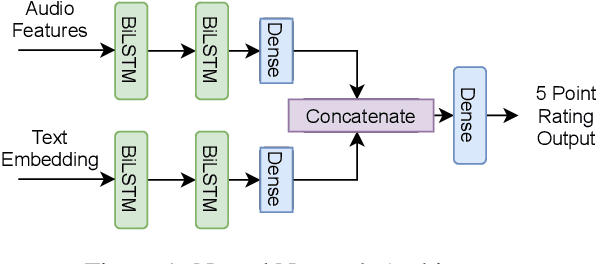

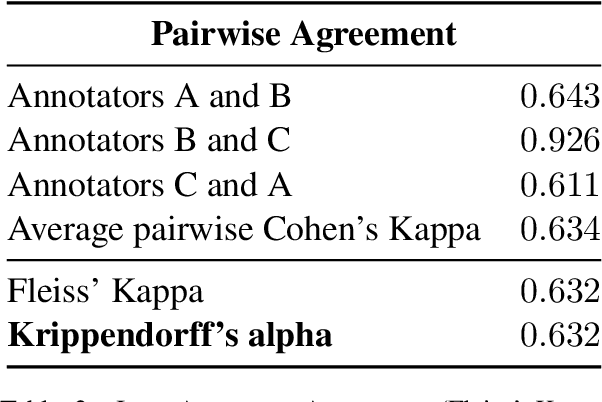

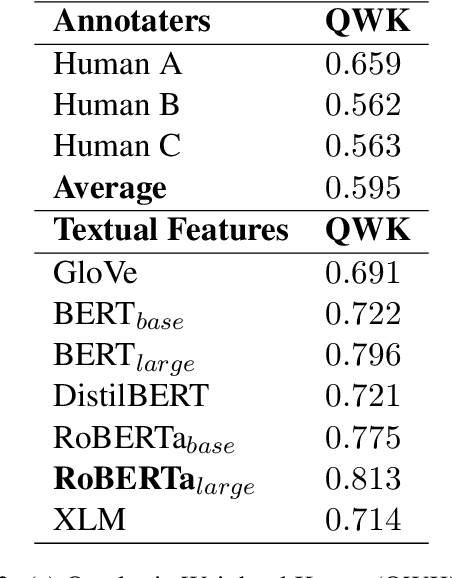

Abstract:Computational Humour (CH) has attracted the interest of Natural Language Processing and Computational Linguistics communities. Creating datasets for automatic measurement of humour quotient is difficult due to multiple possible interpretations of the content. In this work, we create a multi-modal humour-annotated dataset ($\sim$40 hours) using stand-up comedy clips. We devise a novel scoring mechanism to annotate the training data with a humour quotient score using the audience's laughter. The normalized duration (laughter duration divided by the clip duration) of laughter in each clip is used to compute this humour quotient score on a five-point scale (0-4). This method of scoring is validated by comparing with manually annotated scores, wherein a Quadratic Weighted Kappa (QWK) of 0.6 is obtained. We use this dataset to train a model that provides a "funniness" score, on a five-point scale, given the audio and its corresponding text. We compare various neural language models for the task of humour-rating and achieve a QWK of $0.813$. Our "Open Mic" dataset is released for further research along with the code.
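
The scoring scheme and its validation metric can be sketched as follows. The normalised duration is taken directly from the abstract (laughter duration divided by clip duration), and the kappa follows the standard quadratic-weighted definition. The `cutoffs` that map a normalised duration onto the 0-4 scale are purely hypothetical, since the abstract does not state how the bins are chosen.

```python
from typing import Sequence


def normalized_laughter(laughter_seconds: float, clip_seconds: float) -> float:
    """Laughter duration divided by clip duration, as described in the abstract."""
    if clip_seconds <= 0:
        raise ValueError("clip_seconds must be positive")
    return laughter_seconds / clip_seconds


def to_five_point(norm: float,
                  cutoffs: Sequence[float] = (0.05, 0.10, 0.20, 0.30)) -> int:
    # Hypothetical bin edges: the score is the number of cut-offs reached (0-4).
    return sum(norm >= c for c in cutoffs)


def quadratic_weighted_kappa(a: Sequence[int], b: Sequence[int],
                             num_ratings: int = 5) -> float:
    """Standard QWK between two lists of integer ratings in [0, num_ratings)."""
    n, k = len(a), num_ratings
    observed = [[0] * k for _ in range(k)]
    for x, y in zip(a, b):
        observed[x][y] += 1
    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(k)) for j in range(k)]
    num = den = 0.0
    for i in range(k):
        for j in range(k):
            weight = (i - j) ** 2 / (k - 1) ** 2
            num += weight * observed[i][j]              # observed disagreement
            den += weight * hist_a[i] * hist_b[j] / n   # chance disagreement
    return 1.0 if den == 0 else 1.0 - num / den


if __name__ == "__main__":
    score = to_five_point(normalized_laughter(7.2, 60.0))
    print(score)
    print(quadratic_weighted_kappa([0, 1, 2, 3, 4], [0, 1, 2, 3, 4]))
```

QWK penalises a disagreement by the squared distance between the two ratings, which suits an ordinal scale like this one: confusing a 0 with a 4 costs far more than confusing a 3 with a 4.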

COVIDRead: A Large-scale Question Answering Dataset on COVID-19

Oct 05, 2021

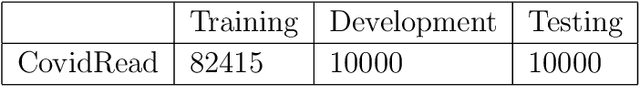

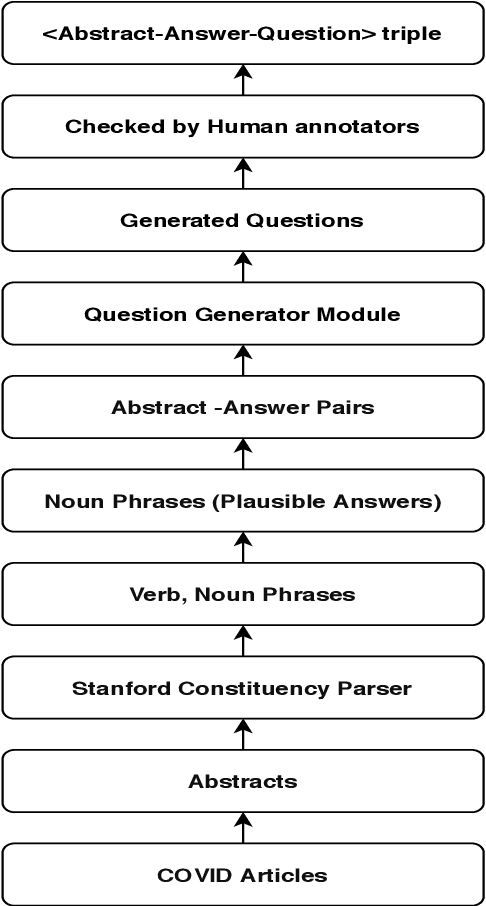

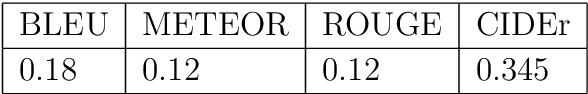

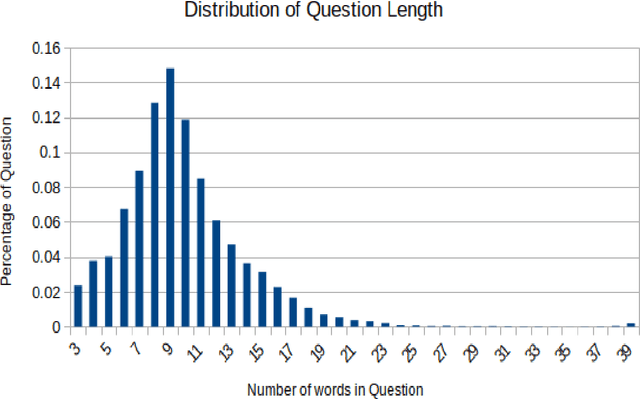

Abstract:During this pandemic situation, extracting any relevant information related to COVID-19 will be immensely beneficial to the community at large. In this paper, we present a very important resource, COVIDRead, a Stanford Question Answering Dataset (SQuAD)-like dataset of more than 100k question-answer pairs. The dataset consists of Context-Answer-Question triples. The questions are primarily constructed from the context in an automated way. After that, the system-generated questions are manually checked by human annotators. This is a precious resource that could serve many purposes, ranging from common people's queries regarding this very uncommon disease to managing articles by editors/associate editors of a journal. We establish several end-to-end neural network based baseline models that attain the lowest F1 of 32.03% and the highest F1 of 37.19%. To the best of our knowledge, we are the first to provide this kind of QA dataset in such a large volume on COVID-19. This dataset creates a new avenue of carrying out research on COVID-19 by providing a benchmark dataset and a baseline model.
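
The abstract reports F1 without saying how it is computed. As a rough sketch, assuming the token-overlap F1 commonly used for SQuAD-style datasets, the score for one predicted answer could be computed as below. This version only lowercases and splits on whitespace; the official SQuAD evaluation script also strips punctuation and articles, so the numbers would not match it exactly.

```python
from collections import Counter


def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between a predicted and a reference answer string."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        # Two empty answers count as a match; one empty answer is a miss.
        return float(pred_tokens == ref_tokens)
    # Multiset intersection counts each shared token at most min(count) times.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Made-up answer strings for illustration, not taken from COVIDRead.
    print(token_f1("respiratory droplets", "through respiratory droplets"))
```

A dataset-level score would typically average this value over all questions, taking the maximum over references when a question has several.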
