Pushpak Bhattacharyya

There is No Big Brother or Small Brother: Knowledge Infusion in Language Models for Link Prediction and Question Answering

Jan 10, 2023

Abstract: The integration of knowledge graphs with deep learning is thriving in improving the performance of various natural language processing (NLP) tasks. In this paper, we focus on knowledge-infused link prediction and question answering using the language models T5 and BLOOM across three domains: Aviation, Movie, and Web. In this context, we infuse knowledge into large and small language models, study their performance, and find it to be similar. For the link prediction task on the Aviation Knowledge Graph, we obtain a 0.2 hits@1 score using T5-small, T5-base, T5-large, and BLOOM. Using template-based scripts, we create a set of 1 million synthetic factoid QA pairs in the aviation domain from National Transportation Safety Board (NTSB) reports. On our curated QA pairs, the three models of T5 achieve a 0.7 hits@1 score. We validate our findings with the paired Student's t-test and Cohen's kappa scores. For link prediction on the Aviation Knowledge Graph using T5-small and T5-large, we obtain a Cohen's kappa score of 0.76, showing substantial agreement between the models. Thus, we infer that small language models perform similarly to large language models with the infusion of knowledge.
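The two evaluation measures named in this abstract, hits@1 and Cohen's kappa, can be illustrated with a minimal sketch. The toy predictions, the function names, and the choice to compute kappa over per-query correctness flags are all assumptions made for illustration; the abstract does not state exactly which model outputs were compared.

```python
from collections import Counter


def hits_at_1(ranked_predictions, gold_answers):
    """Fraction of queries whose top-ranked prediction equals the gold answer."""
    hits = sum(
        1
        for ranked, gold in zip(ranked_predictions, gold_answers)
        if ranked and ranked[0] == gold
    )
    return hits / len(gold_answers)


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two equally long sequences of categorical labels."""
    n = len(labels_a)
    # Observed agreement: share of positions where both label sequences agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected chance agreement from each sequence's label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both sequences use one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical toy data: gold tail entities and ranked predictions of two models.
gold = ["engine", "pilot", "wing", "fuel"]
small_model = [["engine", "wing"], ["crew"], ["wing"], ["fuel"]]
large_model = [["engine"], ["pilot"], ["wing"], ["oil"]]

# Per-query correctness flags (1 = top prediction is correct), used as labels.
small_correct = [int(r[0] == g) for r, g in zip(small_model, gold)]
large_correct = [int(r[0] == g) for r, g in zip(large_model, gold)]

print(hits_at_1(small_model, gold), hits_at_1(large_model, gold))
print(cohens_kappa(small_correct, large_correct))
```

On this toy data both models reach the same hits@1 yet disagree on which queries they answer correctly, which is why an agreement statistic gives information that the aggregate score alone does not.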

Knowledge Graph Construction and Its Application in Automatic Radiology Report Generation from Radiologist's Dictation

Jun 14, 2022

Abstract: Conventionally, the radiologist prepares the diagnosis notes and shares them with the transcriptionist. Then the transcriptionist prepares a preliminary formatted report referring to the notes, and finally, the radiologist reviews the report, corrects the errors, and signs off. This workflow causes significant delays and errors in the report. In the current research work, we focus on applications of NLP techniques like Information Extraction (IE) and domain-specific Knowledge Graph (KG) to automatically generate radiology reports from a radiologist's dictation. This paper focuses on KG construction for each organ by extracting information from an existing large corpus of free-text radiology reports. We develop an information extraction pipeline that combines rule-based, pattern-based, and dictionary-based techniques with lexical-semantic features to extract entities and relations. Missing information in short dictation can be accessed from the KGs to generate pathological descriptions and hence the radiology report. The generated pathological descriptions are evaluated using semantic similarity metrics, which show 97% similarity with gold-standard pathological descriptions. Also, our analysis shows that our IE module performs better than the OpenIE tool for the radiology domain. Furthermore, we include a manual qualitative analysis from radiologists, which shows that 80-85% of the generated reports are correctly written, and the remaining are partially correct.

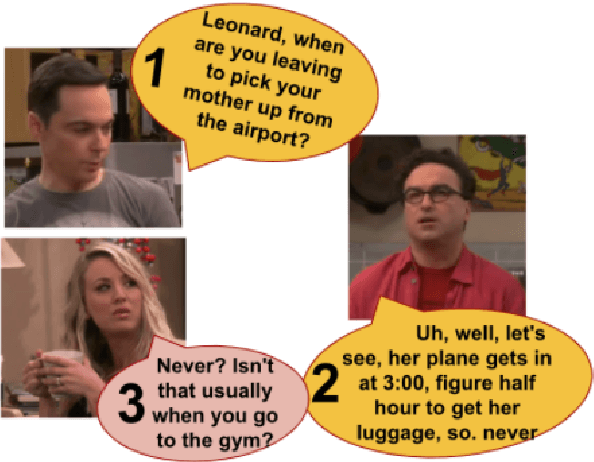

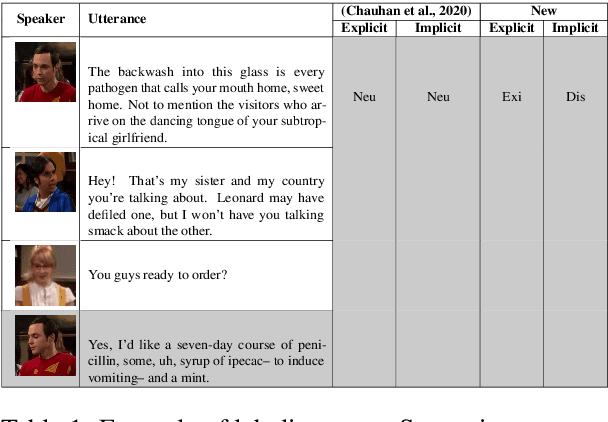

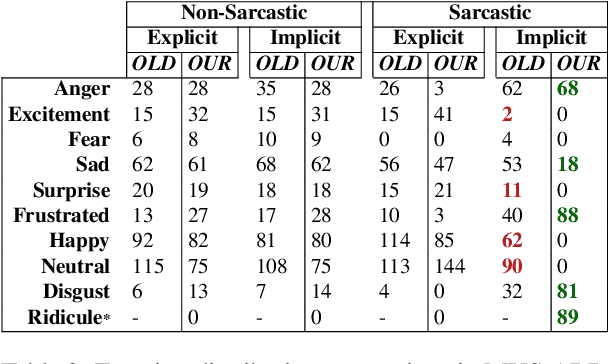

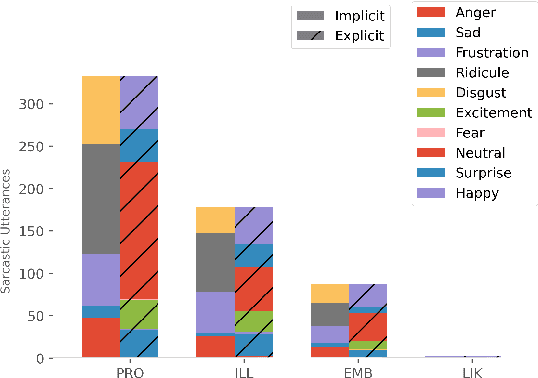

A Multimodal Corpus for Emotion Recognition in Sarcasm

Jun 05, 2022

Abstract: While sentiment and emotion analysis have been studied extensively, the relationship between sarcasm and emotion has largely remained unexplored. A sarcastic expression may have a variety of underlying emotions. For example, "I love being ignored" belies sadness, while "my mobile is fabulous with a battery backup of only 15 minutes!" expresses frustration. Detecting the emotion behind a sarcastic expression is a non-trivial yet important task. We undertake the task of detecting the emotion in a sarcastic statement, which, to the best of our knowledge, is hitherto unexplored. We start with the recently released multimodal sarcasm detection dataset (MUStARD) pre-annotated with 9 emotions. We identify and correct 343 incorrect emotion labels (out of 690). We double the size of the dataset and label it with emotions along with valence and arousal, which are important indicators of emotional intensity. Finally, we label each sarcastic utterance with one of the four sarcasm types (Propositional, Embedded, Like-prefixed, and Illocutionary), with the goal of advancing sarcasm detection research. Exhaustive experimentation with multimodal (text, audio, and video) fusion models establishes a benchmark for exact emotion recognition in sarcasm and outperforms the state-of-the-art in sarcasm detection. We release the dataset enriched with various annotations and the code for research purposes: https://github.com/apoorva-nunna/MUStARD_Plus_Plus

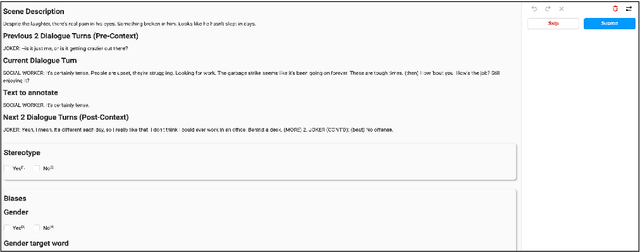

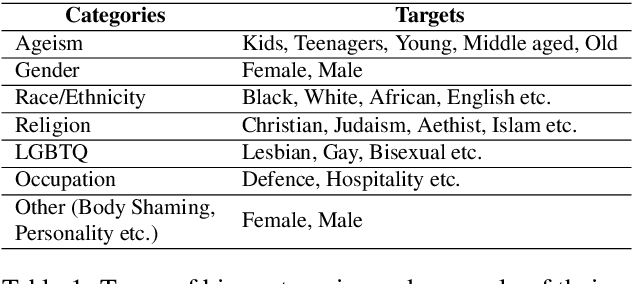

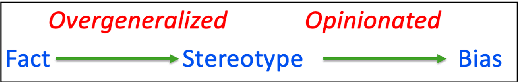

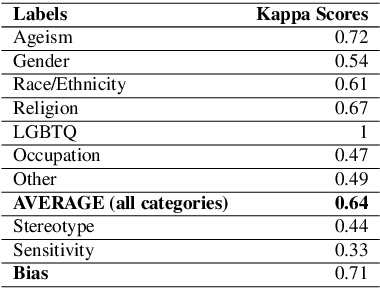

Hollywood Identity Bias Dataset: A Context Oriented Bias Analysis of Movie Dialogues

Jun 01, 2022

Abstract: Movies reflect society and also hold the power to transform opinions. Social biases and stereotypes present in movies can cause extensive damage due to their reach. These biases are not always demanded by the storyline but can creep in as the author's bias. Movie production houses would prefer to ascertain that the bias present in a script is the story's demand. Today, when deep learning models can give human-level accuracy in multiple tasks, having an AI solution to identify the biases present in the script at the writing stage can help them avoid the inconvenience of stalled release, lawsuits, etc. Since AI solutions are data intensive and there exists no domain-specific data to address the problem of biases in scripts, we introduce a new dataset of movie scripts that are annotated for identity bias. The dataset contains dialogue turns annotated for (i) bias labels for seven categories, viz., gender, race/ethnicity, religion, age, occupation, LGBTQ, and other, which contains biases like body shaming, personality bias, etc., (ii) labels for sensitivity, stereotype, sentiment, emotion, and emotion intensity, (iii) all labels annotated with context awareness, (iv) target groups and reason for bias labels, and (v) an expert-driven group-validation process for high-quality annotations. We also report various baseline performances for bias identification and category detection on our dataset.

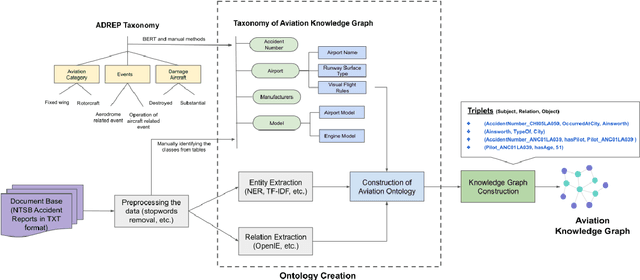

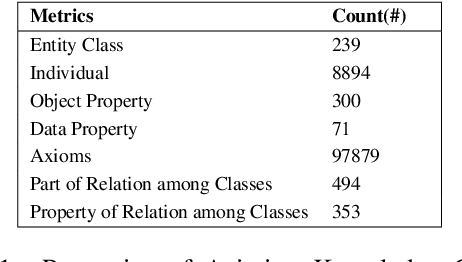

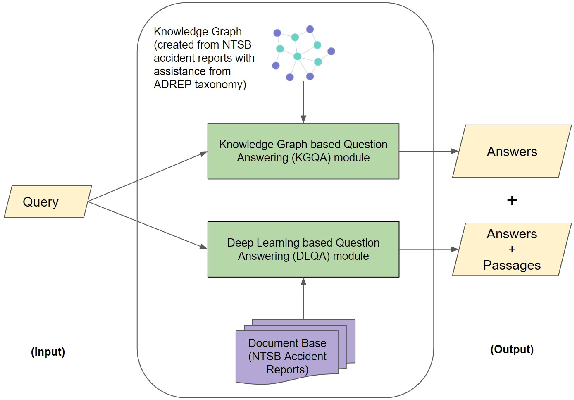

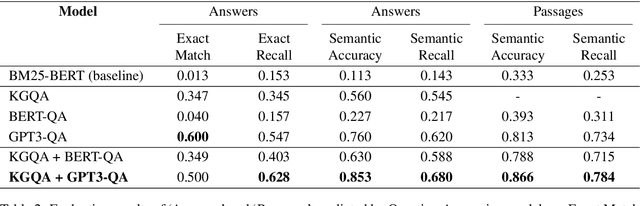

Knowledge Graph -- Deep Learning: A Case Study in Question Answering in Aviation Safety Domain

May 31, 2022

Abstract: In the commercial aviation domain, there are a large number of documents, such as accident reports (NTSB, ASRS) and regulatory directives (ADs). There is a need for a system to access these diverse repositories efficiently in order to service needs in the aviation industry, such as maintenance, compliance, and safety. In this paper, we propose a Knowledge Graph (KG) guided Deep Learning (DL) based Question Answering (QA) system for aviation safety. We construct a Knowledge Graph from Aircraft Accident reports and contribute this resource to the community of researchers. The efficacy of this resource is tested and proved by the aforesaid QA system. Natural Language Queries constructed from the documents mentioned above are converted into SPARQL (the interface language of the RDF graph database) queries and answered. On the DL side, we have two different QA models: (i) BERT QA, which is a pipeline of Passage Retrieval (Sentence-BERT based) and Question Answering (BERT based), and (ii) the recently released GPT-3. We evaluate our system on a set of queries created from the accident reports. Our combined QA system achieves a 9.3% increase in accuracy over GPT-3 and a 40.3% increase over BERT QA. Thus, we infer that KG-DL performs better than either singly.

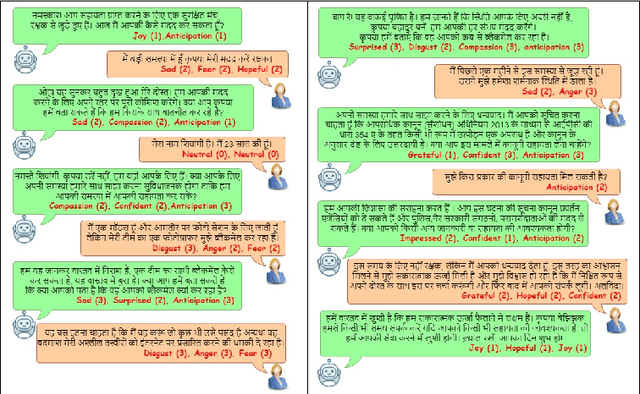

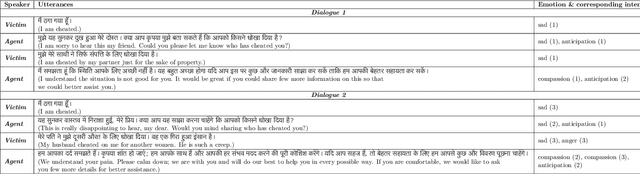

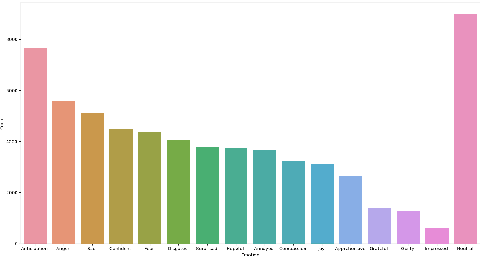

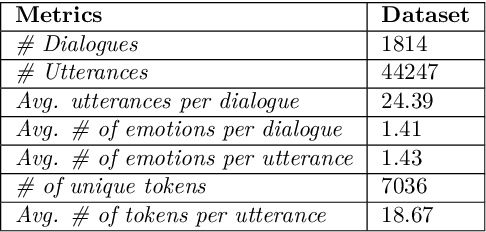

EmoInHindi: A Multi-label Emotion and Intensity Annotated Dataset in Hindi for Emotion Recognition in Dialogues

May 27, 2022

Abstract: The long-standing goal of Artificial Intelligence (AI) has been to create human-like conversational systems. Such systems should have the ability to develop an emotional connection with the users, hence emotion recognition in dialogues is an important task. Emotion detection in dialogues is a challenging task because humans usually convey multiple emotions with varying degrees of intensity in a single utterance. Moreover, emotion in an utterance of a dialogue may be dependent on previous utterances, making the task more complex. Emotion recognition has always been in great demand. However, most of the existing datasets for multi-label emotion and intensity detection in conversations are in English. To this end, we create a large conversational dataset in Hindi named EmoInHindi for multi-label emotion and intensity recognition in conversations, containing 1,814 dialogues with a total of 44,247 utterances. We prepare our dataset in a Wizard-of-Oz manner for mental health and legal counselling of crime victims. Each utterance of the dialogue is annotated with one or more emotion categories from the 16 emotion classes, including neutral, and their corresponding intensity values. We further propose strong contextual baselines that can detect emotion(s) and the corresponding intensity of an utterance given the conversational context.

HiNER: A Large Hindi Named Entity Recognition Dataset

Apr 28, 2022

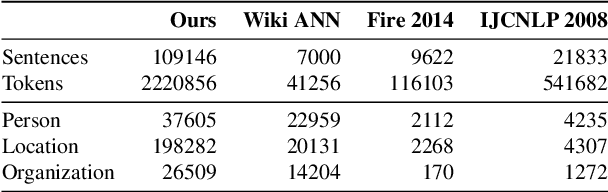

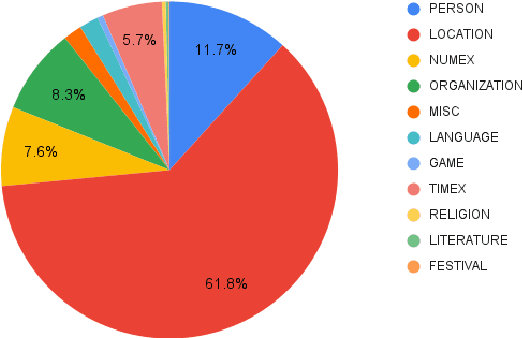

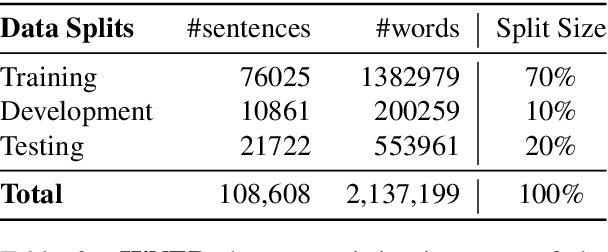

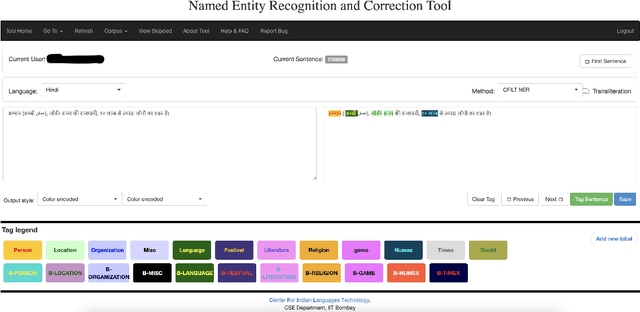

Abstract: Named Entity Recognition (NER) is a foundational NLP task that aims to provide class labels like Person, Location, Organisation, Time, and Number to words in free text. Named Entities can also be multi-word expressions where the additional I-O-B annotation information helps label them during the NER annotation process. While English and European languages have considerable annotated data for the NER task, Indian languages lag on that front -- both in terms of quantity and adherence to annotation standards. This paper releases a significantly sized, standard-abiding Hindi NER dataset containing 109,146 sentences and 2,220,856 tokens, annotated with 11 tags. We discuss the dataset statistics in all their essential detail and provide an in-depth analysis of the NER tag-set used with our data. The statistics of the tag-set in our dataset show a healthy per-tag distribution, especially for prominent classes like Person, Location and Organisation. Since the proof of resource-effectiveness is in building models with the resource and testing the model on benchmark data and against the leader-board entries in shared tasks, we do the same with the aforesaid data. We use different language models to perform the sequence labelling task for NER and show the efficacy of our data by performing a comparative evaluation with models trained on another dataset available for the Hindi NER task. Our dataset helps achieve a weighted F1 score of 88.78 with all the tags and 92.22 when we collapse the tag-set, as discussed in the paper. To the best of our knowledge, no available dataset meets the standards of volume (amount) and variability (diversity), as far as Hindi NER is concerned. We fill this gap through this work, which we hope will significantly help NLP for Hindi. We release this dataset with our code and models at https://github.com/cfiltnlp/HiNER
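The I-O-B scheme mentioned in this abstract marks the first token of an entity with a B- prefix, continuation tokens with I-, and everything else with O. The following is a minimal sketch of that conversion; the function name, the English example sentence, and the tag names PERSON and LOCATION are illustrative assumptions and are not taken from the HiNER tag-set.

```python
def spans_to_iob(tokens, spans):
    """Convert entity spans into one I-O-B tag per token.

    `spans` holds (start, end_exclusive, label) triples over token indices
    and is assumed to contain no overlapping spans.
    """
    tags = ["O"] * len(tokens)
    for start, end, label in spans:
        tags[start] = f"B-{label}"       # first token of the entity
        for i in range(start + 1, end):  # remaining tokens of the entity
            tags[i] = f"I-{label}"
    return tags


# Hypothetical example with two multi-word entities.
tokens = ["Asha", "Rao", "visited", "New", "Delhi"]
spans = [(0, 2, "PERSON"), (3, 5, "LOCATION")]

for token, tag in zip(tokens, spans_to_iob(tokens, spans)):
    print(token, tag)
```

The B-/I- distinction is what lets a sequence labeller tell two adjacent entities of the same class apart from one longer entity.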

Indian Language Wordnets and their Linkages with Princeton WordNet

Jan 09, 2022

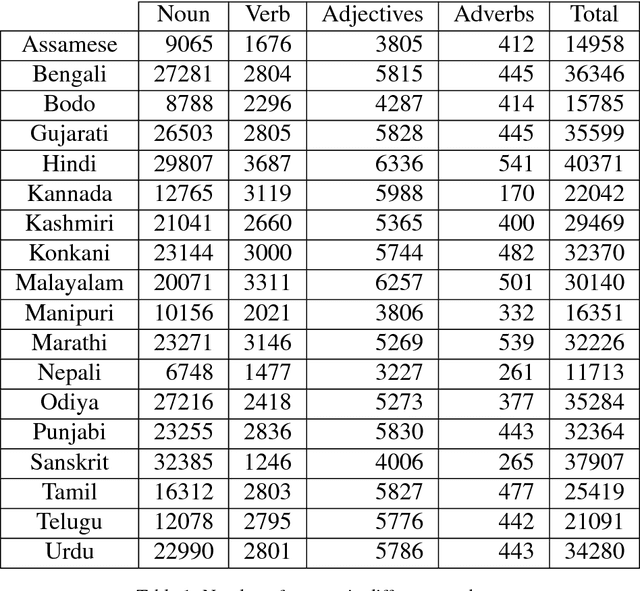

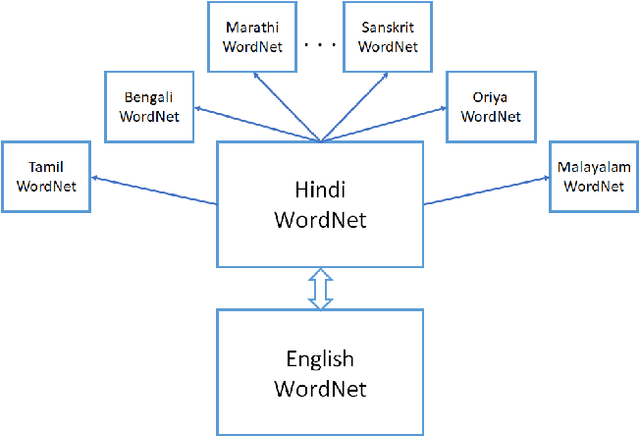

Abstract: Wordnets are rich lexico-semantic resources. Linked wordnets are extensions of wordnets, which link similar concepts in wordnets of different languages. Such resources are extremely useful in many Natural Language Processing (NLP) applications, primarily those based on knowledge-based approaches. In such approaches, these resources are treated as a gold standard/oracle. Thus, it is crucial that these resources hold correct information. Therefore, they are created by human experts. However, human experts in multiple languages are hard to come by. Thus, the community would benefit from the sharing of such manually created resources. In this paper, we release mappings of 18 Indian language wordnets linked with Princeton WordNet. We believe that the availability of such resources will have a direct impact on the progress in NLP for these languages.

Semi-automatic WordNet Linking using Word Embeddings

Jan 05, 2022

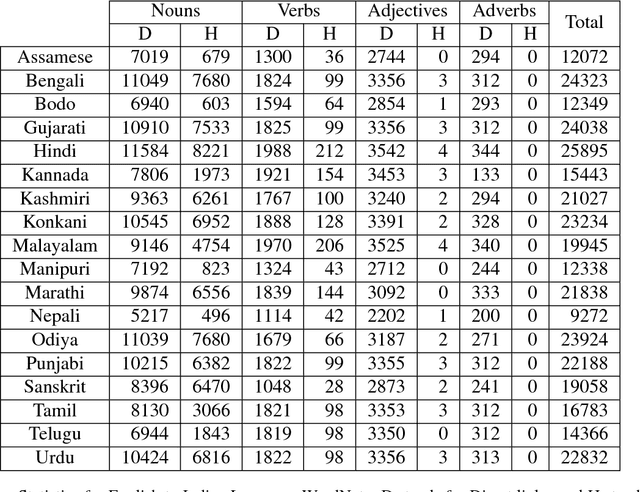

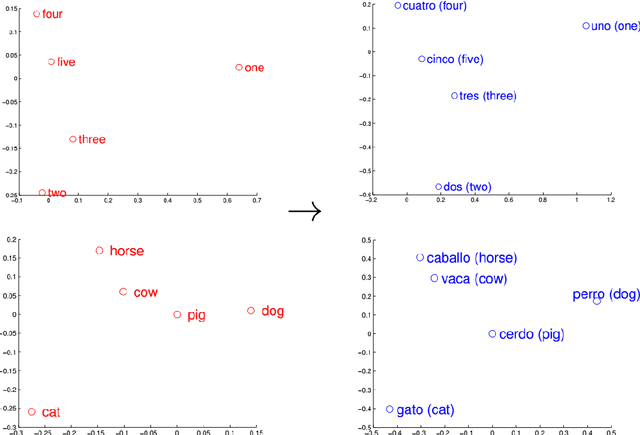

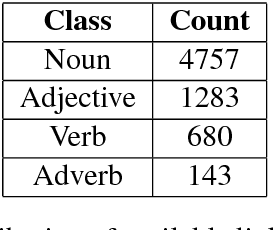

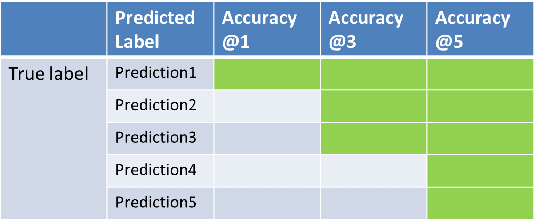

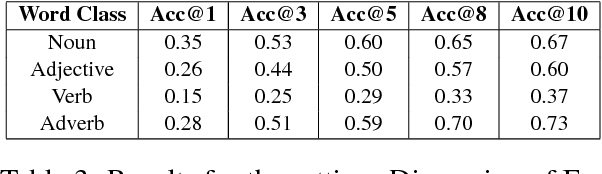

Abstract: Wordnets are rich lexico-semantic resources. Linked wordnets are extensions of wordnets, which link similar concepts in wordnets of different languages. Such resources are extremely useful in many Natural Language Processing (NLP) applications, primarily those based on knowledge-based approaches. In such approaches, these resources are treated as a gold standard/oracle. Thus, it is crucial that these resources hold correct information. Therefore, they are created by human experts. However, manual maintenance of such resources is a tedious and costly affair. Thus, techniques that can aid the experts are desirable. In this paper, we propose an approach to link wordnets. Given a synset of the source language, the approach returns a ranked list of potential candidate synsets in the target language from which the human expert can choose the correct one(s). Our technique is able to retrieve a winner synset in the top 10 ranked list for 60% of all synsets and 70% of noun synsets.
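The ranked-candidate idea described in this abstract can be sketched as follows. The abstract does not say how synsets are embedded or compared, so this sketch assumes each synset already has a vector in a shared space and ranks by cosine similarity; the function names, synset identifiers, and two-dimensional vectors are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_candidates(source_vec, target_synsets, k=10):
    """Return the ids of the k target synsets most similar to the source vector."""
    scored = [(cosine(source_vec, vec), sid) for sid, vec in target_synsets.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [sid for _, sid in scored[:k]]


def hit_rate_at_k(ranked_lists, gold_ids):
    """Share of source synsets whose gold target appears in its ranked list."""
    hits = sum(gold in ranked for ranked, gold in zip(ranked_lists, gold_ids))
    return hits / len(gold_ids)


# Hypothetical toy vectors for one source synset and three target synsets.
source_vec = [1.0, 0.0]
targets = {"t1": [0.9, 0.1], "t2": [0.0, 1.0], "t3": [-1.0, 0.0]}

top2 = rank_candidates(source_vec, targets, k=2)
print(top2)
print(hit_rate_at_k([top2], ["t1"]))
```

A human expert would then pick the correct synset from the returned list, so the measure of interest is whether the gold synset appears anywhere in the top k rather than only at rank one.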

Strategies of Effective Digitization of Commentaries and Sub-commentaries: Towards the Construction of Textual History

Jan 05, 2022

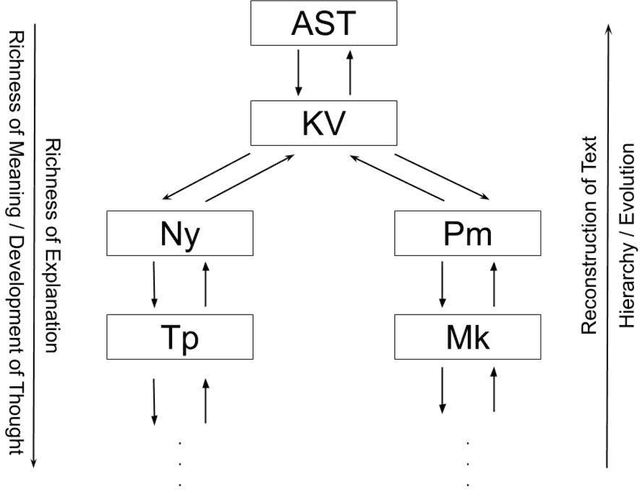

Abstract: This paper describes additional aspects of a digital tool called the 'Textual History Tool'. We describe its various salient features with special reference to those of its features that may help the philologist digitize commentaries and sub-commentaries on a text. This tool captures the historical evolution of a text through various temporal stages, and interrelated data culled from various types of related texts. We use the text of the Kāśikāvṛtti (KV) as a sample text, and with the help of philologists, we digitize the commentaries available to us. We digitize the Nyāsa (Ny), the Padamañjarī (Pm) and sub-commentaries on the KV text known as the Tantrapradīpa (Tp), and the Makaranda (Mk). We divide each commentary and sub-commentary into functional units and describe the methodology and motivation behind the functional unit division. Our functional unit division helps generate more accurate phylogenetic trees for the text, based on distance methods using the data entered in the tool.
