Prasanna Balaprakash

Oak Ridge National Laboratory

A data-centric weak supervised learning for highway traffic incident detection

Dec 17, 2021

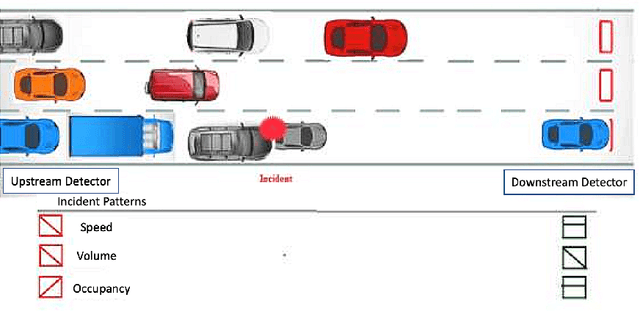

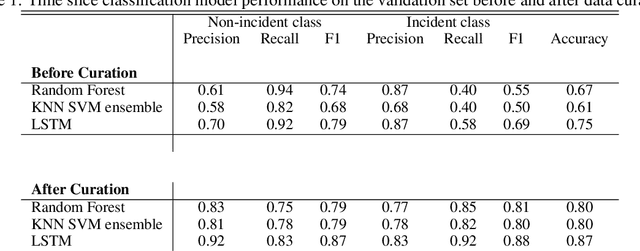

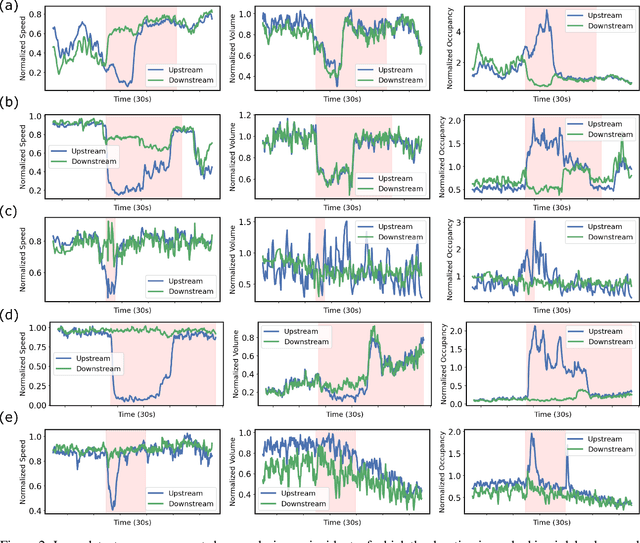

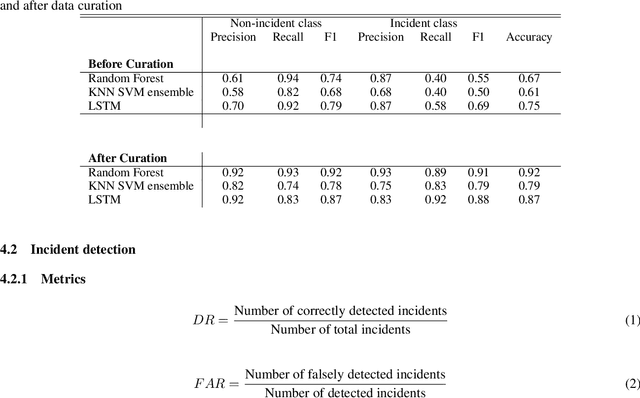

Abstract: Using the data from loop detector sensors for near-real-time detection of traffic incidents in highways is crucial to averting major traffic congestion. While recent supervised machine learning methods offer solutions to incident detection by leveraging human-labeled incident data, the false alarm rate is often too high to be used in practice. Specifically, the inconsistency in the human labeling of the incidents significantly affects the performance of supervised learning models. To that end, we focus on a data-centric approach to improve the accuracy and reduce the false alarm rate of traffic incident detection on highways. We develop a weak supervised learning workflow to generate high-quality training labels for the incident data without the ground truth labels, and we use those generated labels in the supervised learning setup for final detection. This approach comprises three stages. First, we introduce a data preprocessing and curation pipeline that processes traffic sensor data to generate high-quality training data through leveraging labeling functions, which can be domain knowledge-related or simple heuristic rules. Second, we evaluate the training data generated by weak supervision using three supervised learning models -- random forest, k-nearest neighbors, and a support vector machine ensemble -- and long short-term memory classifiers. The results show that the accuracy of all of the models improves significantly after using the training data generated by weak supervision. Third, we develop an online real-time incident detection approach that leverages the model ensemble and the uncertainty quantification while detecting incidents. Overall, we show that our proposed weak supervised learning workflow achieves a high incident detection rate (0.90) and low false alarm rate (0.08).
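
The labeling-function idea mentioned in the abstract can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the function names, the speed and occupancy thresholds, and the simple majority vote are hypothetical and are not taken from the paper, which may combine labeling functions differently.

```python
# Label values; ABSTAIN means a labeling function has no opinion.
ABSTAIN, NORMAL, INCIDENT = -1, 0, 1

# Hypothetical heuristic rules on one loop-detector reading.
# The thresholds are illustrative placeholders, not values from the paper.
def lf_speed_drop(speed_mph, occupancy):
    return INCIDENT if speed_mph < 25.0 else ABSTAIN

def lf_high_occupancy(speed_mph, occupancy):
    return INCIDENT if occupancy > 0.40 else ABSTAIN

def lf_free_flow(speed_mph, occupancy):
    return NORMAL if speed_mph > 55.0 and occupancy < 0.15 else ABSTAIN

LABELING_FUNCTIONS = [lf_speed_drop, lf_high_occupancy, lf_free_flow]

def weak_label(speed_mph, occupancy):
    """Combine labeling-function votes by majority; abstain on ties or no votes."""
    votes = [lf(speed_mph, occupancy) for lf in LABELING_FUNCTIONS]
    votes = [v for v in votes if v != ABSTAIN]
    if not votes:
        return ABSTAIN
    n_incident = sum(v == INCIDENT for v in votes)
    n_normal = len(votes) - n_incident
    if n_incident == n_normal:
        return ABSTAIN
    return INCIDENT if n_incident > n_normal else NORMAL

# Three made-up (speed, occupancy) readings.
readings = [(62.0, 0.08), (18.0, 0.55), (40.0, 0.20)]
labels = [weak_label(s, o) for s, o in readings]
```

Readings that receive `ABSTAIN` would simply be left out of the training set in this sketch; the generated labels for the rest could then feed any of the supervised models named in the abstract.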

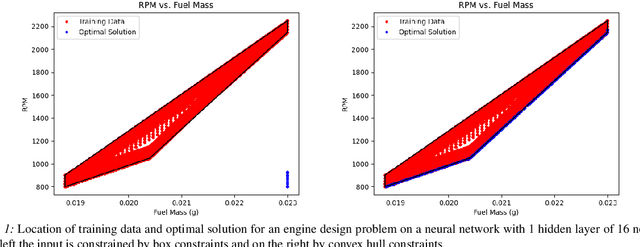

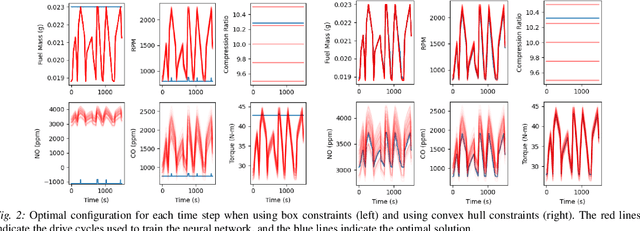

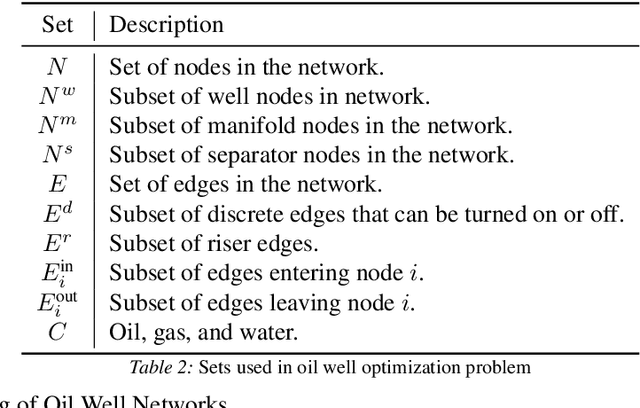

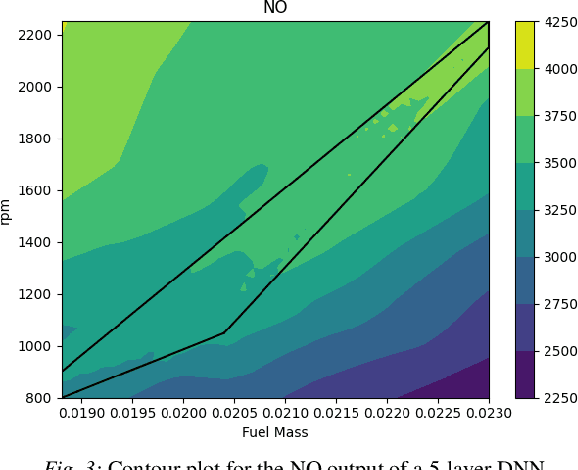

Modeling Design and Control Problems Involving Neural Network Surrogates

Nov 20, 2021

Abstract: We consider nonlinear optimization problems that involve surrogate models represented by neural networks. We demonstrate first how to directly embed neural network evaluation into optimization models, highlight a difficulty with this approach that can prevent convergence, and then characterize stationarity of such models. We then present two alternative formulations of these problems in the specific case of feedforward neural networks with ReLU activation: as a mixed-integer optimization problem and as a mathematical program with complementarity constraints. For the latter formulation we prove that stationarity at a point for this problem corresponds to stationarity of the embedded formulation. Each of these formulations may be solved with state-of-the-art optimization methods, and we show how to obtain good initial feasible solutions for these methods. We compare our formulations on three practical applications arising in the design and control of combustion engines, in the generation of adversarial attacks on classifier networks, and in the determination of optimal flows in an oil well network.
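
For a single ReLU neuron, the two kinds of formulation named in the abstract can be written in their standard textbook forms. The notation below is an assumption for illustration (pre-activation $a = w^\top x + b$, output $y = \max(0, a)$, and known bounds $L \le a \le U$ with $L < 0 < U$); the paper's exact formulations may differ.

```latex
% Mixed-integer (big-M) encoding of y = max(0, a), with binary indicator z:
\begin{align*}
  y &\ge a, & y &\ge 0, \\
  y &\le a - L\,(1 - z), & y &\le U z, & z &\in \{0, 1\}.
\end{align*}

% Complementarity encoding of the same neuron:
\begin{align*}
  0 \le y \;\perp\; y - a \ge 0
  \quad\Longleftrightarrow\quad
  y \ge 0, \quad y - a \ge 0, \quad y\,(y - a) = 0.
\end{align*}
```

In the big-M form, $z = 1$ forces $y = a$ (active neuron) and $z = 0$ forces $y = 0$ with $a \le 0$ (inactive neuron). The complementarity form avoids binary variables at the cost of a nonconvex product constraint.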

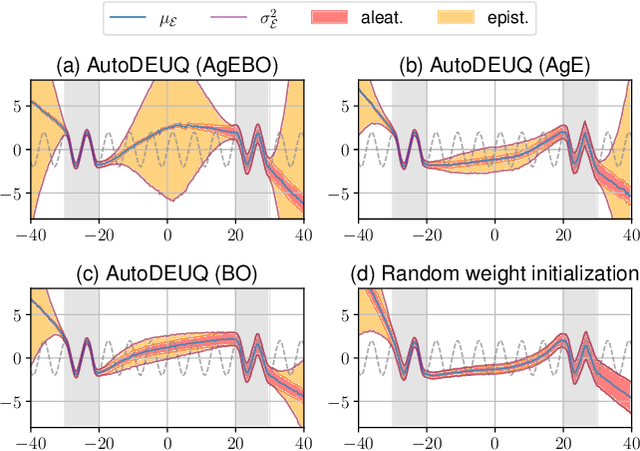

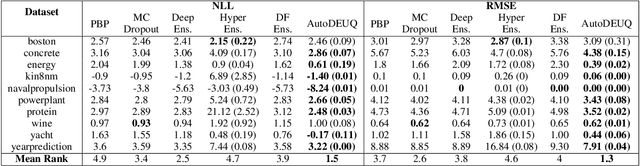

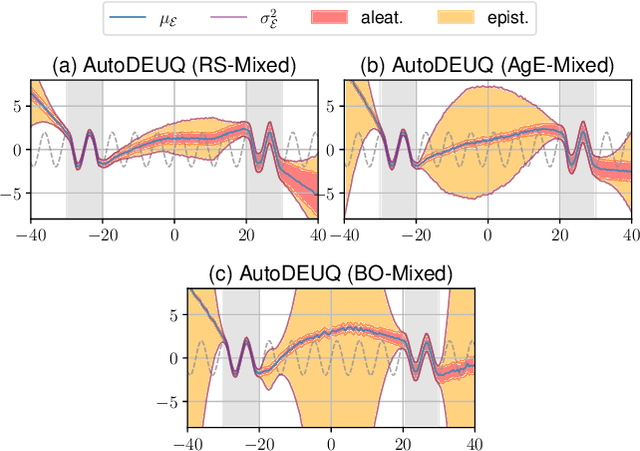

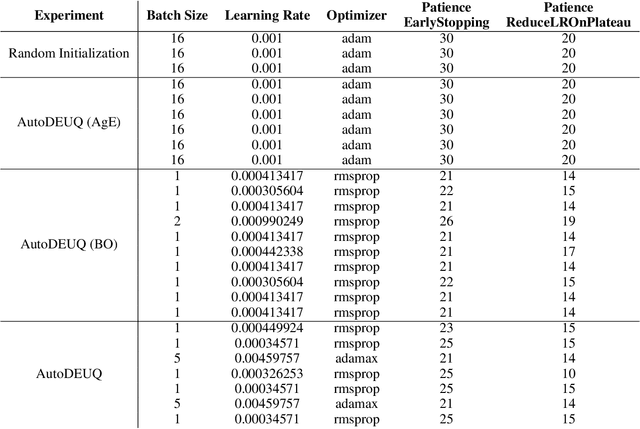

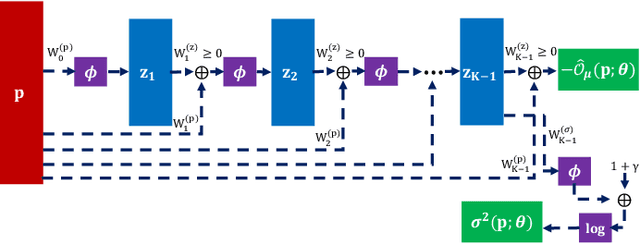

AutoDEUQ: Automated Deep Ensemble with Uncertainty Quantification

Oct 26, 2021

Abstract: Deep neural networks are powerful predictors for a variety of tasks. However, they do not capture uncertainty directly. Using neural network ensembles to quantify uncertainty is competitive with approaches based on Bayesian neural networks while benefiting from better computational scalability. However, building ensembles of neural networks is a challenging task because, in addition to choosing the right neural architecture or hyperparameters for each member of the ensemble, there is an added cost of training each model. We propose AutoDEUQ, an automated approach for generating an ensemble of deep neural networks. Our approach leverages joint neural architecture and hyperparameter search to generate ensembles. We use the law of total variance to decompose the predictive variance of deep ensembles into aleatoric (data) and epistemic (model) uncertainties. We show that AutoDEUQ outperforms probabilistic backpropagation, Monte Carlo dropout, deep ensemble, distribution-free ensembles, and hyper ensemble methods on a number of regression benchmarks.
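
The variance decomposition mentioned in the abstract can be sketched as follows, assuming each ensemble member outputs a predictive mean and variance per test point and that members are weighted equally. The function name and the toy numbers are hypothetical; this is the generic law-of-total-variance computation, not AutoDEUQ's code.

```python
import numpy as np

def decompose_uncertainty(means, variances):
    """Split ensemble predictive variance via the law of total variance.

    means, variances: arrays of shape (n_members, n_points), one row per
    ensemble member (assumed equally weighted).
    """
    means = np.asarray(means, dtype=float)
    variances = np.asarray(variances, dtype=float)
    aleatoric = variances.mean(axis=0)  # E[Var(y | member)]: data noise
    epistemic = means.var(axis=0)       # Var(E[y | member]): member disagreement
    return aleatoric, epistemic, aleatoric + epistemic

# Toy predictions from three members at two test points.
member_means = [[1.0, 2.0], [1.2, 2.0], [0.8, 2.0]]
member_vars = [[0.10, 0.30], [0.20, 0.30], [0.30, 0.30]]
aleatoric, epistemic, total = decompose_uncertainty(member_means, member_vars)
```

At the second test point all members agree on the mean, so the epistemic term is zero and the total variance is purely aleatoric.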

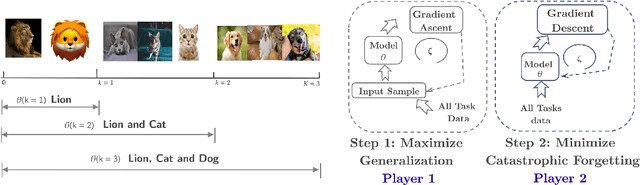

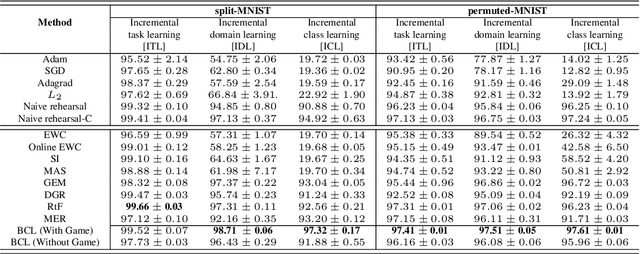

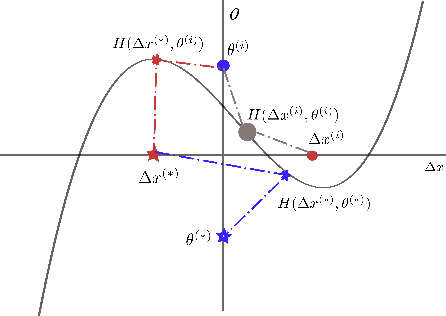

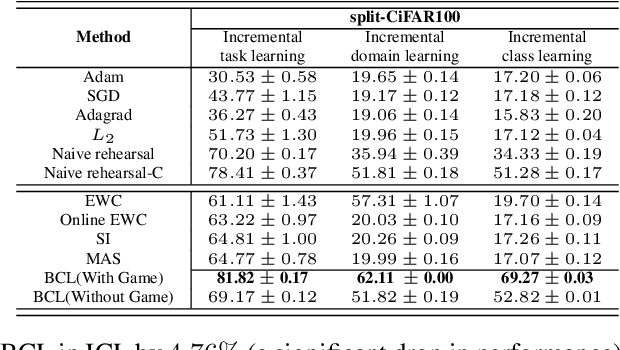

Formalizing the Generalization-Forgetting Trade-off in Continual Learning

Oct 05, 2021

Abstract: We formulate the continual learning (CL) problem via dynamic programming and model the trade-off between catastrophic forgetting and generalization as a two-player sequential game. In this approach, player 1 maximizes the cost due to lack of generalization whereas player 2 minimizes the cost due to catastrophic forgetting. We show theoretically that a balance point between the two players exists for each task and that this point is stable (once the balance is achieved, the two players stay at the balance point). Next, we introduce balanced continual learning (BCL), which is designed to attain balance between generalization and forgetting, and we empirically demonstrate that BCL is comparable to or better than the state of the art.

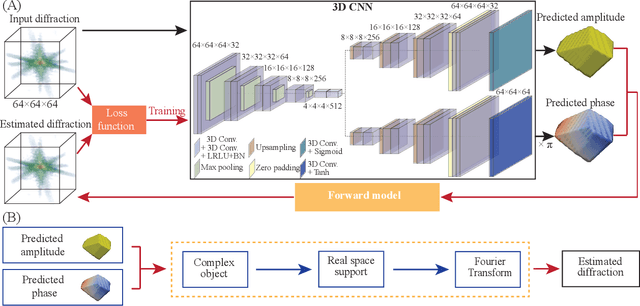

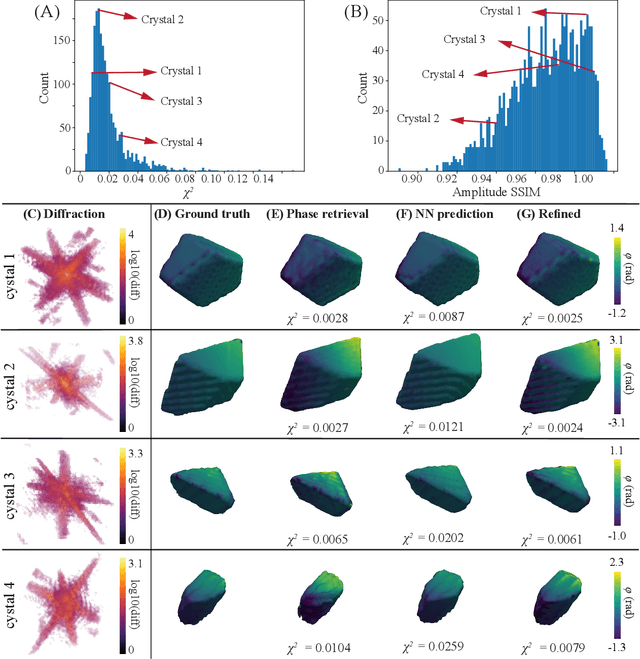

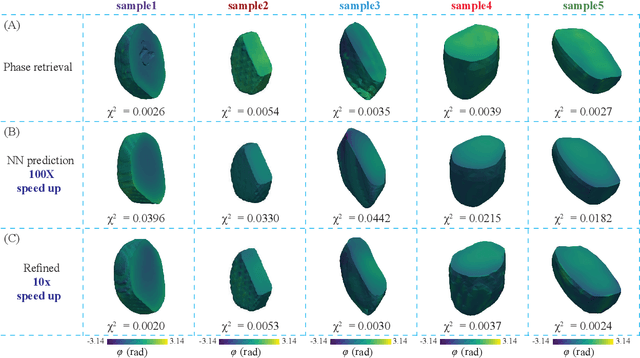

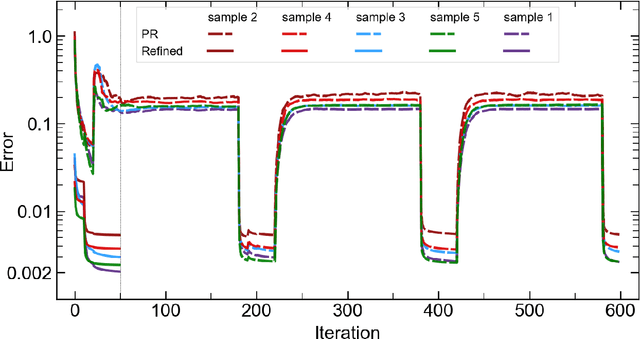

AutoPhaseNN: Unsupervised Physics-aware Deep Learning of 3D Nanoscale Coherent Imaging

Sep 28, 2021

Abstract: The problem of phase retrieval, or the algorithmic recovery of lost phase information from measured intensity alone, underlies various imaging methods from astronomy to nanoscale imaging. Traditional methods of phase retrieval are iterative in nature and are therefore computationally expensive and time consuming. More recently, deep learning (DL) models have been developed to either provide learned priors to iterative phase retrieval or, in some cases, completely replace phase retrieval with networks that learn to recover the lost phase information from measured intensity alone. However, such models require vast amounts of labeled data, which can only be obtained through simulation or by performing computationally prohibitive phase retrieval on hundreds or even thousands of experimental datasets. Using a 3D nanoscale X-ray imaging modality (Bragg Coherent Diffraction Imaging or BCDI) as a representative technique, we demonstrate AutoPhaseNN, a DL-based approach which learns to solve the phase problem without labeled data. By incorporating the physics of the imaging technique into the DL model during training, AutoPhaseNN learns to invert 3D BCDI data from reciprocal space to real space in a single shot without ever being shown real space images. Once trained, AutoPhaseNN is about one hundred times faster than traditional iterative phase retrieval methods while providing comparable image quality.
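
One way to read "incorporating the physics of the imaging technique" is a loss that pushes a predicted real-space object through a Fourier-transform forward model and compares the result with the measured diffraction magnitude, so no real-space labels are needed. The sketch below assumes exactly that; the function name, the mean-absolute-error choice, and the toy data are hypothetical, and the loss actually used by AutoPhaseNN may differ.

```python
import numpy as np

def diffraction_loss(amplitude, phase, measured_magnitude):
    """Mean absolute error between simulated and measured diffraction magnitudes."""
    obj = amplitude * np.exp(1j * phase)                     # complex real-space object
    predicted = np.abs(np.fft.fftn(obj, norm="ortho"))       # forward model: |FFT|
    return float(np.mean(np.abs(predicted - measured_magnitude)))

# Toy 3D object standing in for a network prediction.
rng = np.random.default_rng(0)
true_amp = rng.random((8, 8, 8))
true_phase = rng.uniform(-np.pi, np.pi, size=(8, 8, 8))
measured = np.abs(np.fft.fftn(true_amp * np.exp(1j * true_phase), norm="ortho"))

loss_true = diffraction_loss(true_amp, true_phase, measured)
loss_shifted = diffraction_loss(true_amp, true_phase + 0.5, measured)    # global phase offset
loss_wrong = diffraction_loss(true_amp, np.zeros_like(true_phase), measured)
```

A constant phase offset leaves the diffraction magnitude unchanged, so `loss_shifted` is also near zero; this reflects the well-known global-phase ambiguity of phase retrieval rather than a flaw in the sketch.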

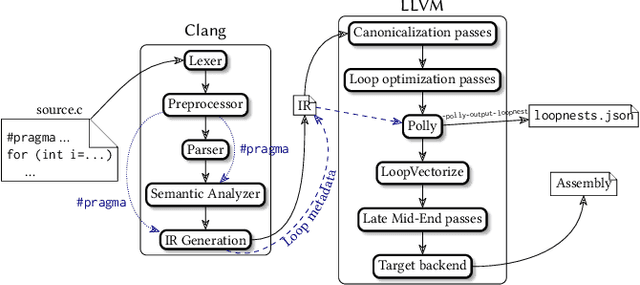

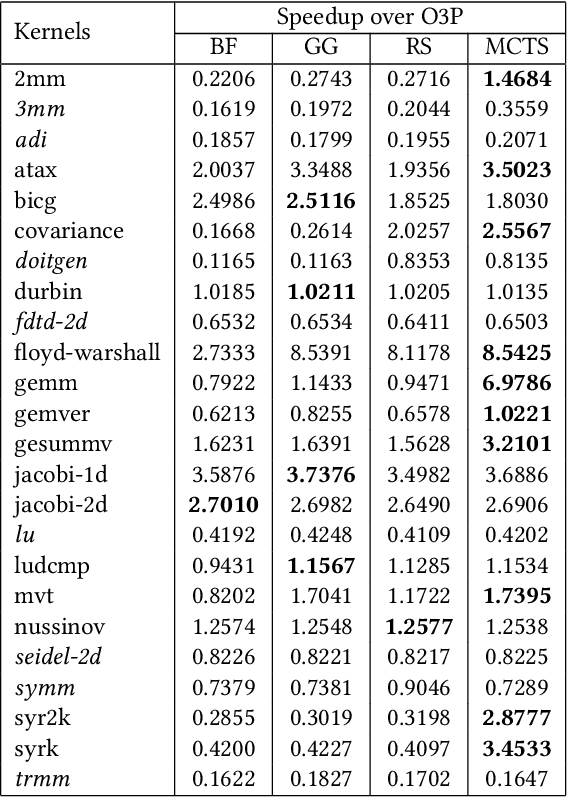

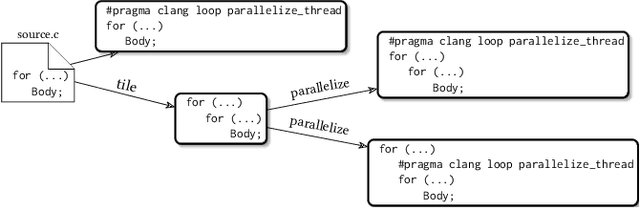

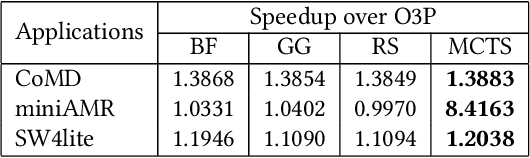

Customized Monte Carlo Tree Search for LLVM/Polly's Composable Loop Optimization Transformations

May 10, 2021

Abstract: Polly is the LLVM project's polyhedral loop nest optimizer. Recently, user-directed loop transformation pragmas were proposed based on LLVM/Clang and Polly. The search space exposed by the transformation pragmas is a tree, wherein each node represents a specific combination of loop transformations that can be applied to the code resulting from the parent node's loop transformations. We have developed a search algorithm based on Monte Carlo tree search (MCTS) to find the best combination of loop transformations. Our algorithm consists of two phases: exploring loop transformations at different depths of the tree to identify promising regions in the tree search space and exploiting those regions by performing a local search. Moreover, a restart mechanism is used to avoid the MCTS getting trapped in a local solution. The best and worst solutions are transferred from the previous phases of the restarts to leverage the search history. We compare our approach with random, greedy, and breadth-first search methods on PolyBench kernels and ECP proxy applications. Experimental results show that our MCTS algorithm finds pragma combinations with a speedup of 2.3x over Polly's heuristic optimizations on average.
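
The tree-structured search space can be pictured with a plain UCB1 selection step, the textbook building block of MCTS. This is not the customized two-phase algorithm with restarts that the abstract describes; the `Node` class, the transformation names, and the reward values (standing in for measured speedups) are hypothetical.

```python
import math

class Node:
    """A node holds the sequence of loop transformations applied so far."""
    def __init__(self, pragmas, parent=None):
        self.pragmas = pragmas
        self.parent = parent
        self.children = []
        self.visits = 0
        self.total_reward = 0.0

    def add_child(self, pragma):
        child = Node(self.pragmas + (pragma,), parent=self)
        self.children.append(child)
        return child

def ucb1(child, parent_visits, c=math.sqrt(2)):
    """Mean reward plus an exploration bonus; unvisited children go first."""
    if child.visits == 0:
        return math.inf
    exploit = child.total_reward / child.visits
    explore = c * math.sqrt(math.log(parent_visits) / child.visits)
    return exploit + explore

def select_child(node):
    return max(node.children, key=lambda ch: ucb1(ch, node.visits))

def backpropagate(node, reward):
    """Propagate a measured reward from a node up to the root."""
    while node is not None:
        node.visits += 1
        node.total_reward += reward
        node = node.parent

root = Node(())
tile = root.add_child("tile")
interchange = root.add_child("interchange")
backpropagate(tile, 1.5)          # made-up speedup measurements
backpropagate(interchange, 2.0)
backpropagate(tile, 1.4)
chosen = select_child(root)
```

Here `interchange` is selected next: it has both the higher mean reward and fewer visits, so its exploration bonus is larger as well.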

Autotuning PolyBench Benchmarks with LLVM Clang/Polly Loop Optimization Pragmas Using Bayesian Optimization (extended version)

Apr 27, 2021

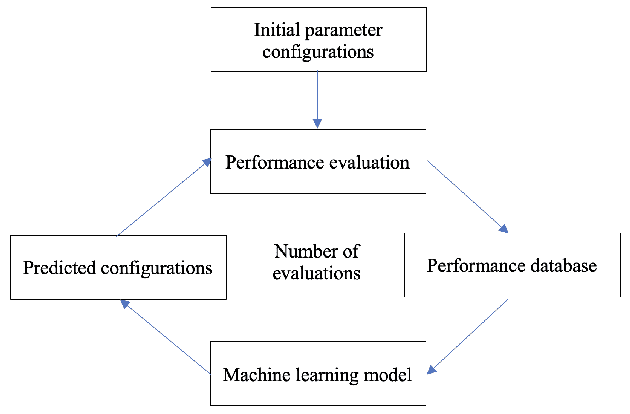

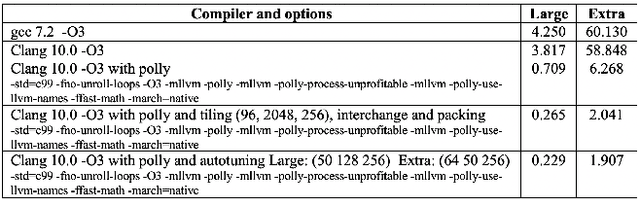

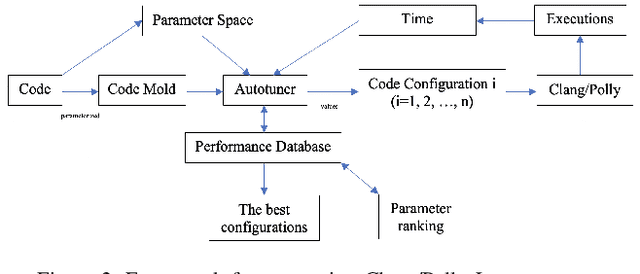

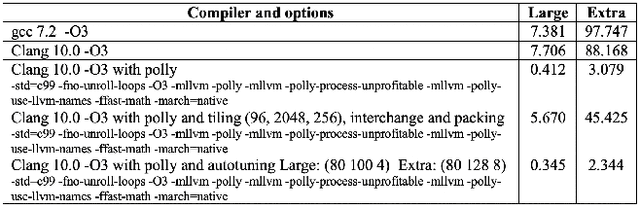

Abstract: In this paper, we develop a ytopt autotuning framework that leverages Bayesian optimization to explore the parameter search space, and we compare the effectiveness of four different supervised learning methods within Bayesian optimization. We select six of the most complex PolyBench benchmarks and apply the newly developed LLVM Clang/Polly loop optimization pragmas to the benchmarks to optimize them. We then use the autotuning framework to optimize the pragma parameters to improve their performance. The experimental results show that our autotuning approach outperforms the other compiling methods, providing the smallest execution time for the benchmarks syr2k, 3mm, heat-3d, lu, and covariance with two large datasets within 200 code evaluations while effectively searching parameter spaces with up to 170,368 different configurations. We find that the Floyd-Warshall benchmark did not benefit from autotuning because the heuristics Polly uses to optimize the benchmark make it run much slower. To cope with this issue, we provide some compiler option solutions to improve the performance. Then we present loop autotuning without a user's knowledge using a simple mctree autotuning framework to further improve the performance of the Floyd-Warshall benchmark. We also extend the ytopt autotuning framework to tune a deep learning application.

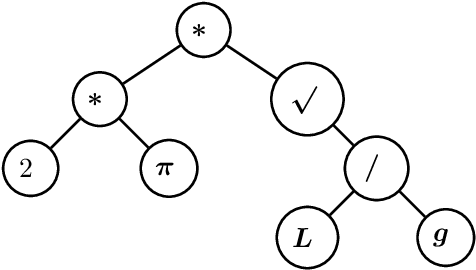

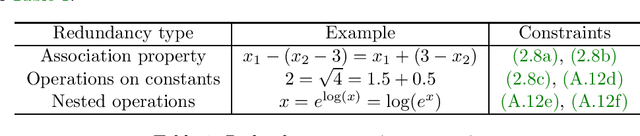

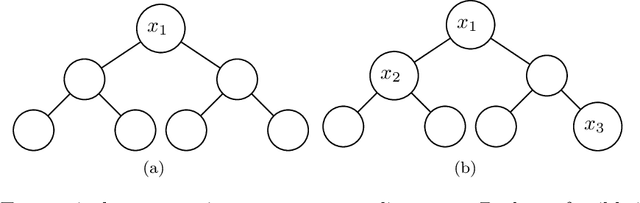

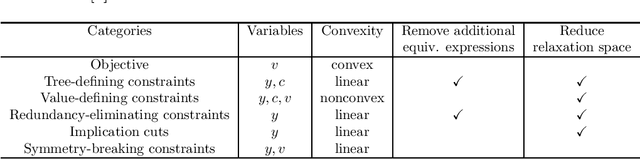

Learning Symbolic Expressions: Mixed-Integer Formulations, Cuts, and Heuristics

Feb 24, 2021

Abstract: In this paper we consider the problem of learning a regression function without assuming its functional form. This problem is referred to as symbolic regression. An expression tree is typically used to represent a solution function, which is determined by assigning operators and operands to the nodes. The symbolic regression problem can be formulated as a nonconvex mixed-integer nonlinear program (MINLP), where binary variables are used to assign operators and nonlinear expressions are used to propagate data values through nonlinear operators such as square, square root, and exponential. We extend this formulation by adding new cuts that improve the solution of this challenging MINLP. We also propose a heuristic that iteratively builds an expression tree by solving a restricted MINLP. We perform computational experiments and compare our approach with a mixed-integer program-based method and a neural-network-based method from the literature.
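
To make the expression-tree representation concrete, the sketch below evaluates a small tree on data points and computes its squared error. The tuple encoding, the operator tables, and the toy data are assumptions for illustration only; the sketch shows how values propagate through operators at the nodes, not the MINLP formulation or the cuts from the paper.

```python
import math

# Operators that may be assigned to internal nodes (illustrative subset).
UNARY = {"sqrt": math.sqrt, "square": lambda v: v * v, "exp": math.exp}
BINARY = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
    "/": lambda a, b: a / b,
}

def evaluate(node, x):
    """Recursively evaluate a tree encoded as nested tuples at input point x."""
    kind = node[0]
    if kind == "const":
        return node[1]
    if kind == "var":
        return x[node[1]]
    if kind in UNARY:
        return UNARY[kind](evaluate(node[1], x))
    return BINARY[kind](evaluate(node[1], x), evaluate(node[2], x))

def squared_error(tree, X, y):
    """Sum of squared residuals of the tree's predictions on (X, y)."""
    return sum((evaluate(tree, xi) - yi) ** 2 for xi, yi in zip(X, y))

# Tree for sqrt(x0) + 3 * x1.
tree = ("+", ("sqrt", ("var", 0)), ("*", ("const", 3.0), ("var", 1)))

data_X = [(4.0, 1.0), (9.0, 2.0)]
data_y = [5.0, 9.0]
err = squared_error(tree, data_X, data_y)
```

A symbolic regression method searches over which operator or operand sits at each node so that this error is minimized; in the MINLP view, those assignments are the binary variables.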

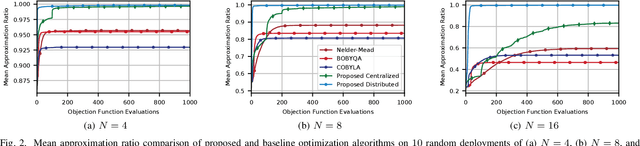

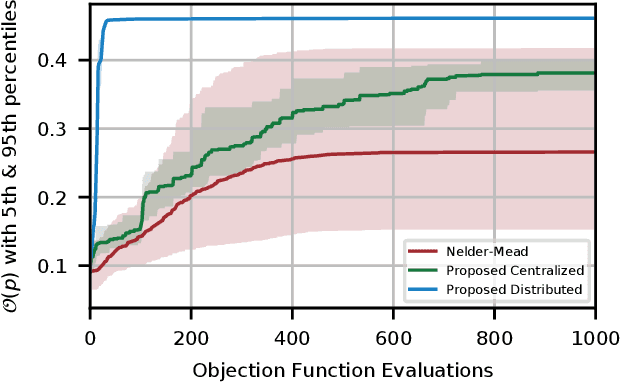

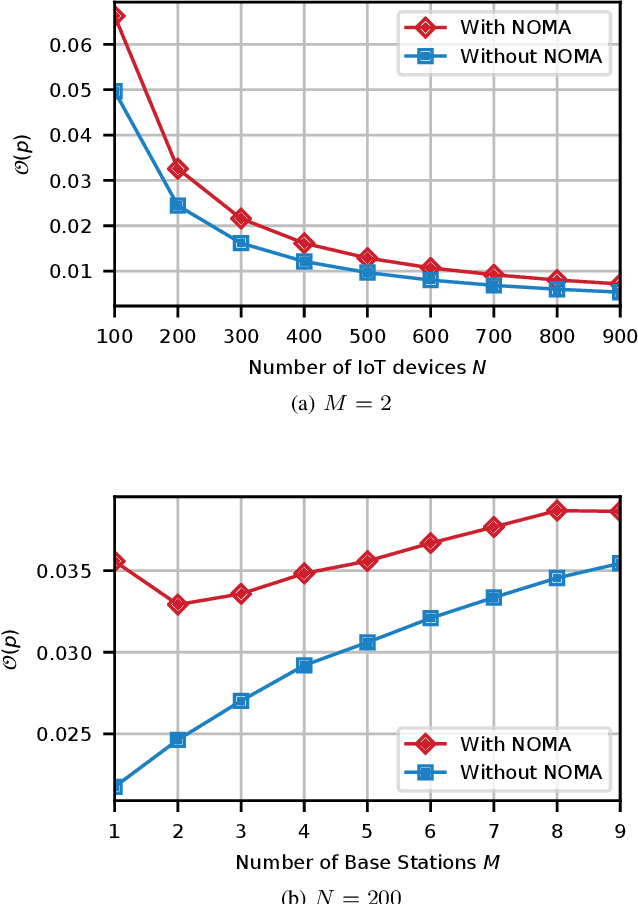

Learning-Based Distributed Random Access for Multi-Cell IoT Networks with NOMA

Jan 02, 2021

Abstract: Non-orthogonal multiple access (NOMA) is a key technology to enable massive machine type communications (mMTC) in 5G networks and beyond. In this paper, NOMA is applied to improve the random access efficiency in high-density spatially-distributed multi-cell wireless IoT networks, where IoT devices contend for accessing the shared wireless channel using an adaptive p-persistent slotted Aloha protocol. To enable a capacity-optimal network, a novel formulation of random channel access with NOMA is proposed, in which the transmission probability of each IoT device is tuned to maximize the geometric mean of users' expected capacity. It is shown that the network optimization objective is high dimensional and mathematically intractable, yet it admits favourable mathematical properties that enable the design of efficient learning-based algorithmic solutions. To this end, two algorithms, i.e., a centralized model-based algorithm and a scalable distributed model-free algorithm, are proposed to optimally tune the transmission probabilities of IoT devices to attain the maximum capacity. The convergence of the proposed algorithms to the optimal solution is further established based on convex optimization and game-theoretic analysis. Extensive simulations demonstrate the merits of the novel formulation and the efficacy of the proposed algorithms.

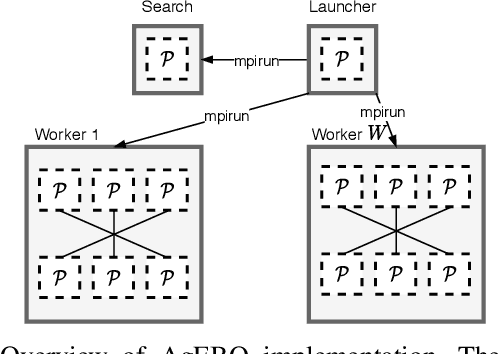

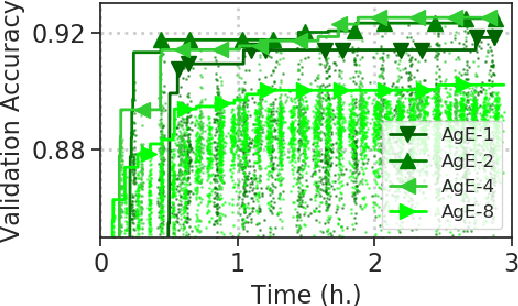

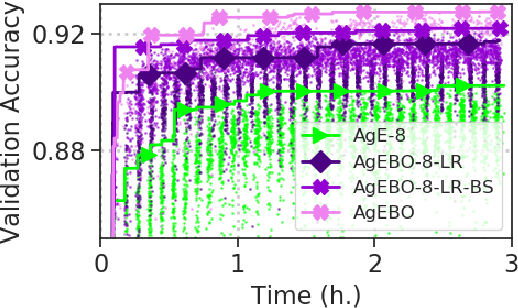

AgEBO-Tabular: Joint Neural Architecture and Hyperparameter Search with Autotuned Data-Parallel Training for Tabular Data

Oct 30, 2020

Abstract: Developing high-performing predictive models for large tabular data sets is a challenging task. The state-of-the-art methods are based on expert-developed model ensembles from different supervised learning methods. Recently, automated machine learning (AutoML) has been emerging as a promising approach to automate predictive model development. Neural architecture search (NAS) is an AutoML approach that generates and evaluates multiple neural network architectures concurrently and improves the accuracy of the generated models iteratively. A key issue in NAS, particularly for large data sets, is the large computation time required to evaluate each generated architecture. While data-parallel training is a promising approach that can address this issue, its use within NAS is difficult. For different data sets, the data-parallel training settings such as the number of parallel processes, learning rate, and batch size need to be adapted to achieve high accuracy and reduction in training time. To that end, we have developed AgEBO-Tabular, an approach that combines aging evolution (AgE), a parallel NAS method that searches over neural architecture space, with an asynchronous Bayesian optimization method that simultaneously tunes the hyperparameters of the data-parallel training. We demonstrate the efficacy of the proposed method to generate high-performing neural network models for large tabular benchmark data sets. Furthermore, we demonstrate that the automatically discovered neural network models using our method outperform the state-of-the-art AutoML ensemble models in inference speed by two orders of magnitude while reaching similar accuracy values.
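
The aging evolution component can be sketched as a serial loop: keep a fixed-size population, sample a few members, mutate the best of the sample, and retire the oldest member. This is a generic illustration only. It omits the parallel evaluation and the asynchronous Bayesian optimization of data-parallel training hyperparameters that AgEBO-Tabular adds, and the toy search space, scoring function, and all names are hypothetical.

```python
import random
from collections import deque

def aging_evolution(evaluate, random_arch, mutate,
                    population_size=10, sample_size=3, cycles=50, seed=0):
    """Serial aging evolution: the oldest member is removed each cycle."""
    rng = random.Random(seed)
    population = deque()
    history = []
    # Initialize the population with random architectures.
    while len(population) < population_size:
        arch = random_arch(rng)
        member = (arch, evaluate(arch))
        population.append(member)
        history.append(member)
    for _ in range(cycles):
        sample = rng.sample(list(population), sample_size)
        parent = max(sample, key=lambda m: m[1])   # best of the random sample
        child_arch = mutate(parent[0], rng)
        child = (child_arch, evaluate(child_arch))
        population.append(child)
        history.append(child)
        population.popleft()                       # age out the oldest member
    return max(history, key=lambda m: m[1])

# Toy search space: four layer widths; the score counts mismatches against
# a hidden target (a stand-in for validation accuracy).
TARGET = (64, 32, 16, 8)
CHOICES = (8, 16, 32, 64)

def toy_score(arch):
    return -sum(a != t for a, t in zip(arch, TARGET))

def toy_random(rng):
    return tuple(rng.choice(CHOICES) for _ in TARGET)

def toy_mutate(arch, rng):
    i = rng.randrange(len(arch))
    return arch[:i] + (rng.choice(CHOICES),) + arch[i + 1:]

best_arch, best_score = aging_evolution(toy_score, toy_random, toy_mutate)
```

Removing the oldest member rather than the worst one is what distinguishes aging evolution from a standard tournament scheme: good architectures survive only if their descendants keep performing well.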
