Pengwei Sui

Enabling Flexible Multi-LLM Integration for Scalable Knowledge Aggregation

May 28, 2025

Abstract: Large language models (LLMs) have shown remarkable promise but remain challenging to continually improve through traditional finetuning, particularly when integrating capabilities from other specialized LLMs. Popular methods like ensembling and weight merging require substantial memory and struggle to adapt to changing data environments. Recent efforts have transferred knowledge from multiple LLMs into a single target model; however, they suffer from interference and degraded performance among tasks, largely due to limited flexibility in candidate selection and training pipelines. To address these issues, we propose a framework that adaptively selects and aggregates knowledge from diverse LLMs to build a single, stronger model, avoiding both the high memory overhead of ensembling and the inflexibility of weight merging. Specifically, we design an adaptive selection network that identifies the most relevant source LLMs based on their scores, thereby reducing knowledge interference. We further propose a dynamic weighted fusion strategy that accounts for the inherent strengths of candidate LLMs, along with a feedback-driven loss function that prevents the selector from converging on a single subset of sources. Experimental results demonstrate that our method enables a more stable and scalable knowledge aggregation process while reducing knowledge interference by up to 50% compared to existing approaches. Code is available at https://github.com/ZLKong/LLM_Integration
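The abstract describes score-based source selection followed by weighted fusion. A minimal Python sketch of that general idea follows. It is an illustration under stated assumptions, not the paper's implementation: the function name `select_and_fuse`, the top-k rule, the softmax weighting, and the toy distributions are all hypothetical, and the feedback-driven loss is not modeled.

```python
import math

def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def select_and_fuse(scores, source_probs, k=2):
    """Pick the k highest-scoring sources and average their distributions.

    scores:       one selector score per source LLM (assumed given)
    source_probs: one next-token distribution per source LLM (same vocab)
    """
    # Indices of the k highest-scoring sources (ties keep original order).
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Fusion weights: softmax over the scores of the selected sources only.
    weights = softmax([scores[i] for i in top])
    vocab = len(source_probs[0])
    fused = [0.0] * vocab
    for w, i in zip(weights, top):
        for v in range(vocab):
            fused[v] += w * source_probs[i][v]
    return top, weights, fused

# Toy example: three hypothetical sources over a 3-token vocabulary.
scores = [2.0, 0.1, 2.0]
source_probs = [
    [0.7, 0.2, 0.1],
    [0.1, 0.1, 0.8],
    [0.5, 0.4, 0.1],
]
top, weights, fused = select_and_fuse(scores, source_probs, k=2)
```

Because the low-scoring source is excluded before fusion, its distribution cannot pull the fused target toward conflicting predictions, which is the intuition behind reducing interference through selection.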

Learning Implicit Credit Assignment for Multi-Agent Actor-Critic

Jul 06, 2020

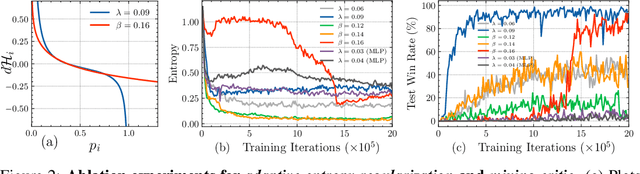

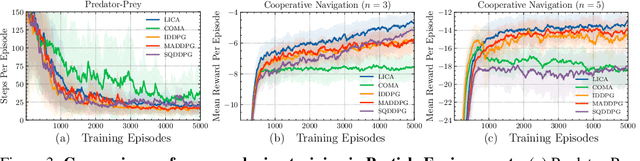

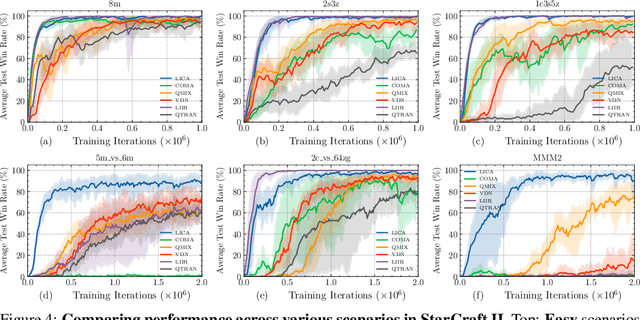

Abstract: We present a new policy-based multi-agent reinforcement learning algorithm that implicitly addresses the credit assignment problem under fully cooperative settings. Our key motivation is that credit assignment may not require an explicit formulation as long as (1) the policy gradients of a trained, centralized critic carry sufficient information for the decentralized agents to maximize the critic estimate through optimal cooperation and (2) a sustained level of agent exploration is enforced throughout training. In this work, we achieve the former by formulating the centralized critic as a hypernetwork such that the latent state representation is fused into the policy gradients through its multiplicative association with the agent policies, and we show that this is key to learning optimal joint actions that may otherwise require explicit credit assignment. To achieve the latter, we further propose a practical technique called adaptive entropy regularization, in which the magnitudes of the policy gradients from the entropy term are dynamically rescaled to sustain consistent levels of exploration throughout training. Our final algorithm, which we call LICA, is evaluated on several benchmarks, including the multi-agent particle environments and a set of challenging StarCraft II micromanagement tasks, and we show that LICA significantly outperforms previous methods.
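The idea of rescaling the entropy gradient can be sketched for a single categorical policy. The Python snippet below assumes one particular rescaling, dividing the entropy gradient by the current entropy; the abstract does not state the exact rule, so this choice, the function names, and the coefficient `xi` are assumptions for illustration only.

```python
import math

def entropy(p):
    # Shannon entropy of a categorical distribution (natural log).
    return -sum(x * math.log(x) for x in p if x > 0)

def entropy_grad(p):
    # Partial derivative of the entropy with respect to each probability:
    # dH/dp_i = -(log p_i + 1). Assumes every p_i is strictly positive.
    return [-(math.log(x) + 1.0) for x in p]

def adaptive_entropy_grad(p, xi=0.1, eps=1e-8):
    # Assumed rescaling: divide by the current entropy, so the push toward
    # exploration grows as the policy becomes more deterministic.
    h = entropy(p)
    return [xi * g / (h + eps) for g in entropy_grad(p)]

uniform = [0.5, 0.5]    # high-entropy policy
peaked = [0.99, 0.01]   # nearly deterministic policy

g_uniform = adaptive_entropy_grad(uniform)
g_peaked = adaptive_entropy_grad(peaked)
```

Under this assumed rule, the nearly deterministic policy receives a much larger entropy gradient than the uniform one, whereas a fixed coefficient would apply the same scale regardless of how much exploration remains.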