Neeraj Kumar

Single GPU Task Adaptation of Pathology Foundation Models for Whole Slide Image Analysis

Jun 05, 2025

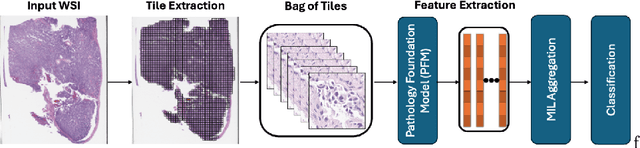

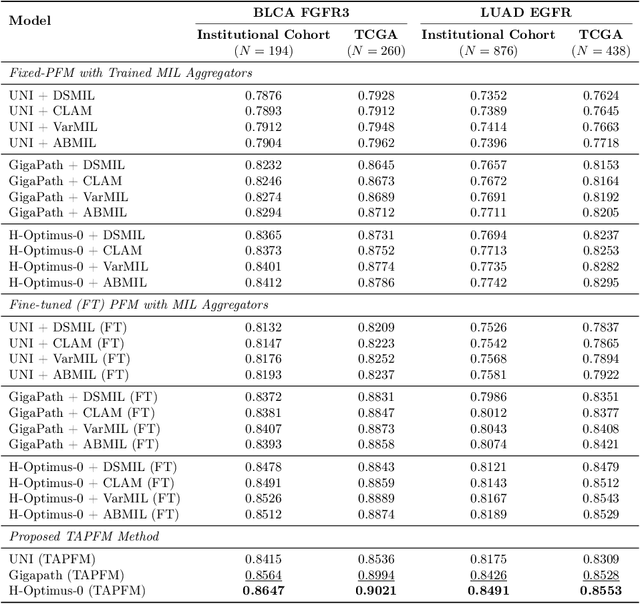

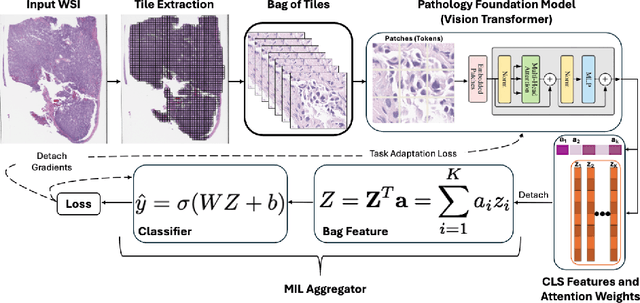

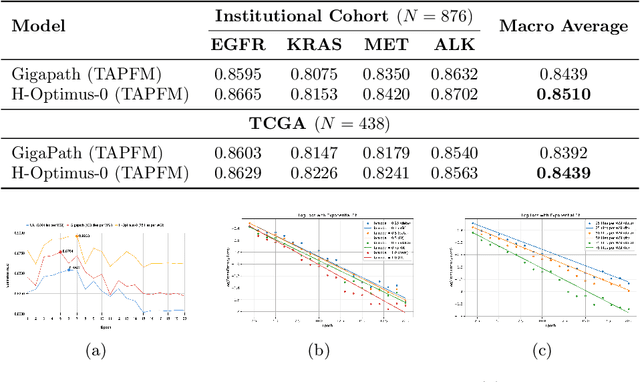

Abstract: Pathology foundation models (PFMs) have emerged as powerful tools for analyzing whole slide images (WSIs). However, adapting these pretrained PFMs for specific clinical tasks presents considerable challenges, primarily because only weak (WSI-level) labels are available for gigapixel images, which necessitates a multiple instance learning (MIL) paradigm for effective WSI analysis. This paper proposes a novel approach for single-GPU Task Adaptation of PFMs (TAPFM) that uses vision transformer (ViT) attention for MIL aggregation while optimizing for both feature representations and attention weights. The proposed approach maintains separate computational graphs for the MIL aggregator and the PFM to create stable training dynamics that align with downstream task objectives during end-to-end adaptation. Evaluated on mutation prediction tasks for bladder cancer and lung adenocarcinoma across institutional and TCGA cohorts, TAPFM consistently outperforms conventional approaches, with H-Optimus-0 (TAPFM) outperforming the benchmarks. TAPFM also effectively handles multi-label classification of actionable mutations. Thus, TAPFM makes adaptation of powerful pre-trained PFMs practical on standard hardware for various clinical applications.
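
As a rough illustration of attention-based MIL aggregation, the sketch below pools per-tile feature vectors into a single slide-level vector using softmax-normalized attention scores. It is a generic attention-pooling example, not the TAPFM implementation: the names `attention_pool`, `tiles`, and `scores` are hypothetical, and the scores are supplied by hand here, whereas the abstract says the method derives them from ViT attention.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(tile_features, attention_scores):
    """Weighted sum of tile features; weights are softmaxed attention scores.

    tile_features: one feature vector (list of floats) per tile.
    attention_scores: one unnormalized score per tile (assumed given).
    """
    weights = softmax(attention_scores)
    dim = len(tile_features[0])
    slide_vector = [
        sum(w * feats[d] for w, feats in zip(weights, tile_features))
        for d in range(dim)
    ]
    return slide_vector, weights


# Toy example: three tiles with 2-dimensional features and equal scores,
# so every tile contributes equally to the slide-level vector.
tiles = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
scores = [0.0, 0.0, 0.0]
slide_vec, weights = attention_pool(tiles, scores)
```

Raising one tile's score shifts the slide-level vector toward that tile's features, which is how a WSI-level label can be attributed to the most relevant tiles.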

Electrical Load Forecasting in Smart Grid: A Personalized Federated Learning Approach

Nov 15, 2024

Abstract: Electric load forecasting is essential for power management and stability in smart grids. This is mainly achieved via advanced metering infrastructure, where smart meters (SMs) are used to record household energy consumption. Traditional machine learning (ML) methods are often employed for load forecasting but require data sharing, which raises data privacy concerns. Federated learning (FL) can address this issue by running distributed ML models at local SMs without data exchange. However, current FL-based approaches struggle to achieve efficient load forecasting due to imbalanced data distribution across heterogeneous SMs. This paper presents a novel personalized federated learning (PFL) method for load prediction under non-independent and identically distributed (non-IID) metering data settings. Specifically, we apply meta-learning to adapt the learning rates so as to maximize the gradient for each client in each global round. Clients with varying processing capacities, data sizes, and batch sizes can participate in global model aggregation and improve their local load forecasting via personalized learning. Simulation results show that our approach outperforms state-of-the-art ML and FL methods in load forecasting accuracy.
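
To make the idea of global model aggregation over clients with different data sizes concrete, here is a minimal sketch of standard sample-size-weighted federated averaging (FedAvg). This is the generic baseline, not the paper's personalized meta-learning method; `federated_average`, `client_weights`, and `client_sizes` are hypothetical names chosen for illustration.

```python
def federated_average(client_weights, client_sizes):
    """Average client parameter vectors, weighting each by its sample count.

    client_weights: one flat parameter list per client (equal lengths).
    client_sizes: number of local training samples held by each client.
    """
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Two smart meters: the second holds three times as much data, so the
# aggregated parameters sit closer to its local model.
client_weights = [[1.0, 2.0], [3.0, 4.0]]
client_sizes = [100, 300]
global_weights = federated_average(client_weights, client_sizes)
```

Only parameter vectors and sample counts reach the server in this scheme; the raw consumption readings stay on each meter.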

CACTUS: Chemistry Agent Connecting Tool-Usage to Science

May 02, 2024

Abstract: Large language models (LLMs) have shown remarkable potential in various domains, but they often lack the ability to access and reason over domain-specific knowledge and tools. In this paper, we introduce CACTUS (Chemistry Agent Connecting Tool-Usage to Science), an LLM-based agent that integrates cheminformatics tools to enable advanced reasoning and problem-solving in chemistry and molecular discovery. We evaluate the performance of CACTUS using a diverse set of open-source LLMs, including Gemma-7b, Falcon-7b, MPT-7b, Llama2-7b, and Mistral-7b, on a benchmark of thousands of chemistry questions. Our results demonstrate that CACTUS significantly outperforms baseline LLMs, with the Gemma-7b and Mistral-7b models achieving the highest accuracy regardless of the prompting strategy used. Moreover, we explore the impact of domain-specific prompting and hardware configurations on model performance, highlighting the importance of prompt engineering and the potential for deploying smaller models on consumer-grade hardware without significant loss in accuracy. By combining the cognitive capabilities of open-source LLMs with domain-specific tools, CACTUS can assist researchers in tasks such as molecular property prediction, similarity searching, and drug-likeness assessment. Furthermore, CACTUS represents a significant milestone in the field of cheminformatics, offering an adaptable tool for researchers engaged in chemistry and molecular discovery. By integrating the strengths of open-source LLMs with domain-specific tools, CACTUS has the potential to accelerate scientific advancement and unlock new frontiers in the exploration of novel, effective, and safe therapeutic candidates, catalysts, and materials. Moreover, CACTUS's ability to integrate with automated experimentation platforms and make data-driven decisions in real time opens up new possibilities for autonomous discovery.

Style Description based Text-to-Speech with Conditional Prosodic Layer Normalization based Diffusion GAN

Oct 27, 2023

Abstract: In this paper, we present a Diffusion GAN based approach (Prosodic Diff-TTS) that takes a style description and content text as input and generates high-fidelity speech samples within only 4 denoising steps. It leverages a novel conditional prosodic layer normalization to incorporate the style embeddings into a generator architecture built from a multi-head attention based phoneme encoder and a mel spectrogram decoder. The style embedding is generated by fine-tuning a pretrained BERT model on auxiliary tasks such as pitch, speaking speed, emotion, and gender classification. We demonstrate the efficacy of our proposed architecture on the multi-speaker LibriTTS and PromptSpeech datasets, using multiple quantitative metrics that measure generated accuracy and MOS.

Distraction-free Embeddings for Robust VQA

Aug 31, 2023

Abstract: The generation of effective latent representations and their subsequent refinement to incorporate precise information is an essential prerequisite for Vision-Language Understanding (VLU) tasks such as Video Question Answering (VQA). However, most existing methods for VLU focus on sparsely sampling or fine-graining the input information (e.g., sampling a sparse set of frames or text tokens), or adding external knowledge. We present a novel "DRAX: Distraction Removal and Attended Cross-Alignment" method to rid our cross-modal representations of distractors in the latent space. We do not exclusively confine the perception of any input information from various modalities but instead use an attention-guided distraction removal method to increase focus on task-relevant information in latent embeddings. DRAX also ensures semantic alignment of embeddings during cross-modal fusions. We evaluate our approach on a challenging benchmark (SUTD-TrafficQA dataset), testing the framework's abilities for feature and event queries, temporal relation understanding, forecasting, hypothesis, and causal analysis through extensive experiments.

An Effective Meaningful Way to Evaluate Survival Models

Jun 01, 2023

Abstract: One straightforward metric to evaluate a survival prediction model is based on the Mean Absolute Error (MAE): the average of the absolute difference between the time predicted by the model and the true event time, over all subjects. Unfortunately, this is challenging because, in practice, the test set includes (right) censored individuals, meaning we do not know when a censored individual actually experienced the event. In this paper, we explore various metrics to estimate MAE for survival datasets that include (many) censored individuals. Moreover, we introduce a novel and effective approach for generating realistic semi-synthetic survival datasets to facilitate the evaluation of metrics. Our findings, based on the analysis of the semi-synthetic datasets, reveal that our proposed metric (MAE using pseudo-observations) is able to rank models accurately based on their performance and often closely matches the true MAE; in particular, it is better than several alternative methods.
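
The MAE definition above, and the difficulty that censoring creates, can be sketched in a few lines. The example below computes MAE only over subjects whose event was observed, which is the simplest workaround and generally a biased one because it discards censored subjects; it is not the pseudo-observation metric the paper proposes. The function name `mae_uncensored` and the toy data are hypothetical.

```python
def mae_uncensored(predicted, observed, event):
    """MAE restricted to subjects whose event time was actually observed.

    predicted: model-predicted event times.
    observed: recorded times (event time, or censoring time if censored).
    event: True if the event was observed, False if right-censored.
    """
    pairs = [(p, t) for p, t, e in zip(predicted, observed, event) if e]
    if not pairs:
        raise ValueError("no uncensored subjects to evaluate")
    return sum(abs(p - t) for p, t in pairs) / len(pairs)


# Four subjects; the third is censored at t=25, so its true event time is
# unknown (only known to be later than 25) and it is excluded here.
predicted = [10.0, 20.0, 30.0, 40.0]
observed = [12.0, 18.0, 25.0, 40.0]
event = [True, True, False, True]
score = mae_uncensored(predicted, observed, event)
```

With many censored subjects this estimate rests on a small and unrepresentative subset, which is the motivation for metrics that also make use of the censored individuals.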

The ACROBAT 2022 Challenge: Automatic Registration Of Breast Cancer Tissue

May 29, 2023

Abstract: The alignment of tissue between histopathological whole-slide-images (WSI) is crucial for research and clinical applications. Advances in computing, deep learning, and availability of large WSI datasets have revolutionised WSI analysis. Therefore, the current state-of-the-art in WSI registration is unclear. To address this, we conducted the ACROBAT challenge, based on the largest WSI registration dataset to date, including 4,212 WSIs from 1,152 breast cancer patients. The challenge objective was to align WSIs of tissue that was stained with routine diagnostic immunohistochemistry to its H&E-stained counterpart. We compare the performance of eight WSI registration algorithms, including an investigation of the impact of different WSI properties and clinical covariates. We find that conceptually distinct WSI registration methods can lead to highly accurate registration performances and identify covariates that impact performances across methods. These results establish the current state-of-the-art in WSI registration and guide researchers in selecting and developing methods.

KL Regularized Normalization Framework for Low Resource Tasks

Dec 21, 2022

Abstract: Large pre-trained models, such as BERT, GPT, and Wav2Vec, have demonstrated great potential for learning representations that are transferable to a wide variety of downstream tasks. It is difficult to obtain a large quantity of supervised data due to the limited availability of resources and time. In light of this, a significant amount of research has been conducted on adapting large pre-trained models to diverse downstream tasks via fine-tuning, linear probing, or prompt tuning in low resource settings. Normalization techniques are essential for accelerating training and improving the generalization of deep neural networks and have been successfully used in a wide variety of applications. Many normalization techniques have been proposed, but the success of normalization in low resource downstream NLP and speech tasks is limited. One of the reasons is the inability to capture expressiveness by rescaling the parameters of normalization. We propose Kullback-Leibler (KL) regularized normalization (KL-Norm), which makes the normalized data well behaved and helps generalization: it reduces over-fitting, generalises well on out-of-domain distributions, and removes irrelevant biases and features, with a negligible increase in model parameters and memory overhead. Detailed experimental evaluation on multiple low resource NLP and speech tasks demonstrates the superior performance of KL-Norm compared to other popular normalization and regularization techniques.

Dynamic Molecular Graph-based Implementation for Biophysical Properties Prediction

Dec 20, 2022

Abstract: Graph Neural Networks (GNNs) have revolutionized molecular discovery by helping to understand patterns and identify unknown features that can aid in predicting biophysical properties and protein-ligand interactions. However, current models typically rely on 2-dimensional molecular representations as input, and while utilization of 2/3-dimensional structural data has gained deserved traction in recent years, many of these models are still limited to static graph representations. We propose a novel approach based on the transformer model utilizing GNNs for characterizing dynamic features of protein-ligand interactions. Our message passing transformer pre-trains on a set of molecular dynamics data from physics-based simulations to learn coordinate construction and make binding probability and affinity predictions as a downstream task. In extensive testing against existing models, our MDA-PLI model outperformed the molecular interaction prediction models with an RMSE of 1.2958. The geometric encodings enabled by our transformer architecture and the addition of time series data add a new dimensionality to this form of research.

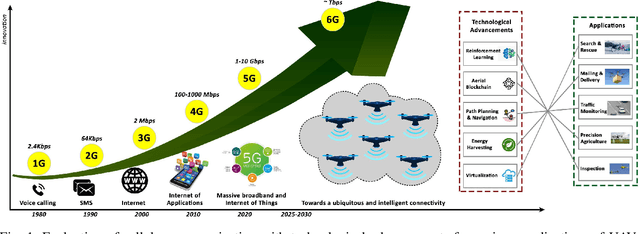

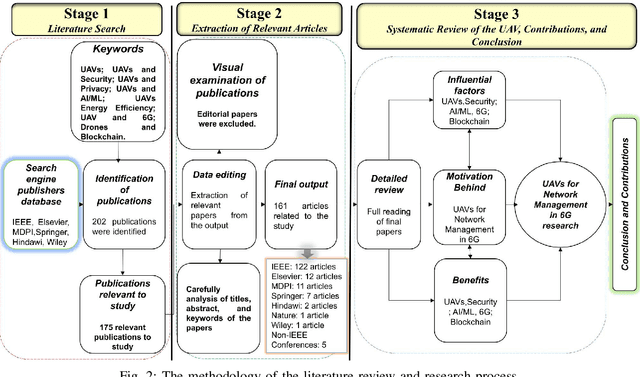

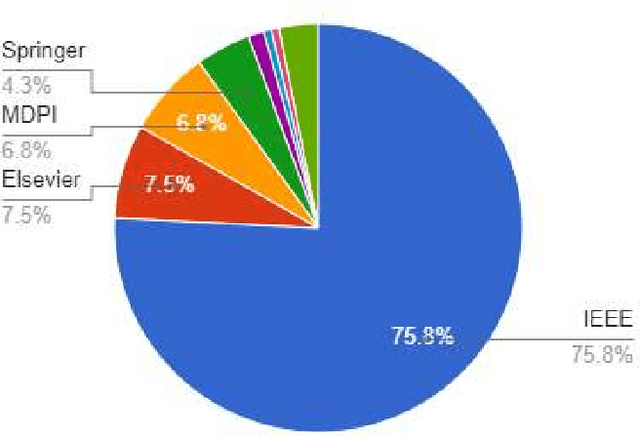

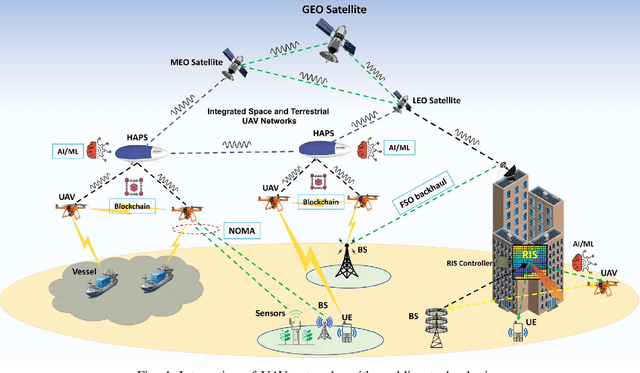

Swarm of UAVs for Network Management in 6G: A Technical Review

Oct 06, 2022

Abstract: Fifth-generation (5G) cellular networks have led to the implementation of beyond 5G (B5G) networks, which are capable of incorporating autonomous services for swarms of unmanned aerial vehicles (UAVs). They provide capacity expansion strategies to address massive connectivity issues and guarantee ultra-high throughput and low latency, especially in extreme or emergency situations where network density, bandwidth, and traffic patterns fluctuate. On the one hand, 6G technology integrates AI/ML, IoT, and blockchain to establish ultra-reliable, intelligent, secure, and ubiquitous UAV networks. 6G networks, on the other hand, rely on new enabling technologies such as air interface and transmission technologies, as well as a unique network design, posing new challenges for swarms of UAVs. Keeping these challenges in mind, this article focuses on the security and privacy, intelligence, and energy-efficiency issues faced by swarms of UAVs operating in 6G mobile networks. In this state-of-the-art review, we integrate blockchain and AI/ML with UAV networks utilizing the 6G ecosystem. The key findings are then presented, and potential research challenges are identified. We conclude the review by shedding light on future research in this emerging field.