Mohamed Reda Bouadjenek

Learning Rewards, Not Labels: Adversarial Inverse Reinforcement Learning for Machinery Fault Detection

Feb 25, 2026

Abstract: Reinforcement learning (RL) offers significant promise for machinery fault detection (MFD). However, most existing RL-based MFD approaches do not fully exploit RL's sequential decision-making strengths, often treating MFD as a simple guessing game (Contextual Bandits). To bridge this gap, we formulate MFD as an offline inverse reinforcement learning problem, where the agent learns the reward dynamics directly from healthy operational sequences, thereby bypassing the need for manual reward engineering and fault labels. Our framework employs Adversarial Inverse Reinforcement Learning to train a discriminator that distinguishes between normal (expert) and policy-generated transitions. The discriminator's learned reward serves as an anomaly score, indicating deviations from normal operating behaviour. When evaluated on three run-to-failure benchmark datasets (HUMS2023, IMS, and XJTU-SY), the model consistently assigns low anomaly scores to normal samples and high scores to faulty ones, enabling early and robust fault detection. By aligning RL's sequential reasoning with MFD's temporal structure, this work opens a path toward RL-based diagnostics in data-driven industrial settings.
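As a rough illustration of how a discriminator's learned reward can double as an anomaly score, the sketch below uses the standard Adversarial Inverse Reinforcement Learning discriminator form, D = exp(f) / (exp(f) + pi(a|s)), whose recovered reward is log D - log(1 - D) = f - log pi(a|s). The function names, the toy numbers, and the choice to use the negated reward as the anomaly score are assumptions made for this example, not details taken from the paper.

```python
import numpy as np

def airl_discriminator(f_value, log_pi):
    """Standard AIRL discriminator D = exp(f) / (exp(f) + pi(a|s)).

    Algebraically this equals sigmoid(f - log pi), which is the form used here.
    """
    return 1.0 / (1.0 + np.exp(-(f_value - log_pi)))

def airl_reward(f_value, log_pi):
    """Reward recovered from the discriminator: log D - log(1 - D) = f - log pi."""
    return f_value - log_pi

def anomaly_score(f_value, log_pi):
    """Assumption for this sketch: low reward means the transition looks
    unlike healthy (expert) behaviour, so the score is the negated reward."""
    return -airl_reward(f_value, log_pi)

# Toy values: f is the learned reward-shaping output, log_pi the policy log-probability.
f_healthy, f_faulty = np.array([2.0]), np.array([-3.0])
log_pi = np.array([-1.0])
print(anomaly_score(f_healthy, log_pi), anomaly_score(f_faulty, log_pi))
```

In practice `f_value` would come from a trained network evaluated on sliding windows of sensor data, and a detection threshold would be calibrated on healthy data only.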

MissHDD: Hybrid Deterministic Diffusion for Hetrogeneous Incomplete Data Imputation

Nov 18, 2025

Abstract: Incomplete data are common in real-world tabular applications, where numerical, categorical, and discrete attributes coexist within a single dataset. This heterogeneous structure presents significant challenges for existing diffusion-based imputation models, which typically assume a homogeneous feature space and rely on stochastic denoising trajectories. Such assumptions make it difficult to maintain conditional consistency, and they often lead to information collapse for categorical variables or instability when numerical variables require deterministic updates. These limitations indicate that a single diffusion process is insufficient for mixed-type tabular imputation. We propose a hybrid deterministic diffusion framework that separates heterogeneous features into two complementary generative channels. A continuous DDIM-based channel provides efficient and stable deterministic denoising for numerical variables, while a discrete latent-path diffusion channel, inspired by loopholing-based discrete diffusion, models categorical and discrete features without leaving their valid sample manifolds. The two channels are trained under a unified conditional imputation objective, enabling coherent reconstruction of mixed-type incomplete data. Extensive experiments on multiple real-world datasets show that the proposed framework achieves higher imputation accuracy, more stable sampling trajectories, and improved robustness across MCAR, MAR, and MNAR settings compared with existing diffusion-based and classical methods. These results demonstrate the importance of structure-aware diffusion processes for advancing deep learning approaches to incomplete tabular data.

Multi-Scale Attention and Gated Shifting for Fine-Grained Event Spotting in Videos

Jul 10, 2025

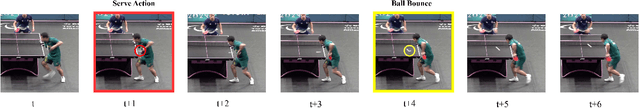

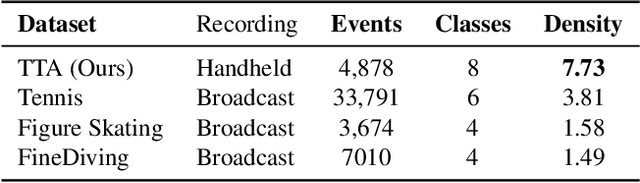

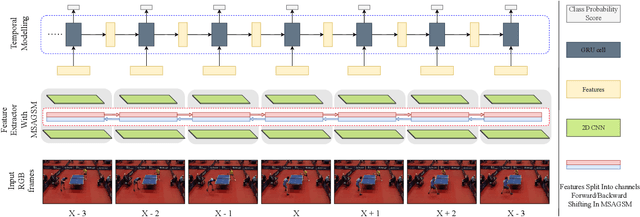

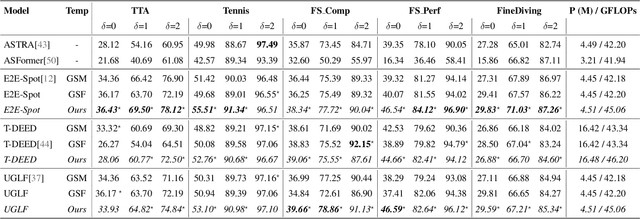

Abstract: Precise Event Spotting (PES) in sports videos requires frame-level recognition of fine-grained actions from single-camera footage. Existing PES models typically incorporate lightweight temporal modules such as Gate Shift Module (GSM) or Gate Shift Fuse (GSF) to enrich 2D CNN feature extractors with temporal context. However, these modules are limited in both temporal receptive field and spatial adaptability. We propose a Multi-Scale Attention Gate Shift Module (MSAGSM) that enhances GSM with multi-scale temporal dilations and multi-head spatial attention, enabling efficient modeling of both short- and long-term dependencies while focusing on salient regions. MSAGSM is a lightweight plug-and-play module that can be easily integrated with various 2D backbones. To further advance the field, we introduce the Table Tennis Australia (TTA) dataset, the first PES benchmark for table tennis, containing over 4800 precisely annotated events. Extensive experiments across five PES benchmarks demonstrate that MSAGSM consistently improves performance with minimal overhead, setting new state-of-the-art results.

Far From Sight, Far From Mind: Inverse Distance Weighting for Graph Federated Recommendation

Jul 02, 2025

Abstract: Graph federated recommendation systems offer a privacy-preserving alternative to traditional centralized recommendation architectures, which often raise concerns about data security. While federated learning enables personalized recommendations without exposing raw user data, existing aggregation methods overlook the unique properties of user embeddings in this setting. Indeed, traditional aggregation methods fail to account for their complexity and the critical role of user similarity in recommendation effectiveness. Moreover, evolving user interactions require adaptive aggregation while preserving the influence of high-relevance anchor users (the primary users before expansion in graph-based frameworks). To address these limitations, we introduce Dist-FedAvg, a novel distance-based aggregation method designed to enhance personalization and aggregation efficiency in graph federated learning. Our method assigns higher aggregation weights to users with similar embeddings, while ensuring that anchor users retain significant influence in local updates. Empirical evaluations on multiple datasets demonstrate that Dist-FedAvg consistently outperforms baseline aggregation techniques, improving recommendation accuracy while maintaining seamless integration into existing federated learning frameworks.
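To make the idea of distance-based aggregation concrete, the sketch below weights neighbouring users by the inverse of their embedding distance to an anchor user while reserving a fixed share of the weight for the anchor. It is only an illustration of generic inverse distance weighting: the function names, the `anchor_weight` parameter, the Euclidean distance, and the choice to aggregate the embeddings themselves are assumptions for this example and may differ from the actual Dist-FedAvg rule.

```python
import numpy as np

def inverse_distance_weights(anchor, neighbours, anchor_weight=0.5, eps=1e-8):
    """Weights for neighbours, proportional to 1 / distance to the anchor.

    The neighbour weights sum to (1 - anchor_weight), so the anchor keeps
    a fixed share of influence (hypothetical parameter for this sketch).
    """
    dists = np.linalg.norm(neighbours - anchor, axis=1)
    inv = 1.0 / (dists + eps)  # eps avoids division by zero for identical embeddings
    return (1.0 - anchor_weight) * inv / inv.sum()

def aggregate(anchor, neighbours, anchor_weight=0.5):
    """Convex combination of the anchor and its distance-weighted neighbours."""
    w = inverse_distance_weights(anchor, neighbours, anchor_weight)
    return anchor_weight * anchor + w @ neighbours

anchor = np.array([0.0, 0.0])
neighbours = np.array([[1.0, 0.0],   # distance 1: closer, larger weight
                       [0.0, 2.0]])  # distance 2: farther, smaller weight
print(inverse_distance_weights(anchor, neighbours))
print(aggregate(anchor, neighbours))
```

Closer users dominate the update, and raising `anchor_weight` makes the result stay nearer to the anchor's own embedding.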

Navigating loss manifolds via rigid body dynamics: A promising avenue for robustness and generalisation

May 26, 2025

Abstract: Training large neural networks through gradient-based optimization requires navigating high-dimensional loss landscapes, which often exhibit pathological geometry, leading to undesirable training dynamics. In particular, poor generalization frequently results from convergence to sharp minima that are highly sensitive to input perturbations, causing the model to overfit the training data while failing to generalize to unseen examples. Furthermore, these optimization procedures typically display strong dependence on the fine structure of the loss landscape, leading to unstable training dynamics due to the fractal-like nature of the loss surface. In this work, we propose an alternative optimizer that simultaneously reduces this dependence and avoids sharp minima, thereby improving generalization. This is achieved by simulating the motion of the center of a ball rolling on the loss landscape. The degree to which our optimizer departs from standard gradient descent is controlled by a hyperparameter representing the radius of the ball. Changing this hyperparameter allows for probing the loss landscape at different scales, making it a valuable tool for understanding its geometry.

A Comprehensive Analysis of Large Language Model Outputs: Similarity, Diversity, and Bias

May 14, 2025

Abstract: Large Language Models (LLMs) represent a major step toward artificial general intelligence, significantly advancing our ability to interact with technology. While LLMs perform well on Natural Language Processing tasks such as translation, generation, code writing, and summarization, questions remain about their output similarity, variability, and ethical implications. For instance, how similar are texts generated by the same model? How does this compare across different models? And which models best uphold ethical standards? To investigate, we used 5,000 prompts spanning diverse tasks like generation, explanation, and rewriting. This resulted in approximately 3 million texts from 12 LLMs, including proprietary and open-source systems from OpenAI, Google, Microsoft, Meta, and Mistral. Key findings include: (1) outputs from the same LLM are more similar to each other than to human-written texts; (2) models like WizardLM-2-8x22b generate highly similar outputs, while GPT-4 produces more varied responses; (3) LLM writing styles differ significantly, with Llama 3 and Mistral showing higher similarity, and GPT-4 standing out for distinctiveness; (4) differences in vocabulary and tone underscore the linguistic uniqueness of LLM-generated content; (5) some LLMs demonstrate greater gender balance and reduced bias. These results offer new insights into the behavior and diversity of LLM outputs, helping guide future development and ethical evaluation.

Action Spotting and Precise Event Detection in Sports: Datasets, Methods, and Challenges

May 06, 2025

Abstract: Video event detection has become an essential component of sports analytics, enabling automated identification of key moments and enhancing performance analysis, viewer engagement, and broadcast efficiency. Recent advancements in deep learning, particularly Convolutional Neural Networks (CNNs) and Transformers, have significantly improved accuracy and efficiency in Temporal Action Localization (TAL), Action Spotting (AS), and Precise Event Spotting (PES). This survey provides a comprehensive overview of these three key tasks, emphasizing their differences, applications, and the evolution of methodological approaches. We thoroughly review and categorize existing datasets and evaluation metrics specifically tailored for sports contexts, highlighting the strengths and limitations of each. Furthermore, we analyze state-of-the-art techniques, including multi-modal approaches that integrate audio and visual information, methods utilizing self-supervised learning and knowledge distillation, and approaches aimed at generalizing across multiple sports. Finally, we discuss critical open challenges and outline promising research directions toward developing more generalized, efficient, and robust event detection frameworks applicable to diverse sports. This survey serves as a foundation for future research on efficient, generalizable, and multi-modal sports event detection.

Enforcing Consistency and Fairness in Multi-level Hierarchical Classification with a Mask-based Output Layer

Mar 19, 2025

Abstract: Traditional Multi-level Hierarchical Classification (MLHC) classifiers often rely on backbone models with $n$ independent output layers. This structure tends to overlook the hierarchical relationships between classes, leading to inconsistent predictions that violate the underlying taxonomy. Additionally, once a backbone architecture for an MLHC classifier is selected, adapting the model to accommodate new tasks can be challenging. For example, incorporating fairness to protect sensitive attributes within a hierarchical classifier necessitates complex adjustments to maintain the class hierarchy while enforcing fairness constraints. In this paper, we extend this concept to hierarchical classification by introducing a fair, model-agnostic layer designed to enforce taxonomy and optimize specific objectives, including consistency, fairness, and exact match. Our evaluations demonstrate that the proposed layer not only improves the fairness of predictions but also enforces the taxonomy, resulting in consistent predictions and superior performance. Compared to Large Language Models (LLMs) employing in-processing de-biasing techniques and models without any bias correction, our approach achieves better outcomes in both fairness and accuracy, making it particularly valuable in sectors like e-commerce, healthcare, and education, where predictive reliability is crucial.

Developing robust methods to handle missing data in real-world applications effectively

Feb 28, 2025

Abstract: Missing data is a pervasive challenge spanning diverse data types, including tabular data, sensor data, time series, and images. Its origins are multifaceted, resulting in various missingness mechanisms. Prior research in this field has predominantly revolved around the assumption of the Missing Completely At Random (MCAR) mechanism. However, Missing At Random (MAR) and Missing Not At Random (MNAR) mechanisms, though equally prevalent, have often remained underexplored despite their significant influence. This PhD project presents a comprehensive research agenda designed to investigate the implications of diverse missing data mechanisms. The principal aim is to devise robust methodologies capable of effectively handling missing data while accommodating the unique characteristics of MCAR, MAR, and MNAR mechanisms. By addressing these gaps, this research contributes to an enriched understanding of the challenges posed by missing data across various industries and data modalities. It seeks to provide practical solutions that enable the effective management of missing data, empowering researchers and practitioners to leverage incomplete datasets confidently.
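The difference between the three mechanisms is easiest to see by simulating them. In the sketch below, MCAR drops values with a constant probability, MAR makes the probability depend on a different, always-observed column, and MNAR makes it depend on the value that goes missing. The column names (`age`, `income`), the logistic link, and the helper names are illustrative assumptions, not part of the project described above.

```python
import numpy as np

def _scaled_probs(driver, rate):
    """Per-row missingness probabilities that increase with `driver`
    and average to roughly `rate` (logistic link, assumed for illustration)."""
    z = (driver - driver.mean()) / (driver.std() + 1e-12)
    p = 1.0 / (1.0 + np.exp(-z))
    return np.clip(p * rate / p.mean(), 0.0, 1.0)

def mcar_mask(n, rate, rng):
    """MCAR: every entry is missing with the same probability."""
    return rng.random(n) < rate

def mar_mask(observed_covariate, rate, rng):
    """MAR: missingness depends only on another, fully observed column."""
    return rng.random(observed_covariate.shape[0]) < _scaled_probs(observed_covariate, rate)

def mnar_mask(target, rate, rng):
    """MNAR: missingness depends on the (unobserved) value itself."""
    return rng.random(target.shape[0]) < _scaled_probs(target, rate)

rng = np.random.default_rng(0)
age = rng.normal(40, 10, size=10_000)      # always observed
income = rng.normal(50, 15, size=10_000)   # column that receives missing values

masks = {
    "MCAR": mcar_mask(income.shape[0], 0.3, rng),
    "MAR": mar_mask(age, 0.3, rng),
    "MNAR": mnar_mask(income, 0.3, rng),
}
for name, mask in masks.items():
    # Under MNAR the observed mean of income is biased downward.
    print(name, round(mask.mean(), 3), round(income[~mask].mean(), 2))
```

Comparing the mean of the remaining `income` values across the three masks shows why methods tuned under MCAR can be misleading when the true mechanism is MNAR.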

Unmasking Gender Bias in Recommendation Systems and Enhancing Category-Aware Fairness

Feb 25, 2025

Abstract: Recommendation systems are now an integral part of our daily lives. We rely on them for tasks such as discovering new movies, finding friends on social media, and connecting job seekers with relevant opportunities. Given their vital role, we must ensure these recommendations are free from societal stereotypes. Therefore, evaluating and addressing such biases in recommendation systems is crucial. Previous work evaluating the fairness of recommended items fails to capture certain nuances, as it mainly focuses on comparing performance metrics for different sensitive groups. In this paper, we introduce a set of comprehensive metrics for quantifying gender bias in recommendations. Specifically, we show the importance of evaluating fairness on a more granular level, which can be achieved using our metrics to capture gender bias using categories of recommended items like genres for movies. Furthermore, we show that employing a category-aware fairness metric as a regularization term along with the main recommendation loss during training can help effectively minimize bias in the models' output. We experiment on three real-world datasets, using five baseline models alongside two popular fairness-aware models, to show the effectiveness of our metrics in evaluating gender bias. Our metrics help provide enhanced insight into bias in recommended items compared to previous metrics. Additionally, our results demonstrate how incorporating our regularization term significantly improves the fairness in recommendations for different categories without substantial degradation in overall recommendation performance.
