Mengyao Zhu

The CCF AATC 2025: Speech Restoration Challenge

Sep 16, 2025

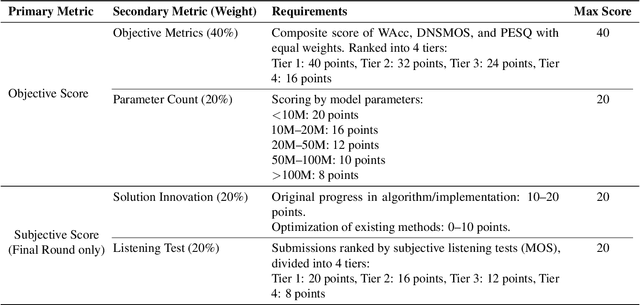

Abstract: Real-world speech communication is often hampered by a variety of distortions that degrade quality and intelligibility. While many speech enhancement algorithms target specific degradations like noise or reverberation, they often fall short in realistic scenarios where multiple distortions co-exist and interact. To spur research in this area, we introduce the Speech Restoration Challenge as part of the China Computer Federation (CCF) Advanced Audio Technology Competition (AATC) 2025. This challenge focuses on restoring speech signals affected by a composite of three degradation types: (1) complex acoustic degradations including non-stationary noise and reverberation; (2) signal-chain artifacts such as those from MP3 compression; and (3) secondary artifacts introduced by other pre-processing enhancement models. We describe the challenge's background, the design of the task, the comprehensive dataset creation methodology, and the detailed evaluation protocol, which assesses both objective performance and model complexity. Homepage: https://ccf-aatc.org.cn/.

End-to-End Model for Speech Enhancement by Consistent Spectrogram Masking

Jan 02, 2019

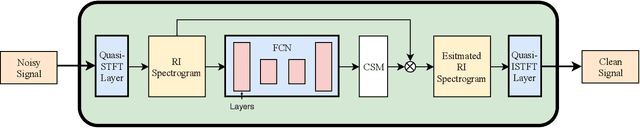

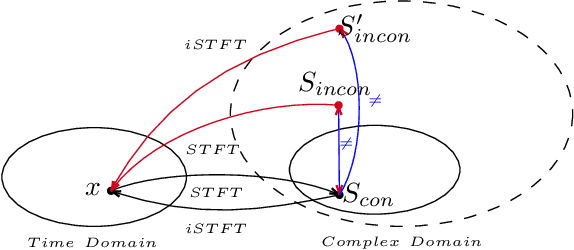

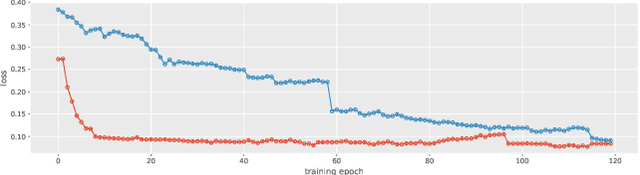

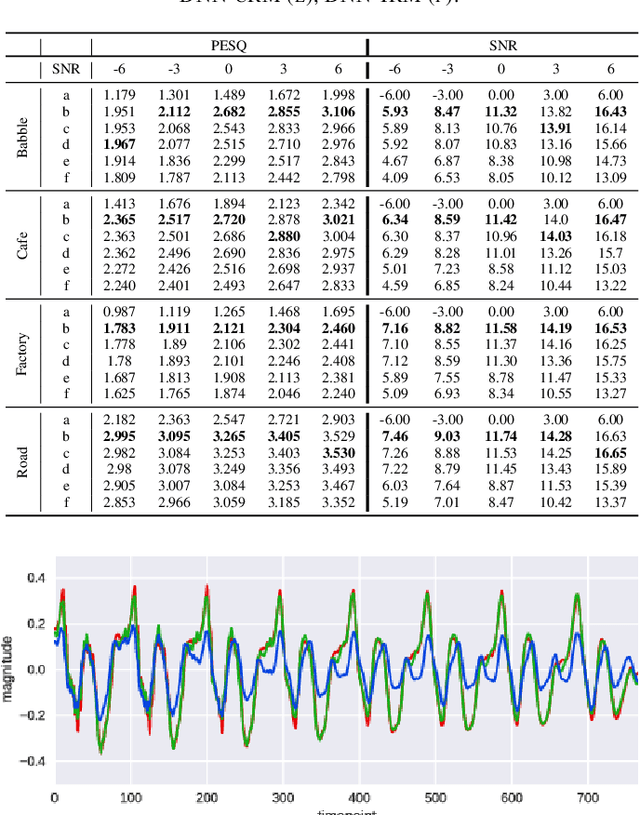

Abstract: Recently, phase processing is attracting increasing interest in the speech enhancement community. Some researchers integrate phase estimation modules into speech enhancement models by using complex-valued short-time Fourier transform (STFT) spectrogram based training targets, e.g. Complex Ratio Mask (cRM) [1]. However, masking on a spectrogram would violate its consistency constraints. In this work, we prove that the inconsistency problem enlarges the solution space of the speech enhancement model and causes unintended artifacts. Consistency Spectrogram Masking (CSM) is proposed to estimate the complex spectrogram of a signal with the consistency constraint in a simple but not trivial way. Experiments comparing our CSM based end-to-end model with other methods are conducted to confirm that CSM accelerates model training and yields significant improvements in speech quality. From our experimental results, we assured that our method could enha
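The consistency constraint mentioned in this abstract can be illustrated numerically. The sketch below is not the paper's CSM method, whose details are not given here. It only shows, under assumed settings (a NumPy-only STFT with a periodic Hann window, `n_fft=64`, `hop=16`, a synthetic tone plus noise, and a random real-valued mask), that masking an STFT generally produces an array that is not the STFT of any time-domain signal, and that passing it through an inverse STFT followed by an STFT yields a consistent spectrogram. All function names are hypothetical.

```python
import numpy as np

N_FFT, HOP = 64, 16  # assumed analysis settings, chosen only for this demo


def stft(x, n_fft=N_FFT, hop=HOP):
    """Plain STFT: Hann-windowed frames followed by a real FFT."""
    window = np.hanning(n_fft + 1)[:-1]  # periodic Hann window
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack(
        [x[i * hop:i * hop + n_fft] * window for i in range(n_frames)]
    )
    return np.fft.rfft(frames, axis=1)


def istft(X, n_fft=N_FFT, hop=HOP):
    """Least-squares inverse STFT (windowed overlap-add, normalized)."""
    window = np.hanning(n_fft + 1)[:-1]
    n_frames = X.shape[0]
    length = n_fft + hop * (n_frames - 1)
    x = np.zeros(length)
    norm = np.zeros(length)
    frames = np.fft.irfft(X, n=n_fft, axis=1)
    for i in range(n_frames):
        x[i * hop:i * hop + n_fft] += frames[i] * window
        norm[i * hop:i * hop + n_fft] += window ** 2
    return x / np.maximum(norm, 1e-12)


def project(X):
    """Map an arbitrary complex array onto the set of consistent spectrograms."""
    return stft(istft(X))


def inconsistency(X):
    """Relative distance between X and its consistent projection."""
    return np.linalg.norm(X - project(X)) / np.linalg.norm(X)


rng = np.random.default_rng(0)
t = np.arange(1024) / 16000.0
clean = np.sin(2 * np.pi * 440.0 * t)                # synthetic stand-in for speech
noisy = clean + 0.3 * rng.standard_normal(len(t))

Y = stft(noisy)
mask = rng.uniform(0.0, 1.0, size=Y.shape)           # arbitrary real-valued mask
masked = mask * Y                                    # generally inconsistent

err_true_stft = inconsistency(Y)                     # STFT of a real signal
err_masked = inconsistency(masked)                   # after masking
err_projected = inconsistency(project(masked))       # after re-projection

print("STFT of a signal :", err_true_stft)
print("masked STFT      :", err_masked)
print("projected masked :", err_projected)
```

The first and third values should be at the level of floating-point round-off, while the masked spectrogram shows a clearly non-zero residual. That residual is the inconsistency the abstract refers to when it says masking enlarges the solution space.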
