Matthew B. Dwyer

LabelAny3D: Label Any Object 3D in the Wild

Jan 04, 2026Abstract:Detecting objects in 3D space from monocular input is crucial for applications ranging from robotics to scene understanding. Despite advanced performance in the indoor and autonomous driving domains, existing monocular 3D detection models struggle with in-the-wild images due to the lack of 3D in-the-wild datasets and the challenges of 3D annotation. We introduce LabelAny3D, an \emph{analysis-by-synthesis} framework that reconstructs holistic 3D scenes from 2D images to efficiently produce high-quality 3D bounding box annotations. Built on this pipeline, we present COCO3D, a new benchmark for open-vocabulary monocular 3D detection, derived from the MS-COCO dataset and covering a wide range of object categories absent from existing 3D datasets. Experiments show that annotations generated by LabelAny3D improve monocular 3D detection performance across multiple benchmarks, outperforming prior auto-labeling approaches in quality. These results demonstrate the promise of foundation-model-driven annotation for scaling up 3D recognition in realistic, open-world settings.

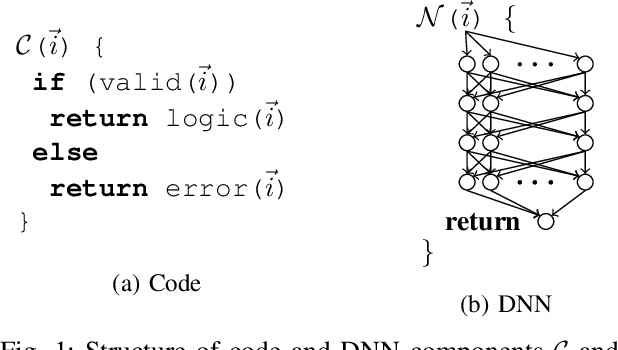

RBR4DNN: Requirements-based Testing of Neural Networks

Apr 03, 2025

Abstract:Deep neural network (DNN) testing is crucial for the reliability and safety of critical systems, where failures can have severe consequences. Although various techniques have been developed to create robustness test suites, requirements-based testing for DNNs remains largely unexplored -- yet such tests are recognized as an essential component of software validation of critical systems. In this work, we propose a requirements-based test suite generation method that uses structured natural language requirements formulated in a semantic feature space to create test suites by prompting text-conditional latent diffusion models with the requirement precondition and then using the associated postcondition to define a test oracle to judge outputs of the DNN under test. We investigate the approach using fine-tuned variants of pre-trained generative models. Our experiments on the MNIST, CelebA-HQ, ImageNet, and autonomous car driving datasets demonstrate that the generated test suites are realistic, diverse, consistent with preconditions, and capable of revealing faults.

Quantitative Predictive Monitoring and Control for Safe Human-Machine Interaction

Dec 17, 2024

Abstract:There is a growing trend toward AI systems interacting with humans to revolutionize a range of application domains such as healthcare and transportation. However, unsafe human-machine interaction can lead to catastrophic failures. We propose a novel approach that predicts future states by accounting for the uncertainty of human interaction, monitors whether predictions satisfy or violate safety requirements, and adapts control actions based on the predictive monitoring results. Specifically, we develop a new quantitative predictive monitor based on Signal Temporal Logic with Uncertainty (STL-U) to compute a robustness degree interval, which indicates the extent to which a sequence of uncertain predictions satisfies or violates an STL-U requirement. We also develop a new loss function to guide the uncertainty calibration of Bayesian deep learning and a new adaptive control method, both of which leverage STL-U quantitative predictive monitoring results. We apply the proposed approach to two case studies: Type 1 Diabetes management and semi-autonomous driving. Experiments show that the proposed approach improves safety and effectiveness in both case studies.

Measuring Feature Dependency of Neural Networks by Collapsing Feature Dimensions in the Data Manifold

Apr 18, 2024

Abstract:This paper introduces a new technique to measure the feature dependency of neural network models. The motivation is to better understand a model by querying whether it is using information from human-understandable features, e.g., anatomical shape, volume, or image texture. Our method is based on the principle that if a model is dependent on a feature, then removal of that feature should significantly harm its performance. A targeted feature is "removed" by collapsing the dimension in the data distribution that corresponds to that feature. We perform this by moving data points along the feature dimension to a baseline feature value while staying on the data manifold, as estimated by a deep generative model. Then we observe how the model's performance changes on the modified test data set, with the target feature dimension removed. We test our method on deep neural network models trained on synthetic image data with known ground truth, an Alzheimer's disease prediction task using MRI and hippocampus segmentations from the OASIS-3 dataset, and a cell nuclei classification task using the Lizard dataset.

Harnessing Neuron Stability to Improve DNN Verification

Jan 19, 2024

Abstract:Deep Neural Networks (DNN) have emerged as an effective approach to tackling real-world problems. However, like human-written software, DNNs are susceptible to bugs and attacks. This has generated significant interests in developing effective and scalable DNN verification techniques and tools. In this paper, we present VeriStable, a novel extension of recently proposed DPLL-based constraint DNN verification approach. VeriStable leverages the insight that while neuron behavior may be non-linear across the entire DNN input space, at intermediate states computed during verification many neurons may be constrained to have linear behavior - these neurons are stable. Efficiently detecting stable neurons reduces combinatorial complexity without compromising the precision of abstractions. Moreover, the structure of clauses arising in DNN verification problems shares important characteristics with industrial SAT benchmarks. We adapt and incorporate multi-threading and restart optimizations targeting those characteristics to further optimize DPLL-based DNN verification. We evaluate the effectiveness of VeriStable across a range of challenging benchmarks including fully-connected feedforward networks (FNNs), convolutional neural networks (CNNs) and residual networks (ResNets) applied to the standard MNIST and CIFAR datasets. Preliminary results show that VeriStable is competitive and outperforms state-of-the-art DNN verification tools, including $\alpha$-$\beta$-CROWN and MN-BaB, the first and second performers of the VNN-COMP, respectively.

PCV: A Point Cloud-Based Network Verifier

Jan 30, 2023

Abstract:3D vision with real-time LiDAR-based point cloud data became a vital part of autonomous system research, especially perception and prediction modules use for object classification, segmentation, and detection. Despite their success, point cloud-based network models are vulnerable to multiple adversarial attacks, where the certain factor of changes in the validation set causes significant performance drop in well-trained networks. Most of the existing verifiers work perfectly on 2D convolution. Due to complex architecture, dimension of hyper-parameter, and 3D convolution, no verifiers can perform the basic layer-wise verification. It is difficult to conclude the robustness of a 3D vision model without performing the verification. Because there will be always corner cases and adversarial input that can compromise the model's effectiveness. In this project, we describe a point cloud-based network verifier that successfully deals state of the art 3D classifier PointNet verifies the robustness by generating adversarial inputs. We have used extracted properties from the trained PointNet and changed certain factors for perturbation input. We calculate the impact on model accuracy versus property factor and can test PointNet network's robustness against a small collection of perturbing input states resulting from adversarial attacks like the suggested hybrid reverse signed attack. The experimental results reveal that the resilience property of PointNet is affected by our hybrid reverse signed perturbation strategy

White-box Testing of NLP models with Mask Neuron Coverage

May 10, 2022

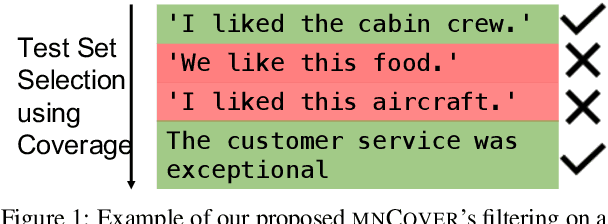

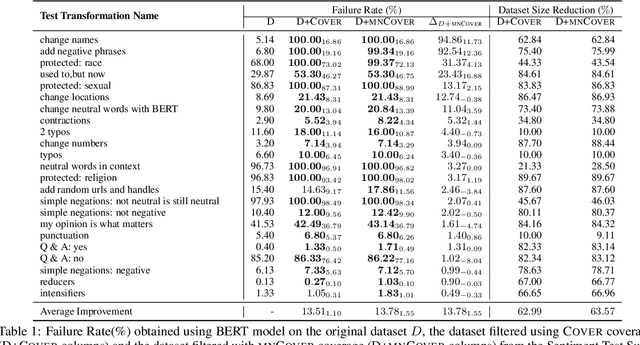

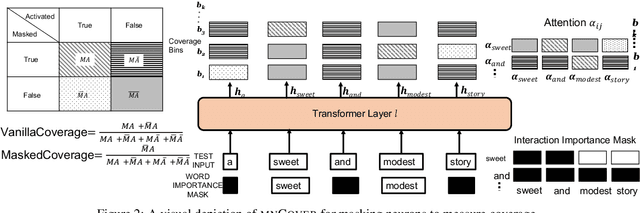

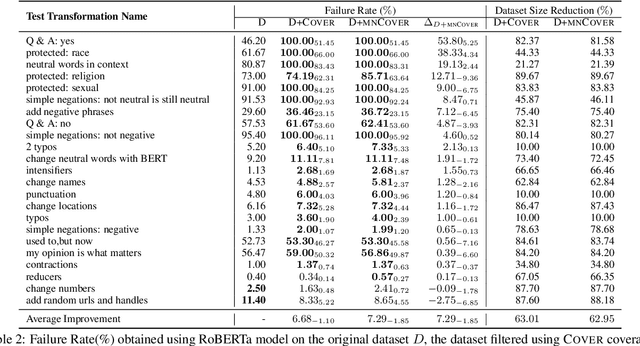

Abstract:Recent literature has seen growing interest in using black-box strategies like CheckList for testing the behavior of NLP models. Research on white-box testing has developed a number of methods for evaluating how thoroughly the internal behavior of deep models is tested, but they are not applicable to NLP models. We propose a set of white-box testing methods that are customized for transformer-based NLP models. These include Mask Neuron Coverage (MNCOVER) that measures how thoroughly the attention layers in models are exercised during testing. We show that MNCOVER can refine testing suites generated by CheckList by substantially reduce them in size, for more than 60\% on average, while retaining failing tests -- thereby concentrating the fault detection power of the test suite. Further we show how MNCOVER can be used to guide CheckList input generation, evaluate alternative NLP testing methods, and drive data augmentation to improve accuracy.

* Findings of NAACL 2022 submission, 12 pages

DNNV: A Framework for Deep Neural Network Verification

May 26, 2021

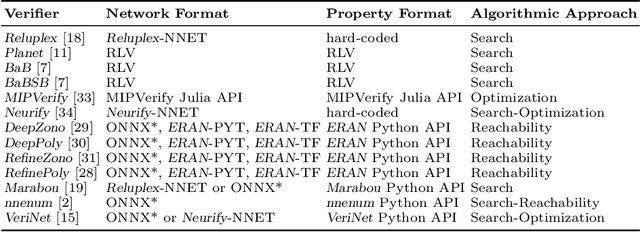

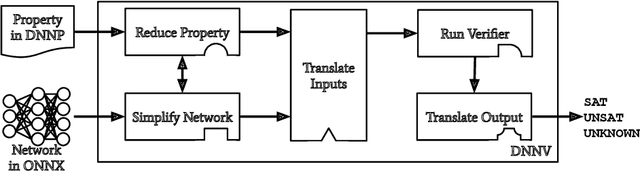

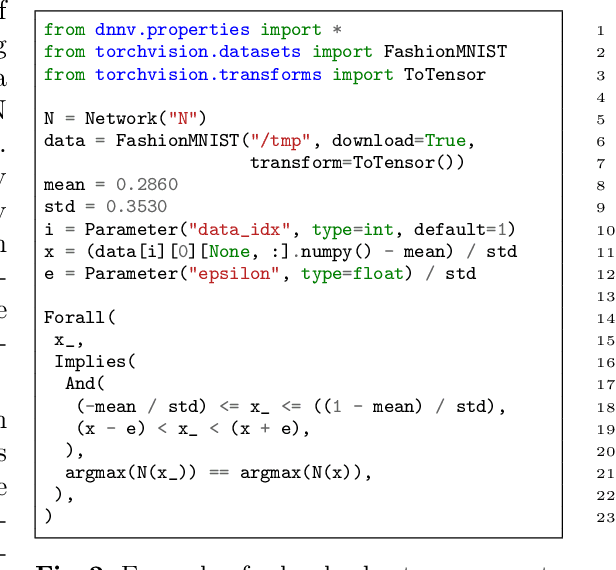

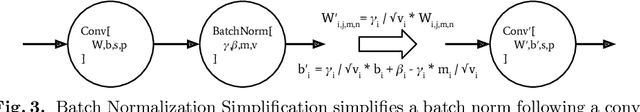

Abstract:Despite the large number of sophisticated deep neural network (DNN) verification algorithms, DNN verifier developers, users, and researchers still face several challenges. First, verifier developers must contend with the rapidly changing DNN field to support new DNN operations and property types. Second, verifier users have the burden of selecting a verifier input format to specify their problem. Due to the many input formats, this decision can greatly restrict the verifiers that a user may run. Finally, researchers face difficulties in re-using benchmarks to evaluate and compare verifiers, due to the large number of input formats required to run different verifiers. Existing benchmarks are rarely in formats supported by verifiers other than the one for which the benchmark was introduced. In this work we present DNNV, a framework for reducing the burden on DNN verifier researchers, developers, and users. DNNV standardizes input and output formats, includes a simple yet expressive DSL for specifying DNN properties, and provides powerful simplification and reduction operations to facilitate the application, development, and comparison of DNN verifiers. We show how DNNV increases the support of verifiers for existing benchmarks from 30% to 74%.

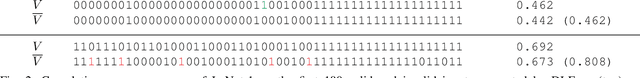

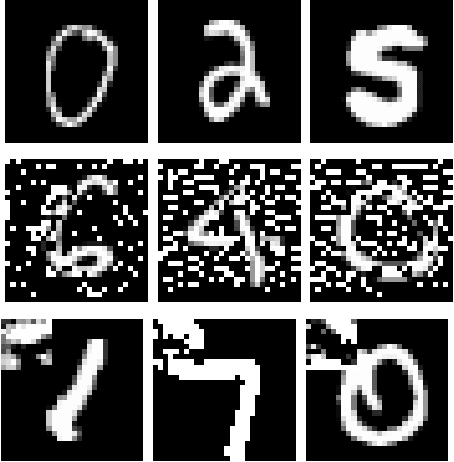

Distribution-Aware Testing of Neural Networks Using Generative Models

Feb 26, 2021

Abstract:The reliability of software that has a Deep Neural Network (DNN) as a component is urgently important today given the increasing number of critical applications being deployed with DNNs. The need for reliability raises a need for rigorous testing of the safety and trustworthiness of these systems. In the last few years, there have been a number of research efforts focused on testing DNNs. However the test generation techniques proposed so far lack a check to determine whether the test inputs they are generating are valid, and thus invalid inputs are produced. To illustrate this situation, we explored three recent DNN testing techniques. Using deep generative model based input validation, we show that all the three techniques generate significant number of invalid test inputs. We further analyzed the test coverage achieved by the test inputs generated by the DNN testing techniques and showed how invalid test inputs can falsely inflate test coverage metrics. To overcome the inclusion of invalid inputs in testing, we propose a technique to incorporate the valid input space of the DNN model under test in the test generation process. Our technique uses a deep generative model-based algorithm to generate only valid inputs. Results of our empirical studies show that our technique is effective in eliminating invalid tests and boosting the number of valid test inputs generated.

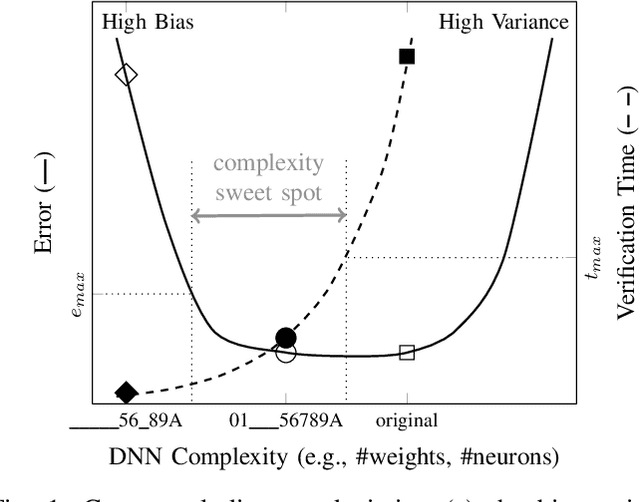

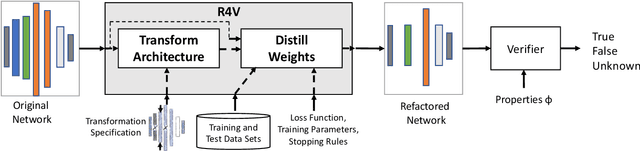

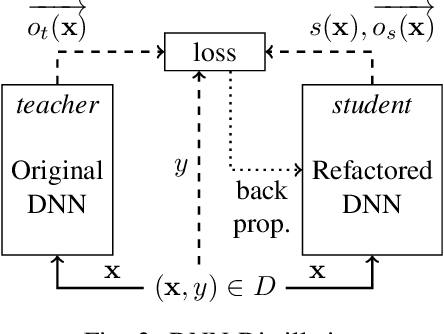

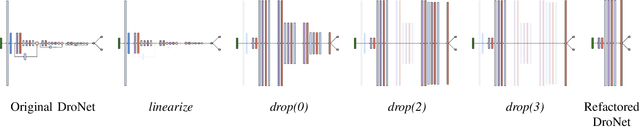

Refactoring Neural Networks for Verification

Aug 06, 2019

Abstract:Deep neural networks (DNN) are growing in capability and applicability. Their effectiveness has led to their use in safety critical and autonomous systems, yet there is a dearth of cost-effective methods available for reasoning about the behavior of a DNN. In this paper, we seek to expand the applicability and scalability of existing DNN verification techniques through DNN refactoring. A DNN refactoring defines (a) the transformation of the DNN's architecture, i.e., the number and size of its layers, and (b) the distillation of the learned relationships between the input features and function outputs of the original to train the transformed network. Unlike with traditional code refactoring, DNN refactoring does not guarantee functional equivalence of the two networks, but rather it aims to preserve the accuracy of the original network while producing a simpler network that is amenable to more efficient property verification. We present an automated framework for DNN refactoring, and demonstrate its potential effectiveness through three case studies on networks used in autonomous systems.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge