Ke Zhang

Senior Member, IEEE

ShapePU: A New PU Learning Framework Regularized by Global Consistency for Scribble Supervised Cardiac Segmentation

Jun 05, 2022

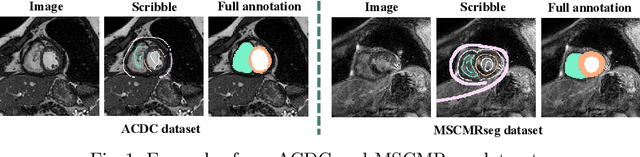

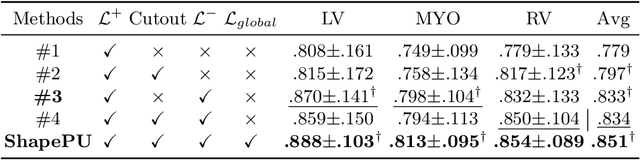

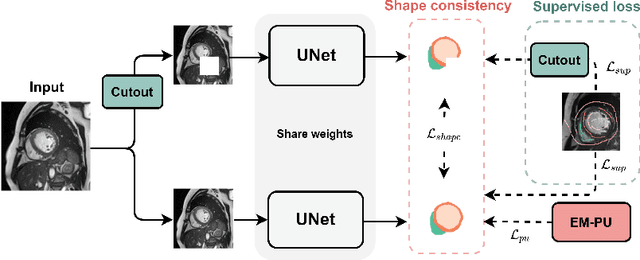

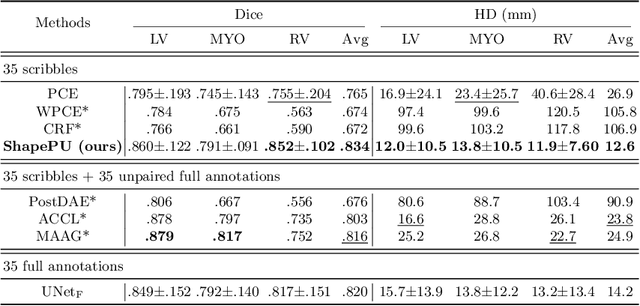

Abstract:Cardiac segmentation is an essential step for the diagnosis of cardiovascular diseases. However, pixel-wise dense labeling is both costly and time-consuming. Scribble, as a form of sparse annotation, is more accessible than full annotations. However, it is particularly challenging to train a segmentation network with weak supervision from scribbles. To tackle this problem, we propose a new scribble-guided method for cardiac segmentation, based on the Positive-Unlabeled (PU) learning framework and global consistency regularization, termed ShapePU. To leverage unlabeled pixels via PU learning, we first present an Expectation-Maximization (EM) algorithm to estimate the proportion of each class in the unlabeled pixels. Given the estimated ratios, we then introduce marginal probability maximization to identify the classes of unlabeled pixels. To exploit shape knowledge, we apply cutout operations to training images and penalize inconsistent segmentation results. Evaluated on two open datasets, i.e., ACDC and MSCMRseg, our scribble-supervised ShapePU surpassed the fully supervised approach by 1.4% and 9.8% in average Dice, respectively, and outperformed the state-of-the-art weakly supervised and PU learning methods by large margins. Our code is available at https://github.com/BWGZK/ShapePU.
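The cutout-based consistency idea in the abstract above can be illustrated with a small sketch. It is not the authors' implementation: `toy_model` is a hypothetical stand-in for a segmentation network, and the mean squared difference used as the penalty is an assumption, since the abstract only states that inconsistent results are penalized. The sketch compares the prediction on a cutout image with the cutout of the prediction on the original image.

```python
def cutout(grid, top, left, size, fill=0.0):
    """Return a copy of a 2D grid with a size x size square set to `fill`."""
    out = [row[:] for row in grid]
    for r in range(top, min(top + size, len(grid))):
        for c in range(left, min(left + size, len(grid[0]))):
            out[r][c] = fill
    return out


def toy_model(image):
    """Hypothetical stand-in for a network: each output is the mean of a
    pixel and its horizontal neighbours, read as a foreground probability."""
    probs = []
    for row in image:
        n = len(row)
        out_row = []
        for c in range(n):
            window = row[max(c - 1, 0):min(c + 2, n)]
            out_row.append(sum(window) / len(window))
        probs.append(out_row)
    return probs


def consistency_loss(image, top, left, size):
    """Mean squared difference between model(cutout(x)) and cutout(model(x)).
    The squared-error form is an assumption made for this sketch."""
    pred_of_cut = toy_model(cutout(image, top, left, size))
    cut_of_pred = cutout(toy_model(image), top, left, size)
    diffs = [
        (a - b) ** 2
        for row_a, row_b in zip(pred_of_cut, cut_of_pred)
        for a, b in zip(row_a, row_b)
    ]
    return sum(diffs) / len(diffs)


if __name__ == "__main__":
    image = [[1.0] * 4 for _ in range(4)]
    # A non-zero value means the two orders of operations disagree.
    print(consistency_loss(image, top=1, left=1, size=2))
```

Because the toy model mixes neighbouring pixels, masking the input changes predictions around the masked square, so the two orders of operations disagree and the penalty is positive; a model whose output commuted with the cutout would incur zero penalty.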

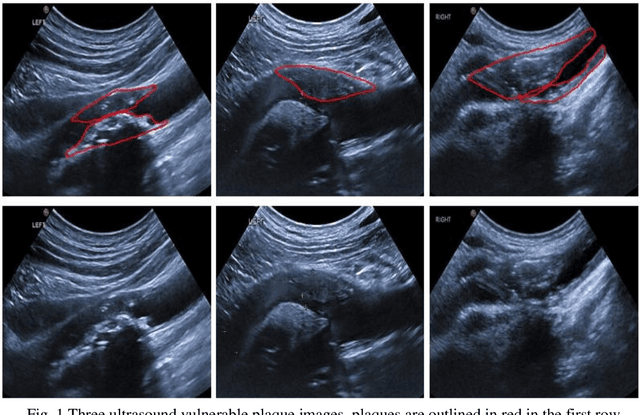

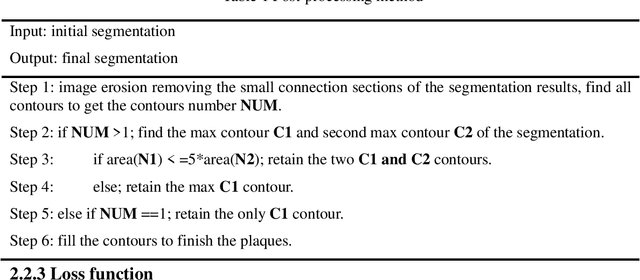

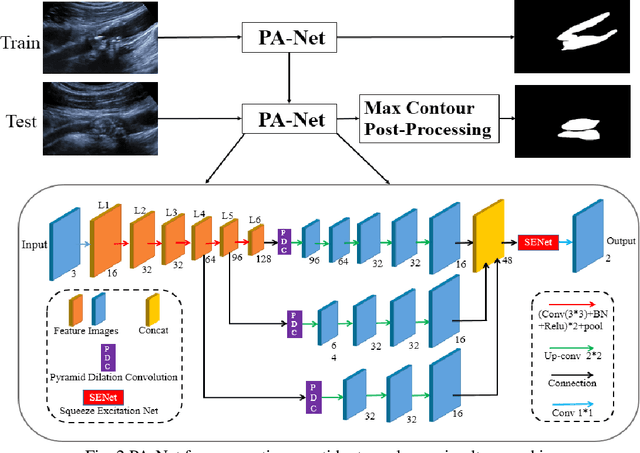

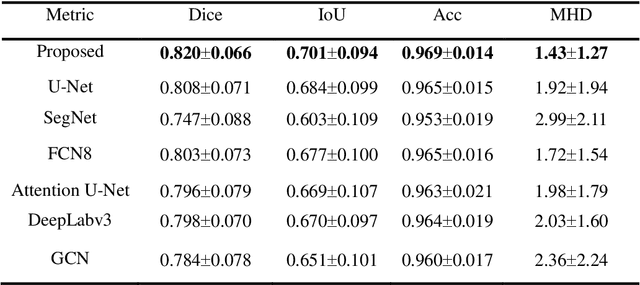

Parallel Network with Channel Attention and Post-Processing for Carotid Arteries Vulnerable Plaque Segmentation in Ultrasound Images

Apr 18, 2022

Abstract:Vulnerable plaques in the carotid arteries are a crucial factor in ultrasound screening for atherosclerosis. However, the plaques are contaminated by various kinds of noise, such as artifacts and speckle noise, and manual segmentation can be time-consuming. This paper proposes an automatic convolutional neural network (CNN) method for plaque segmentation in carotid ultrasound images using a small dataset. First, a parallel network with three independent scale decoders is used as the base segmentation network, and pyramid dilation convolutions are used to enlarge the receptive fields in the three segmentation sub-networks. Second, the outputs of the three decoders are merged and rectified channel-wise by SENet. Third, in the test stage, the initially segmented plaque is refined by max-contour morphology post-processing to obtain the final plaque. Moreover, three loss functions, Dice loss, SSIM loss, and cross-entropy loss, are compared for plaque segmentation. Test results show that the proposed method with the Dice loss function yields a Dice value of 0.820, an IoU of 0.701, an Acc of 0.969, and a modified Hausdorff distance (MHD) of 1.43 for 30 vulnerable plaque cases, outperforming some conventional CNN-based methods on these metrics. Additionally, we conduct an ablation experiment to show the validity of each proposed module. Our study provides a reference for similar research and may be useful in practical applications of plaque segmentation in carotid ultrasound images.
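For readers unfamiliar with the overlap metrics reported above, the following is a minimal sketch of how Dice and IoU are commonly computed for a pair of binary masks. The function name `dice_and_iou` and the convention of returning 1.0 when both masks are empty are assumptions for illustration; the abstract does not describe the paper's evaluation code.

```python
def dice_and_iou(pred, target):
    """Compute Dice and IoU for two flat binary masks (sequences of 0/1)."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same length")
    intersection = sum(p * t for p, t in zip(pred, target))
    pred_area = sum(pred)
    target_area = sum(target)
    union = pred_area + target_area - intersection
    if union == 0:
        # Both masks are empty: treated as perfect agreement (an assumption).
        return 1.0, 1.0
    dice = 2.0 * intersection / (pred_area + target_area)
    iou = intersection / union
    return dice, iou


if __name__ == "__main__":
    predicted = [1, 1, 0, 0]
    reference = [1, 0, 1, 0]
    print(dice_and_iou(predicted, reference))
```

For a single pair of masks the two scores are linked by Dice = 2·IoU / (1 + IoU); averages over many cases need not satisfy this identity exactly.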

InsCon:Instance Consistency Feature Representation via Self-Supervised Learning

Mar 15, 2022

Abstract:Feature representation via self-supervised learning has reached remarkable success in image-level contrastive learning, which brings impressive performance on image classification tasks. While image-level feature representation mainly focuses on contrastive learning in a single instance, it ignores the objective differences between pretext and downstream prediction tasks such as object detection and instance segmentation. In order to fully unleash the power of feature representation on downstream prediction tasks, we propose a new end-to-end self-supervised framework called InsCon, which is devoted to capturing multi-instance information and extracting cell-instance features for object recognition and localization. On the one hand, InsCon builds a targeted learning paradigm that applies multi-instance images as input, aligning the learned feature between corresponding instance views, which makes it more appropriate for multi-instance recognition tasks. On the other hand, InsCon introduces the pull and push of cell-instance, which utilizes cell consistency to enhance fine-grained feature representation for precise boundary localization. As a result, InsCon learns multi-instance consistency on semantic feature representation and cell-instance consistency on spatial feature representation. Experiments demonstrate that the proposed method surpasses MoCo v2 by 1.1% AP^{bb} on COCO object detection and 1.0% AP^{mk} on COCO instance segmentation using the Mask R-CNN R50-FPN network structure with 90k iterations, and by 2.1% AP^{bb} on PASCAL VOC object detection using the Faster R-CNN R50-C4 network structure with 24k iterations.

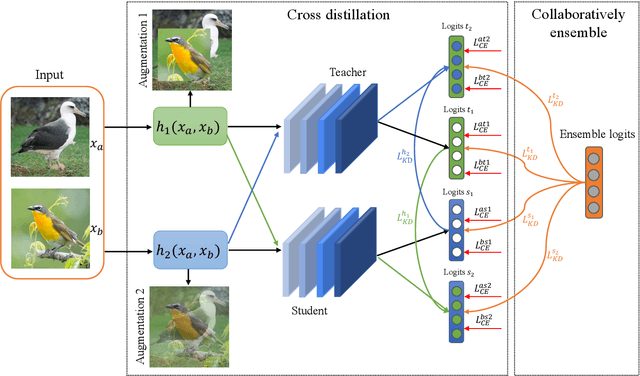

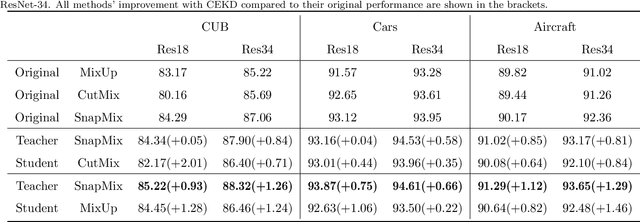

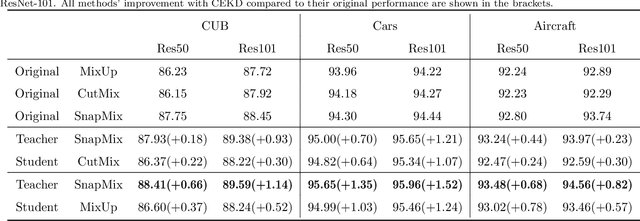

CEKD:Cross Ensemble Knowledge Distillation for Augmented Fine-grained Data

Mar 13, 2022

Abstract:Data augmentation has been proved effective in training deep models. Existing data augmentation methods tackle the fine-grained problem by blending image pairs and fusing corresponding labels according to the statistics of mixed pixels, which produces additional noise harmful to the performance of networks. Motivated by this, we present a simple yet effective cross ensemble knowledge distillation (CEKD) model for fine-grained feature learning. We innovatively propose a cross distillation module to provide additional supervision to alleviate the noise problem, and propose a collaborative ensemble module to overcome the target conflict problem. The proposed model can be trained in an end-to-end manner, and only requires image-level label supervision. Extensive experiments on widely used fine-grained benchmarks demonstrate the effectiveness of our proposed model. Specifically, with the backbone of ResNet-101, CEKD obtains the accuracy of 89.59%, 95.96% and 94.56% in three datasets respectively, outperforming state-of-the-art API-Net by 0.99%, 1.06% and 1.16%.

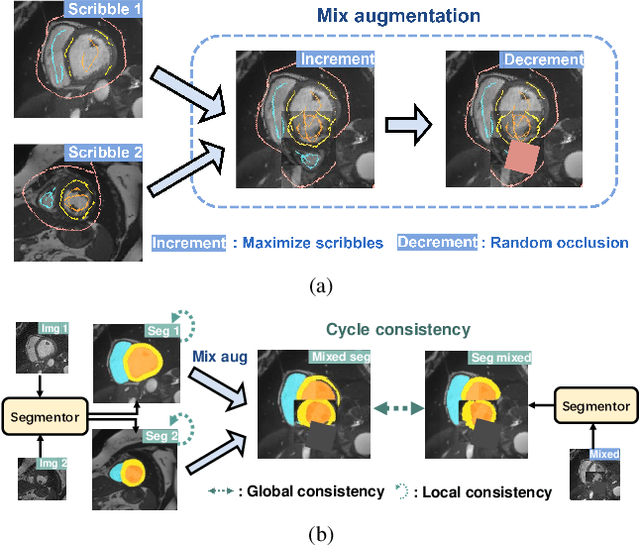

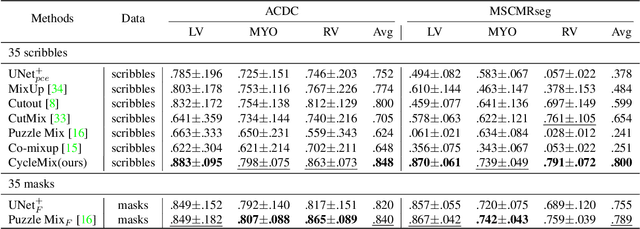

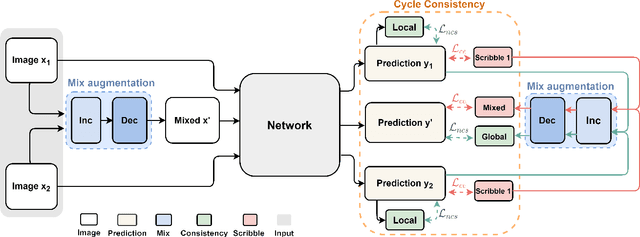

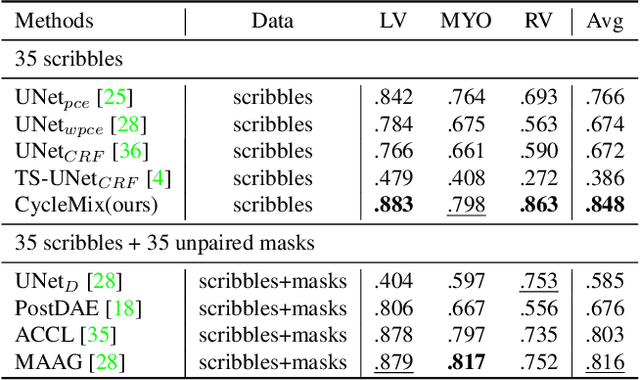

CycleMix: A Holistic Strategy for Medical Image Segmentation from Scribble Supervision

Mar 12, 2022

Abstract:Curating a large set of fully annotated training data can be costly, especially for the tasks of medical image segmentation. Scribble, a weaker form of annotation, is more obtainable in practice, but training segmentation models from limited supervision of scribbles is still challenging. To address the difficulties, we propose a new framework for scribble learning-based medical image segmentation, which is composed of mix augmentation and cycle consistency and thus is referred to as CycleMix. For augmentation of supervision, CycleMix adopts the mixup strategy with a dedicated design of random occlusion, to perform increments and decrements of scribbles. For regularization of supervision, CycleMix intensifies the training objective with consistency losses to penalize inconsistent segmentation, which results in significant improvement of segmentation performance. Results on two open datasets, i.e., ACDC and MSCMRseg, showed that the proposed method achieved exhilarating performance, demonstrating comparable or even better accuracy than the fully-supervised methods. The code and expert-made scribble annotations for MSCMRseg are publicly available at https://github.com/BWGZK/CycleMix.

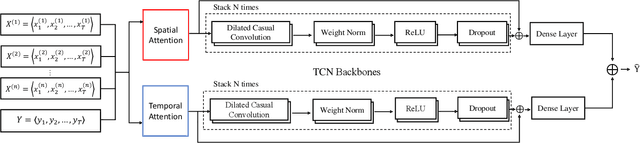

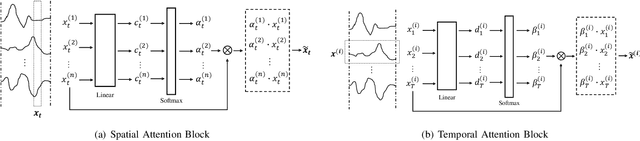

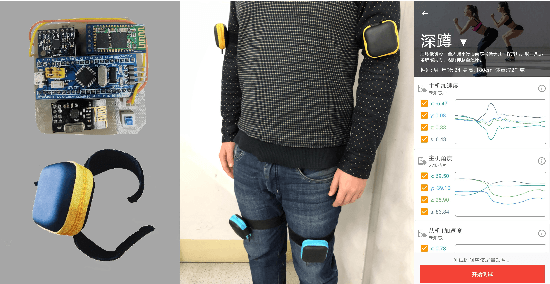

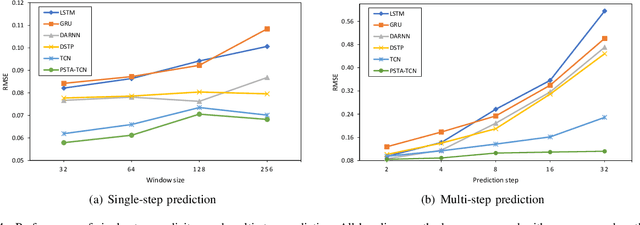

Parallel Spatio-Temporal Attention-Based TCN for Multivariate Time Series Prediction

Mar 02, 2022

Abstract:As industrial systems become more complex and monitoring sensors for everything from surveillance to our health become more ubiquitous, multivariate time series prediction is taking an important place in the smooth running of our society. A recurrent neural network with attention to help extend the prediction windows is the current state of the art for this task. However, we argue that their vanishing gradients, short memories, and serial architecture make RNNs fundamentally unsuited to long-horizon forecasting with complex data. Temporal convolutional networks (TCNs) do not suffer from gradient problems and they support parallel calculations, making them a more appropriate choice. Additionally, they have longer memories than RNNs, albeit with some instability and efficiency problems. Hence, we propose a framework, called PSTA-TCN, that combines a parallel spatio-temporal attention mechanism to extract dynamic internal correlations with stacked TCN backbones to extract features from different window sizes. The framework makes full use of parallel calculations to dramatically reduce training times, while substantially increasing accuracy with stable prediction windows up to 13 times longer than the status quo.

Virtual Adversarial Training for Semi-supervised Breast Mass Classification

Jan 25, 2022

Abstract:This study aims to develop a novel computer-aided diagnosis (CAD) scheme for mammographic breast mass classification using semi-supervised learning. Although supervised deep learning has achieved huge success across various medical image analysis tasks, its success relies on large amounts of high-quality annotations, which can be challenging to acquire in practice. To overcome this limitation, we propose employing a semi-supervised method, i.e., virtual adversarial training (VAT), to leverage and learn useful information underlying in unlabeled data for better classification of breast masses. Accordingly, our VAT-based models have two types of losses, namely supervised and virtual adversarial losses. The former loss acts as in supervised classification, while the latter loss aims at enhancing model robustness against virtual adversarial perturbation, thus improving model generalizability. To evaluate the performance of our VAT-based CAD scheme, we retrospectively assembled a total of 1024 breast mass images, with an equal number of benign and malignant masses. A large CNN and a small CNN were used in this investigation, and both were trained with and without the adversarial loss. When the labeled ratios were 40% and 80%, VAT-based CNNs delivered the highest classification accuracy of 0.740 and 0.760, respectively. The experimental results suggest that the VAT-based CAD scheme can effectively utilize meaningful knowledge from unlabeled data to better classify mammographic breast mass images.

TriCoLo: Trimodal Contrastive Loss for Fine-grained Text to Shape Retrieval

Jan 19, 2022

Abstract:Recent work on contrastive losses for learning joint embeddings over multimodal data has been successful at downstream tasks such as retrieval and classification. On the other hand, work on joint representation learning for 3D shapes and text has thus far mostly focused on improving embeddings through modeling of complex attention between representations, or multi-task learning. We show that with large batch contrastive learning we achieve SoTA on text-shape retrieval without complex attention mechanisms or losses. Prior work in 3D and text representations has also focused on bimodal representation learning using either voxels or multi-view images with text. To this end, we propose a trimodal learning scheme to achieve even higher performance and better representations for all modalities.

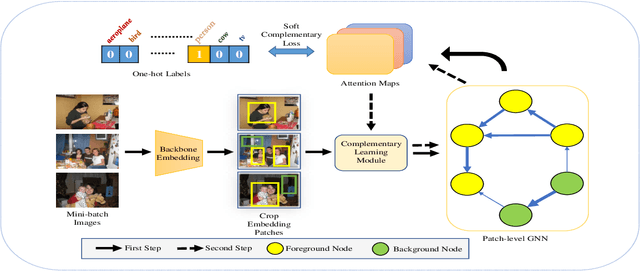

Maximize the Exploration of Congeneric Semantics for Weakly Supervised Semantic Segmentation

Oct 08, 2021

Abstract:With the increase in the number of image data and the lack of corresponding labels, weakly supervised learning has drawn a lot of attention recently in computer vision tasks, especially in the fine-grained semantic segmentation problem. To alleviate human efforts from expensive pixel-by-pixel annotations, our method focuses on weakly supervised semantic segmentation (WSSS) with image-level tags, which are much easier to obtain. As a huge gap exists between pixel-level segmentation and image-level labels, how to reflect the image-level semantic information on each pixel is an important question. To explore the congeneric semantic regions from the same class to the maximum, we construct the patch-level graph neural network (P-GNN) based on the self-detected patches from different images that contain the same class labels. Patches can frame the objects as much as possible and include as little background as possible. The graph network that is established with patches as the nodes can maximize the mutual learning of similar objects. We regard the embedding vectors of patches as nodes, and use transformer-based complementary learning module to construct weighted edges according to the embedding similarity between different nodes. Moreover, to better supplement semantic information, we propose soft-complementary loss functions matched with the whole network structure. We conduct experiments on the popular PASCAL VOC 2012 benchmarks, and our model yields state-of-the-art performance.

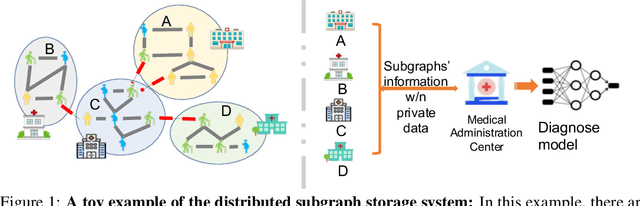

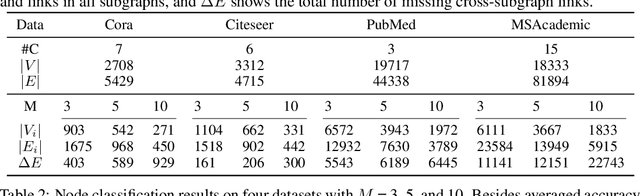

Subgraph Federated Learning with Missing Neighbor Generation

Jul 22, 2021

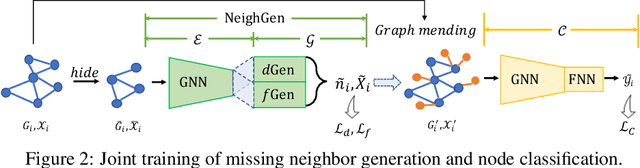

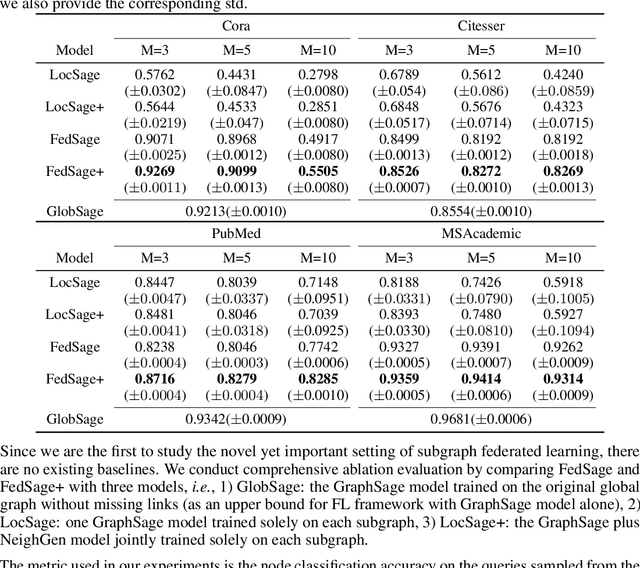

Abstract:Graphs have been widely used in data mining and machine learning due to their unique representation of real-world objects and their interactions. As graphs are getting bigger and bigger nowadays, it is common to see their subgraphs separately collected and stored in multiple local systems. Therefore, it is natural to consider the subgraph federated learning setting, where each local system holds a small subgraph that may be biased from the distribution of the whole graph. Hence, subgraph federated learning aims to collaboratively train a powerful and generalizable graph mining model without directly sharing the local graph data. In this work, towards the novel yet realistic setting of subgraph federated learning, we propose two major techniques: (1) FedSage, which trains a GraphSage model based on FedAvg to integrate node features, link structures, and task labels on multiple local subgraphs; (2) FedSage+, which trains a missing neighbor generator along FedSage to deal with missing links across local subgraphs. Empirical results on four real-world graph datasets with synthesized subgraph federated learning settings demonstrate the effectiveness and efficiency of our proposed techniques. At the same time, consistent theoretical implications are made towards their generalization ability on the global graphs.
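The abstract above builds on FedAvg, whose aggregation step averages client parameters weighted by local data size. The sketch below shows only that generic step on flat parameter lists; the function name `fed_avg` and the flat-list representation are assumptions for illustration, and how FedSage applies the step to GraphSage models is not detailed in the abstract.

```python
def fed_avg(client_params, client_sizes):
    """Average client parameter vectors, weighting each by its sample count.

    client_params: list of equal-length lists of floats, one per client.
    client_sizes:  list of positive sample counts, one per client.
    """
    if len(client_params) != len(client_sizes):
        raise ValueError("one size is required per client")
    total = sum(client_sizes)
    n_params = len(client_params[0])
    return [
        sum(params[i] * size for params, size in zip(client_params, client_sizes)) / total
        for i in range(n_params)
    ]


if __name__ == "__main__":
    # Two hypothetical clients holding 1 and 3 training samples.
    global_params = fed_avg([[1.0, 2.0], [3.0, 4.0]], [1, 3])
    print(global_params)
```

Weighting by sample count lets clients with more local data contribute proportionally more to the shared model, while only parameters, not raw graph data, leave each client.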
