Jiri Matas

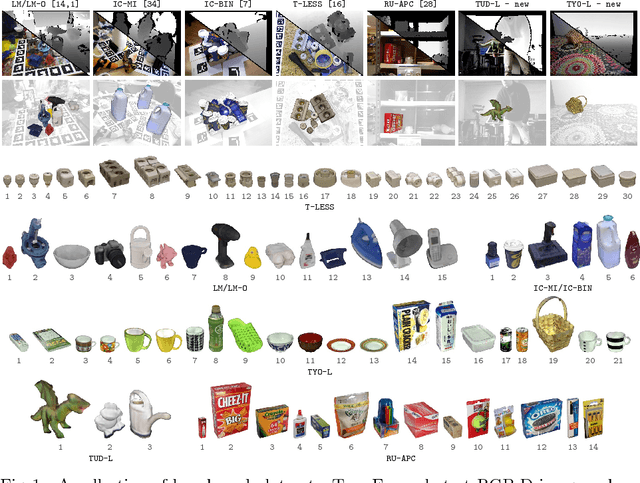

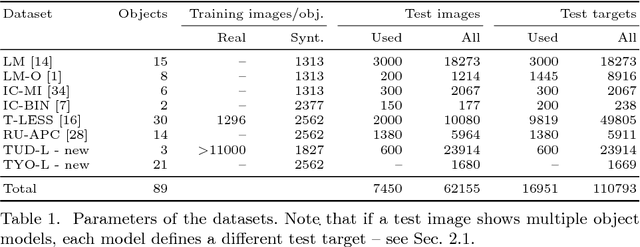

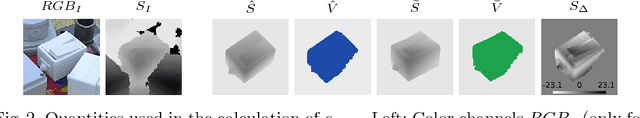

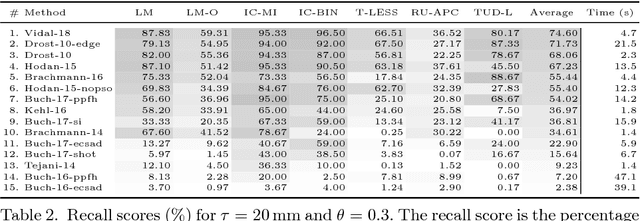

BOP: Benchmark for 6D Object Pose Estimation

Aug 24, 2018

Abstract: We propose a benchmark for 6D pose estimation of a rigid object from a single RGB-D input image. The training data consists of a texture-mapped 3D object model or images of the object in known 6D poses. The benchmark comprises: i) eight datasets in a unified format that cover different practical scenarios, including two new datasets focusing on varying lighting conditions, ii) an evaluation methodology with a pose-error function that deals with pose ambiguities, iii) a comprehensive evaluation of 15 diverse recent methods that captures the status quo of the field, and iv) an online evaluation system that is open for continuous submission of new results. The evaluation shows that methods based on point-pair features currently perform best, outperforming template matching methods, learning-based methods and methods based on 3D local features. The project website is available at bop.felk.cvut.cz.

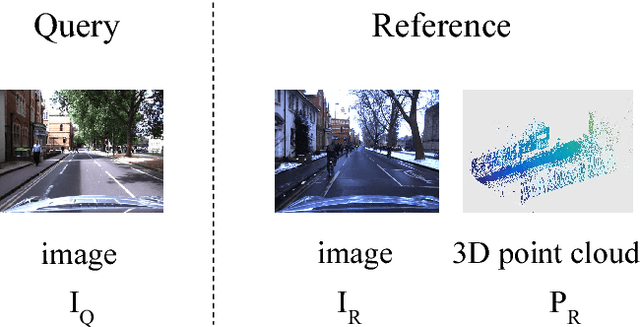

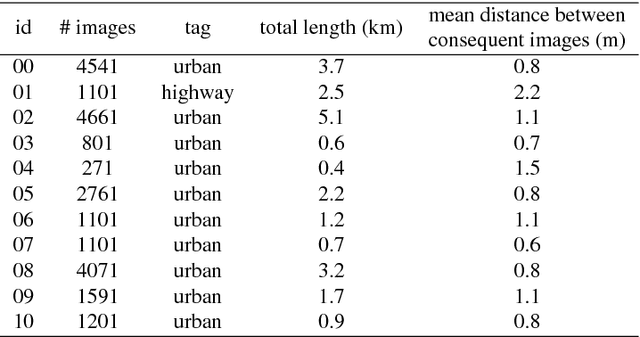

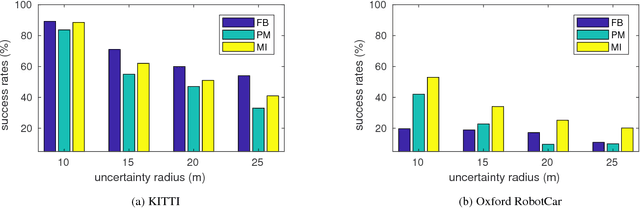

Performance Analysis and Robustification of Single-query 6-DoF Camera Pose Estimation

Aug 17, 2018

Abstract: We consider single-query 6-DoF camera pose estimation with reference images and a point cloud, i.e. the problem of estimating the position and orientation of a camera by using reference images and a point cloud. In this work, we perform a systematic comparison of three state-of-the-art strategies for 6-DoF camera pose estimation, i.e. feature-based, photometric-based and mutual-information-based approaches. The performance of the studied methods is evaluated on two standard datasets in terms of success rate, translation error and max orientation error. Building on the analysis of the results, we propose a hybrid approach that combines feature-based and mutual-information-based pose estimation methods, since they provide complementary properties for pose estimation. Experiments show that (1) in cases with large environmental variance, the hybrid approach outperforms feature-based and mutual-information-based approaches by an average of 25.1% and 5.8% in terms of success rate, respectively; (2) in cases where query and reference images are captured under similar imaging conditions, the hybrid approach performs similarly to the feature-based approach, but outperforms both photometric-based and mutual-information-based approaches by a clear margin; (3) the feature-based approach is consistently more accurate than mutual-information-based and photometric-based approaches when at least 4 consistent matching points are found between the query and reference images.
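To make the evaluation terms concrete, here is a minimal sketch of one common way to measure translation and orientation error between an estimated and a ground-truth camera pose. The function names, the helper `rot_z`, and the choice of the relative-rotation angle as the orientation metric are assumptions for illustration; the abstract does not specify how its "max orientation error" is defined.

```python
import math

def translation_error(t_est, t_gt):
    """Euclidean distance between estimated and ground-truth camera positions."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(t_est, t_gt)))

def rotation_error_deg(R_est, R_gt):
    """Angle in degrees of the relative rotation R_est^T * R_gt.

    Uses trace(R_est^T R_gt) = sum of element-wise products, and the
    identity trace(R) = 1 + 2*cos(angle) for a 3x3 rotation matrix.
    """
    trace = sum(R_est[i][j] * R_gt[i][j] for i in range(3) for j in range(3))
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))

def rot_z(deg):
    """Hypothetical helper: rotation about the z-axis by `deg` degrees."""
    a = math.radians(deg)
    return [[math.cos(a), -math.sin(a), 0.0],
            [math.sin(a), math.cos(a), 0.0],
            [0.0, 0.0, 1.0]]

if __name__ == "__main__":
    print(translation_error([0.0, 0.0, 0.0], [3.0, 4.0, 0.0]))
    print(rotation_error_deg(rot_z(0.0), rot_z(10.0)))
```

A success rate could then be defined as the fraction of queries whose two errors both fall under chosen thresholds; the thresholds themselves would be a further assumption.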

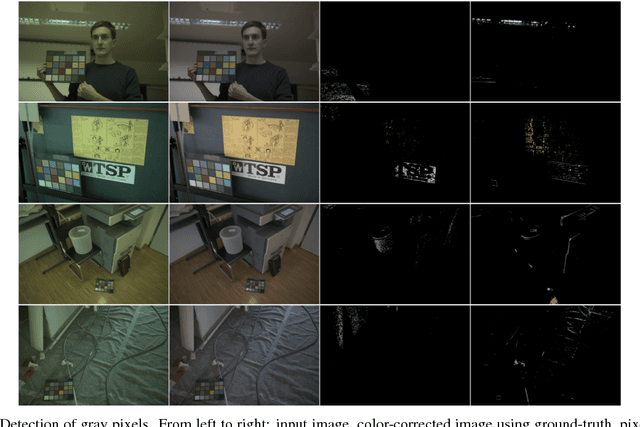

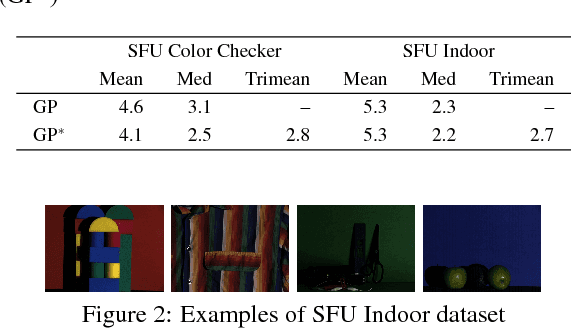

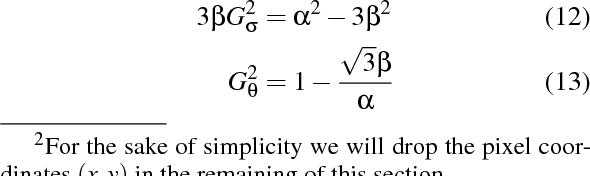

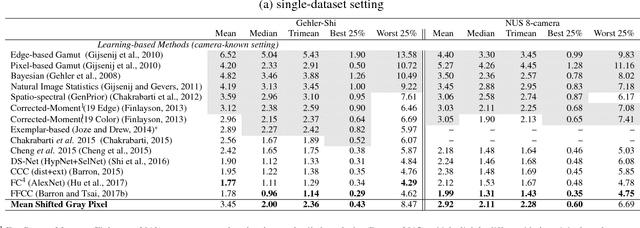

Dichromatic Gray Pixel for Camera-agnostic Color Constancy

Jul 05, 2018

Abstract: We present a statistical color constancy method that relies on novel gray pixel detection and mean shift clustering. The method, called Mean Shifted Grey Pixel -- MSGP -- is compact, easy to compute and requires no training. Experiments on different datasets show that the proposed approach outperforms state-of-the-art methods in the camera-agnostic scenario. In the setting where the camera is known, MSGP outperforms all statistical methods.

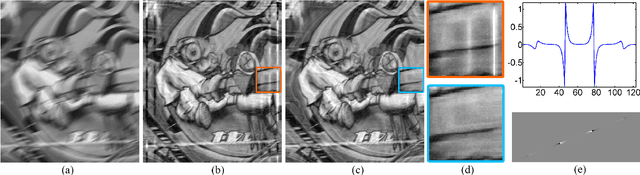

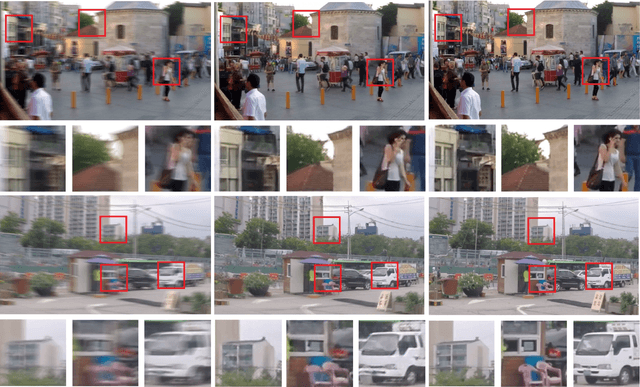

Fast Motion Deblurring for Feature Detection and Matching Using Inertial Measurements

May 22, 2018

Abstract: Many computer vision and image processing applications rely on local features. It is well known that motion blur decreases the performance of traditional feature detectors and descriptors. We propose an inertial-based deblurring method for improving the robustness of existing feature detectors and descriptors against motion blur. Unlike most deblurring algorithms, the method can handle spatially-variant blur and rolling shutter distortion. Furthermore, it is capable of running in real time, contrary to state-of-the-art algorithms. The limitations of inertial-based blur estimation are taken into account by validating the blur estimates using image data. The evaluation shows that when the method is used with a traditional feature detector and descriptor, it increases the number of detected keypoints, provides higher repeatability and improves the localization accuracy. We also demonstrate that such features lead to more accurate and complete reconstructions when used for 3D visual reconstruction.

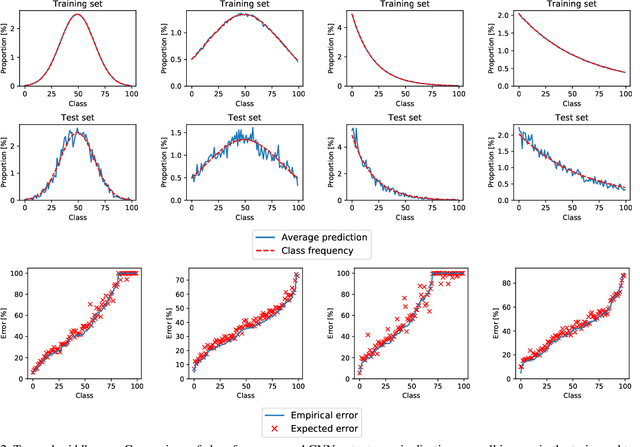

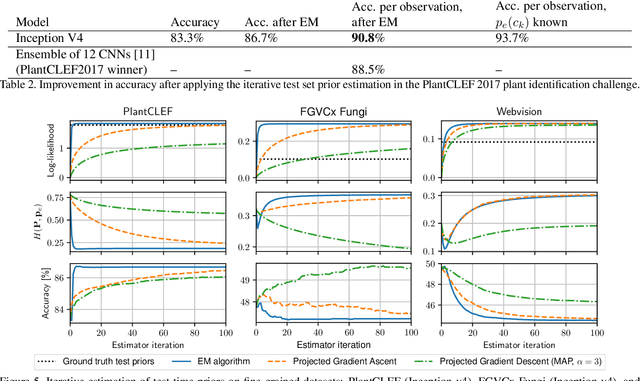

Improving CNN classifiers by estimating test-time priors

May 21, 2018

Abstract: The problem of different training and test set class priors is addressed in the context of CNN classifiers. An EM-based algorithm for test-time class priors estimation is evaluated on fine-grained computer vision problems for both the batch and on-line situations. Experimental results show a significant improvement on the fine-grained classification tasks using the known evaluation-time priors, increasing the top-1 accuracy by 4.0% on the FGVC iNaturalist 2018 validation set and by 3.9% on the FGVCx Fungi 2018 validation set. Iterative estimation of test-time priors on the PlantCLEF 2017 dataset increased the image classification accuracy by 3.4%, allowing a single CNN model to achieve state-of-the-art results and outperform the competition-winning ensemble of 12 CNNs.
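The idea of EM-based prior estimation can be sketched as follows. This is a minimal illustration in the style of the classic EM prior re-estimation procedure for classifier outputs; whether it matches the exact variant evaluated in the paper is an assumption, and the function names and the toy data are hypothetical.

```python
def estimate_test_priors(posteriors, train_priors, n_iter=100):
    """Estimate test-set class priors from classifier outputs.

    posteriors:   list of per-sample class-probability lists, as produced by
                  a classifier trained under `train_priors`.
    train_priors: class priors of the training set.
    """
    k = len(train_priors)
    priors = list(train_priors)  # start from the training priors
    for _ in range(n_iter):
        sums = [0.0] * k
        for p in posteriors:
            # E-step: reweight each posterior by current/training prior ratio.
            w = [p[c] * priors[c] / train_priors[c] for c in range(k)]
            z = sum(w)
            for c in range(k):
                sums[c] += w[c] / z
        # M-step: new priors are the mean of the reweighted posteriors.
        priors = [s / len(posteriors) for s in sums]
    return priors

def adjust_posterior(p, train_priors, test_priors):
    """Correct one posterior for a shift from training to test priors."""
    w = [p[c] * test_priors[c] / train_priors[c] for c in range(len(p))]
    z = sum(w)
    return [x / z for x in w]

if __name__ == "__main__":
    # Toy data: most test samples look like class 0.
    outputs = [[0.9, 0.1]] * 8 + [[0.2, 0.8]] * 2
    est = estimate_test_priors(outputs, [0.5, 0.5])
    print(est)
    print(adjust_posterior([0.5, 0.5], [0.5, 0.5], est))
```

The adjusted posteriors, rather than the raw network outputs, would then be used for the final class decision.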

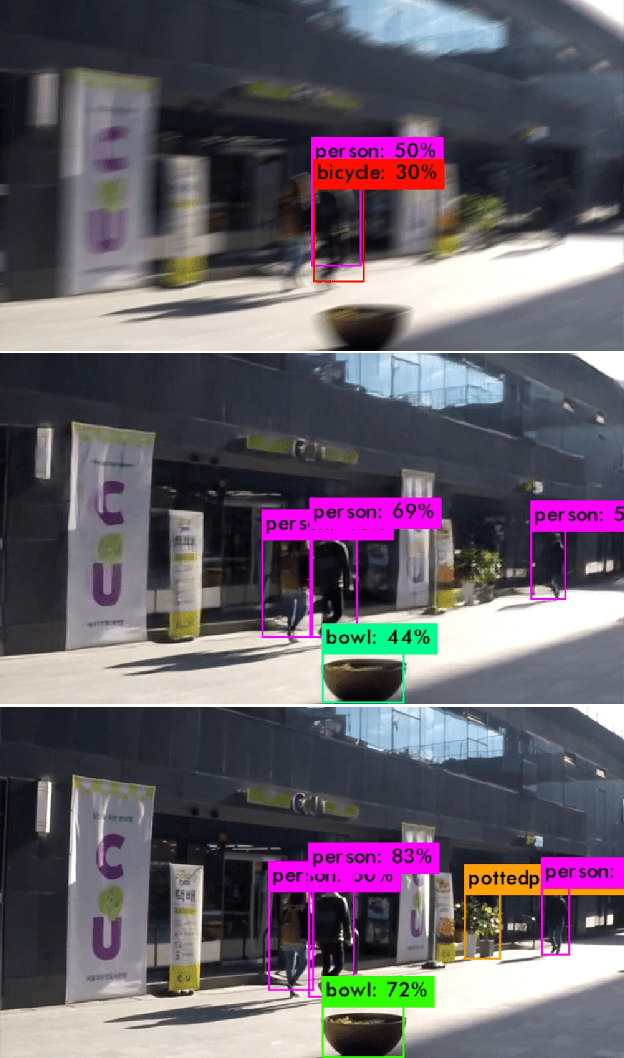

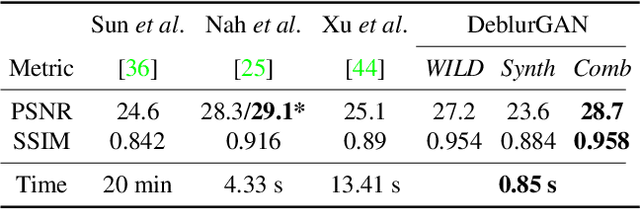

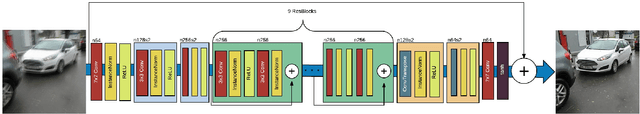

DeblurGAN: Blind Motion Deblurring Using Conditional Adversarial Networks

Apr 03, 2018

Abstract: We present DeblurGAN, an end-to-end learned method for motion deblurring. The learning is based on a conditional GAN and the content loss. DeblurGAN achieves state-of-the-art performance both in the structural similarity measure and visual appearance. The quality of the deblurring model is also evaluated in a novel way on a real-world problem -- object detection on (de-)blurred images. The method is 5 times faster than the closest competitor -- DeepDeblur. We also introduce a novel method for generating synthetic motion blurred images from sharp ones, allowing realistic dataset augmentation. The model, code and the dataset are available at https://github.com/KupynOrest/DeblurGAN

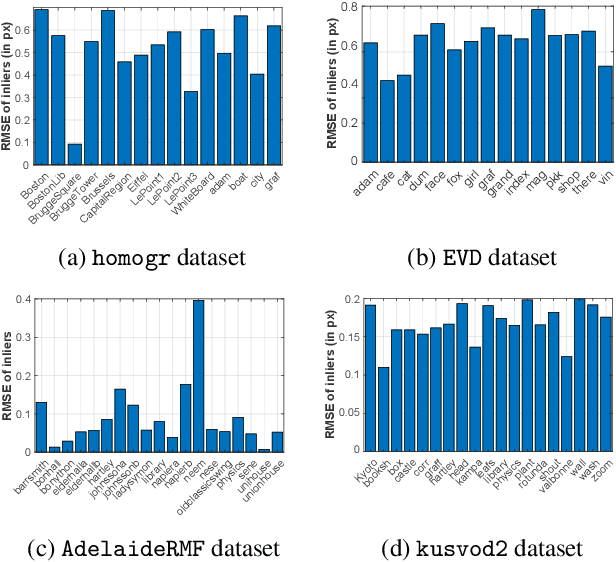

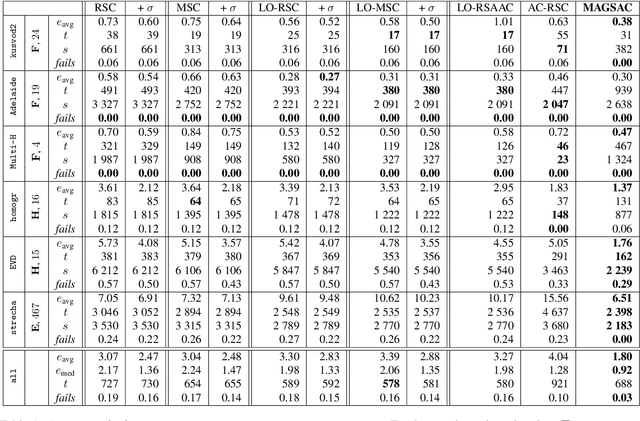

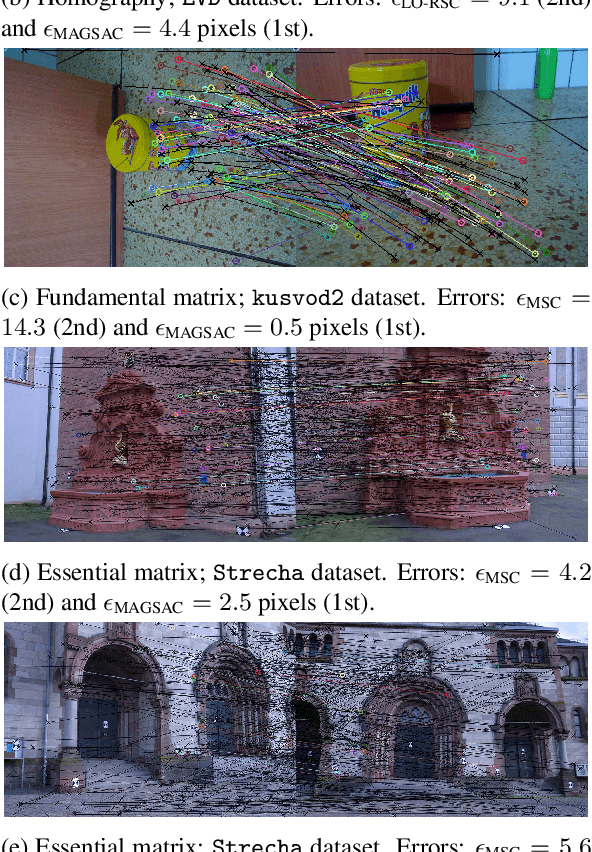

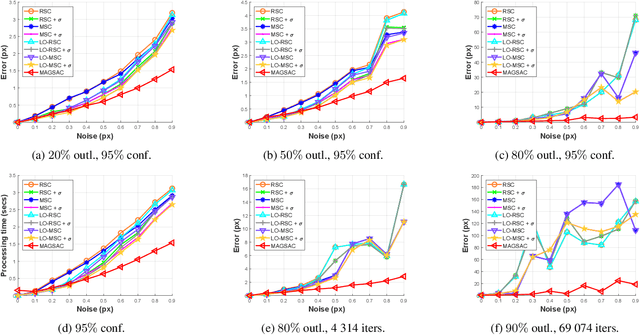

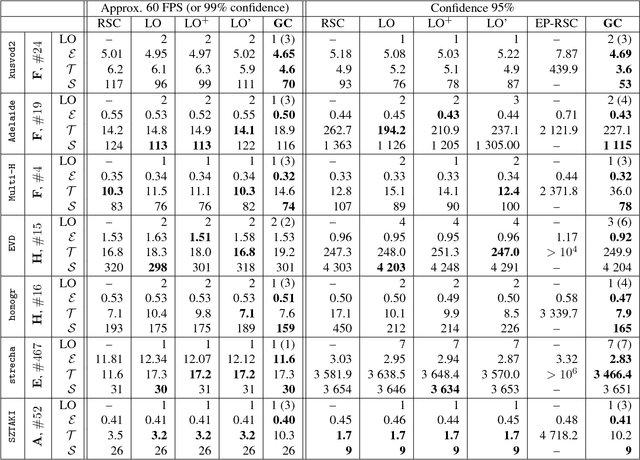

MAGSAC: marginalizing sample consensus

Mar 20, 2018

Abstract: A method called sigma-consensus is proposed to eliminate the need for a user-defined inlier-outlier threshold in RANSAC. Instead of estimating sigma, it is marginalized over a range of noise scales using a Bayesian estimator, i.e. the optimized model is obtained as the weighted average using the posterior probabilities as weights. Applying sigma-consensus, two methods are proposed: (i) a post-processing step which always improved the model quality on a wide range of vision problems without noticeable deterioration in processing time, i.e. at most 1-2 milliseconds; and (ii) a locally optimized RANSAC, called LO-MAGSAC, which incorporates sigma-consensus into the local optimization of LO-RANSAC. The method is superior to the state-of-the-art in terms of geometric accuracy on publicly available real world datasets for epipolar geometry (F and E), homography and affine transformation estimation.

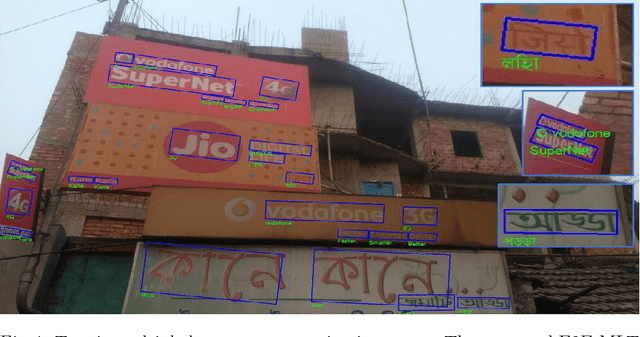

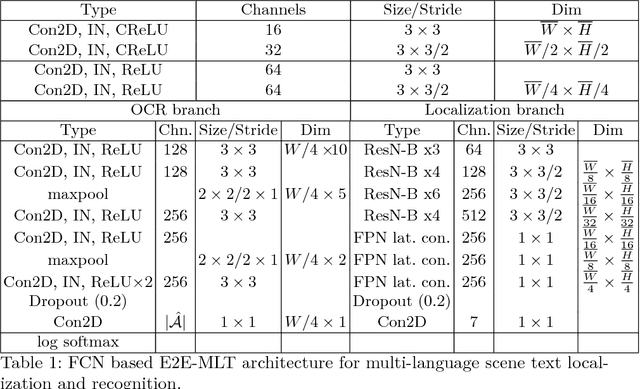

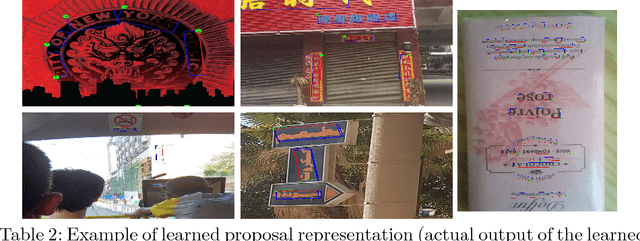

E2E-MLT - an Unconstrained End-to-End Method for Multi-Language Scene Text

Jan 30, 2018

Abstract: An end-to-end method for multi-language scene text localization, recognition and script identification is proposed. The approach is based on a set of convolutional neural nets. The method, called E2E-MLT, achieves state-of-the-art performance for both joint localization and script identification in natural images and in cropped word script identification. E2E-MLT is the first published multi-language OCR for scene text. The experiments show that obtaining accurate multi-language multi-script annotations is a challenging problem.

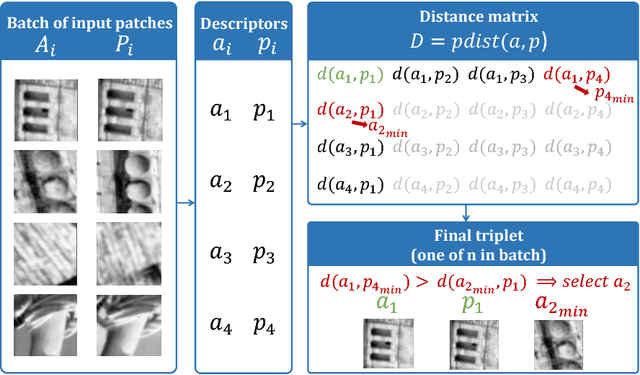

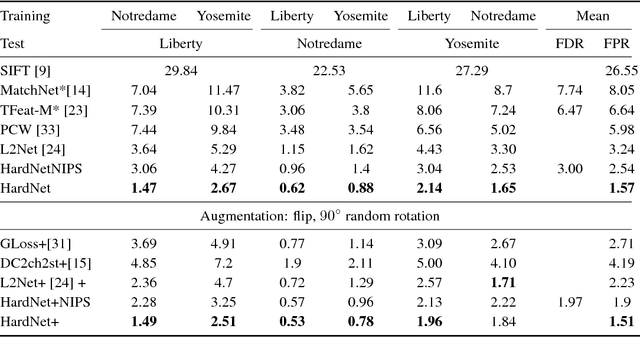

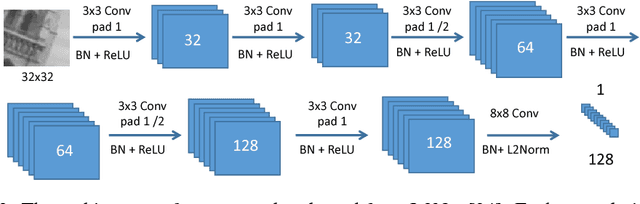

Working hard to know your neighbor's margins: Local descriptor learning loss

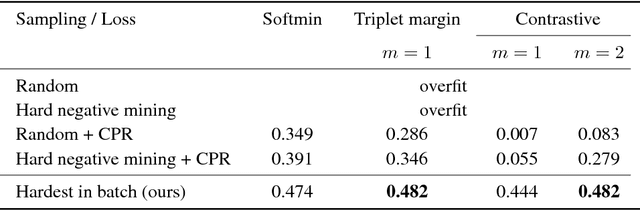

Jan 12, 2018

Abstract: We introduce a novel loss for learning local feature descriptors which is inspired by Lowe's matching criterion for SIFT. We show that the proposed loss, which maximizes the distance between the closest positive and closest negative patch in the batch, is better than complex regularization methods; it works well for both shallow and deep convolution network architectures. Applying the novel loss to the L2Net CNN architecture results in a compact descriptor -- it has the same dimensionality as SIFT (128) -- that shows state-of-the-art performance in wide baseline stereo, patch verification and instance retrieval benchmarks. It is fast: computing a descriptor takes about 1 millisecond on a low-end GPU.
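A loss of this kind can be sketched as a triplet margin loss in which each matching pair is contrasted with the closest non-matching descriptor in the batch. The sketch below is a plain-Python illustration under assumptions: the function names, the margin of 1.0, and the use of Euclidean distance on raw lists are illustrative choices, not details confirmed by the abstract.

```python
import math

def l2(a, b):
    """Euclidean distance between two descriptors given as lists."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def hardest_in_batch_loss(anchors, positives, margin=1.0):
    """Triplet margin loss with the hardest negative mined inside the batch.

    anchors[i] and positives[i] describe the same patch; every other
    descriptor in the batch is treated as a negative. Needs at least 2 pairs.
    """
    n = len(anchors)
    # d[i][j]: distance from anchor i to positive j; the diagonal holds matches.
    d = [[l2(anchors[i], positives[j]) for j in range(n)] for i in range(n)]
    total = 0.0
    for i in range(n):
        pos = d[i][i]
        # Closest non-matching positive to anchor i.
        neg_row = min(d[i][j] for j in range(n) if j != i)
        # Closest non-matching anchor to positive i.
        neg_col = min(d[j][i] for j in range(n) if j != i)
        total += max(0.0, margin + pos - min(neg_row, neg_col))
    return total / n

if __name__ == "__main__":
    well_separated = hardest_in_batch_loss([[0.0, 0.0], [10.0, 0.0]],
                                           [[0.0, 0.1], [10.0, 0.1]])
    too_close = hardest_in_batch_loss([[0.0, 0.0], [0.5, 0.0]],
                                      [[0.0, 0.0], [0.5, 0.0]])
    print(well_separated, too_close)
```

The loss is zero once every matching pair is closer than its hardest negative by at least the margin, so only the most confusable pairs in a batch contribute to training.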

Graph-Cut RANSAC

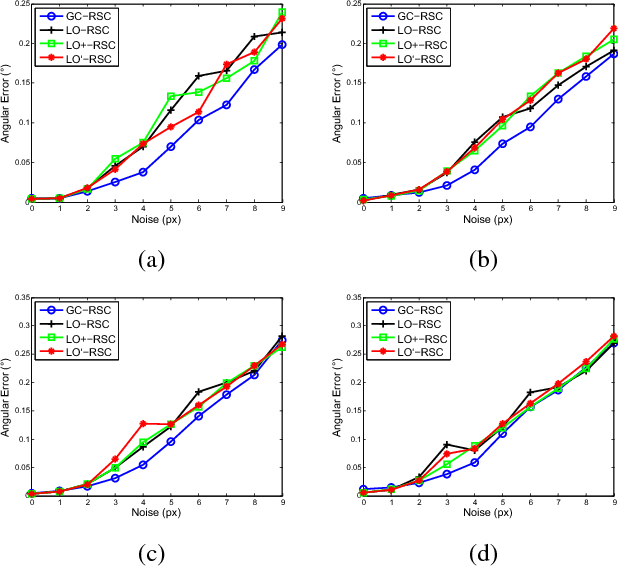

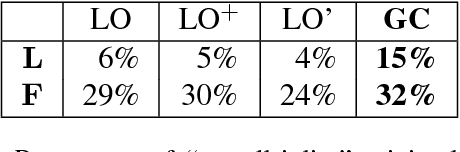

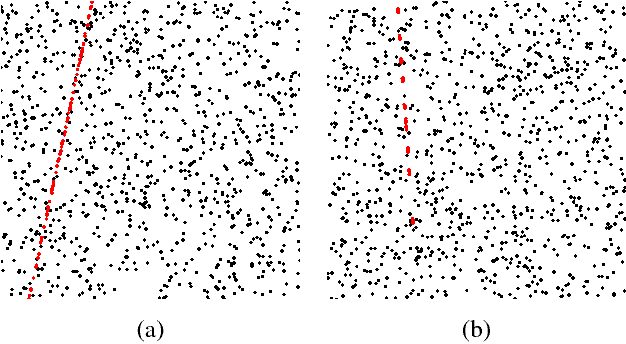

Nov 16, 2017

Abstract: A novel method for robust estimation, called Graph-Cut RANSAC, GC-RANSAC in short, is introduced. To separate inliers and outliers, it runs the graph-cut algorithm in the local optimization (LO) step which is applied when a so-far-the-best model is found. The proposed LO step is conceptually simple, easy to implement, globally optimal and efficient. GC-RANSAC is shown experimentally, both on synthesized tests and real image pairs, to be more geometrically accurate than state-of-the-art methods on a range of problems, e.g. line fitting, homography, affine transformation, fundamental and essential matrix estimation. It runs in real-time for many problems at a speed approximately equal to that of the less accurate alternatives (in milliseconds on a standard CPU).
