Jieyun Huang

Quantitative Analysis of Performance Drop in DeepSeek Model Quantization

May 05, 2025

Abstract:Recently, there has been high demand for deploying DeepSeek-R1 and V3 locally, possibly because the official service is often busy and some organizations have data privacy concerns. While single-machine deployment offers infrastructure simplicity, the models' 671B FP8 parameter configuration exceeds the practical memory limits of a standard 8-GPU machine. Quantization is a widely used technique that reduces model memory consumption. However, it is unclear how DeepSeek-R1 and V3 perform after quantization. This technical report presents the first quantitative evaluation of multi-bitwidth quantization across the complete DeepSeek model spectrum. Key findings reveal that 4-bit quantization incurs little performance degradation versus FP8 while enabling single-machine deployment on standard NVIDIA GPU devices. We further propose DQ3_K_M, a dynamic 3-bit quantization method that significantly outperforms the traditional Q3_K_M variant on various benchmarks and is comparable to the 4-bit quantization (Q4_K_M) approach in most tasks. Moreover, DQ3_K_M supports single-machine deployment configurations for both NVIDIA H100/A100 and Huawei 910B. Our implementation of DQ3_K_M is released at https://github.com/UnicomAI/DeepSeek-Eval, containing optimized 3-bit quantized variants of both DeepSeek-R1 and DeepSeek-V3.
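The memory argument in this abstract can be illustrated with rough arithmetic. The sketch below is not taken from the report: it counts weight storage only, treats each format as its nominal bit width (real mixed-precision formats such as Q4_K_M and Q3_K_M average somewhat more bits per weight), and assumes 80 GB per GPU. The helper name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GB (10^9 bytes).

    Ignores quantization scales, KV cache, and activations, so real
    deployments need more memory than this estimate.
    """
    return num_params * bits_per_weight / 8 / 1e9


NUM_PARAMS = 671e9    # parameter count stated in the abstract
GPU_MEMORY_GB = 80    # assumption: 80 GB per GPU
NUM_GPUS = 8          # "standard 8-GPU machine" from the abstract

budget_gb = GPU_MEMORY_GB * NUM_GPUS  # total memory across the machine

for bits in (8, 4, 3):
    need_gb = weight_memory_gb(NUM_PARAMS, bits)
    fits = need_gb < budget_gb
    print(f"{bits}-bit weights: {need_gb:.1f} GB, fits in {budget_gb} GB: {fits}")
```

Under these assumptions the 8-bit weights alone exceed the machine's total memory, while nominal 4-bit and 3-bit weights leave headroom for the KV cache and activations.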

DAST: Difficulty-Adaptive Slow-Thinking for Large Reasoning Models

Mar 06, 2025

Abstract:Recent advancements in slow-thinking reasoning models have shown exceptional performance in complex reasoning tasks. However, these models often exhibit overthinking, generating redundant reasoning steps for simple problems and thereby consuming excessive computational resources. While current mitigation strategies uniformly reduce reasoning tokens, they risk degrading performance on challenging tasks that require extended reasoning. This paper introduces Difficulty-Adaptive Slow-Thinking (DAST), a novel framework that enables models to autonomously adjust the length of Chain-of-Thought (CoT) based on problem difficulty. We first propose a Token Length Budget (TLB) metric to quantify difficulty, then leverage length-aware reward shaping and length preference optimization to implement DAST. DAST penalizes overlong responses for simple tasks while incentivizing sufficient reasoning for complex problems. Experiments on diverse datasets and model scales demonstrate that DAST effectively mitigates overthinking (reducing token usage by over 30% on average) while preserving reasoning accuracy on complex problems.

A Tensor-Based Sub-Mode Coordinate Algorithm for Stock Prediction

May 21, 2018

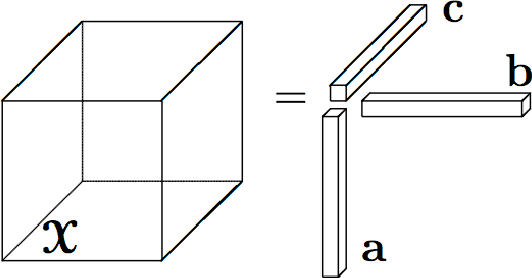

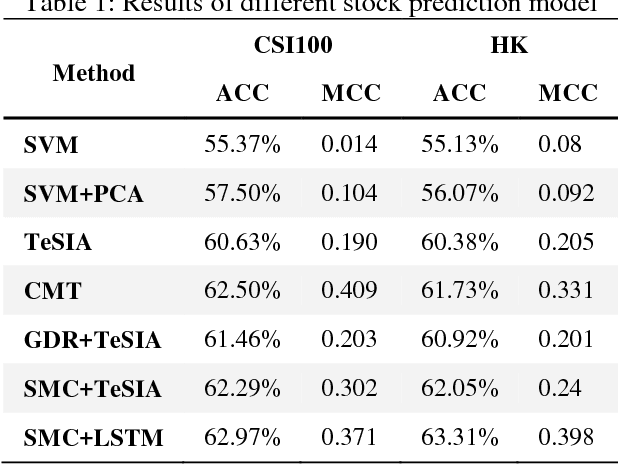

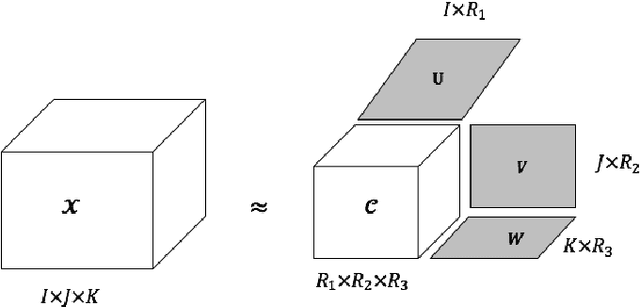

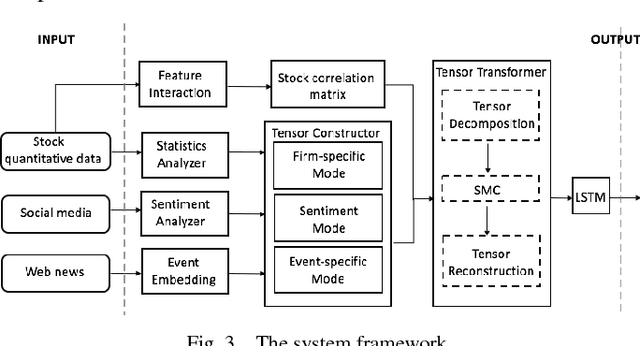

Abstract:Investment in the stock market is prone to being affected by the Internet. To improve prediction accuracy, we propose a multi-task stock prediction model that not only considers stock correlations but also supports multi-source data fusion. Our proposed model first uses a tensor to integrate the multi-source data, including financial Web news, investors' sentiments extracted from social networks, and quantitative data on stocks. In this way, the intrinsic relationships among different information sources can be captured, and the sources can complement one another to address the data sparsity problem. Second, we propose an improved sub-mode coordinate algorithm (SMC). SMC is based on stock similarity and aims to reduce the variance of the subspace in each dimension produced by the tensor decomposition. The algorithm improves the quality of the input features and thus the prediction accuracy. The paper then uses a Long Short-Term Memory (LSTM) neural network model to predict stock fluctuation trends. Finally, experiments are conducted on 78 A-share stocks in the CSI 100 and thirteen popular HK stocks in 2015 and 2016. The results demonstrate improved prediction accuracy and the effectiveness of the proposed model.
