Jiahao Tang

ArtiSG: Functional 3D Scene Graph Construction via Human-demonstrated Articulated Objects Manipulation

Dec 31, 2025

Abstract: 3D scene graphs have empowered robots with semantic understanding for navigation and planning, yet they often lack the functional information required for physical manipulation, particularly regarding articulated objects. Existing approaches for inferring articulation mechanisms from static observations are prone to visual ambiguity, while methods that estimate parameters from state changes typically rely on constrained settings such as fixed cameras and unobstructed views. Furthermore, fine-grained functional elements like small handles are frequently missed by general object detectors. To bridge this gap, we present ArtiSG, a framework that constructs functional 3D scene graphs by encoding human demonstrations into structured robotic memory. Our approach leverages a robust articulation data collection pipeline utilizing a portable setup to accurately estimate 6-DoF articulation trajectories and axes even under camera ego-motion. We integrate these kinematic priors into a hierarchical and open-vocabulary graph while utilizing interaction data to discover inconspicuous functional elements missed by visual perception. Extensive real-world experiments demonstrate that ArtiSG significantly outperforms baselines in functional element recall and articulation estimation precision. Moreover, we show that the constructed graph serves as a reliable functional memory that effectively guides robots to perform language-directed manipulation tasks in real-world environments containing diverse articulated objects.

MR-COGraphs: Communication-efficient Multi-Robot Open-vocabulary Mapping System via 3D Scene Graphs

Dec 24, 2024

Abstract: Collaborative perception in unknown environments is crucial for multi-robot systems. With the emergence of foundation models, robots can now not only perceive geometric information but also achieve open-vocabulary scene understanding. However, existing map representations that support open-vocabulary queries often involve large data volumes, which becomes a bottleneck for multi-robot transmission in communication-limited environments. To address this challenge, we develop a method to construct a graph-structured 3D representation called COGraph, where nodes represent objects with semantic features and edges capture their spatial relationships. Before transmission, a data-driven feature encoder is applied to compress the feature dimensions of the COGraph. Upon receiving COGraphs from other robots, the semantic features of each node are recovered using a decoder. We also propose a feature-based approach for place recognition and translation estimation, enabling the merging of local COGraphs into a unified global map. We validate our framework using simulation environments built on Isaac Sim and real-world datasets. The results demonstrate that, compared to transmitting semantic point clouds and 512-dimensional COGraphs, our framework can reduce the data volume by two orders of magnitude, without compromising mapping and query performance. For more details, please visit our website at https://github.com/efc-robot/MR-COGraphs.

Explore-Bench: Data Sets, Metrics and Evaluations for Frontier-based and Deep-reinforcement-learning-based Autonomous Exploration

Feb 24, 2022

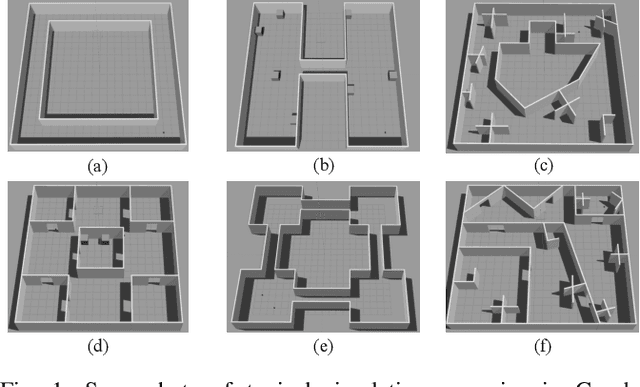

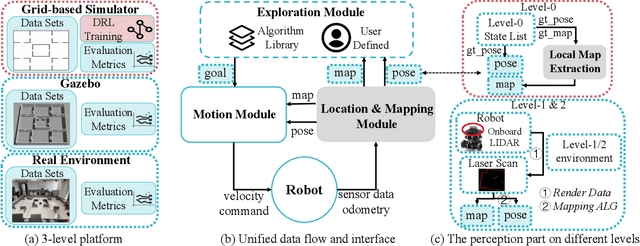

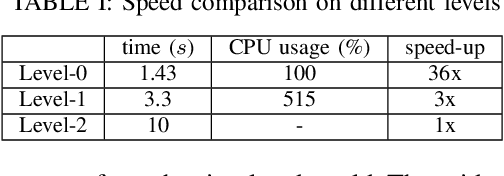

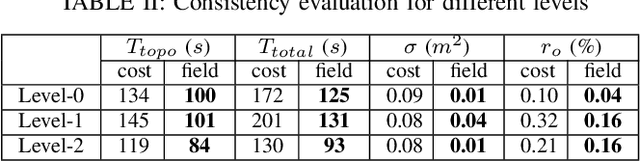

Abstract: Autonomous exploration and mapping of unknown terrains employing single or multiple robots is an essential task in mobile robotics and has therefore been widely investigated. Nevertheless, given the lack of unified data sets, metrics, and platforms to evaluate the exploration approaches, we develop an autonomous robot exploration benchmark entitled Explore-Bench. The benchmark involves various exploration scenarios and presents two types of quantitative metrics to evaluate exploration efficiency and multi-robot cooperation. Explore-Bench is extremely useful as, recently, deep reinforcement learning (DRL) has been widely used for robot exploration tasks and achieved promising results. However, training DRL-based approaches requires large data sets, and additionally, current benchmarks rely on realistic simulators with a slow simulation speed, which is not appropriate for training exploration strategies. Hence, to support efficient DRL training and comprehensive evaluation, the suggested Explore-Bench designs a 3-level platform with a unified data flow and $12 \times$ speed-up that includes a grid-based simulator for fast evaluation and efficient training, a realistic Gazebo simulator, and a remotely accessible robot testbed for high-accuracy tests in physical environments. The practicality of the proposed benchmark is highlighted with the application of one DRL-based and three frontier-based exploration approaches. Furthermore, we analyze the performance differences and provide some insights about the selection and design of exploration methods. Our benchmark is available at https://github.com/efc-robot/Explore-Bench.
