Jiacheng Hou

Learning a Shape-adaptive Assist-as-needed Rehabilitation Policy from Therapist-informed Input

Oct 06, 2025

Abstract:Therapist-in-the-loop robotic rehabilitation has shown great promise in enhancing rehabilitation outcomes by integrating the strengths of therapists and robotic systems. However, its broader adoption remains limited due to insufficiently safe interaction and limited adaptation capability. This article proposes a novel telerobotics-mediated framework that enables therapists to intuitively and safely deliver assist-as-needed (AAN) therapy based on two primary contributions. First, our framework encodes the therapist-informed corrective force into via-points in a latent space, allowing the therapist to provide only minimal assistance while encouraging the patient to maintain their own motion preferences. Second, a shape-adaptive AAN rehabilitation policy is learned to partially and progressively deform the reference trajectory for movement therapy based on encoded patient motion preferences and therapist-informed via-points. The effectiveness of the proposed shape-adaptive AAN strategy was validated on a telerobotic rehabilitation system using two representative tasks. The results demonstrate its practicality for remote AAN therapy and its superiority over two state-of-the-art methods in reducing corrective force and improving movement smoothness.

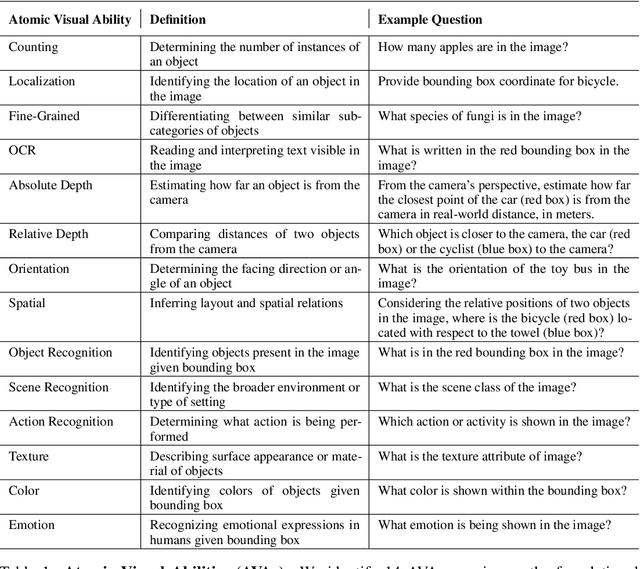

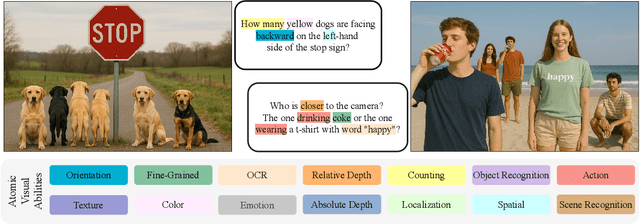

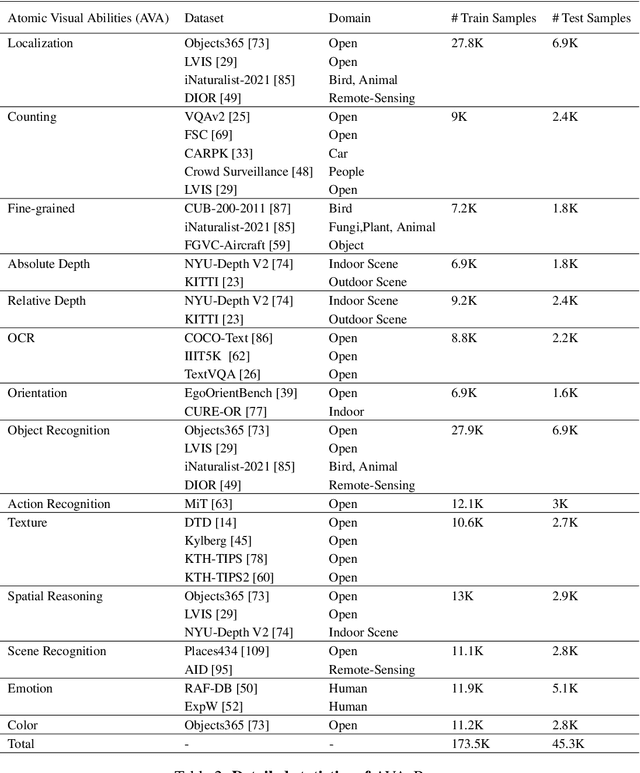

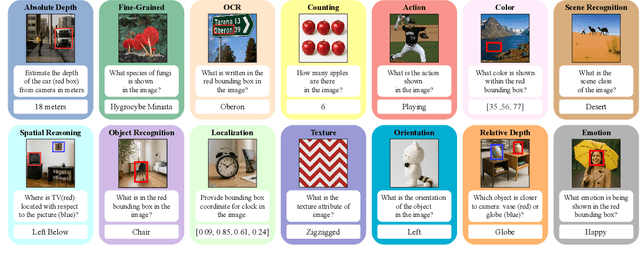

AVA-Bench: Atomic Visual Ability Benchmark for Vision Foundation Models

Jun 10, 2025

Abstract:The rise of vision foundation models (VFMs) calls for systematic evaluation. A common approach pairs VFMs with large language models (LLMs) as general-purpose heads, followed by evaluation on broad Visual Question Answering (VQA) benchmarks. However, this protocol has two key blind spots: (i) the instruction tuning data may not align with VQA test distributions, meaning a wrong prediction can stem from such data mismatch rather than a VFM's visual shortcomings; (ii) VQA benchmarks often require multiple visual abilities, making it hard to tell whether errors stem from lacking all required abilities or just a single critical one. To address these gaps, we introduce AVA-Bench, the first benchmark that explicitly disentangles 14 Atomic Visual Abilities (AVAs) -- foundational skills like localization, depth estimation, and spatial understanding that collectively support complex visual reasoning tasks. By decoupling AVAs and matching training and test distributions within each, AVA-Bench pinpoints exactly where a VFM excels or falters. Applying AVA-Bench to leading VFMs thus reveals distinctive "ability fingerprints," turning VFM selection from educated guesswork into principled engineering. Notably, we find that a 0.5B LLM yields VFM rankings similar to those of a 7B LLM while cutting GPU hours by 8x, enabling more efficient evaluation. By offering a comprehensive and transparent benchmark, we hope AVA-Bench lays the foundation for the next generation of VFMs.

BrainNetMLP: An Efficient and Effective Baseline for Functional Brain Network Classification

May 14, 2025

Abstract:Recent studies have made great progress in functional brain network classification by modeling the brain as a network of Regions of Interest (ROIs) and leveraging their connections to understand brain functionality and diagnose mental disorders. Various deep learning architectures, including Convolutional Neural Networks, Graph Neural Networks, and the recent Transformer, have been developed. However, despite the increasing complexity of these models, the performance gains have been less pronounced. This raises a question: Does increasing model complexity necessarily lead to higher classification accuracy? In this paper, we revisit the simplest deep learning architecture, the Multi-Layer Perceptron (MLP), and propose a pure MLP-based method, named BrainNetMLP, for functional brain network classification, which capitalizes on the advantages of MLP, including efficient computation and fewer parameters. Moreover, BrainNetMLP incorporates a dual-branch structure to jointly capture both spatial connectivity and spectral information, enabling precise spatiotemporal feature fusion. We evaluate our proposed BrainNetMLP on two popular public brain network classification datasets, the Human Connectome Project (HCP) and the Autism Brain Imaging Data Exchange (ABIDE). Experimental results demonstrate that pure MLP-based methods can achieve state-of-the-art performance, revealing the potential of MLP-based models as more efficient yet effective alternatives in functional brain network classification. The code will be available at https://github.com/JayceonHo/BrainNetMLP.

Signal Processing over Time-Varying Graphs: A Systematic Review

Nov 30, 2024

Abstract:As irregularly structured data representations, graphs have received a large amount of attention in recent years and have been widely applied to various real-world scenarios such as social, traffic, and energy settings. Compared to non-graph algorithms, numerous graph-based methodologies benefit from the strong power of graphs for representing high-dimensional and non-Euclidean data. In the field of Graph Signal Processing (GSP), analogues of classical signal processing concepts, such as shifting, convolution, filtering, and transformations, have been developed. However, many GSP techniques postulate that the graph is static in both signal and topology. This assumption hinders the effectiveness of GSP methodologies because it ignores the time-varying properties of numerous real-world systems. For example, in a traffic network, the signal on each node varies over time and contains underlying temporal correlations and patterns worthy of analysis. To tackle this challenge, a growing body of recent work investigates the processing of time-varying graph signals. These works cope with time-varying challenges from three main directions: 1) graph time-spectral filtering, 2) multi-variate time-series forecasting, and 3) spatiotemporal graph data mining by neural networks, where non-negligible progress has been achieved. Despite the success of signal processing and learning over time-varying graphs, there is no survey that compares and summarizes the current methodology for GSP and graph learning. To fill this gap, in this paper, we review the development and recent progress of signal processing and learning over time-varying graphs, and compare their advantages and disadvantages from both the methodological and experimental sides, to outline the challenges and potential directions for future research.
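
The graph shift and filtering operations mentioned in the abstract above can be illustrated with a minimal sketch. It is not taken from the paper: the 4-node path graph, the function names `graph_shift` and `graph_filter`, and the filter coefficients are all assumptions chosen for illustration. The sketch applies one static polynomial filter to each time slice independently, which is exactly the kind of static-graph treatment that ignores temporal correlation.

```python
import numpy as np

# Hypothetical 4-node undirected path graph 0-1-2-3 (illustrative only).
A = np.array([
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
], dtype=float)

def graph_shift(A, x):
    """One graph shift: each node receives the sum of its neighbours' values."""
    return A @ x

def graph_filter(A, x, coeffs):
    """Polynomial graph filter: y = sum_k coeffs[k] * A^k x."""
    y = np.zeros(len(x), dtype=float)
    shifted = np.asarray(x, dtype=float)
    for h in coeffs:
        y += h * shifted          # add the k-th shifted copy, weighted by h
        shifted = A @ shifted     # advance to the next power of A
    return y

# Time-varying graph signal: rows are nodes, columns are time steps (T = 3).
X = np.array([
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [0.0, 0.0, 0.0],
])

# Filter every time slice separately with the same static filter.
Y = np.column_stack(
    [graph_filter(A, X[:, t], [0.5, 0.5]) for t in range(X.shape[1])]
)
print(Y)
```

Because each column is filtered on its own, the output at time t carries no information from times t-1 or t+1; methods for time-varying graph signals aim to exploit that missing temporal structure.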

Raformer: Redundancy-Aware Transformer for Video Wire Inpainting

Apr 24, 2024

Abstract:Video Wire Inpainting (VWI) is a prominent application in video inpainting, aimed at flawlessly removing wires in films or TV series, offering significant time and labor savings compared to manual frame-by-frame removal. However, wire removal poses greater challenges because wires are longer and slimmer than the objects typically targeted in general video inpainting tasks, and they often intersect with people and background objects irregularly, which adds complexity to the inpainting process. Recognizing the limitations of existing video wire datasets, which are characterized by their small size, poor quality, and limited variety of scenes, we introduce a new VWI dataset with a novel mask generation strategy, namely Wire Removal Video Dataset 2 (WRV2) and Pseudo Wire-Shaped (PWS) Masks. The WRV2 dataset comprises over 4,000 videos with an average length of 80 frames, designed to facilitate the development and efficacy of inpainting models. Building upon this, our research proposes the Redundancy-Aware Transformer (Raformer) method that addresses the unique challenges of wire removal in video inpainting. Unlike conventional approaches that indiscriminately process all frame patches, Raformer employs a novel strategy to selectively bypass redundant parts, such as static background segments devoid of valuable information for inpainting. At the core of Raformer is the Redundancy-Aware Attention (RAA) module, which isolates and accentuates essential content through a coarse-grained, window-based attention mechanism. This is complemented by a Soft Feature Alignment (SFA) module, which refines these features and achieves end-to-end feature alignment. Extensive experiments on both the traditional video inpainting datasets and our proposed WRV2 dataset demonstrate that Raformer outperforms other state-of-the-art methods.
