Huiyun Liu

Optical Computation-in-Communication enables low-latency, high-fidelity perception in telesurgery

Oct 15, 2025

Abstract: Artificial intelligence (AI) holds significant promise for enhancing intraoperative perception and decision-making in telesurgery, where physical separation impairs sensory feedback and control. Despite advances in medical AI and surgical robotics, conventional electronic AI architectures remain fundamentally constrained by the compounded latency from serial processing of inference and communication. This limitation is especially critical in latency-sensitive procedures such as endovascular interventions, where delays over 200 ms can compromise real-time AI reliability and patient safety. Here, we introduce an Optical Computation-in-Communication (OCiC) framework that reduces end-to-end latency significantly by performing AI inference concurrently with optical communication. OCiC integrates Optical Remote Computing Units (ORCUs) directly into the optical communication pathway, with each ORCU experimentally achieving up to 69 tera-operations per second per channel through spectrally efficient two-dimensional photonic convolution. The system maintains ultrahigh inference fidelity within 0.1% of CPU/GPU baselines on classification and coronary angiography segmentation, while intrinsically mitigating cumulative error propagation, a longstanding barrier to deep optical network scalability. We validated the robustness of OCiC through outdoor dark fibre deployments, confirming consistent and stable performance across varying environmental conditions. When scaled globally, OCiC transforms long-haul fibre infrastructure into a distributed photonic AI fabric with exascale potential, enabling reliable, low-latency telesurgery across distances up to 10,000 km and opening a new optical frontier for distributed medical intelligence.
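
To make the latency argument concrete, the following is a minimal back-of-the-envelope sketch, not the authors' model. It assumes a fibre group index of 1.468 (typical for standard single-mode fibre) and a hypothetical 30 ms electronic inference time, and it idealises "concurrent" processing as simply the longer of the two stages. All function names are illustrative.

```python
# Minimal latency sketch. Assumptions (not from the abstract): a fibre group
# index of 1.468 and a hypothetical 30 ms electronic inference time.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
FIBRE_GROUP_INDEX = 1.468  # typical for standard single-mode fibre (assumed)


def fibre_delay_ms(distance_km: float, group_index: float = FIBRE_GROUP_INDEX) -> float:
    """One-way propagation delay through optical fibre, in milliseconds."""
    velocity_m_per_s = SPEED_OF_LIGHT_M_PER_S / group_index
    return distance_km * 1_000.0 / velocity_m_per_s * 1_000.0


def serial_latency_ms(comm_ms: float, infer_ms: float) -> float:
    """Conventional pipeline: transmit first, then run inference; delays add."""
    return comm_ms + infer_ms


def concurrent_latency_ms(comm_ms: float, infer_ms: float) -> float:
    """Idealised overlap: inference proceeds while the signal is in transit,
    so only the longer of the two stages is visible end to end."""
    return max(comm_ms, infer_ms)


if __name__ == "__main__":
    comm = fibre_delay_ms(10_000)
    infer = 30.0  # hypothetical inference time, for illustration only
    print(f"one-way fibre delay over 10,000 km: {comm:.1f} ms")
    print(f"round trip: {2 * comm:.1f} ms")
    print(f"serial (one way): {serial_latency_ms(comm, infer):.1f} ms")
    print(f"concurrent (one way, idealised): {concurrent_latency_ms(comm, infer):.1f} ms")
```

Under these assumptions, pure propagation over 10,000 km is roughly 49 ms one way (about 98 ms round trip). In the serial model, every millisecond of inference adds on top of that, while the idealised concurrent model hides inference inside the transit time.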

Improving Emotional Expression and Cohesion in Image-Based Playlist Description and Music Topics: A Continuous Parameterization Approach

Oct 12, 2023

Abstract: Text generation in image-based platforms, particularly for music-related content, requires precise control over text styles and the incorporation of emotional expression. However, existing approaches often struggle to control the proportion of external factors in generated text and rely on discrete inputs, lacking continuous control conditions for the desired text generation. This study proposes Continuous Parameterization for Controlled Text Generation (CPCTG) to overcome these limitations. Our approach leverages a Language Model (LM) as a style learner, integrating Semantic Cohesion (SC) and Emotional Expression Proportion (EEP) considerations. By enhancing the reward method and manipulating the CPCTG level, our experiments on playlist description and music topic generation tasks demonstrate significant improvements in ROUGE scores, indicating enhanced relevance and coherence in the generated text.
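
For readers unfamiliar with the ROUGE metric cited above, the following is a simplified ROUGE-1 F1 sketch: unigram overlap between a generated text and a reference, using lowercase whitespace tokenisation with no stemming. It is an illustration of the metric's idea, not the official ROUGE package and not necessarily the exact variant used in the study; the example strings are hypothetical.

```python
from collections import Counter


def rouge1_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-1 F1: clipped unigram overlap between two texts.

    Tokenisation is lowercase whitespace splitting (an assumption; official
    implementations add stemming and punctuation handling)."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count per token (clipped matches).
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Hypothetical playlist descriptions, for illustration only.
    generated = "calm piano songs for rainy nights"
    reference = "soft piano songs for a rainy evening"
    print(f"ROUGE-1 F1: {rouge1_f1(generated, reference):.3f}")
```

Here the two descriptions share four unigrams ("piano", "songs", "for", "rainy"), so precision is 4/6, recall is 4/7, and F1 is 8/13.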

Reciprocal phase transition-enabled electro-optic modulation

Mar 28, 2022

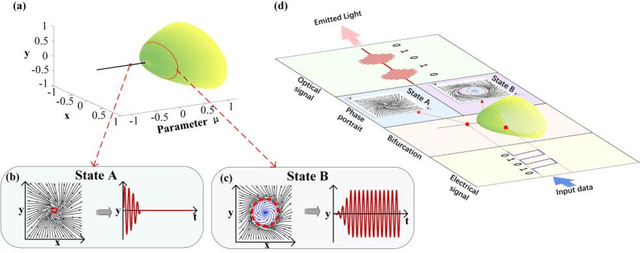

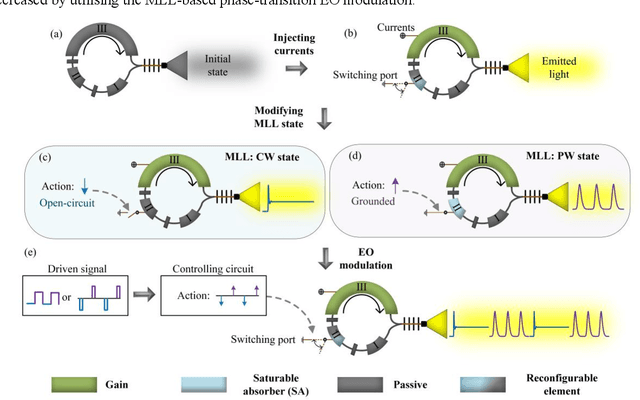

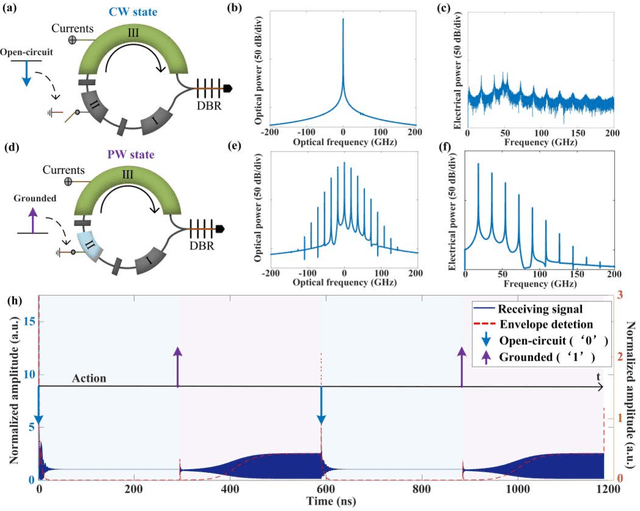

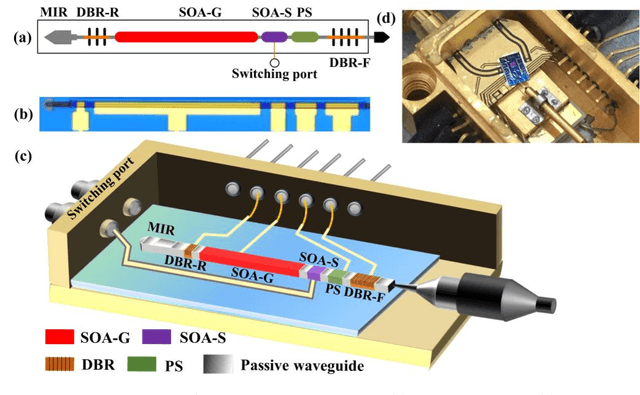

Abstract: Electro-optic (EO) modulation is a well-known and essential topic in the field of communications and sensing. Ultrahigh modulation efficiency is in unprecedented demand in the current era of green, data-intensive technologies. However, dramatically increasing the modulation efficiency is difficult because of the monotonic mapping between the electrical signal and the modulated optical signal. Here, we reveal a new mechanism, termed phase-transition EO modulation, that arises from the reciprocal transition between two distinct phase planes created by a bifurcation. As a prototype, we implement a monolithically integrated mode-locked laser (MLL). A 24.8-GHz radio-frequency signal is generated and modulated, achieving a modulation energy efficiency of 3.06 fJ/bit, an improvement of about four orders of magnitude, and a contrast ratio exceeding 50 dB. MLL-based phase-transition EO modulation thus combines ultrahigh modulation efficiency with an ultrahigh contrast ratio, as demonstrated experimentally in radio-over-fibre and underwater acoustic-sensing systems. This phase-transition EO modulation opens a new avenue for green communication and ubiquitous connections.
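
The figures of merit above can be unpacked with simple unit arithmetic. The sketch below assumes the 50 dB contrast ratio is a power ratio (10·log10 convention) and uses the standard definition of energy per bit as consumed power divided by bit rate. The 1 mW / 10 Gb/s example is hypothetical, and the "implied baseline" is only a rough order-of-magnitude reading of "about four orders of magnitude", not a reported value.

```python
def db_to_power_ratio(db: float) -> float:
    """Convert decibels to a linear power ratio (assumes the 10*log10 convention)."""
    return 10.0 ** (db / 10.0)


def energy_per_bit_fj(power_w: float, bit_rate_bps: float) -> float:
    """Energy per bit in femtojoules: consumed power divided by bit rate."""
    return power_w / bit_rate_bps * 1e15


def implied_baseline_fj(improved_fj: float, orders_of_magnitude: float) -> float:
    """Rough energy per bit before an improvement of the given number of decades."""
    return improved_fj * 10.0 ** orders_of_magnitude


if __name__ == "__main__":
    print(f"50 dB contrast as a power ratio: {db_to_power_ratio(50):.0f}:1")
    # Hypothetical modulator drawing 1 mW at 10 Gb/s, for illustration only.
    print(f"1 mW at 10 Gb/s: {energy_per_bit_fj(1e-3, 10e9):.0f} fJ/bit")
    # Order-of-magnitude reading of "3.06 fJ/bit, about four orders better".
    print(f"implied baseline: {implied_baseline_fj(3.06, 4) / 1e3:.1f} pJ/bit")
```

Read this way, a contrast ratio above 50 dB means the "on" and "off" power levels differ by more than a factor of 100,000, and a four-decade improvement down to 3.06 fJ/bit corresponds to a starting point in the tens of picojoules per bit.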
