Hongtao Xu

Skrull: Towards Efficient Long Context Fine-tuning through Dynamic Data Scheduling

May 26, 2025

Abstract: Long-context supervised fine-tuning (Long-SFT) plays a vital role in enhancing the performance of large language models (LLMs) on long-context tasks. To smoothly adapt LLMs to long-context scenarios, this process typically entails training on mixed datasets containing both long and short sequences. However, this heterogeneous sequence length distribution poses significant challenges for existing training systems, as they fail to simultaneously achieve high training efficiency for both long and short sequences, resulting in sub-optimal end-to-end system performance in Long-SFT. In this paper, we present a novel perspective on data scheduling to address the challenges posed by the heterogeneous data distributions in Long-SFT. We propose Skrull, a dynamic data scheduler specifically designed for efficient Long-SFT. Through dynamic data scheduling, Skrull balances the computation requirements of long and short sequences, improving overall training efficiency. Furthermore, we formulate the scheduling process as a joint optimization problem and thoroughly analyze the trade-offs involved. Based on this analysis, Skrull employs a lightweight scheduling algorithm to achieve near-zero-cost online scheduling in Long-SFT. Finally, we implement Skrull on top of DeepSpeed, a state-of-the-art distributed training system for LLMs. Experimental results demonstrate that Skrull outperforms DeepSpeed by 3.76x on average (up to 7.54x) in real-world Long-SFT scenarios.

MC-UNet: Multi-module Concatenation based on U-shape Network for Retinal Blood Vessels Segmentation

Apr 07, 2022

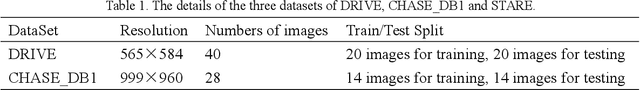

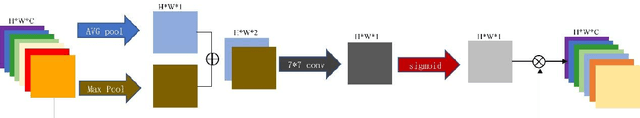

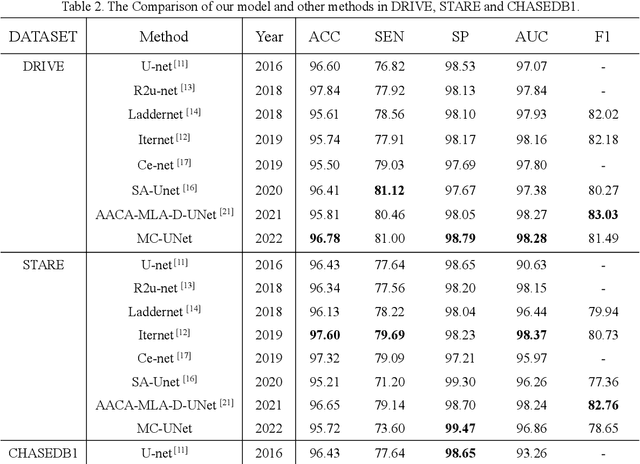

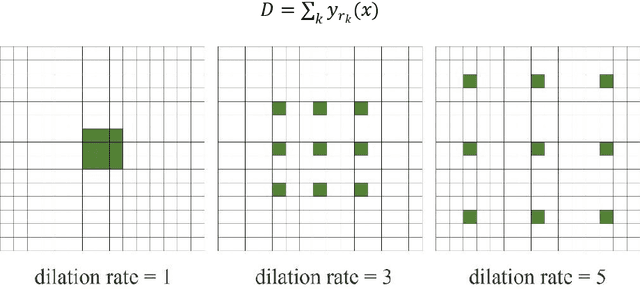

Abstract: Accurate segmentation of retinal blood vessels is an important step in the clinical diagnosis of ophthalmic diseases. Many deep learning frameworks have been proposed for retinal blood vessel segmentation. However, the complex vascular structure and uncertain pathological features still make blood vessel segmentation very challenging. This paper proposes a novel U-shaped network, named Multi-module Concatenation, which is based on atrous convolution and multi-kernel pooling, for retinal vessel segmentation. The proposed network retains three layers of the essential structure of U-Net, in which atrous convolution combined with multi-kernel pooling blocks is designed to obtain more contextual information. The spatial attention module is concatenated with the dense atrous convolution module and the multi-kernel pooling module to form a multi-module concatenation. Different dilation rates are cascaded in the atrous convolutions to acquire a larger receptive field. Comparative experiments are conducted on three public retinal datasets: DRIVE, STARE and CHASE_DB1. The results show that the proposed method is effective, especially for microvessels. The code will be released at https://github.com/Rebeccala/MC-UNet
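
As a rough illustration of why cascading atrous convolutions with different dilation rates enlarges the receptive field, the sketch below applies the standard formula for stride-1 convolutions stacked in sequence: each layer adds (kernel size - 1) x dilation pixels. The function name `cascaded_receptive_field` and the dilation rates 1, 3, 5 are assumptions chosen for illustration; the abstract does not state which rates MC-UNet uses.

```python
def cascaded_receptive_field(kernel_size, dilation_rates):
    """Receptive field along one axis of stride-1 convolutions applied in sequence.

    Each layer with dilation d widens the field by (kernel_size - 1) * d pixels.
    """
    rf = 1  # a single pixel before any convolution
    for d in dilation_rates:
        rf += (kernel_size - 1) * d
    return rf


# Three ordinary 3x3 convolutions (dilation 1 everywhere).
plain = cascaded_receptive_field(3, [1, 1, 1])

# Three 3x3 atrous convolutions with hypothetical rates 1, 3, 5.
dilated = cascaded_receptive_field(3, [1, 3, 5])

print(plain, dilated)
```

With the same number of layers and parameters, the cascaded dilated stack covers a noticeably wider window than the plain one, which is the effect the abstract attributes to selecting different dilation rates.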
