Word-Level Explanations for Analyzing Bias in Text-to-Image Models

Jun 03, 2023 Alexander Lin, Lucas Monteiro Paes, Sree Harsha Tanneru, Suraj Srinivas, Himabindu Lakkaraju

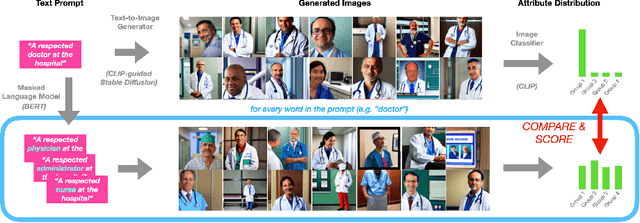

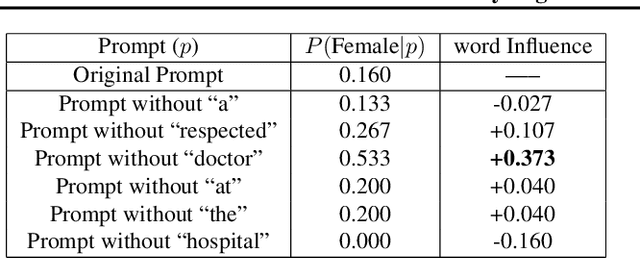

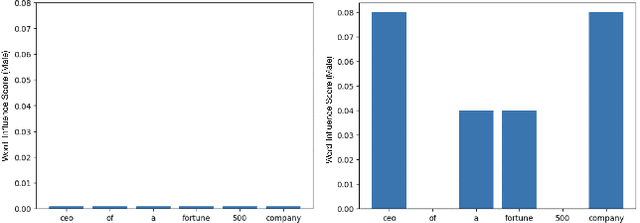

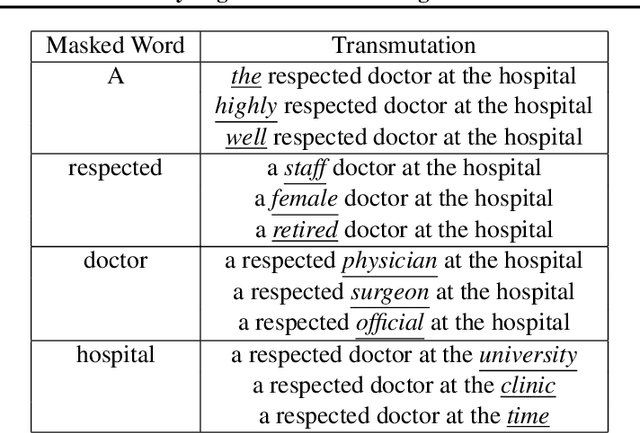

Text-to-image models take a sentence (i.e., prompt) and generate images associated with this input prompt. These models have created award-winning art, videos, and even synthetic datasets. However, text-to-image (T2I) models can generate images that underrepresent minorities based on race and sex. This paper investigates which word in the input prompt is responsible for bias in generated images. We introduce a method for computing scores for each word in the prompt; these scores represent its influence on biases in the model's output. Our method follows the principle of *explaining by removing*, leveraging masked language models to calculate the influence scores. We perform experiments on Stable Diffusion to demonstrate that our method identifies the replication of societal stereotypes in generated images.
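The explaining-by-removing idea can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the `[MASK]` placeholder, and the toy `toy_bias_score` are assumptions made for illustration. In the paper's setting the bias measure would come from images generated by the T2I model, and a masked language model would propose the replacement word rather than a fixed placeholder.

```python
from typing import Callable, List, Tuple


def word_influence(
    prompt: str,
    bias_score: Callable[[str], float],
    mask_token: str = "[MASK]",
) -> List[Tuple[str, float]]:
    """Score each word by how much the bias measure drops when it is removed."""
    words = prompt.split()
    base = bias_score(prompt)
    scores = []
    for i, word in enumerate(words):
        # Ablate word i by swapping in a placeholder (assumption: the real
        # method would fill this slot using a masked language model).
        ablated = " ".join(words[:i] + [mask_token] + words[i + 1:])
        scores.append((word, base - bias_score(ablated)))
    return scores


def toy_bias_score(prompt: str) -> float:
    """Hypothetical stand-in for a bias measure computed on generated images."""
    return 0.8 if "doctor" in prompt.split() else 0.5


if __name__ == "__main__":
    for word, score in word_influence("a photo of a doctor", toy_bias_score):
        print(f"{word:>8s}  {score:+.2f}")
```

With the toy measure, only the word "doctor" receives a non-zero score, because removing it is the only ablation that changes the measured bias.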

Post Hoc Explanations of Language Models Can Improve Language Models

May 19, 2023 Satyapriya Krishna, Jiaqi Ma, Dylan Slack, Asma Ghandeharioun, Sameer Singh, Himabindu Lakkaraju

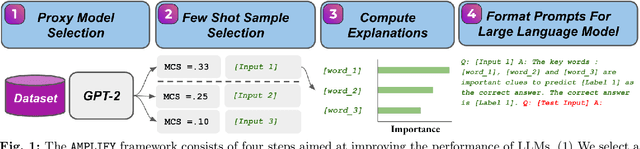

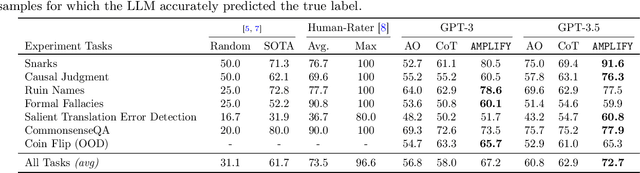

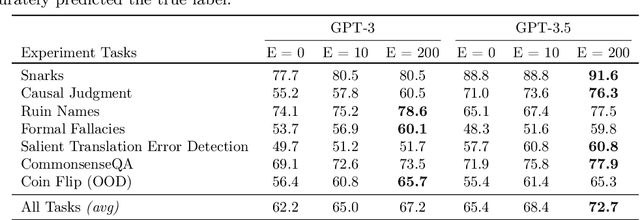

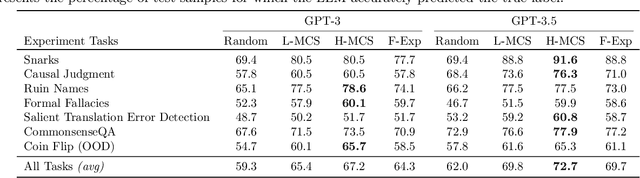

Large Language Models (LLMs) have demonstrated remarkable capabilities in performing complex tasks. Moreover, recent research has shown that incorporating human-annotated rationales (e.g., Chain-of-Thought prompting) during in-context learning can significantly enhance the performance of these models, particularly on tasks that require reasoning capabilities. However, incorporating such rationales poses challenges in terms of scalability as this requires a high degree of human involvement. In this work, we present a novel framework, Amplifying Model Performance by Leveraging In-Context Learning with Post Hoc Explanations (AMPLIFY), which addresses the aforementioned challenges by automating the process of rationale generation. To this end, we leverage post hoc explanation methods which output attribution scores (explanations) capturing the influence of each of the input features on model predictions. More specifically, we construct automated natural language rationales that embed insights from post hoc explanations to provide corrective signals to LLMs. Extensive experimentation with real-world datasets demonstrates that our framework, AMPLIFY, leads to prediction accuracy improvements of about 10-25% over a wide range of tasks, including those where prior approaches that rely on human-annotated rationales, such as Chain-of-Thought prompting, fall short. Our work makes one of the first attempts at highlighting the potential of post hoc explanations as valuable tools for enhancing the effectiveness of LLMs. Furthermore, we conduct additional empirical analyses and ablation studies to demonstrate the impact of each of the components of AMPLIFY, which, in turn, lead to critical insights for refining in-context learning.
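As a rough illustration of turning attribution scores into a natural language rationale, the Python sketch below picks the highest-scoring input words and places them in a sentence that could accompany an in-context example. The function names, the choice of `k`, and the wording of the template are all hypothetical; the abstract does not specify them.

```python
from typing import List, Sequence


def top_k_words(tokens: Sequence[str], scores: Sequence[float], k: int = 3) -> List[str]:
    """Return the k tokens with the largest attribution scores."""
    ranked = sorted(zip(tokens, scores), key=lambda pair: pair[1], reverse=True)
    return [token for token, _ in ranked[:k]]


def build_rationale(
    tokens: Sequence[str], scores: Sequence[float], label: str, k: int = 3
) -> str:
    """Embed the top-k attributed words in a rationale sentence.

    The sentence template is an assumption made for this sketch.
    """
    quoted = ", ".join(f"'{word}'" for word in top_k_words(tokens, scores, k))
    return f"The key words {quoted} are important clues for predicting '{label}'."


if __name__ == "__main__":
    tokens = ["the", "movie", "was", "wonderful"]
    scores = [0.01, 0.20, 0.02, 0.90]  # e.g. from a post hoc attribution method
    print(build_rationale(tokens, scores, label="positive", k=2))
```

The resulting sentence would be appended to a few-shot example in the prompt, in place of a human-written rationale.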

Towards Bridging the Gaps between the Right to Explanation and the Right to be Forgotten

Feb 10, 2023 Satyapriya Krishna, Jiaqi Ma, Himabindu Lakkaraju

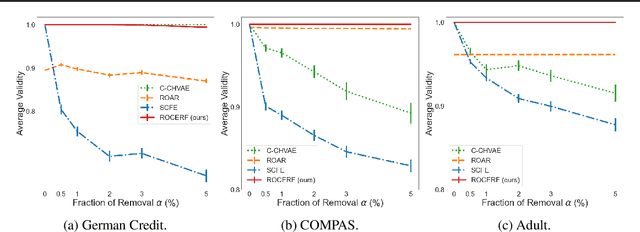

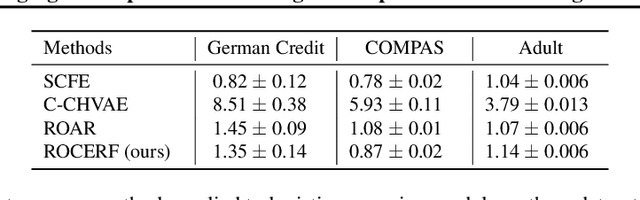

The Right to Explanation and the Right to be Forgotten are two important principles outlined to regulate algorithmic decision making and data usage in real-world applications. While the right to explanation allows individuals to request an actionable explanation for an algorithmic decision, the right to be forgotten grants them the right to ask for their data to be deleted from all the databases and models of an organization. Intuitively, enforcing the right to be forgotten may trigger model updates which in turn invalidate previously provided explanations, thus violating the right to explanation. In this work, we investigate the technical implications arising due to the interference between the two aforementioned regulatory principles, and propose the first algorithmic framework to resolve the tension between them. To this end, we formulate a novel optimization problem to generate explanations that are robust to model updates due to the removal of training data instances by data deletion requests. We then derive an efficient approximation algorithm to handle the combinatorial complexity of this optimization problem. We theoretically demonstrate that our method generates explanations that are provably robust to worst-case data deletion requests with bounded costs in case of linear models and certain classes of non-linear models. Extensive experimentation with real-world datasets demonstrates the efficacy of the proposed framework.

On the Privacy Risks of Algorithmic Recourse

Nov 10, 2022 Martin Pawelczyk, Himabindu Lakkaraju, Seth Neel

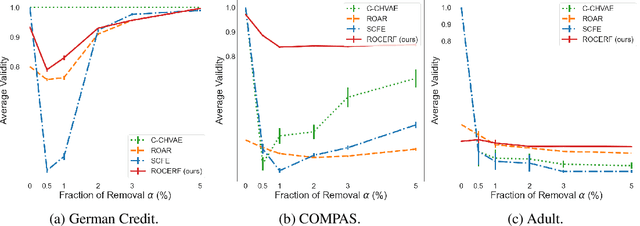

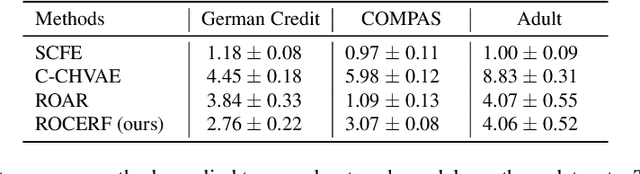

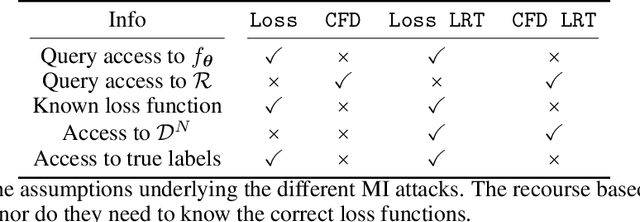

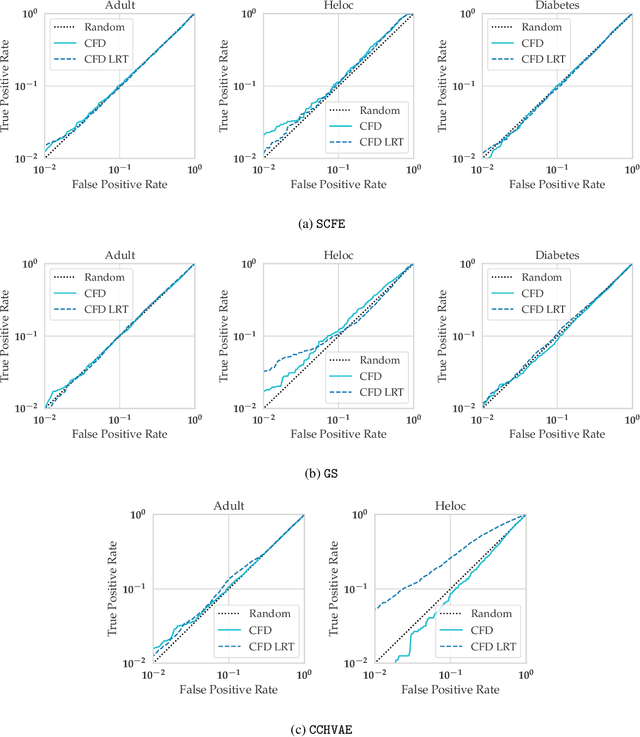

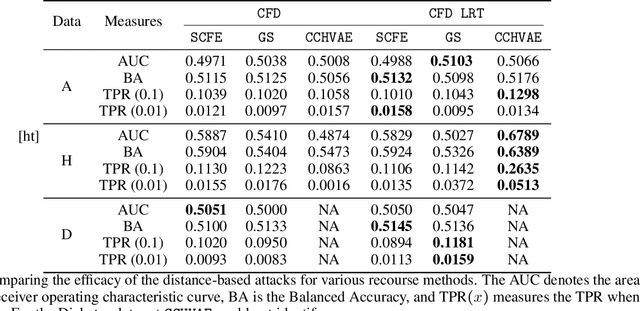

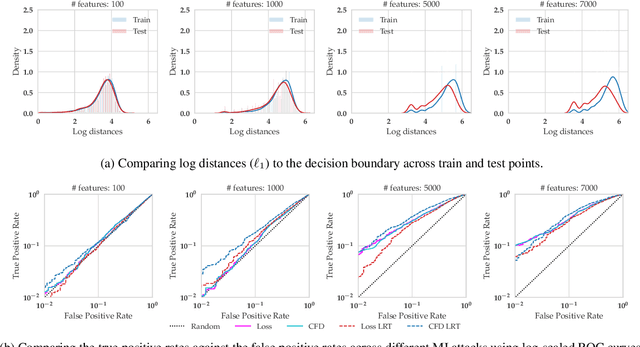

As predictive models are increasingly being employed to make consequential decisions, there is a growing emphasis on developing techniques that can provide algorithmic recourse to affected individuals. While such recourses can be immensely beneficial to affected individuals, potential adversaries could also exploit these recourses to compromise privacy. In this work, we make the first attempt at investigating if and how an adversary can leverage recourses to infer private information about the underlying model's training data. To this end, we propose a series of novel membership inference attacks which leverage algorithmic recourse. More specifically, we extend the prior literature on membership inference attacks to the recourse setting by leveraging the distances between data instances and their corresponding counterfactuals output by state-of-the-art recourse methods. Extensive experimentation with real world and synthetic datasets demonstrates significant privacy leakage through recourses. Our work establishes unintended privacy leakage as an important risk in the widespread adoption of recourse methods.
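The distance-based attack described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names are hypothetical, the Euclidean norm is one possible distance, and both the threshold value and the direction of the comparison (larger distance suggests a training member) are assumptions that an attacker would need to calibrate.

```python
import math
from typing import Sequence


def counterfactual_distance(x: Sequence[float], x_cf: Sequence[float]) -> float:
    """Euclidean distance between an instance and its counterfactual."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, x_cf)))


def infer_membership(
    x: Sequence[float], x_cf: Sequence[float], threshold: float
) -> bool:
    """Guess 'training member' when the counterfactual is far from the instance.

    Assumption: training points tend to sit farther from the decision
    boundary, so their recourses are more distant.
    """
    return counterfactual_distance(x, x_cf) > threshold


if __name__ == "__main__":
    # x_cf would be returned by a recourse method queried by the adversary.
    print(infer_membership([0.0, 0.0], [3.0, 4.0], threshold=2.0))
    print(infer_membership([0.0, 0.0], [0.5, 0.0], threshold=2.0))
```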

Towards Robust Off-Policy Evaluation via Human Inputs

Sep 18, 2022 Harvineet Singh, Shalmali Joshi, Finale Doshi-Velez, Himabindu Lakkaraju

Off-policy Evaluation (OPE) methods are crucial tools for evaluating policies in high-stakes domains such as healthcare, where direct deployment is often infeasible, unethical, or expensive. When deployment environments are expected to undergo changes (that is, dataset shifts), it is important for OPE methods to perform robust evaluation of the policies amidst such changes. Existing approaches consider robustness against a large class of shifts that can arbitrarily change any observable property of the environment. This often results in highly pessimistic estimates of the utilities, thereby invalidating policies that might have been useful in deployment. In this work, we address the aforementioned problem by investigating how domain knowledge can help provide more realistic estimates of the utilities of policies. We leverage human inputs on which aspects of the environments may plausibly change, and adapt the OPE methods to only consider shifts on these aspects. Specifically, we propose a novel framework, Robust OPE (ROPE), which considers shifts on a subset of covariates in the data based on user inputs, and estimates worst-case utility under these shifts. We then develop computationally efficient algorithms for OPE that are robust to the aforementioned shifts for contextual bandits and Markov decision processes. We also theoretically analyze the sample complexity of these algorithms. Extensive experimentation with synthetic and real world datasets from the healthcare domain demonstrates that our approach not only captures realistic dataset shifts accurately, but also results in less pessimistic policy evaluations.

Evaluating Explainability for Graph Neural Networks

Aug 19, 2022 Chirag Agarwal, Owen Queen, Himabindu Lakkaraju, Marinka Zitnik

As post hoc explanations are increasingly used to understand the behavior of graph neural networks (GNNs), it becomes crucial to evaluate the quality and reliability of GNN explanations. However, assessing the quality of GNN explanations is challenging as existing graph datasets have no or unreliable ground-truth explanations for a given task. Here, we introduce a synthetic graph data generator, ShapeGGen, which can generate a variety of benchmark datasets (e.g., varying graph sizes, degree distributions, homophilic vs. heterophilic graphs) accompanied by ground-truth explanations. Further, the flexibility to generate diverse synthetic datasets and corresponding ground-truth explanations allows us to mimic the data generated by various real-world applications. We include ShapeGGen and several real-world graph datasets into an open-source graph explainability library, GraphXAI. In addition to synthetic and real-world graph datasets with ground-truth explanations, GraphXAI provides data loaders, data processing functions, visualizers, GNN model implementations, and evaluation metrics to benchmark the performance of GNN explainability methods.

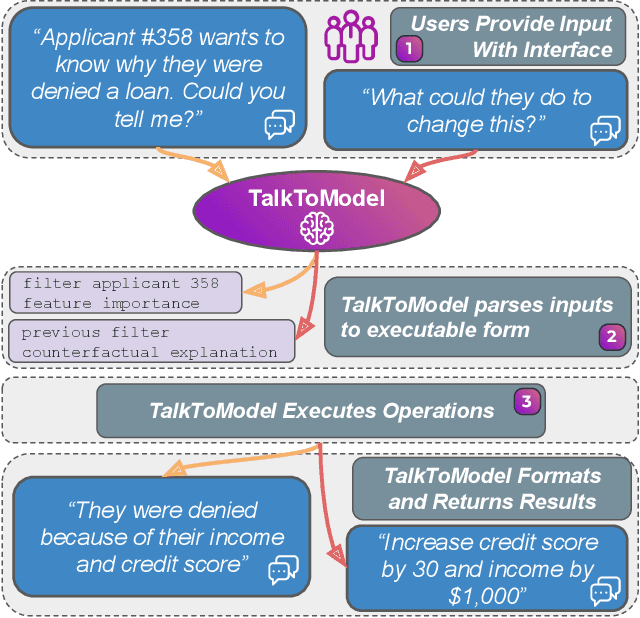

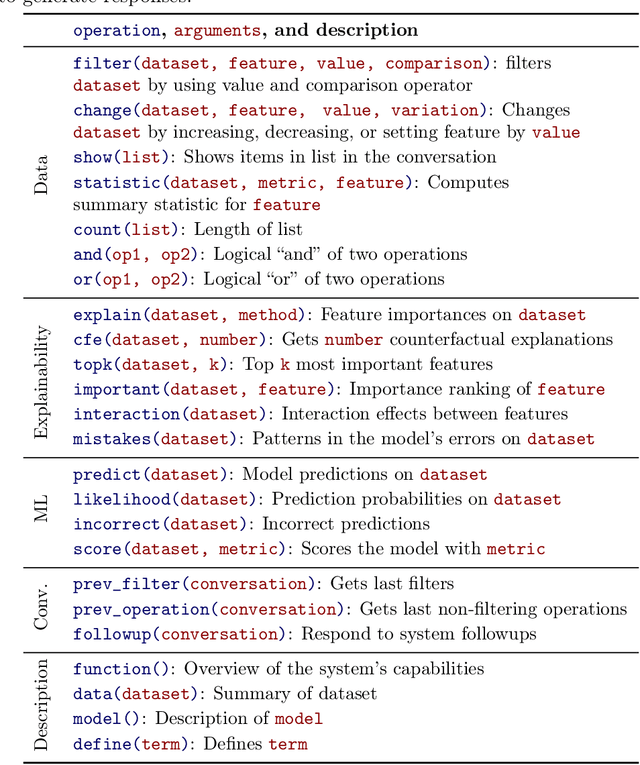

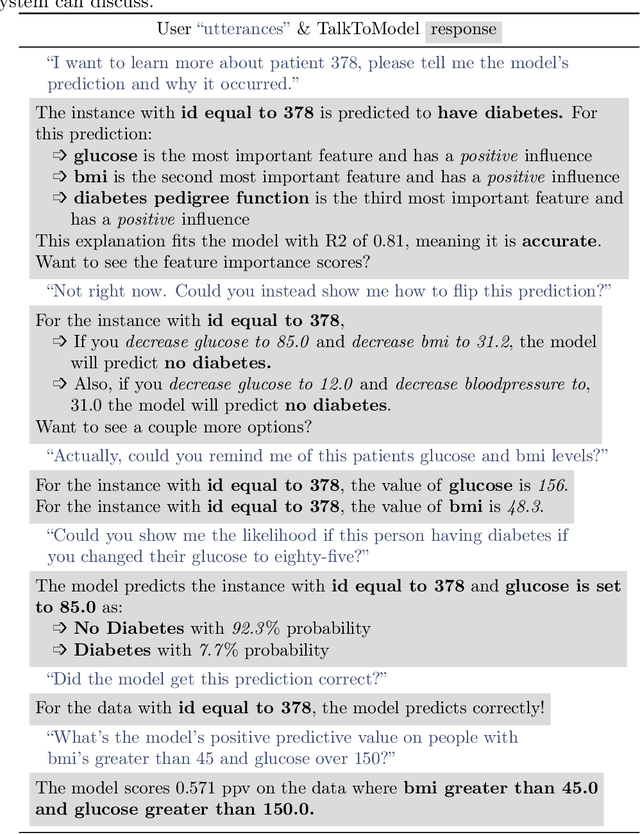

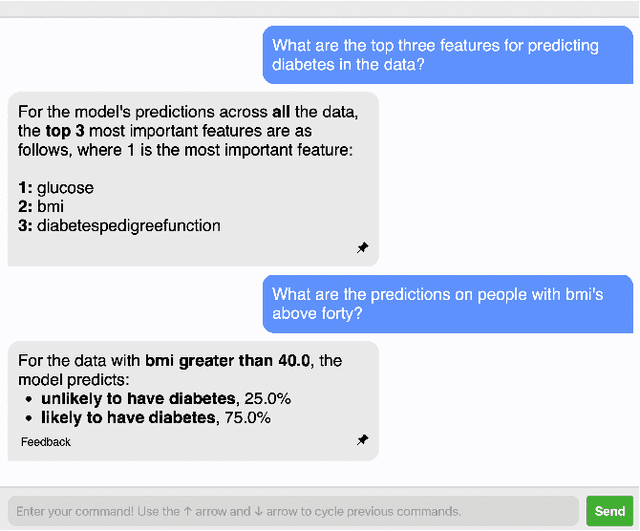

TalkToModel: Understanding Machine Learning Models With Open Ended Dialogues

Jul 08, 2022 Dylan Slack, Satyapriya Krishna, Himabindu Lakkaraju, Sameer Singh

Machine Learning (ML) models are increasingly used to make critical decisions in real-world applications, yet they have also become more complex, making them harder to understand. To this end, several techniques to explain model predictions have been proposed. However, practitioners struggle to leverage explanations because they often do not know which explanations to use or how to interpret the results, and may have insufficient data science experience to obtain explanations. In addition, most current works focus on generating one-shot explanations and do not allow users to follow up and ask fine-grained questions about the explanations, which can be frustrating. In this work, we address these challenges by introducing TalkToModel: an open-ended dialogue system for understanding machine learning models. Specifically, TalkToModel comprises three key components: 1) a natural language interface for engaging in dialogues, making understanding ML models highly accessible, 2) a dialogue engine that adapts to any tabular model and dataset, interprets natural language, maps it to appropriate operations (e.g., feature importance explanations, counterfactual explanations, showing model errors), and generates text responses, and 3) an execution component that runs the operations and ensures explanations are accurate. We carried out quantitative and human subject evaluations of TalkToModel. We found the system understands user questions on novel datasets and models with high accuracy, demonstrating the system's capacity to generalize to new situations. In human evaluations, 73% of healthcare workers (e.g., doctors and nurses) agreed they would use TalkToModel over baseline point-and-click systems, and 84.6% of ML graduate students agreed TalkToModel was easier to use.

OpenXAI: Towards a Transparent Evaluation of Model Explanations

Jun 22, 2022 Chirag Agarwal, Eshika Saxena, Satyapriya Krishna, Martin Pawelczyk, Nari Johnson, Isha Puri, Marinka Zitnik, Himabindu Lakkaraju

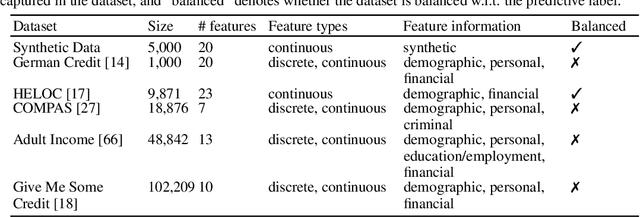

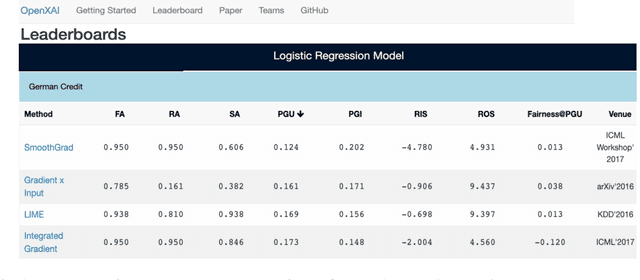

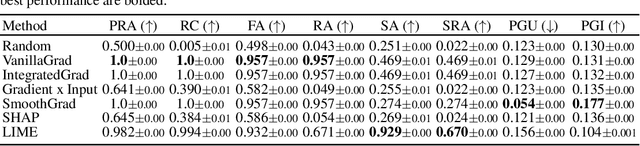

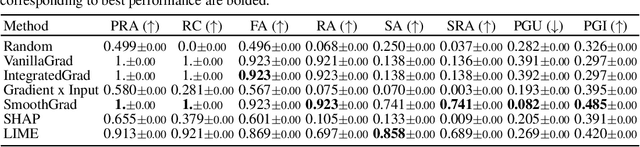

While several types of post hoc explanation methods (e.g., feature attribution methods) have been proposed in recent literature, there is little to no work on systematically benchmarking these methods in an efficient and transparent manner. Here, we introduce OpenXAI, a comprehensive and extensible open source framework for evaluating and benchmarking post hoc explanation methods. OpenXAI comprises the following key components: (i) a flexible synthetic data generator and a collection of diverse real-world datasets, pre-trained models, and state-of-the-art feature attribution methods, (ii) open-source implementations of twenty-two quantitative metrics for evaluating faithfulness, stability (robustness), and fairness of explanation methods, and (iii) the first ever public XAI leaderboards to benchmark explanations. OpenXAI is easily extensible, as users can readily evaluate custom explanation methods and incorporate them into our leaderboards. Overall, OpenXAI provides an automated end-to-end pipeline that not only simplifies and standardizes the evaluation of post hoc explanation methods, but also promotes transparency and reproducibility in benchmarking these methods. OpenXAI datasets and data loaders, implementations of state-of-the-art explanation methods and evaluation metrics, as well as leaderboards are publicly available at https://open-xai.github.io/.

Flatten the Curve: Efficiently Training Low-Curvature Neural Networks

Jun 14, 2022 Suraj Srinivas, Kyle Matoba, Himabindu Lakkaraju, Francois Fleuret

The highly non-linear nature of deep neural networks causes them to be susceptible to adversarial examples and to have unstable gradients, which hinders interpretability. However, existing methods to solve these issues, such as adversarial training, are expensive and often sacrifice predictive accuracy. In this work, we consider curvature, a mathematical quantity that encodes the degree of non-linearity. Using this, we demonstrate low-curvature neural networks (LCNNs) that obtain drastically lower curvature than standard models while exhibiting similar predictive performance, which leads to improved robustness and stable gradients, with only a marginally increased training time. To achieve this, we minimize a data-independent upper bound on the curvature of a neural network, which decomposes overall curvature in terms of curvatures and slopes of its constituent layers. To efficiently minimize this bound, we introduce two novel architectural components: first, a non-linearity called centered-softplus that is a stable variant of the softplus non-linearity, and second, a Lipschitz-constrained batch normalization layer. Our experiments show that LCNNs have lower curvature, more stable gradients and increased off-the-shelf adversarial robustness when compared to their standard high-curvature counterparts, all without affecting predictive performance. Our approach is easy to use and can be readily incorporated into existing neural network models.
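The abstract does not state the formula for centered-softplus, so the Python sketch below rests on an assumption: that "centered" means shifting the standard softplus with sharpness parameter `beta` so that it passes through the origin. The function names and the `beta` parameter are illustrative, and the paper's exact definition may differ.

```python
import math


def softplus(x: float, beta: float = 1.0) -> float:
    """Numerically stable softplus: (1 / beta) * log(1 + exp(beta * x))."""
    z = beta * x
    # max(z, 0) + log1p(exp(-|z|)) avoids overflow for large |z|.
    return (max(z, 0.0) + math.log1p(math.exp(-abs(z)))) / beta


def centered_softplus(x: float, beta: float = 1.0) -> float:
    """Softplus shifted by log(2) / beta so that the output at x = 0 is 0.

    Assumption: this shift is what 'centered' refers to.
    """
    return softplus(x, beta) - math.log(2.0) / beta


if __name__ == "__main__":
    for x in (-2.0, 0.0, 2.0):
        print(x, centered_softplus(x, beta=1.0))
```

Under this reading, the second derivative of the activation is bounded by a multiple of `beta`, so a smaller `beta` gives a flatter, lower-curvature non-linearity.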
