Real-time Neural-based Input Method

Oct 19, 2018 Jiali Yao, Raphael Shu, Xinjian Li, Katsutoshi Ohtsuki, Hideki Nakayama

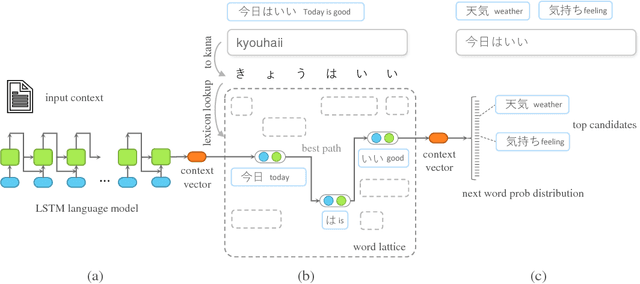

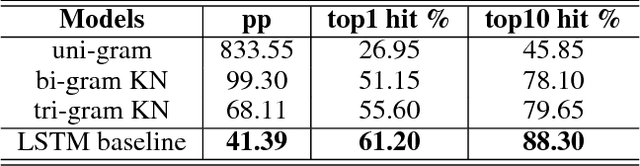

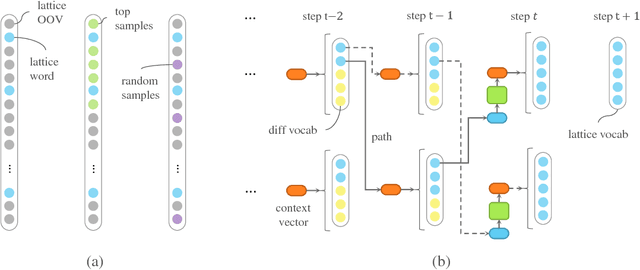

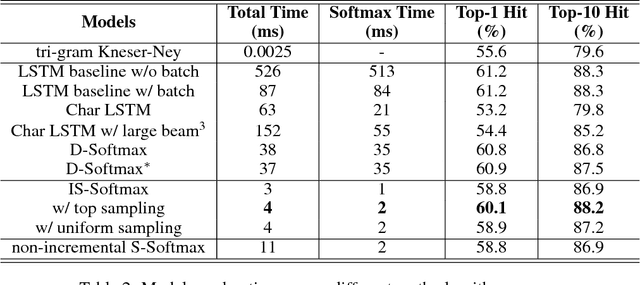

The input method is an essential service on every mobile and desktop device that provides text suggestions. It converts sequential keyboard inputs to characters in the target language, which is indispensable for Japanese and Chinese users. Due to the tight resource constraints and limited network bandwidth of the target devices, applying neural models to input methods is not well explored. In this work, we apply an LSTM-based language model to an input method and evaluate its performance on both prediction and conversion tasks with the Japanese BCCWJ corpus. We identify the bottleneck as the slow softmax computation during conversion. To solve this issue, we propose an incremental softmax approximation approach, which computes the softmax over a selected subset of the vocabulary and fixes the stale probabilities when the vocabulary is updated in later steps. We refer to this method as incremental selective softmax. The results show a speedup of two orders of magnitude for the softmax computation when converting Japanese input sequences with a large vocabulary, reaching real-time speed on a commodity CPU. We also exploit the potential of model compression to achieve a 92% reduction in model size without losing accuracy.
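As a rough illustration of the "selected subset vocabulary" idea only, the sketch below normalizes scores over a chosen subset of word ids instead of the full vocabulary. The function name, the dictionary-based logits, and the toy values are assumptions made for this example; the incremental correction of stale probabilities is not shown, since the abstract does not specify how it is done.

```python
import math


def selective_softmax(logits, subset):
    """Softmax restricted to the word ids in `subset` (hypothetical helper).

    `logits` maps word id -> unnormalized score. Words outside `subset`
    are ignored, so the cost scales with len(subset), not the vocabulary.
    """
    # Subtract the largest selected score for numerical stability.
    peak = max(logits[w] for w in subset)
    exps = {w: math.exp(logits[w] - peak) for w in subset}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


# Toy scores for a four-word vocabulary; only two words are selected.
logits = {"a": 2.0, "b": 1.0, "c": 0.5, "d": -1.0}
probs = selective_softmax(logits, {"a", "b"})
```

The returned probabilities sum to one over the subset only, which is why they would need correcting if the subset later grows.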

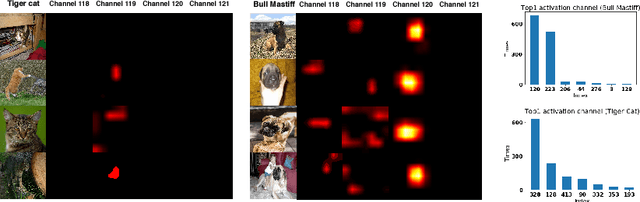

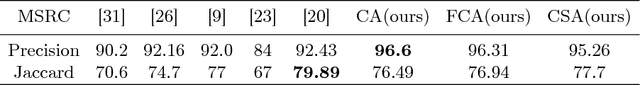

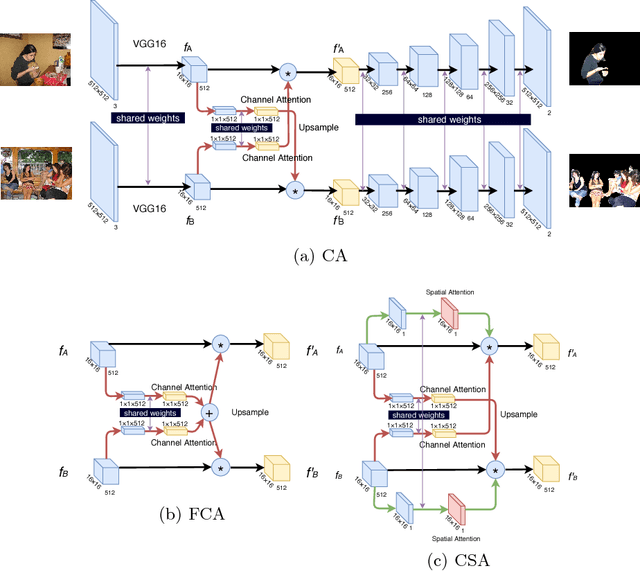

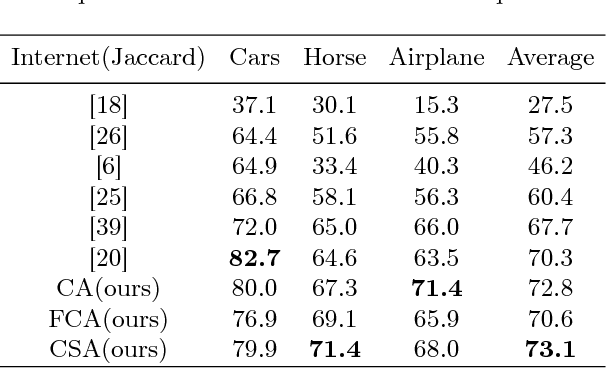

Semantic Aware Attention Based Deep Object Co-segmentation

Oct 16, 2018 Hong Chen, Yifei Huang, Hideki Nakayama

Object co-segmentation is the task of segmenting the same objects from multiple images. In this paper, we propose Attention Based Object Co-Segmentation, a model that utilizes a novel attention mechanism in the bottleneck layer of a deep neural network to select semantically related features. Furthermore, we take advantage of the attention learner and propose an algorithm to segment multiple input images in linear time complexity. Experimental results demonstrate that our model achieves state-of-the-art performance on multiple datasets, with a significant reduction in computational time.

Discrete Structural Planning for Neural Machine Translation

Aug 14, 2018 Raphael Shu, Hideki Nakayama

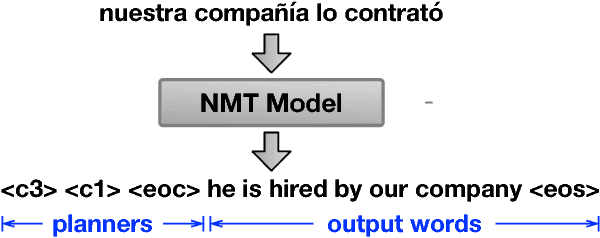

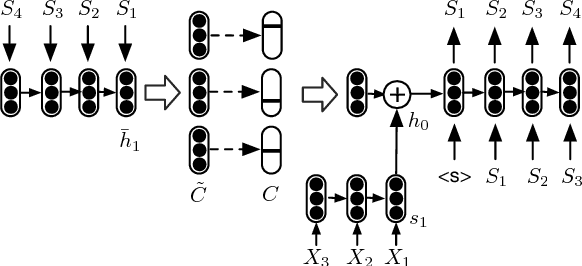

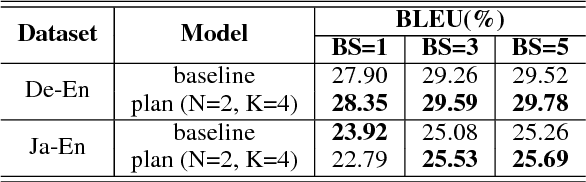

Structural planning is important for producing long sentences, yet it is missing from current language generation models. In this work, we add a planning phase to neural machine translation to control the coarse structure of output sentences. The model first generates some planner codes, then predicts the actual output words conditioned on them. The codes are learned to capture the coarse structure of the target sentence. To obtain the codes, we design an end-to-end neural network with a discretization bottleneck, which predicts the simplified part-of-speech tags of target sentences. Experiments show that translation performance is generally improved by planning ahead. We also find that translations with different structures can be obtained by manipulating the planner codes.

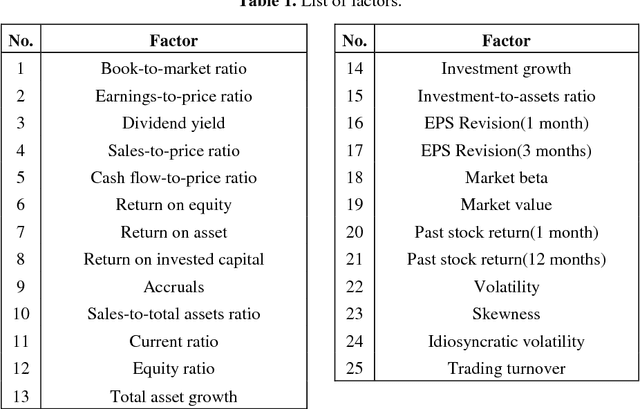

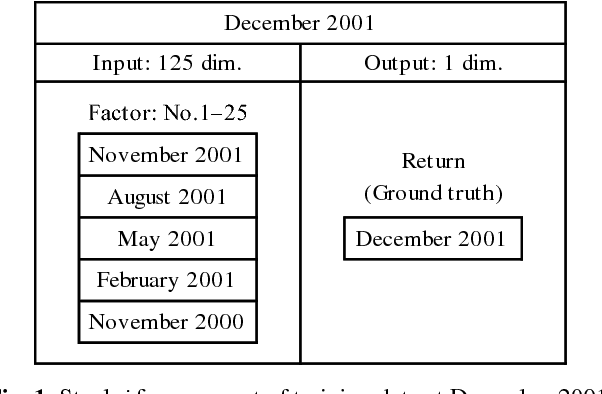

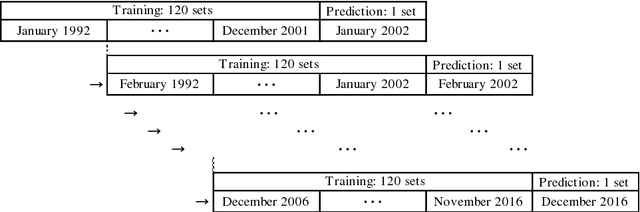

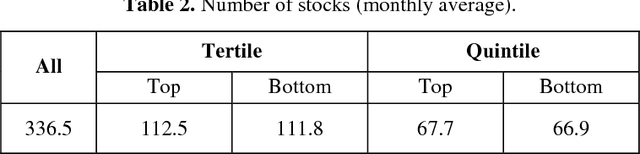

Deep Learning for Forecasting Stock Returns in the Cross-Section

Jun 13, 2018 Masaya Abe, Hideki Nakayama

Many studies have been undertaken by using machine learning techniques, including neural networks, to predict stock returns. Recently, a method known as deep learning, which achieves high performance mainly in image recognition and speech recognition, has attracted attention in the machine learning field. This paper implements deep learning to predict one-month-ahead stock returns in the cross-section in the Japanese stock market and investigates the performance of the method. Our results show that deep neural networks generally outperform shallow neural networks, and the best networks also outperform representative machine learning models. These results indicate that deep learning shows promise as a skillful machine learning method to predict stock returns in the cross-section.

Parameter Reference Loss for Unsupervised Domain Adaptation

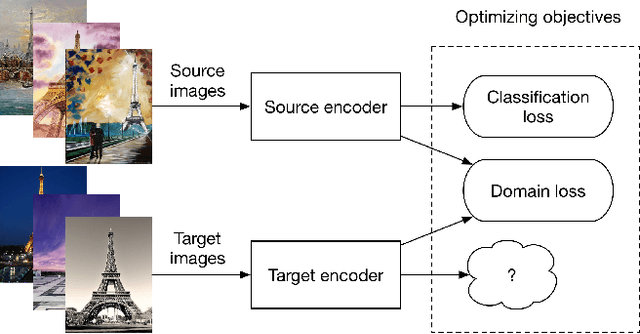

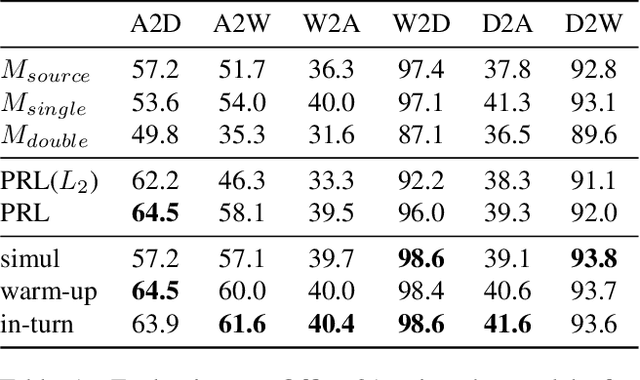

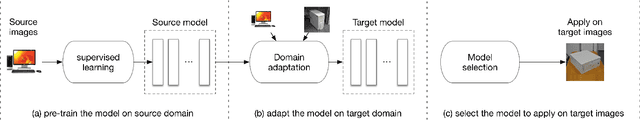

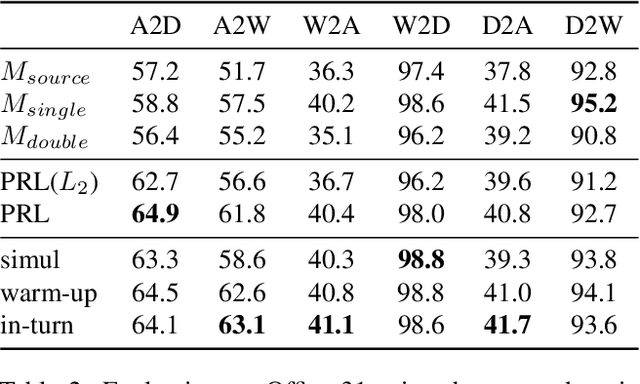

Dec 05, 2017 Jiren Jin, Richard G. Calland, Takeru Miyato, Brian K. Vogel, Hideki Nakayama

The success of deep learning in computer vision is mainly attributed to an abundance of data. However, collecting large-scale data is not always possible, especially for the supervised labels. Unsupervised domain adaptation (UDA) aims to utilize labeled data from a source domain to learn a model that generalizes to a target domain of unlabeled data. A large amount of existing work uses Siamese network-based models, where two streams of neural networks process the source and the target domain data respectively. Nevertheless, most of these approaches focus on minimizing the domain discrepancy, overlooking the importance of preserving the discriminative ability for target domain features. Another important problem in UDA research is how to evaluate the methods properly. Common evaluation procedures require target domain labels for hyper-parameter tuning and model selection, contradicting the definition of the UDA task. Hence we propose a more reasonable evaluation principle that avoids this contradiction by simply adopting the latest snapshot of a model for evaluation. This adds an extra requirement for UDA methods besides the main performance criteria: the stability during training. We design a novel method that connects the target domain stream to the source domain stream with a Parameter Reference Loss (PRL) to solve these problems simultaneously. Experiments on various datasets show that the proposed PRL not only improves the performance on the target domain, but also stabilizes the training procedure. As a result, PRL based models do not need the contradictory model selection, and thus are more suitable for practical applications.

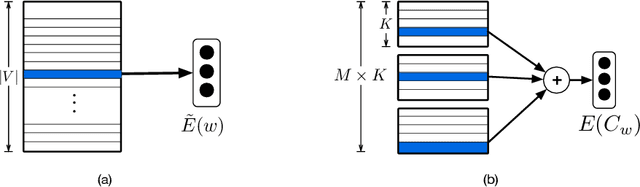

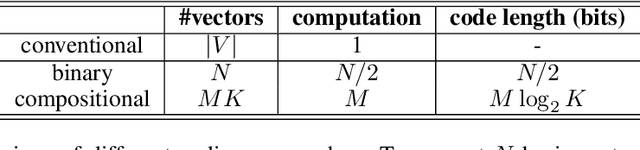

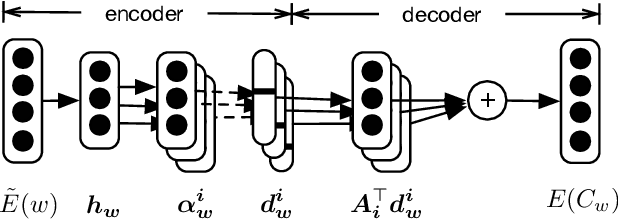

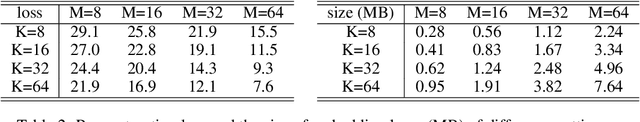

Compressing Word Embeddings via Deep Compositional Code Learning

Nov 17, 2017 Raphael Shu, Hideki Nakayama

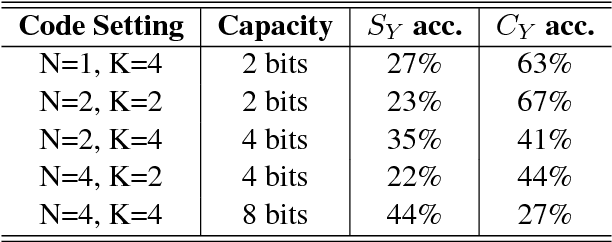

Natural language processing (NLP) models often require a massive number of parameters for word embeddings, resulting in a large storage or memory footprint. Deploying neural NLP models to mobile devices requires compressing the word embeddings without any significant sacrifice in performance. For this purpose, we propose to construct the embeddings with a few basis vectors. For each word, the composition of basis vectors is determined by a hash code. To maximize the compression rate, we adopt the multi-codebook quantization approach instead of a binary coding scheme. Each code is composed of multiple discrete numbers, such as (3, 2, 1, 8), where the value of each component is limited to a fixed range. We propose to directly learn the discrete codes in an end-to-end neural network by applying the Gumbel-softmax trick. Experiments show that the compression rate reaches 98% in a sentiment analysis task and 94% to 99% in machine translation tasks without performance loss. In both tasks, the proposed method can improve the model performance by slightly lowering the compression rate. Compared to other approaches such as character-level segmentation, the proposed method is language-independent and does not require modifications to the network architecture.
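To make the code-to-embedding step concrete, the following minimal sketch rebuilds a word vector from a discrete code by picking one basis vector per codebook. Combining the selected vectors by summation, the zero-based indices, the function name, and the toy codebooks are all assumptions for illustration; learning the codes with the Gumbel-softmax trick is not shown.

```python
def compose_embedding(code, codebooks):
    """Build an embedding by summing one basis vector per codebook.

    `code` holds one index per codebook (zero-based here, an assumption);
    `codebooks[k][i]` is the i-th basis vector of codebook k.
    """
    dim = len(codebooks[0][0])
    vec = [0.0] * dim
    for book, index in zip(codebooks, code):
        for i, value in enumerate(book[index]):
            vec[i] += value
    return vec


# Toy setup: 2 codebooks, 3 basis vectors each, dimension 2.
codebooks = [
    [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
    [[0.5, 0.5], [2.0, 0.0], [0.0, 2.0]],
]
# Code (2, 1) selects [1.0, 1.0] and [2.0, 0.0], giving [3.0, 1.0].
embedding = compose_embedding((2, 1), codebooks)
```

Under this scheme only the small codebooks and one short code per word need to be stored, rather than a full dense vector per word.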

Zero-resource Machine Translation by Multimodal Encoder-decoder Network with Multimedia Pivot

Jul 23, 2017 Hideki Nakayama, Noriki Nishida

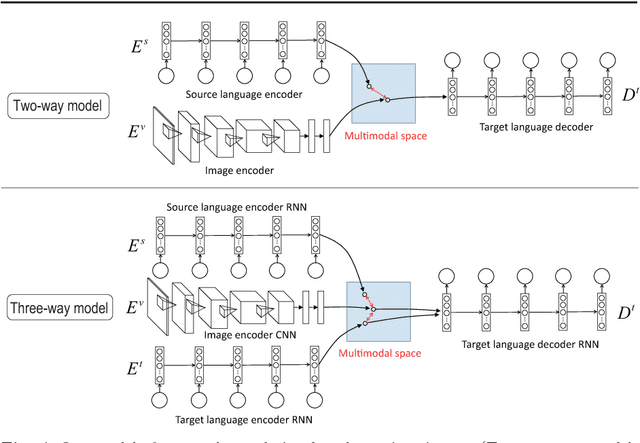

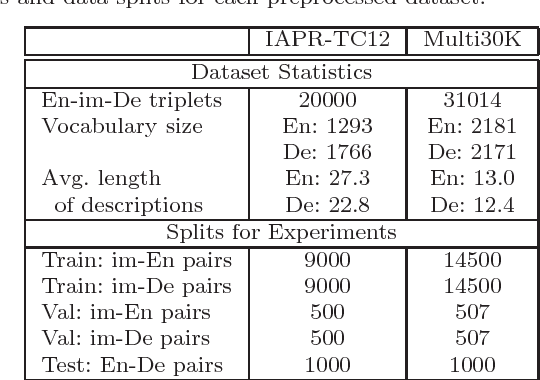

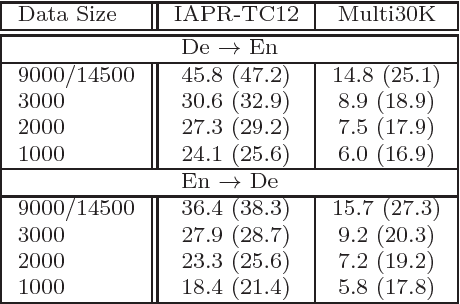

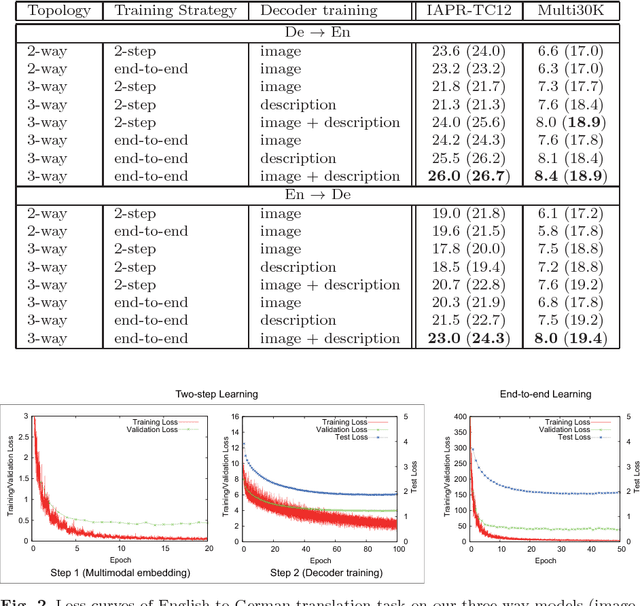

We propose an approach to build a neural machine translation system with no supervised resources (i.e., no parallel corpora) using multimodal embedded representation over texts and images. Based on the assumption that text documents are often likely to be described with other multimedia information (e.g., images) somewhat related to the content, we try to indirectly estimate the relevance between two languages. Using multimedia as the "pivot", we project all modalities into one common hidden space where samples belonging to similar semantic concepts should come close to each other, whatever the observed space of each sample is. This modality-agnostic representation is the key to bridging the gap between different modalities. Putting a decoder on top of it, our network can flexibly draw the outputs from any input modality. Notably, in the testing phase, we need only source language texts as the input for translation. In experiments, we tested our method on two benchmarks to show that it can achieve reasonable translation performance. We compared and investigated several possible implementations and found that an end-to-end model that simultaneously optimized both rank loss in multimodal encoders and cross-entropy loss in decoders performed the best.

Single-Queue Decoding for Neural Machine Translation

Jul 08, 2017 Raphael Shu, Hideki Nakayama

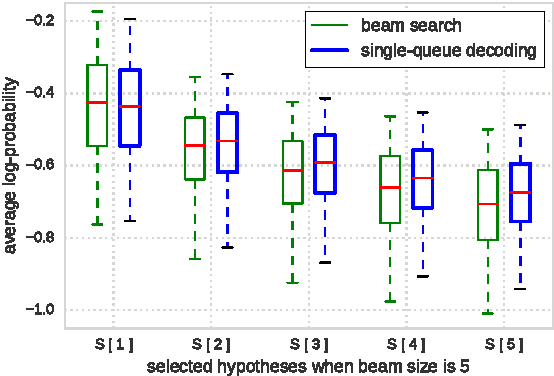

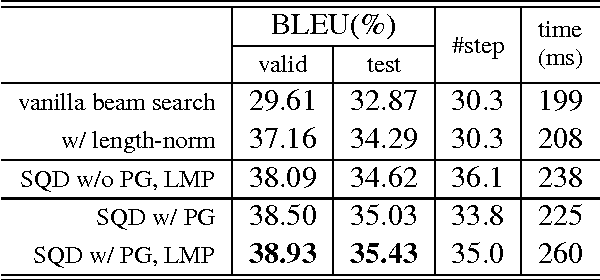

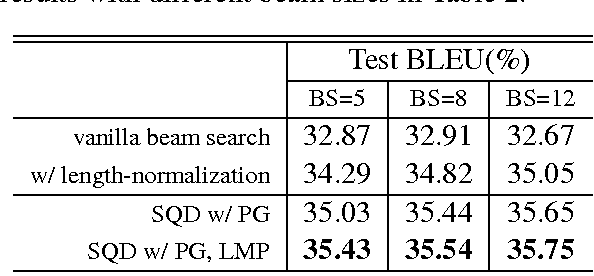

Neural machine translation models rely on the beam search algorithm for decoding. In practice, we found that the quality of hypotheses in the search space is negatively affected by the fixed beam size. To mitigate this problem, we store all hypotheses in a single priority queue and use a universal score function for hypothesis selection. The proposed algorithm is more flexible, as discarded hypotheses can be revisited in a later step. We further design a penalty function to punish hypotheses that tend to produce a final translation that is much longer or shorter than expected. Despite its simplicity, we show that the proposed decoding algorithm is able to select hypotheses of higher quality and improve the translation performance.
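The single-queue idea can be sketched as a best-first search in which hypotheses of every length share one heap. This is an illustrative sketch, not the paper's algorithm: the length-normalized score stands in for the universal score function, the length penalty is omitted, and `toy_expand` is a made-up model used only to make the example runnable.

```python
import heapq
import math


def single_queue_decode(expand, score, max_pops=50, eos="</s>"):
    """Best-first decoding with one priority queue for all hypotheses.

    expand(prefix) -> list of (token, log_prob) continuations.
    score(log_prob, length) -> score comparable across lengths.
    Returns the first finished hypothesis popped, or None.
    """
    # Heap entries: (-score, tie_breaker, prefix, log_prob).
    queue = [(0.0, 0, (), 0.0)]
    counter = 1
    for _ in range(max_pops):
        if not queue:
            break
        _, _, prefix, log_prob = heapq.heappop(queue)
        if prefix and prefix[-1] == eos:
            return prefix, log_prob
        for token, token_log_prob in expand(prefix):
            new_prefix = prefix + (token,)
            new_log_prob = log_prob + token_log_prob
            entry = (-score(new_log_prob, len(new_prefix)), counter,
                     new_prefix, new_log_prob)
            heapq.heappush(queue, entry)
            counter += 1
    return None


def toy_expand(prefix):
    """Hypothetical model: two tokens, forced end after three tokens."""
    if len(prefix) >= 3:
        return [("</s>", 0.0)]
    return [("a", math.log(0.6)), ("b", math.log(0.4))]


def length_normalized(log_prob, length):
    """Stand-in score function: average log-probability per token."""
    return log_prob / length


result = single_queue_decode(toy_expand, length_normalized)
```

Because unexpanded hypotheses stay in the heap rather than being pruned at each step, a shorter prefix that was passed over earlier can still be popped and extended later.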

Later-stage Minimum Bayes-Risk Decoding for Neural Machine Translation

Jun 08, 2017 Raphael Shu, Hideki Nakayama

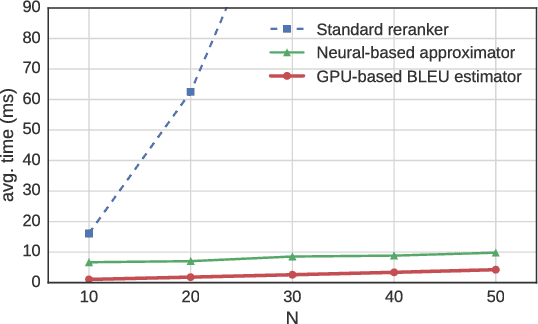

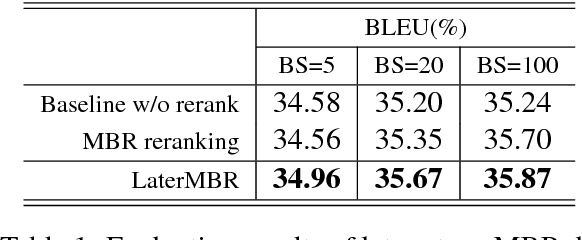

Sequence generation models have long relied on the beam search algorithm to generate output sequences. However, the correctness of beam search degrades when a model is over-confident about a suboptimal prediction. In this paper, we propose to perform minimum Bayes-risk (MBR) decoding for some extra steps at a later stage. To speed up MBR decoding, we compute the Bayes risks on a GPU in batch mode. In our experiments, we found that MBR reranking works with a large beam size. Later-stage MBR decoding is shown to outperform simple MBR reranking in machine translation tasks.
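For readers unfamiliar with MBR, the sketch below shows plain MBR reranking over a fixed candidate list: each candidate's risk is its probability-weighted loss against all candidates, and the lowest-risk one is chosen. It does not implement the later-stage variant or GPU batching described above; the token-overlap loss, the candidate sentences, and their probabilities are invented for the example.

```python
def mbr_select(hypotheses, probs, loss):
    """Return the hypothesis with the lowest expected loss."""
    def risk(candidate):
        return sum(p * loss(candidate, other)
                   for other, p in zip(hypotheses, probs))
    return min(hypotheses, key=risk)


def token_mismatch(a, b):
    """Toy loss: one minus the Jaccard overlap of the token sets."""
    tokens_a, tokens_b = set(a.split()), set(b.split())
    return 1.0 - len(tokens_a & tokens_b) / len(tokens_a | tokens_b)


hyps = ["the cat sat", "the cat sits", "a dog ran"]
probs = [0.35, 0.25, 0.4]
choice = mbr_select(hyps, probs, token_mismatch)
```

In this toy case the most probable candidate ("a dog ran") is not selected, because the two similar candidates support each other and lower each other's risk.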

An Empirical Study of Adequate Vision Span for Attention-Based Neural Machine Translation

Jun 08, 2017 Raphael Shu, Hideki Nakayama

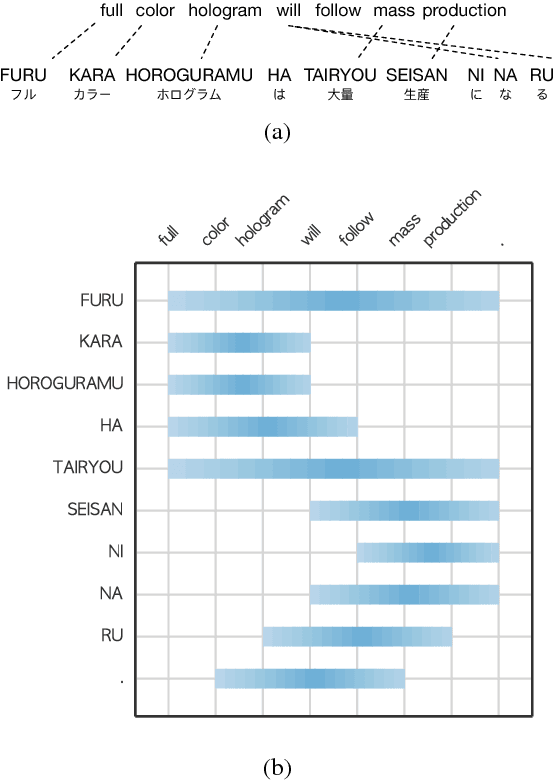

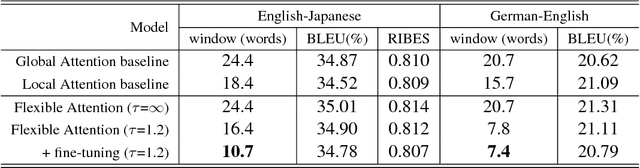

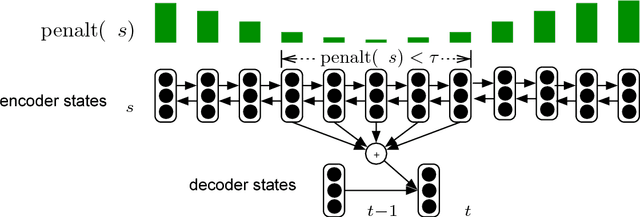

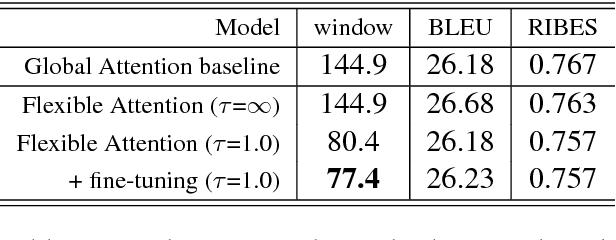

The attention mechanism plays a key role in achieving high performance in neural machine translation models. However, as it computes a score function for the encoder states in all positions at each decoding step, the attention model greatly increases the computational complexity. In this paper, we investigate the adequate vision span of attention models in the context of machine translation by proposing a novel attention framework that is capable of reducing redundant score computation dynamically. The term "vision span" means a window of the encoder states considered by the attention model in one step. In our experiments, we found that the average window size of the vision span can be reduced by over 50% with modest loss in accuracy on English-Japanese and German-English translation tasks. These results indicate that the conventional attention mechanism performs a significant amount of redundant computation.
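A window-limited attention step might look like the sketch below, which evaluates the score function only for encoder positions inside a window and assigns zero weight elsewhere. The fixed `center` and `span` arguments are simplifying assumptions; the framework described above adjusts the span dynamically, and that mechanism is not reproduced here.

```python
import math


def windowed_attention(score_fn, num_positions, center, span):
    """Attention weights over a window of encoder positions.

    score_fn(j) returns the attention score for encoder position j and
    is called only for positions in [center - span, center + span].
    """
    lo = max(0, center - span)
    hi = min(num_positions, center + span + 1)
    scores = [score_fn(j) for j in range(lo, hi)]
    # Softmax over the window, shifted by the max for stability.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [0.0] * num_positions
    for offset, e in enumerate(exps):
        weights[lo + offset] = e / total
    return weights


# Ten encoder positions, window of three centered on position 4.
weights = windowed_attention(lambda j: 0.0, 10, center=4, span=1)
```

With ten positions and a span of one, only three scores are computed instead of ten, which is the kind of saving a smaller vision span provides.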
