Hai Zhao

Department of Computer Science and Engineering, Shanghai Jiao Tong University, Key Laboratory of Shanghai Education Commission for Intelligent Interaction and Cognitive Engineering, Shanghai Jiao Tong University, MoE Key Lab of Artificial Intelligence, AI Institute, Shanghai Jiao Tong University

Enhancing Pre-trained Language Model with Lexical Simplification

Dec 30, 2020

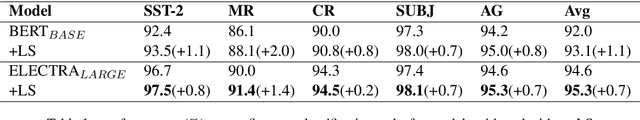

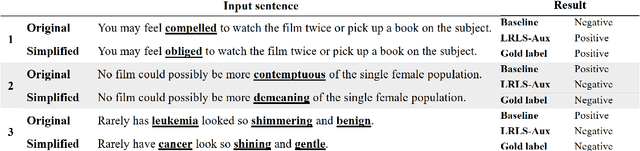

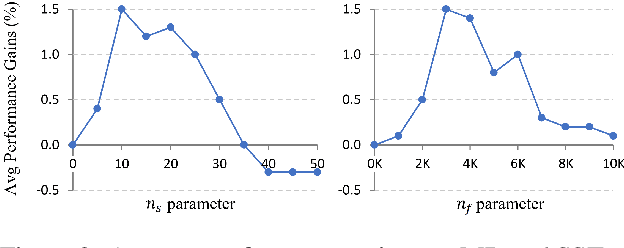

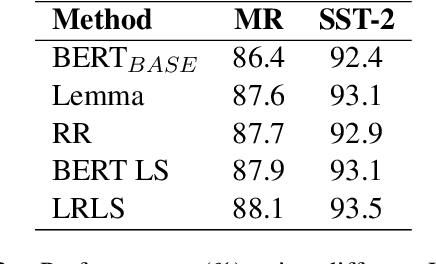

Abstract: For both human readers and pre-trained language models (PrLMs), lexical diversity may lead to confusion and inaccuracy when understanding the underlying semantic meanings of given sentences. By substituting complex words with simple alternatives, lexical simplification (LS) is a recognized method to reduce such lexical diversity and thereby improve the understandability of sentences. In this paper, we leverage LS and propose a novel approach that effectively improves the performance of PrLMs in text classification. A rule-based simplification process is applied to a given sentence, and PrLMs are encouraged to predict the real label of the sentence with auxiliary inputs from the simplified version. Even with strong PrLMs (BERT and ELECTRA) as baselines, our approach further improves performance on various text classification tasks.
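The abstract above does not spell out the simplification rules, so the following is only a minimal sketch of what a rule-based substitution step could look like. The table `SIMPLE_SYNONYMS` and the helpers `simplify` and `build_paired_input` are hypothetical names introduced for illustration, not the authors' implementation; a real system would presumably draw its substitutions from a lexicon or frequency list.

```python
import re

# Hypothetical substitution table (assumption): complex word -> simpler alternative.
SIMPLE_SYNONYMS = {
    "utilize": "use",
    "commence": "start",
    "terminate": "end",
    "purchase": "buy",
}


def simplify(sentence: str) -> str:
    """Replace each known complex word with its simpler alternative."""

    def swap(match: re.Match) -> str:
        word = match.group(0)
        simple = SIMPLE_SYNONYMS.get(word.lower())
        if simple is None:
            return word  # no rule for this word, keep it unchanged
        # Preserve a leading capital letter from the original word.
        return simple.capitalize() if word[0].isupper() else simple

    return re.sub(r"[A-Za-z]+", swap, sentence)


def build_paired_input(sentence: str) -> tuple[str, str]:
    """Return (original, simplified); the second element is the auxiliary input."""
    return sentence, simplify(sentence)
```

A classifier could then receive both strings, for example as a sentence pair, so that the simplified version acts as auxiliary input alongside the original.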

Dialogue Graph Modeling for Conversational Machine Reading

Dec 29, 2020

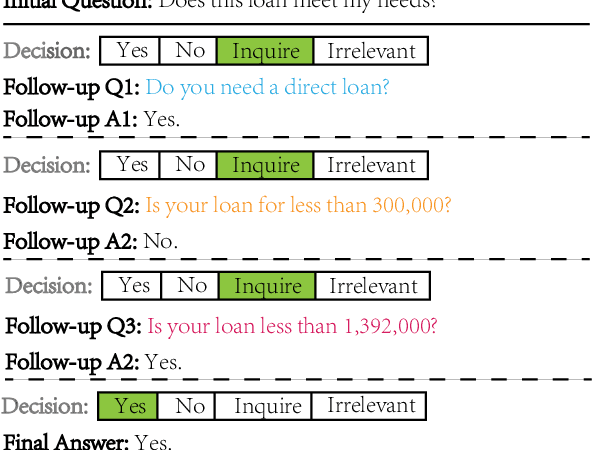

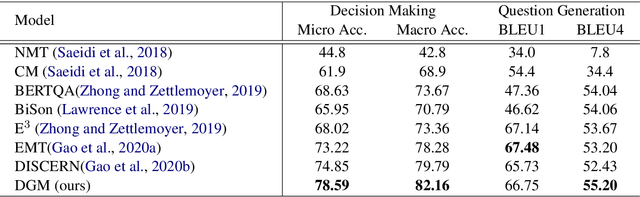

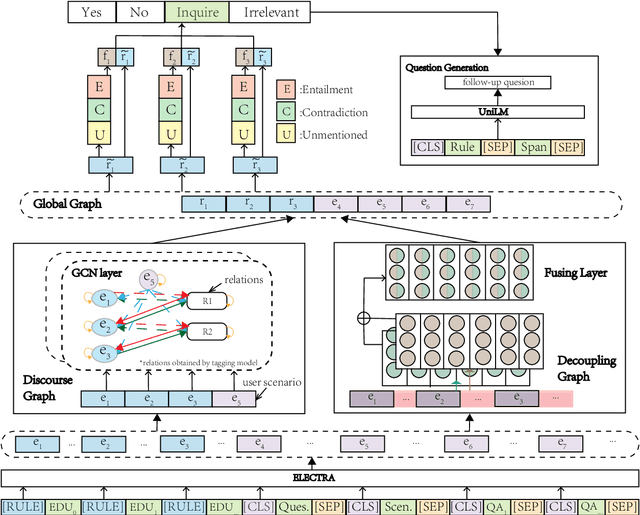

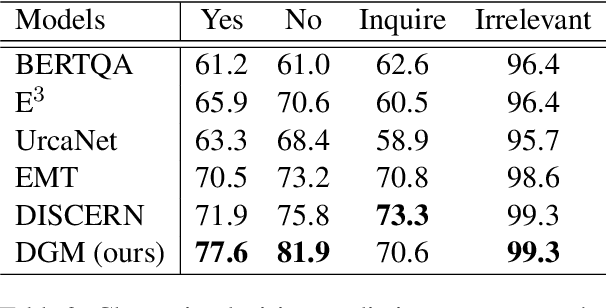

Abstract: Conversational Machine Reading (CMR) aims at answering questions in a complicated manner. The machine needs to answer questions through interactions with users based on a given rule document, user scenario, and dialogue history, and to ask clarifying questions if necessary. In this paper, we propose a dialogue graph modeling framework to improve the understanding and reasoning ability of the machine on the CMR task. There are three types of graph in total. Specifically, the Discourse Graph is designed to explicitly learn and extract the discourse relations among rule texts as well as the extra knowledge of the scenario; the Decoupling Graph is used for understanding local and contextualized connections within rule texts. Finally, a global graph fuses the information together, and the model replies to the user with a final decision that is either "Yes/No/Irrelevant" or a follow-up question for clarification.

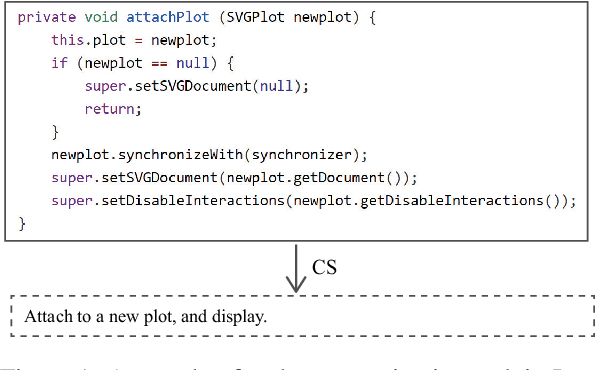

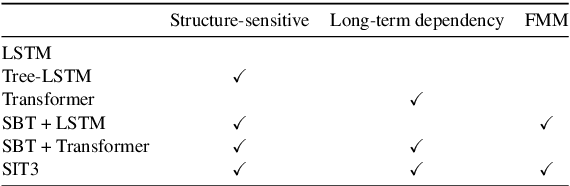

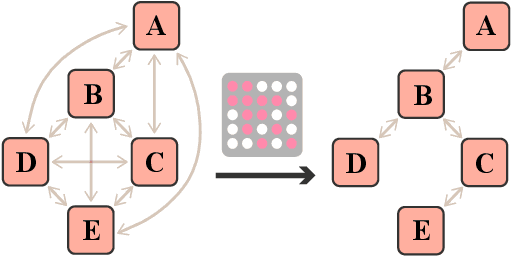

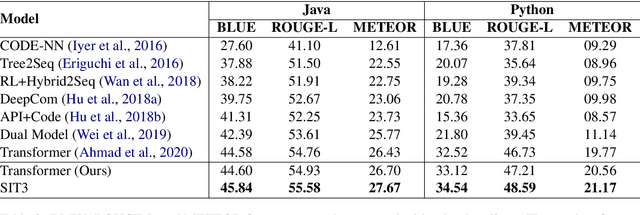

SIT3: Code Summarization with Structure-Induced Transformer

Dec 29, 2020

Abstract: Code summarization (CS) is becoming a promising area in recent natural language understanding. It aims to automatically generate sensible annotations for source code and is known to be programmer-oriented. Previous works attempt to apply structure-based traversal (SBT) or non-sequential models like Tree-LSTM and GNN to learn structural program semantics. These approaches have the following drawbacks: 1) incorporating SBT into Transformer has been shown to be ineffective; 2) GNN is limited in capturing global information; 3) the ability of Transformer alone to capture structural semantics is underestimated. In this paper, we propose a novel model based on structure-induced self-attention, which encodes sequential inputs with highly effective structure modeling. Extensive experiments show that our newly proposed model achieves new state-of-the-art results on popular benchmarks. To the best of our knowledge, it is the first work on code summarization that uses Transformer to model structural information with high efficiency and no extra parameters. We also provide a tutorial on how we pre-process.
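The abstract does not describe how structure enters the self-attention computation. One common way to inject structure without adding parameters is to restrict attention to structurally connected positions, and the sketch below illustrates that idea only. It assumes the structure is given as a token-level adjacency matrix (for instance, derived from a syntax tree); the function name `structure_masked_attention` and that input format are assumptions, not the paper's method.

```python
import math


def structure_masked_attention(q, k, v, adjacency):
    """Scaled dot-product attention restricted by an adjacency matrix.

    q, k, v: lists of n vectors (lists of floats); q and k share dimension d.
    adjacency: n x n matrix (assumption); a truthy entry [i][j] lets token i
    attend to token j. Every token may always attend to itself.
    Returns (outputs, weights).
    """
    n, d = len(q), len(q[0])
    outputs, all_weights = [], []
    for i in range(n):
        # Positions token i may attend to: itself plus its structural neighbours.
        allowed = [j for j in range(n) if j == i or adjacency[i][j]]
        scores = [
            sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d) for j in allowed
        ]
        # Numerically stable softmax over the allowed positions only.
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights = [0.0] * n  # disallowed positions keep zero weight
        for j, e in zip(allowed, exps):
            weights[j] = e / total
        outputs.append(
            [sum(weights[j] * v[j][c] for j in range(n)) for c in range(len(v[0]))]
        )
        all_weights.append(weights)
    return outputs, all_weights
```

Because the mask only zeroes out attention weights, this variant introduces no trainable parameters beyond those of ordinary self-attention.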

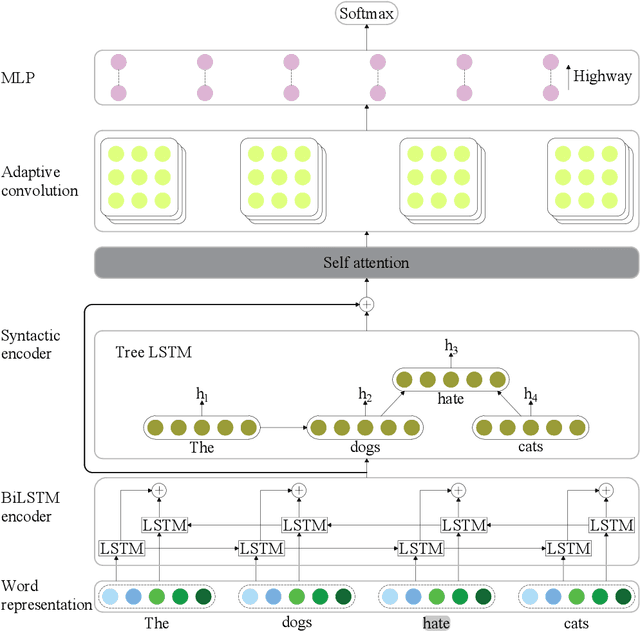

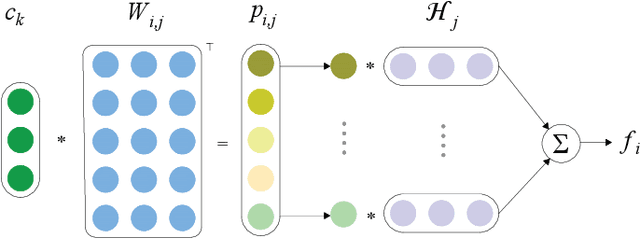

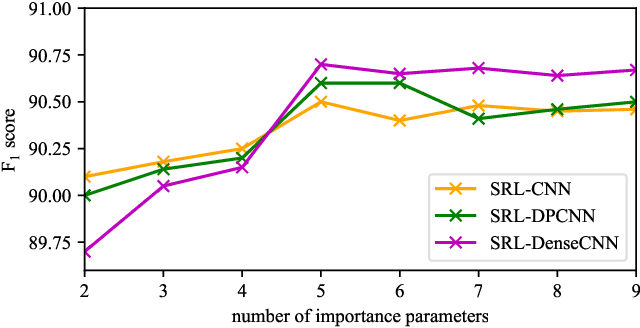

Adaptive Convolution for Semantic Role Labeling

Dec 27, 2020

Abstract: Semantic role labeling (SRL) aims at elaborating the meaning of a sentence by forming a predicate-argument structure. Recent research has shown that the effective use of syntax can improve SRL performance. However, syntax is a complicated linguistic clue and is hard to apply effectively in a downstream task like SRL. This work effectively encodes syntax using adaptive convolution, which endows existing convolutional networks with strong flexibility. Existing CNNs may help in encoding a complicated structure like syntax for SRL, but they still have shortcomings. Contrary to traditional convolutional networks that use the same filters for different inputs, adaptive convolution uses adaptively generated filters conditioned on syntactically informed inputs. We achieve this by integrating a filter generation network that generates input-specific filters. This helps the model focus on important syntactic features present in the input, thus enlarging the gap between syntax-aware and syntax-agnostic SRL systems. We further study a hashing technique to compress the size of the filter generation network for SRL in terms of trainable parameters. Experiments on the CoNLL-2009 dataset confirm that the proposed model substantially outperforms most previous SRL systems for both English and Chinese.

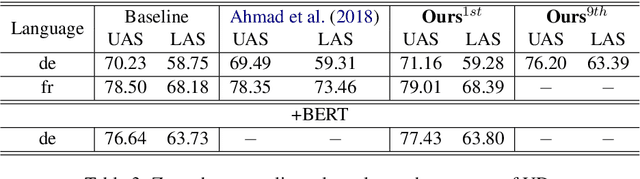

Cross-lingual Dependency Parsing as Domain Adaptation

Dec 24, 2020

Abstract: In natural language processing (NLP), cross-lingual transfer learning is as essential as in-domain learning due to the unavailability of annotated resources for low-resource languages. In this paper, we exploit the ability of pre-training tasks to extract universal features without supervision. We add two pre-training tasks as auxiliary tasks to dependency parsing in a multi-task setup, which improves the performance of the model in both in-domain and cross-lingual settings. Moreover, inspired by the usefulness of self-training in cross-domain learning, we combine traditional self-training with the two pre-training tasks. In this way, we can continuously extract universal features not only from the training corpus but also from extra unannotated data, and gain further improvement.

Reference Knowledgeable Network for Machine Reading Comprehension

Dec 07, 2020

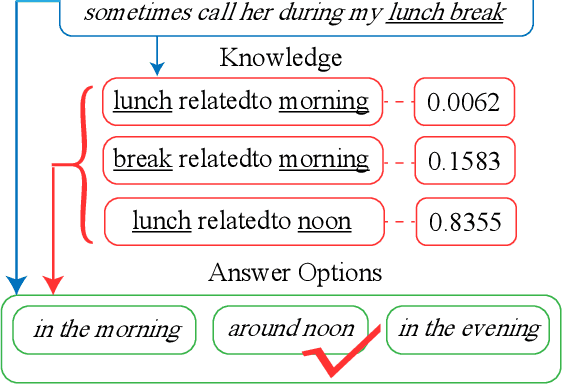

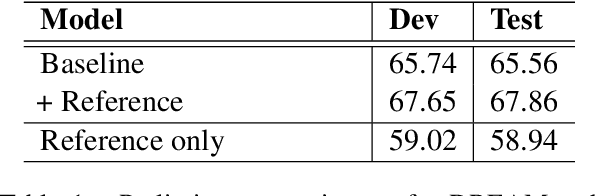

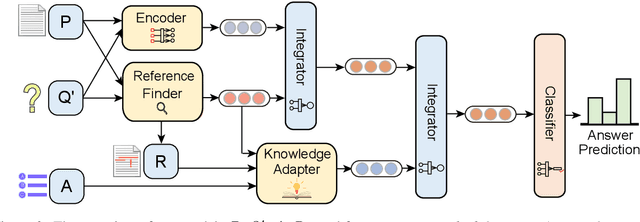

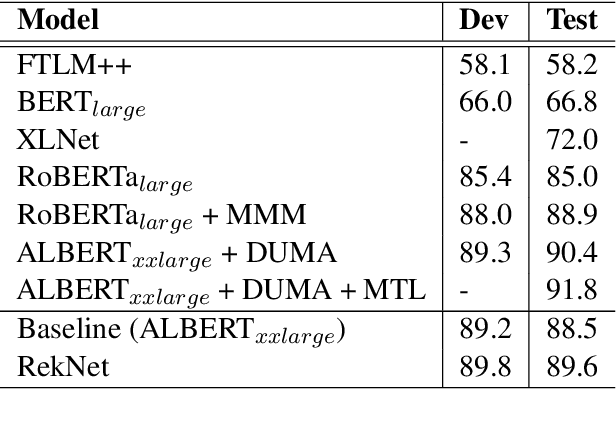

Abstract: Multi-choice Machine Reading Comprehension (MRC) is a major and challenging form of MRC task that requires a model to select the most appropriate answer from a set of candidates given a passage and a question. Most existing research focuses on modeling the task datasets without explicitly referring to external fine-grained commonsense sources, which is a well-known challenge in multi-choice tasks. Thus we propose a novel reference-based knowledge enhancement model based on span extraction called Reference Knowledgeable Network (RekNet), which simulates the human reading strategy to refine critical information from the passage and quote external knowledge when necessary. In detail, RekNet refines fine-grained critical information and defines it as the Reference Span, then quotes external knowledge quadruples using the co-occurrence information of the Reference Span and the answer options. Our proposed method is evaluated on two multi-choice MRC benchmarks, RACE and DREAM, and shows remarkable performance improvement over strong baselines at an observable level of statistical significance.

SJTU-NICT's Supervised and Unsupervised Neural Machine Translation Systems for the WMT20 News Translation Task

Oct 11, 2020

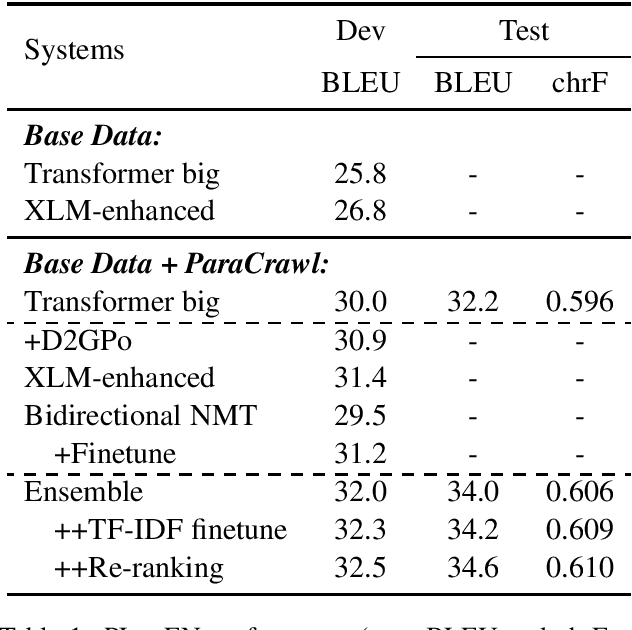

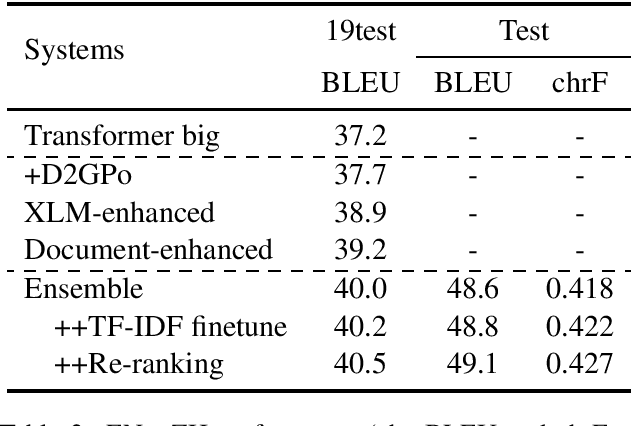

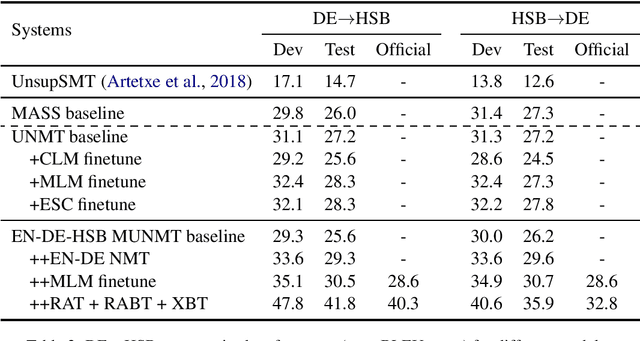

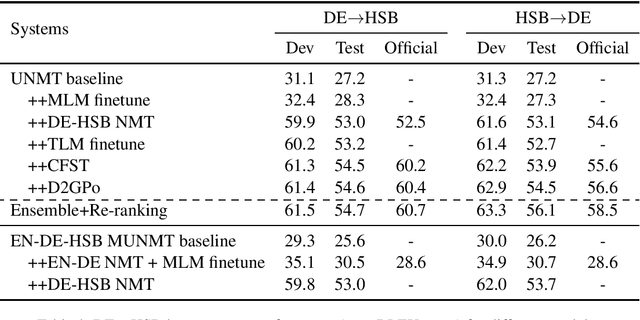

Abstract: In this paper, we introduce our joint team SJTU-NICT's participation in the WMT 2020 machine translation shared task. In this shared task, we participated in four translation directions of three language pairs: English-Chinese and English-Polish on the supervised machine translation track, and German-Upper Sorbian on the low-resource and unsupervised machine translation tracks. Based on the different conditions of the language pairs, we experimented with diverse neural machine translation (NMT) techniques: document-enhanced NMT, XLM pre-trained language model enhanced NMT, bidirectional translation as pre-training, reference language based UNMT, data-dependent Gaussian prior objective, and BT-BLEU collaborative filtering self-training. We also used the TF-IDF algorithm to filter the training set and obtain a subset whose domain is more similar to the test set for finetuning. In our submissions, the primary systems won first place in the English to Chinese, Polish to English, and German to Upper Sorbian translation directions.
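The abstract mentions TF-IDF filtering of the training set but gives no details of the scoring. As a rough sketch under stated assumptions, the example below treats the whole test set as one query document, scores each training sentence by TF-IDF cosine similarity to it, and keeps the top-ranked sentences. The function names, the whitespace tokenization, the smoothed IDF formula, and the `top_k` cutoff are all illustrative choices, not the system's actual configuration.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Build sparse TF-IDF vectors (dicts) for whitespace-tokenized documents."""
    tokenized = [doc.lower().split() for doc in docs]
    doc_freq = Counter(tok for toks in tokenized for tok in set(toks))
    n_docs = len(docs)
    vectors = []
    for toks in tokenized:
        term_freq = Counter(toks)
        vectors.append(
            {
                # Smoothed IDF (assumption): log((1 + N) / (1 + df)) + 1
                t: (c / len(toks)) * (math.log((1 + n_docs) / (1 + doc_freq[t])) + 1)
                for t, c in term_freq.items()
            }
        )
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def select_in_domain(train_sentences, test_sentences, top_k):
    """Keep the top_k training sentences most similar to the test set."""
    # The concatenated test set serves as a single query document.
    vectors = tfidf_vectors(train_sentences + [" ".join(test_sentences)])
    test_vec = vectors[-1]
    ranked = sorted(
        range(len(train_sentences)),
        key=lambda i: cosine(vectors[i], test_vec),
        reverse=True,
    )
    return [train_sentences[i] for i in ranked[:top_k]]
```

The selected subset would then be used for finetuning, while the remaining training data is still available for the main training run.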

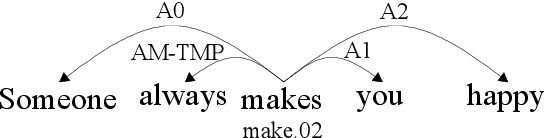

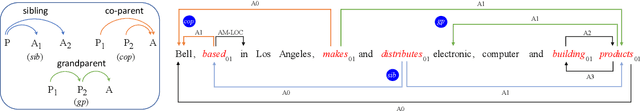

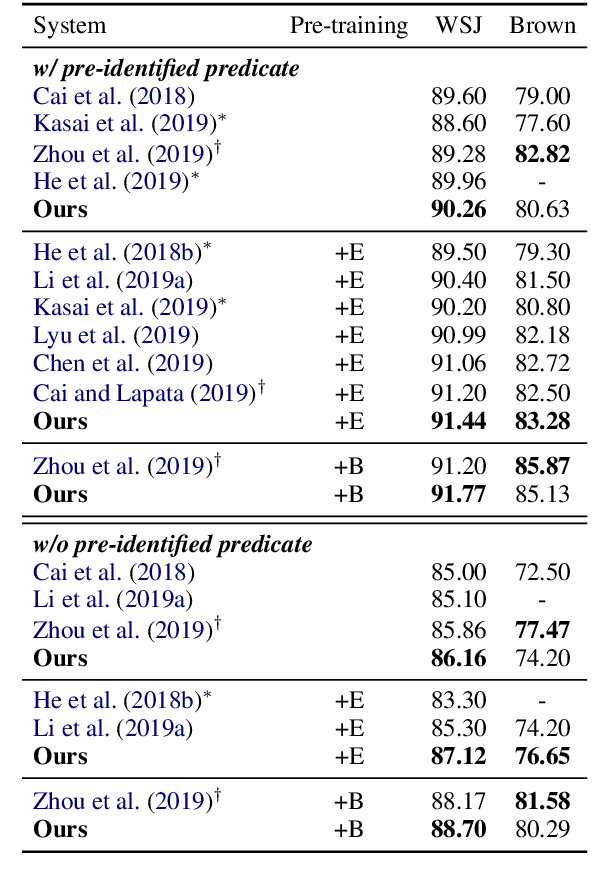

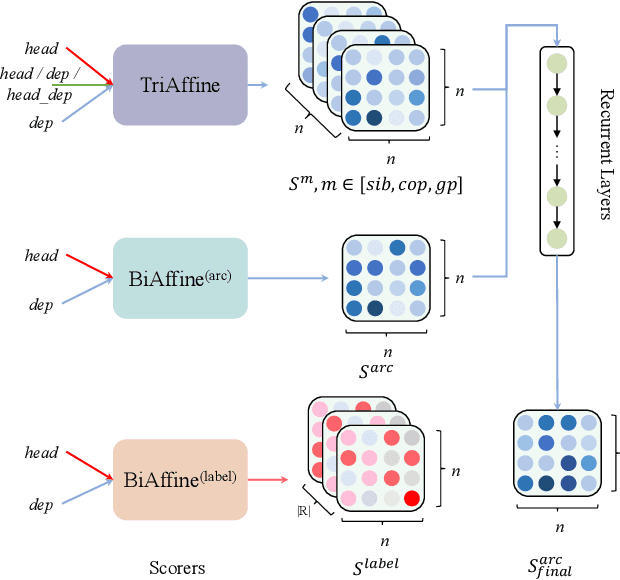

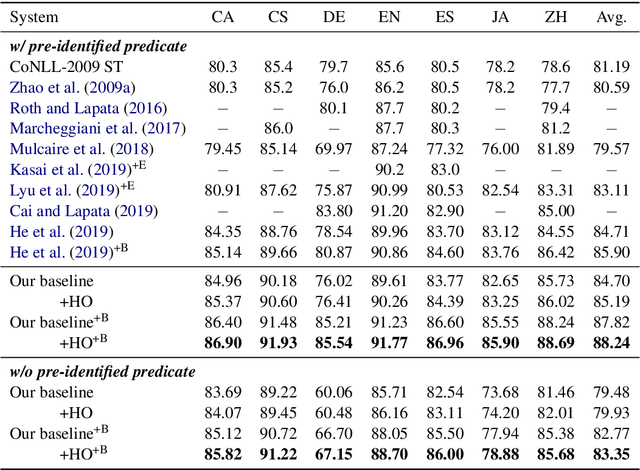

High-order Semantic Role Labeling

Oct 09, 2020

Abstract: Semantic role labeling is primarily used to identify predicates, arguments, and their semantic relationships. Due to the limitations of modeling methods and the condition of pre-identified predicates, previous work has focused at most on the relationships between predicates and arguments and the correlations between arguments, while the correlations between predicates have long been neglected. High-order features and structure learning were very common in modeling such correlations before the neural network era. In this paper, we introduce a high-order graph structure for the neural semantic role labeling model, which enables the model to explicitly consider not only isolated predicate-argument pairs but also the interactions between predicate-argument pairs. Experimental results on 7 languages of the CoNLL-2009 benchmark show that high-order structural learning techniques are beneficial to strong-performing SRL models and further boost our baseline to achieve new state-of-the-art results.

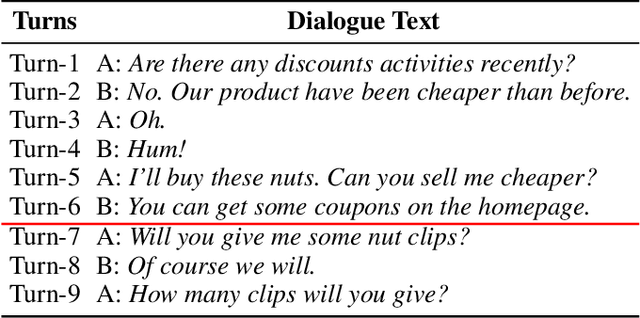

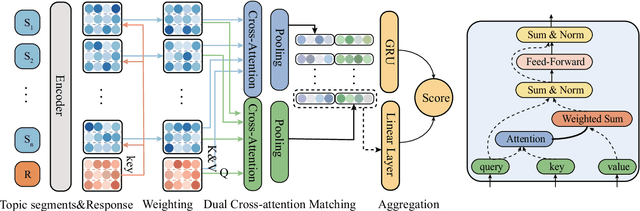

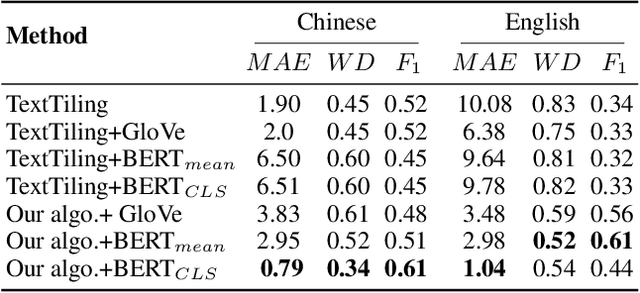

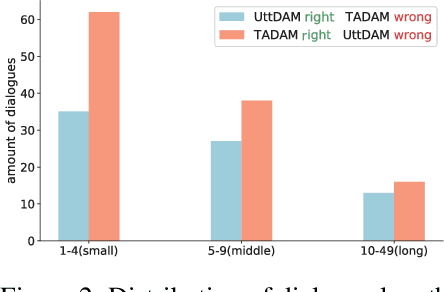

Topic-Aware Multi-turn Dialogue Modeling

Sep 26, 2020

Abstract: In retrieval-based multi-turn dialogue modeling, it remains a challenge to select the most appropriate response by extracting salient features from context utterances. As a conversation goes on, topic shift at the discourse level naturally happens through the continuous multi-turn dialogue context. However, all known retrieval-based systems are satisfied with exploiting local topic words for context utterance representation but fail to capture such essential global topic-aware clues at the discourse level. Instead of taking the topic-agnostic n-gram utterance as the processing unit for matching, as existing systems do, this paper presents a novel topic-aware solution for multi-turn dialogue modeling, which segments and extracts topic-aware utterances in an unsupervised way, so that the resulting model is capable of capturing salient topic shifts at the discourse level when needed and thus effectively tracking topic flow during multi-turn conversation. Our topic-aware modeling is implemented by a newly proposed unsupervised topic-aware segmentation algorithm and the Topic-Aware Dual-attention Matching (TADAM) Network, which matches each topic segment with the response in a dual cross-attention way. Experimental results on three public datasets show that TADAM can outperform the state-of-the-art method by a large margin, notably by 3.4% on the E-commerce dataset, which has an obvious topic shift.

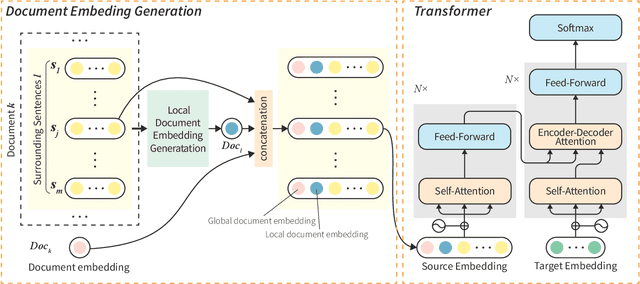

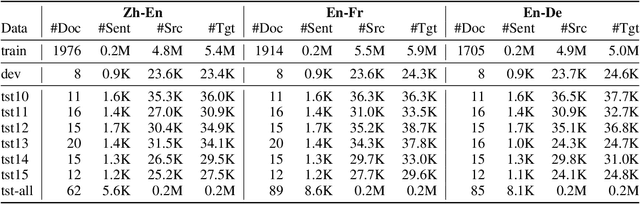

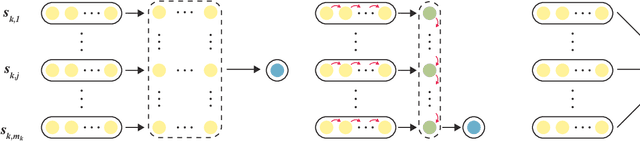

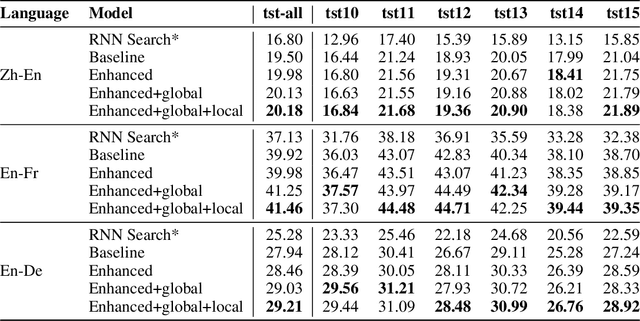

Document-level Neural Machine Translation with Document Embeddings

Sep 16, 2020

Abstract: Standard neural machine translation (NMT) rests on the assumption of document-level context independence. Most existing document-level NMT methods are satisfied with a smattering of brief document-level information, while this work focuses on exploiting detailed document-level context in the form of multiple kinds of document embeddings, which are capable of sufficiently modeling deeper and richer document-level context. The proposed document-aware NMT enhances the Transformer baseline by introducing both global and local document-level clues on the source end. Experiments show that the proposed method significantly improves translation performance over strong baselines and other related studies.
